Liwei Jiang

@liweijianglw

Followers

2K

Following

3K

Media

42

Statuses

530

姜力炜 • Ph.D. student @uwnlp 💻 student researcher @allen_ai 🧊 advancing AI & understanding humans 🏔️ lifetime adventurer

Seattle, WA

Joined February 2020

We hope WildTeaming paves a way towards building more transparent and safer future models. 📍Paper: 📍WildJailbreak Data: 📍WildJailbreak Model (7B): 📍WildJailbreak Model (13B):

Thanks to all my amazing collaborators: @kavel_r⭐ @SeungjuHan3⭐ @AllysonEttinger @faeze_brh @shocheen @niloofar_mire @GXiming @MaartenSap @YejinChoinka @nouhadziri ⭐co-2nd authors. Looking forward to more 🎡wild rides in building a safer future of AI! (10/N)

0

9

42

I was so excited to meet my advisor @YejinChoinka in person for the first time today, as a third year PhD student! How crazy🤪🤣

2

2

194

I’ll be presenting my first NLP paper (fantastic collaboration w/ @ABosselut @_csBhagav @YejinChoinka) on “commonsense negations and contradictions” at #NAACL2021 on June 9th (Wed) 9:00-10:20 PDT in session 12D! You are welcome to join us! 😺 Check out our paper:

1

13

94

Introducing our new paper on defeasible moral reasoning, accepted to EMNLP Findings! In this work, we tackle the important question of what contexts make actions more/less morally acceptable and why. This is joint work co-authored with the amazing @kavel_r (1/n)

1

17

84

I'm in the same boat and I've waited 4 weeks already for my visa renewal (and because of this I'm very likely to have to miss the workshop that I spent 6 months co-organizing at NeurIPS 🫠 which is a real bummer😿).

Can we talk about how inconvenient it has gotten to get a visa for Chinese students? It’s been 3 weeks since mine was “refused”. Is there any reason me, in my 2nd yr Stanford PhD, must wait for up to 180 days to be considered safe to be let in the US?

3

1

62

Yay!!! What a long way we have come! I still remember when we were conceptualizing the project at the beginning of 2022 when InstructGPT was out 🤩 What a journey!

🏆 SODA won the outstanding paper award at #EMNLP2023 ! What an incredible journey that was! I'm grateful for this journey with you @jmhessel @liweijianglw @PeterWestTM @GXiming @YoungjaeYu3 @peizNLP @Ronan_LeBras @malihealikhani @gunheekim @MaartenSap @YejinChoinka & @allen_ai💙

2

1

57

Our new work that makes the impossible possible!

Impossible Distillation: from Low-Quality Model to High-Quality Dataset & Model for Summarization and Paraphrasing. We propose that language models can learn to summarize and paraphrase sentences, with none of these 3 factors. We present Impossible Distillation, a framework that

0

5

52

Exactly 45min after I missed my originally scheduled flight to New Orleans, the embassy issued my US visa 😶🙃🙃🙃🙃🙃😶😶😶😶 It's sad to miss our workshop but I hope everyone who joins us will enjoy it!!! Looking forward to another opportunity to meet up with people!

Waiting anxiously in Singapore, praying that my US visa can finally be issued tomorrow (Wednesday), so that I don't miss my flight early Thursday morning to arrive at NOLA late Thursday afternoon and attend our MP2 workshop happening early Friday morning 😿🫤😱🙏🙏 what a mess 😫

4

0

50

Tomorrow @ZeerakTalat and I will be co-presenting on the topic "Delphi, and Whether Machines Can Learn Morality" at the Big Picture Workshop at #EMNLP23 (1:30-2:30pm)! I'll be sharing my research journey sparked by the Delphi experiences 🤗 Come and hang out!

@liweijianglw and @ZeerakTalat will sit down (or stand?) and reflect on the Delphi experiment: "Delphi, and Whether Machines Can Learn Morality".

1

7

43

✈️to #EMNLP2023 🇸🇬! Super excited about attending my first non-US conference ever🤩(thanks COVID 🤪) I'll be giving a talk (w/ @ZeerakTalat) about *Delphi, and My Sparked Research Journey on AI+Humanity* at the Big Picture Workshop on Dec 7! Looking forward to chatting!

0

1

38

Check out our new work QUARK -- using online, off-policy RL to (UN)learn (UN)desirable behaviors from language models! ⚛️

Quark: Controllable Text Generation with Reinforced Unlearning. abs: We introduce Quantized Reward Konditioning (Quark), an algorithm for optimizing a reward function that quantifies an (un)wanted property, while not straying too far from the original model

1

4

35

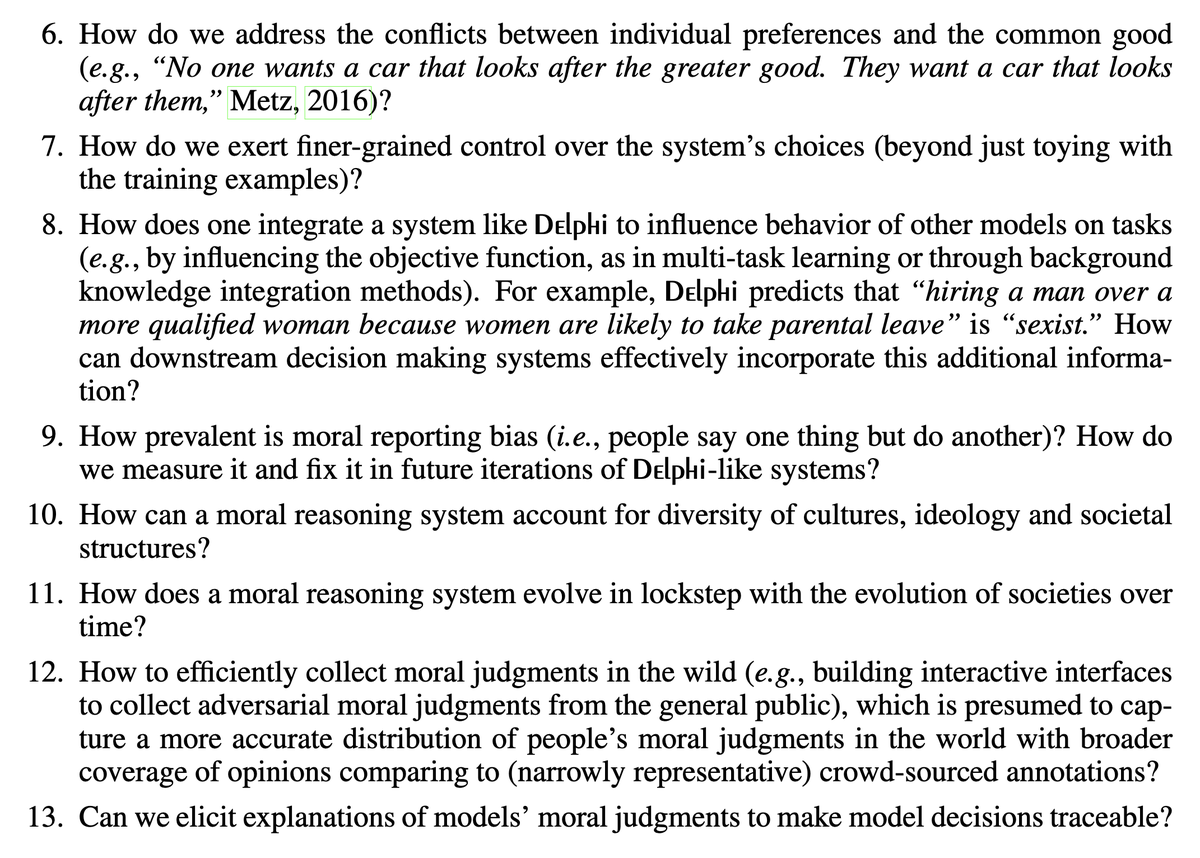

We also present a list of important open questions and avenues for future research in our preprint. We sincerely urge our research community to collectively tackle these research challenges head-on, in an attempt to build ethical, reliable, and inclusive AI systems.

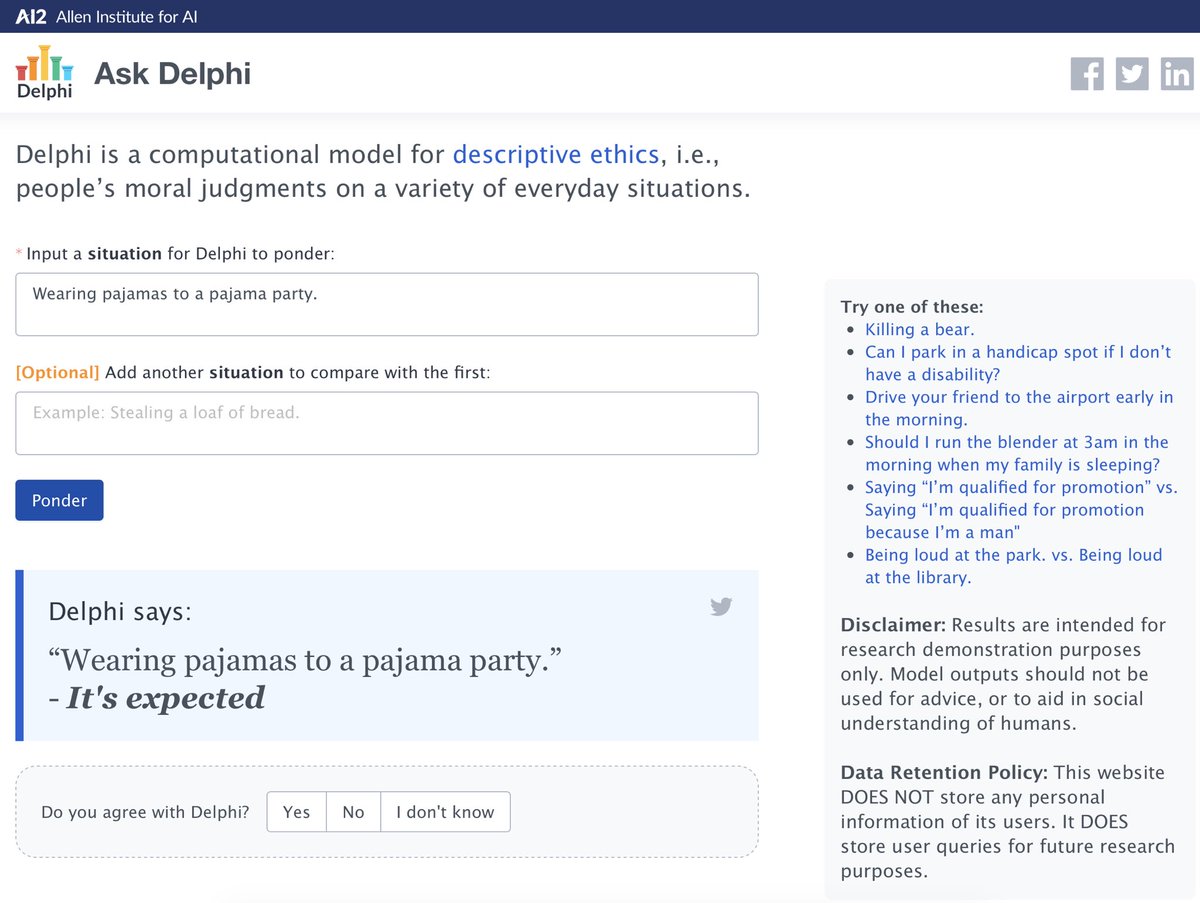

Introducing our new preprint, Delphi: Towards Machine Ethics and Norms. ✨Delphi is a commonsense moral model with robust performance in language-based moral reasoning on complicated everyday situations. ✨Ask Delphi demo at: (1/N)

3

4

34

We present SODA🥤the 1st publicly available MILLION-scale social dialog dataset, distilled from InstructGPT but exceeding human annotations! I still remember the shock of finding that InstructGPT can generate such high-quality dialogs. Now SODA is ready to energize the machine dialog field!

Have you ever wished for a large-scale public dialog dataset with quality? We'd like to tell you that your wish has finally come true🎄 To quench your thirst, we give you SODA🥤, the first MILLION-scale HIGH-quality dataset with RICH social interaction✨

2

3

35

Excited to share our new work⬇️ at #NAACL2022

How can we get language-based reinforcement learning agents to act in more altruistic and less harmful ways towards themselves and others? One way is to constrain their actions with social commonsense. New #NAACL2022 paper on social value alignment 🧵👇

0

3

32

Check out our exciting new work on 🌈Kaleido🌈: modeling pluralistic human values, rights, and duties with LLMs! Another exciting collaboration between AI + philosophy + cog sci!

How well can AI systems model human values? We create a dataset (ValuePrism) and model (Kaleido) to engage AI with human values, rights, and duties in joint CS + Philosophy work w/ @JTasioulas. Paper: Demo: (1/n)

1

5

31

Join us for the 🌟FIRST EVER🌟 ML/AI workshop that brings together moral philosophers and moral psychologists to talk about ways to develop ethically-informed AI! Submit your work by 🧠Sept 29!

Join our interdisciplinary workshop to learn, collaborate, and shape the future of approaches to building ethically-informed AI inspired by moral philosophy and psychology! 🧠

0

7

27

For ppl who don’t know what is happening in China, the following text my friend wrote a few days ago gives context. At the time they wrote this, we had not imagined protests could happen in China. I can’t express how deeply I respect the bravery of the ppl who are fighting against the authorities.

Phenomenal protests are happening in cities & universities in China right now to fight against the ridiculous zero-COVID policy, which ppl have suffered under for 3 yrs & counting. I never imagined this scale of protest would happen in China after the Tiananmen Square protest 33 yrs ago🧵

0

8

22

This is joint work with many amazing collaborators @allen_ai and @uwcse: Jena D. Hwang, @chandra_bhagav, @Ronan_LeBras, @maxforbes, Jon Borchardt, @_jennyliang, @etzioni, @MaartenSap, @YejinChoinka. You are welcome to check out our ✨Ask Delphi✨ demo at:

Introducing our new preprint, Delphi: Towards Machine Ethics and Norms. ✨Delphi is a commonsense moral model with robust performance in language-based moral reasoning on complicated everyday situations. ✨Ask Delphi demo at: (1/N)

0

6

22

🤩Check out our new work on LLMs' inductive capabilities! Surprisingly, LLMs are simultaneously phenomenal rule proposers but puzzling rule appliers!🧠

How good are LMs at inductive reasoning? How are their behaviors similar to/contrasted with those of humans? We study these via iterative hypothesis refinement. We observe that LMs are phenomenal hypothesis proposers, but they also behave as puzzling inductive reasoners: (1/n)

0

1

19

Attaching our amazing speaker lineup! Thrilled to bring together AI researchers, moral philosophers, and moral psychologists in the MP2 workshop!

Join us for the 🌟FIRST EVER🌟 ML/AI workshop that brings together moral philosophers and moral psychologists to talk about ways to develop ethically-informed AI! Submit your work by 🧠Sept 29!

0

3

19

Check out our new work on showing the "impossibility" of what's perceived to be almighty!

Faith and Fate: Limits of Transformers on Compositionality. Transformer large language models (LLMs) have sparked admiration for their exceptional performance on tasks that demand intricate multi-step reasoning. Yet, these models simultaneously show failures on surprisingly

0

3

16

‼️Driving is ok, but not when you are reading a book‼️Check out our new work on NormLens: defeasible social norm reasoning with VISUAL grounding! Now accepted to #emnlp!

#EMNLP2023 introducing our paper on multimodal + commonsense norms! TLDR: Context is key in commonsense norms; what if the context is given by an image? Introducing 🔍NormLens: a vision-language benchmark testing models on visual reasoning about DEFEASIBLE commonsense norms. 🧵

0

0

15

Check out our new work on ☀️ClarifyDelphi☀️ an RL-based interactive clarification question generation system for disambiguating social and moral situations! This is exciting work led by @valentina__py @allen_ai.

⚡️New preprint! 📰 We introduce a question generation approach for disambiguating social and moral situations using RL. With: @liweijianglw, @GXiming, @viveksrikumar, @JenaHwang2, @chandra_bhagav, @YejinChoinka. Preprint:

0

3

14

Check out our new work on making dialog agents more socially responsible!

What should conversational agents do when faced with problematic/toxic input? Most bots either agree or change the subject; we introduce an alternative approach in our #EMNLP22 paper: ProsocialDialog agents that can push back🙅against problematic input! 🧵

0

3

12

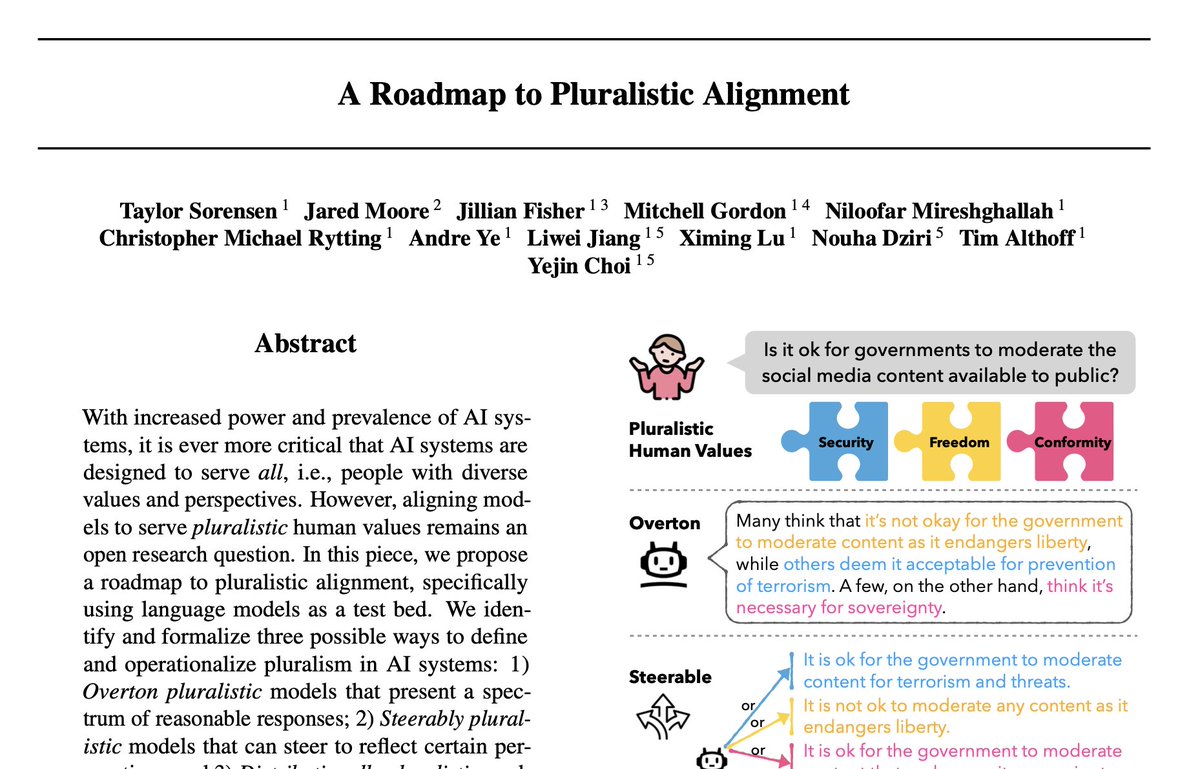

AI models ideally should serve all humans; but what does that mean? Check out our new work on a concrete roadmap for advancing AI for ALL!

🤔How can we align AI systems/LLMs 🤖 to better represent diverse human values and perspectives?💡🌍 We outline a roadmap to pluralistic alignment with concrete definitions for how AI systems and benchmarks can be pluralistic! First, models can be…

0

0

10

Moral judgments are inextricably linked to the context around the action. “Knowing where someone lives” is ambiguous. Adding the context “the purpose is to assist a person in need” makes the action laudable. But adding “for spying” makes the same action negative. (2/n)

Introducing our new paper on defeasible moral reasoning, accepted to EMNLP Findings! In this work, we tackle the important question of what contexts make actions more/less morally acceptable and why. This is joint work co-authored with the amazing @kavel_r (1/n)

1

0

10

Meanwhile, people have initiated #A4Revolution on the street and online. Here’s the context.

For ppl who don’t know what is happening in China, the following text my friend wrote a few days ago gives context. At the time they wrote this, we had not imagined protests could happen in China. I can’t express how deeply I respect the bravery of the ppl who are fighting against the authorities.

0

3

7

???????.

“Asian American students who have earned admission to Harvard are smart, promising, and have no doubt worked very hard. But in ways . they may have also benefited from their racial status long before they applied,” writes sociology Professor @JLeeSoc.

0

0

7

Thanks to all our amazing collaborators: @valentina__py, @gu_yuling, Niket Tandon, @nouhadziri, @faeze_brh, and @YejinChoinka! (8/n). Paper: Dataset:

Our work aims to support a deeper understanding of the intricate interplay of actions & grounded contexts that shape moral acceptability in nuanced & complicated ways. We hope our data helps future work on grounded judgments to unveil this unique angle of human intelligence. (7/n)

0

0

6

Congrats and welcome to Mosaic!!

Update: I'm super excited to join @Berkeley_EECS in July 2023 as an assistant professor! Until then (starting in September), I'll be a Young Investigator at AI2 in Seattle hosted by @ai2_mosaic :) Looking forward to diving more into language use/interaction/computation etc.!

0

0

5

Wow PEARLS lab! Sounds like a lot of intellectual challenges and boba are in store here! Definitely apply to work with Raj!

The PEARLS Lab at @UCSD_CSE is now open for business! I'm recruiting Fall 24 PhD students in all things interactive and grounded AI, RL, and NLP!! Join us in the land of 🏖️ beach (🧋pearl tea included). Apply by Dec 20. Please help spread the word! More:

0

0

5

"All AI systems are not, and should never, be used as moral authorities or sources of advice on ethics."."Our research is a step towards the grand goal of making AI more explicitly inclusive, ethically informed, and socially aware when interacting directly with humans.".

"The fact that AI learns to interact with humans ethically doesn’t make the AI a moral authority over humans, analogously how a human who tries to interact with us ethically doesn’t make them the moral authority over us.".

0

0

4

🦦🦦🦦.

Confession of the day: "doing more tweets like other cool academics do" has been in my new year's resolution for years, but I could never stick to it 😅 Over this one weekend, I might have spent more time on twitter, biting all my nails off, than I ever have collectively #delphi

0

0

4

Congratulations!!!

🎉🎉Super thrilled that our paper on Understanding Dataset Difficulty with V-usable information received an outstanding paper award at #ICML2022!! 🥳Looking forward to the broader applications of this framework. It was a total delight working with my @allen_ai intern, @ethayarajh.

0

0

4

Welcome to the team, Sydney! Looking forward to exploring exciting research on computational moral reasoning with you!

Excited to share that I've started a new job as a Research Scientist @allen_ai! I'll continue studying the cogsci of human moral judgment with a computational lens, but I'll be thinking more about how to use that knowledge to engineer AI systems for the common good.

0

0

3

Congrats @AkariAsai!!!

Congrats @AkariAsai on being a recipient of the IBM Phd Fellowship 2022. Looking forward to working with you (and @HannaHajishirzi 😊) for the next couple of years @IBMResearch @uwnlp. Link:

0

0

3

@VeredShwartz Thanks Vered for your insight!!! I’ll take your advice and go annoy people with the tons of questions I have😜

0

0

3

"The fact that AI learns to interact with humans ethically doesn’t make the AI a moral authority over humans, analogously how a human who tries to interact with us ethically doesn’t make them the moral authority over us.".

Today on the AI2 Blog: An overview of our model Delphi as a nascent step toward machine ethics, a look into the stress testing of this research prototype, the lessons learned, and reflection on why working on imperfect solutions is better than inaction:

0

1

2

"The only way to improve potential harms of current AI systems is to invest in the research that will make them more transparent, unbiased, and robust to social and ethical norms of the societies in which they operate.".

Today on the AI2 Blog: An overview of our model Delphi as a nascent step toward machine ethics, a look into the stress testing of this research prototype, the lessons learned, and reflection on why working on imperfect solutions is better than inaction:

0

0

2

@jeffbigham Thanks for the input :) It’s very cool that confusion seems to be a fuel for curiosity, so that people are constantly confused and constantly learning😀

0

0

2

🌟A reminder that you can still submit to the ✨MP2✨ workshop this week (by Oct 6th)!

📢The submission deadline for the NeurIPS 23 MP2 workshop is extended to ✍️Oct 6th! Remember to submit your work to join us in the first interdisciplinary conversation among philosophers, psychologists, and computer scientists!

0

3

2

@rinireg Thanks Gina! I'm sure there will be another time to meet up in person in the future! Hope you enjoyed the workshop!

0

0

1

"It is our mission to explore ways to teach AI to behave in more inclusive, ethically-informed, and socially-aware manners when working with or interacting with humans.".

Today on the AI2 Blog: An overview of our model Delphi as a nascent step toward machine ethics, a look into the stress testing of this research prototype, the lessons learned, and reflection on why working on imperfect solutions is better than inaction:

0

1

2

@ZhaoMandi I did, at the Singapore embassy 😿 Best of luck with all of our visas, and hopefully they all get approved soon!

0

0

2

@TuhinChakr I was actually bothered by the LR of 11B for quite a while until I realized I needed to set it very low… thanks for sharing this with everyone!!!

1

0

2

You may wonder, as a non-Chinese person, what does this have to do with me? Even so, you may have Chinese friends & colleagues who might currently be going through tough mental ups and downs and even political depression. So it would be awesome to show solidarity with them 🙏🙏🙏

0

0

2

@gu_yuling Oh no! I also got checked at the US Embassy in Singapore😿 Hopefully our visas all get approved soon!

0

0

2

Our work aims to support a deeper understanding of the intricate interplay of actions & grounded contexts that shape moral acceptability in nuanced & complicated ways. We hope our data helps future work on grounded judgments to unveil this unique angle of human intelligence. (7/n)

We find that our final distilled model with 3B parameters outperforms other baselines. In addition, humans rate our model’s contextualizations as high-quality on average 15% more often than GPT-3, the source of seed knowledge for the training pipeline. (6/n)

0

0

2

@yuntiandeng Oh no I'm so sorry Yuntian! I hope your visa comes out soon! FYI it took me ~40 days to eventually receive the visa stamp.

1

0

1

@jerroldsoh Thanks for recognizing the gist of our work and for liking our demo! It means a lot to our team as we continue our efforts in this imperative & critical research area!

0

0

1