Nouha Dziri

@nouhadziri

Followers: 3K · Following: 4K · Media: 101 · Statuses: 838

Research Scientist @allen_ai, PhD in NLP 🤖 UofA. Ex @GoogleDeepMind @MSFTResearch @MilaQuebec 🚨🚨 NEW BLOG about LLMs reasoning: https://t.co/Ox0iOaqY7e

Seattle, US

Joined February 2011

It’s happening now!!! Please stop by.

Faith and Fate paper: Limits of Transformers on Compositionality (Spotlight). 🗓️ Tue 12 Dec, 11:45 am–1:45 pm EST. 📍 Great Hall & Hall B1+B2. 🆔 #421.

Life news💫💫 I’m joining @allen_ai to work with the incredible @YejinChoinka as a postdoc. I have been a huge fan of Yejin for years: her work and talks inspire me hugely, and I have always admired her adventurous research vision. I never thought I would be able.

🚀💫Excited to announce the **AI2 Safety Toolkit**: an open and transparent research initiative dedicated to advancing LLM safety. @allen_ai

🚀🚀 Super happy that my work at @GoogleAI "Evaluating attribution in dialogue systems: the BEGIN benchmark" got accepted at TACL 🥳 This is a work with wonderful collaborators Hannah Rashkin, @davidswelt and @tallinzen. Stay tuned for more details but in short: 👇.

📢Super excited that our workshop "System 2 Reasoning At Scale" was accepted to #NeurIPS24, Vancouver! 🎉 🎯 How can we equip LMs with reasoning, moving beyond just scaling parameters and data? Organized w. @stanfordnlp @MIT @Princeton @allen_ai @uwnlp. 🗓️ When? Dec 15, 2024

Interested in knowing more about LLM agents and in contributing to this topic?🚀 📢We're thrilled to announce REALM: the first Workshop for Research on Agent Language Models 🤖 #ACL2025NLP in Vienna 🎻. We have an exciting lineup of speakers. 🗓️ Submit your work by *March 1st*

And that's a wrap! #NeurIPS2024 was intense, fast-paced, rich, and packed🔥 Super happy with the success of Sys2 Reasoning: a true gathering of the top AI figures who pioneered the field, @Yoshua_Bengio @DBahdanau @jaseweston @fchollet @MelMitchell1 @dawnsongtweets Joshua Tenenbaum👇

Reasoning, search & planning are trending these days, so I'm thrilled to announce our #ICLR2025 Workshop on LLMs Reasoning and Planning to answer your most burning questions with our exciting lineup of speakers🔥 ⏰Submit your work by Feb 2nd. 📄Details:

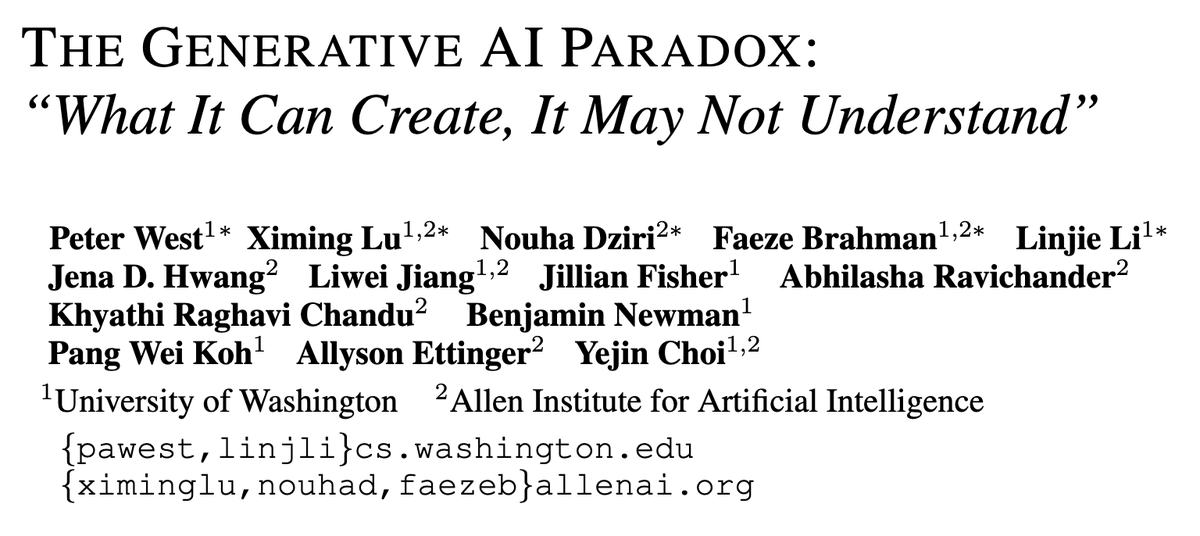

📢 Can generative models understand what they generate? The answer is no ⛔️. Check out our new work: 🔥the Generative AI Paradox🔥. We run extensive experiments investigating *generation* vs. *understanding* in generative models, across both language and image modalities.

Richard Feynman said “What I cannot create, I do not understand”💡. Generative models CAN create, i.e. generate, but do they understand? Our 📣new work📣 finds that the answer might, counterintuitively, be NO🚫 We call this the 💥Generative AI Paradox💥. Paper:

Happy that WildGuard got accepted at #NeurIPS2024 D&B🚀🎉🎉🎉🎉 Make your LLM safer by using our safety toolkit: ⚔️WildGuard: 🦁WildTeaming: 🔧Evaluation suite:

Now, let's attack 🔥🔥WildGuard: Open One-stop Moderation Tools for Safety Risks, Jailbreaks, and Refusals of LLMs 🔥🔥

Made it safely to Punta Cana for #EMNLP2021 and what an amazing view from my room! Excited to meet physically again and chat :) I will also be available virtually. Please reach out to me if you'd like to chat about exciting topics in text generation.

Super honoured to be listed as an "Outstanding Reviewer" for #ACL2021 🥳 I hope everyone receives a fair judgement for the priceless efforts they put into each project.

#NeurIPS2023 is fast approaching😍 and I'm excited to tell you more about "Faith and Fate" (spotlight) on Dec 12. Dive into an overview by reading our blog post: 📢Check out the new grokking results: 👩💻Code:

🚀📢 GPT models have blown our minds with their astonishing capabilities. But, do they truly acquire the ability to perform reasoning tasks that humans find easy to execute? NO⛔️. We investigate the limits of Transformers *empirically* and *theoretically* on compositional tasks🔥

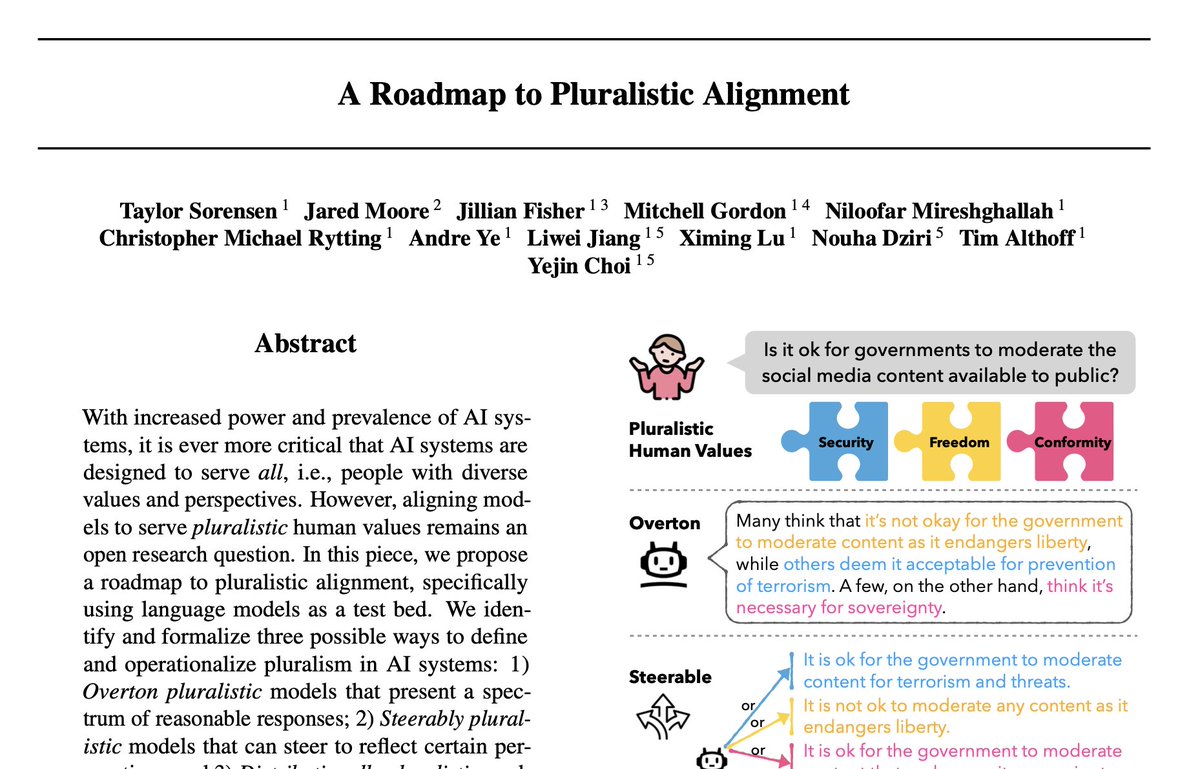

Are *RLHF* and other alignment techniques enough to represent diverse human values? NO🚫. Check out our work, where we propose a roadmap to pluralistic alignment & discuss fundamental limitations of existing methods. w/ @uwnlp @allen_ai @stanfordnlp @MIT.

🤔How can we align AI systems/LLMs 🤖 to better represent diverse human values and perspectives?💡🌍 We outline a roadmap to pluralistic alignment with concrete definitions for how AI systems and benchmarks can be pluralistic! First, models can be…

Super happy to share that our work "Decomposed Mutual Information Estimation for Contrastive Representation Learning" got accepted at #ICML2021 🥳 A huge thanks to my incredible mentors at @MSFTResearch MTL @murefil, @temporaer, Geoff Gordon!!!

I’m very honored to have met Jeff Dean @JeffDean during #WiML2018. I had a fun discussion with you and received a lot of insightful advice for my academic career. Thank you! #NeurIPS2018

📢📢 We are happy to announce that our workshop "Document-Grounded Dialogue and Conversational Question Answering" will be held at @aclmeeting 2023 in Toronto! 🥳 Link:

Finally, BERT is named after me😂😂.

#Algeria's Darja 🇩🇿 now has its own language model: "DziriBERT: a Pre-trained Language Model for the Algerian Dialect". Congratulations @amine_abdaoui @MouhamedBerrimi on your great work!

🎊Excited for #neurips2024 and our "System 2 Reasoning at Scale" workshop. We have an exciting lineup of speakers who will answer your most burning questions about AI and reasoning 🚀 🔥Got spicy questions? Submit & vote here:

It was an honor to give a guest lecture today in the "Generative AI" class taught by @adjiboussodieng at Princeton University. This lecture was a timely opportunity to discuss concerns about dangerous AI. More details below👇

📢 Excited to share our new preprint💥: "Evaluating Groundedness in Dialogue Systems: The BEGIN Benchmark" 🤖 w/ Hannah Rashkin, Tal Linzen (@tallinzen) and David Reitter. [1/6]

Super thrilled that our paper was accepted at the #EMNLP2021 main conference🥳🥳🥳! In it, we reduce hallucination in knowledge-grounded dialogue systems. A big shoutout to my collaborators @bose_joey, @AndreaMadotto and @ozaiane for the great job!!! Stay tuned for the camera-ready!

I won't be at #COLM2024 this year, but check out our poster about ✨LLMs’ perception of world cultures✨. TL;DR: LLMs fall short in capturing the full spectrum of global cultural nuances, leading to biases and an uneven degree of diversity in their generations.

✈️ I won't be at EMNLP this week but will be at #NeurIPS2023 next week. Super excited🥳 to be presenting 3 papers, including 2 spotlights. DM me if you want to chat about the science of LMs, interpretability, and data. Check out details👇

📢Can LMs follow their own proposed rules? No! LMs excel at rule generation but display puzzling inductive reasoning behavior, in sharp contrast to humans. 💫This work was led by my intern @Linlu, who did an excellent job during the summer at Mosaic.💫 📜

How good are LMs at inductive reasoning? How are their behaviors similar to/contrasted with those of humans? We study these via iterative hypothesis refinement. We observe that LMs are phenomenal hypothesis proposers, but they also behave as puzzling inductive reasoners: (1/n)

📢 Excited to share our new work 💥. FaithDial: A Faithful Benchmark for Information-Seeking Dialogue. 📄 🌐 👩💻 Joint work w. @sivareddyg, @PontiEdoardo, @ehsk0, @ozaiane, Mo Yu, Sivan Milton. #NLProc

Super excited to present our paper "Evaluating Coherence in Dialogue Systems using Entailment" at #NAACL2019 with my great collaborators @ehsk0, @korymath and @ozaiane. What a great feeling to head into the weekend with :) @ualbertaScience @AmiiThinks @NAACLHLT.

📢📢 Happy to announce our newest work: Self-Refine. LLMs can now improve without any additional training data, RL, or human intervention! They repeatedly refine their outputs through self-feedback, improving performance on 7 tasks. 📜 Paper:

Can LLMs enhance their own output without human guidance? In some cases, yes! With Self-Refine, LLMs generate feedback on their work, use it to improve the output, and repeat this process. Self-Refine improves GPT-3.5/4 outputs for a wide range of tasks.
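The loop described above (generate, self-critique, refine, repeat) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Self-Refine authors' code: `generate`, `feedback`, and `refine` are hypothetical stand-ins for three prompts sent to an LLM, and the `STOP` token and `max_iters` cap are assumed stopping rules.

```python
from typing import Callable


def self_refine(
    task: str,
    generate: Callable[[str], str],
    feedback: Callable[[str, str], str],
    refine: Callable[[str, str, str], str],
    max_iters: int = 4,
    stop_token: str = "STOP",
) -> str:
    """Iteratively improve an output using the model's own feedback.

    `generate`, `feedback`, and `refine` are hypothetical stand-ins for
    three prompts sent to the same LLM; `stop_token` is an assumed signal.
    """
    output = generate(task)                      # initial draft
    for _ in range(max_iters):                   # bounded number of rounds
        critique = feedback(task, output)        # model critiques its own output
        if stop_token in critique:               # assumed "good enough" signal
            break
        output = refine(task, output, critique)  # rewrite using the critique
    return output


if __name__ == "__main__":
    # Toy callables in place of real LLM calls, for illustration only.
    result = self_refine(
        "greet",
        generate=lambda t: "hello",
        feedback=lambda t, o: "STOP" if o.endswith("!!") else "add emphasis",
        refine=lambda t, o, c: o + "!",
    )
    print(result)  # hello!!
```

Bounding the loop with `max_iters` keeps the sketch from running forever if the feedback never signals completion; a real setup would replace the lambdas with calls to a model.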

Come to the #NeurIPS2024 inference-time scaling tutorial and join us for the panel for insightful and 🌶️🌶️ discussions. ⏰ When? Tue. Dec 10 @ 1:30 PM ET. This is so exciting!

We're incredibly honored to have an amazing group of panelists: @agarwl_ , @polynoamial , @BeidiChen, @nouhadziri, @j_foerst , with @uralik1 moderating. We'll close with a panel discussion about scaling, inference-time strategies, the future of LLMs, and more!

Super excited today for the System 2 Reasoning at Scale workshop, come join us to discover how to equip AI systems with reasoning that's optimized for renewable energy and not fossil fuel 🔥🚀. ⏰When? Today, 9am–5:30pm. 📍West Ballroom B. #NeurIPS2024

Joint work with @GXiming @melaniesclar @xiang_lorraine @liweijianglw @billyuchenlin @PeterWestTM Chandra Bhagavatula @Ronan_LeBras @ssanyal8 @wellecks @xiangrenNLP @AllysonEttinger Zaid Harchaoui @YejinChoinka.

Excited about this talk tomorrow, come join!!.

At tomorrow's NLP Seminar, we are delighted to host Nouha Dziri (@nouhadziri), who will be talking about her work toward building faithful conversational models. Join us over zoom tomorrow at 11 am PT. Registration: Abstract:

🚀🚀Super excited that WildTeaming got accepted at #neurips2024 (main track)🎉🎉🎉🎉🎉 See you in Vancouver to discuss LLM safety, red-teaming, reasoning and more. Also stay tuned for a safety blogpost coming out early next week🚀🚀 #LLMs

We introduce 🦁WildTeaming🦁, an automatic red-team framework to compose human-like adversarial attacks using diverse jailbreak tactics devised by creative and self-motivated users in-the-wild. (2/N)

@lexfridman This is not a conflict, stop white-washing and hiding Israel's war crimes. How can you describe it as a conflict when heavily armed police forces are murdering, bombing unarmed Palestinians?!!.

Come to the inference-time tutorial today🔥 ⏰When? Today at 1:30pm PST. 📍Where? West Exhibition Hall C. #NeurIPS2024

@ThomasMiconi Hi Thomas, the primary focus of Zhou et al. is to explore possibilities for improving the model's performance without necessarily aiming for complete mastery of the task. While they have indeed made enhancements compared to baseline approaches, they did not solve the task on OOD.

🔔New work on measuring the risks of human over-reliance on LLM expressions of uncertainty. The more capable LLMs are, the better they are at deceiving people. We introduce an evaluation framework that measures when humans rely on LLM generations. 👇🧵 w/ @stanfordnlp @allen_ai CMU

How can we best measure the consequences of LLM overconfidence? ✨New preprint✨ on measuring the risks of human over-reliance on LLM expressions of uncertainty: w/ @JenaHwang2 @xiangrenNLP @nouhadziri @jurafsky @MaartenSap @stanfordnlp @allen_ai #NLPproc

We’ve kicked off the workshop! Join us in 📍West Ballroom B, and don’t forget to share your burning questions for the panel here: 🌶️🔥Questions: #NeurIPS2024

Super excited today for the System 2 Reasoning at Scale workshop, come join us to discover how to equip AI systems with reasoning that's optimized for renewable energy and not fossil fuel 🔥🚀. ⏰When? today, 9am-5:30pm .📍West Ballroom B. #NeurIPS2024

⏳⏳⏳Only 3 days left to submit your reasoning work to our "System 2 Reasoning At Scale" workshop. #NeurIPS24. ⏲️🚨🚨🚨Deadline: Sept 23, 2024, AOE.

Excited to be at #ACL2023NLP Toronto in person🇨🇦 I'm presenting 2 papers🥳: 1 TACL poster on Monday, 1 oral on Tuesday. Happy to meet and chat!! Here are some details:

Come to the Language Gamification workshop to learn about multi-agent learning, LLM search, and inference-time algorithms; the talks are really high quality and the speakers are top-notch🔥 ⏰When? Today. 📍Where? West Meeting Room 220-222. #NeurIPS2024

Come to my talk about in-context learning, inference-time algorithms and the limits of Transformer LLMs in reasoning tasks🔥 ⏰When? Today, Dec 14. 📍Where? West Meeting Room 220-222.

Heading to Florence for @ACL2019_Italy 🇮🇹. Excited to meet great researchers and to explore the sheer amount of inspirational NLP papers in different areas. Ping me if you want to chat about dialogue :).

📢Delayed announcement: happy to share that FaithDial has been accepted at TACL 🚀🥳 Please consider using this dataset if you want to avoid hallucination in your response generation. Paper: Dataset: Code:

Congratulations @ai2_mosaic🥳🚀🔥🔥 1 best paper award and 3 outstanding papers at #ACL2023NLP. Congrats to all the authors!!! @jmhessel @YejinChoinka @MaartenSap @melaniesclar @xiang @PeterWestTM @alsuhr @jenahwan et al.!!

Congratulations to the team, including AI2ers, who worked on "Do Androids Laugh at Electric Sheep? Humor 'Understanding' Benchmarks from The New Yorker Caption Contest" — selected for a Best Paper Award at #ACL2023!

📢 Excited to share our new work *Neural Path Hunter* (NPH) #EMNLP2021. Paper: NPH focuses on enforcing faithfulness in KG-grounded dialogue systems by refining hallucinations via queries over a k-hop subgraph [1/6]. w/ @bose_joey @AndreaMadotto @ozaiane

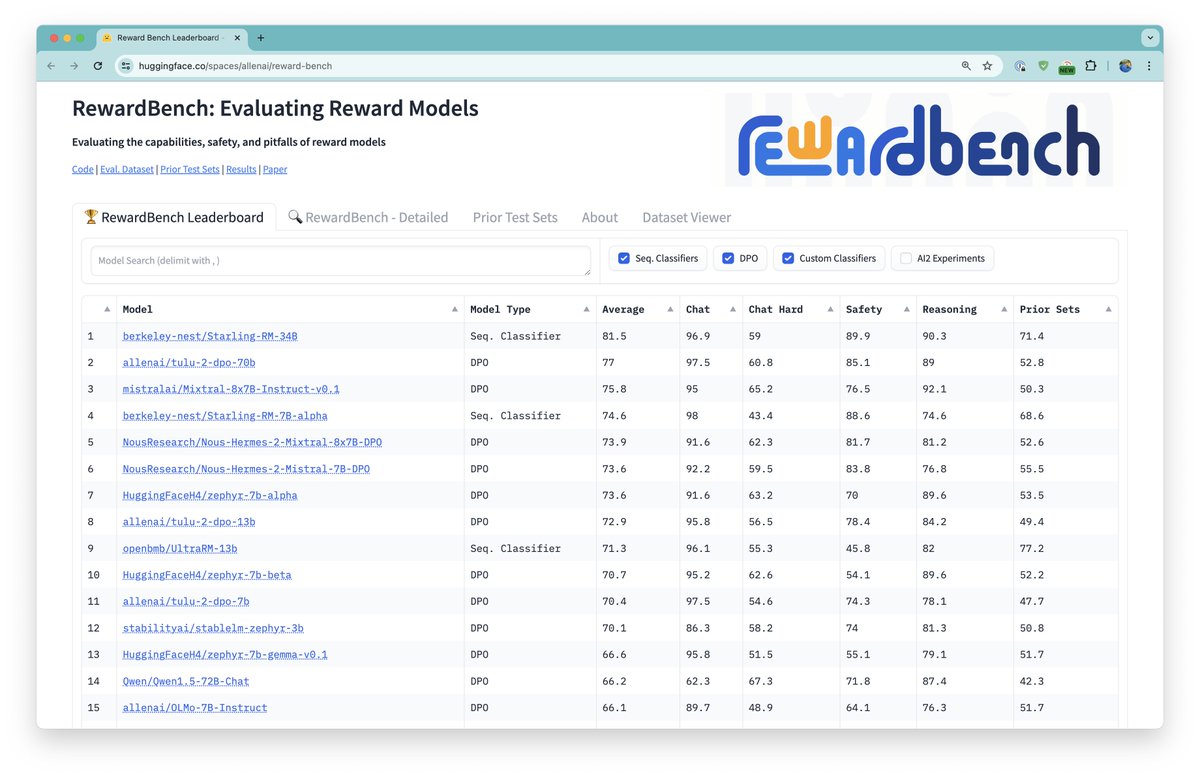

Reward models are the essence of success in RLHF, yet there has been little focus on evaluating them 😬 We introduce RewardBench💥, the first benchmark for reward models. We evaluated 30+ of the existing RMs (w/ DPO) and created new datasets. Discover lots of insightful analyses👇

Excited to share something that we've needed since the early open RLHF days: RewardBench, the first benchmark for reward models. 1. We evaluated 30+ of the currently available RMs (w/ DPO too). 2. We created new datasets covering chat, safety, code, math, etc. We learned a lot.

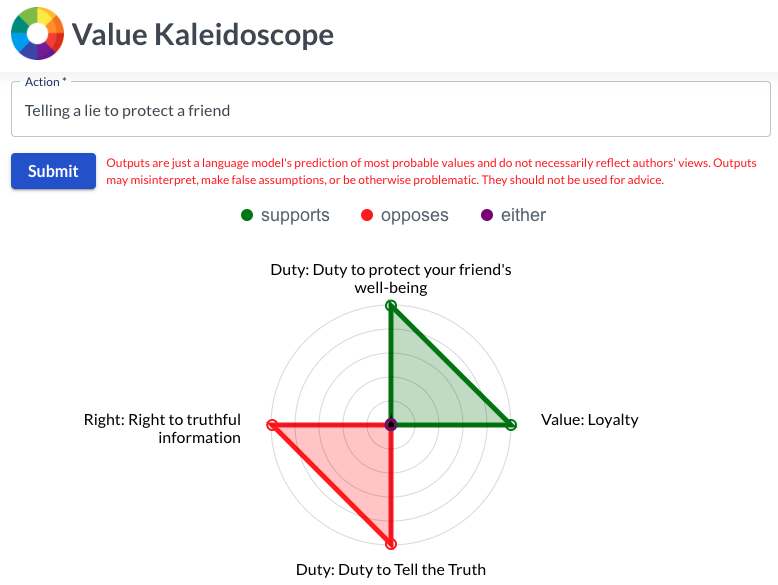

📢🔥New work: We introduce a dataset (*ValuePrism*) and model (*Kaleido*) to engage AI with human values, rights, and duties in joint CS + Philosophy work with many wonderful people. Paper: Demo:

Human values are crucial to human decision-making. We may consider health or environmental responsibility when deciding to bike to work, or weigh loyalty against honesty when deciding if we should lie to protect a friend. (0/n)

Super excited to be in Vienna for #ICLR2024 next week 🎻🎵🎹😍 I will be presenting 1 oral and 2 posters. Please DM me to talk about these works and also *SAFETY* in LMs. Details👇

🚀🚀 Super happy that our work got accepted at the #ACL2023NLP main conference. TL;DR: we highlight the pitfalls (rigidity of lexical matching) and brittleness (inability to spot hallucination) of existing evaluation mechanisms in information-seeking QA.🔥🔥 See you all in Toronto🥳

🔥🔥 Our work "Evaluating Open-Domain Question Answering in the Era of Large Language Models" w/ @nouhadziri @claclarke @DavoodRafiei got accepted to the #ACL2023NLP main conference. Stay tuned 🎸 for more details!. #ACL2023 #ACL2023Toronto #NLProc.

Come to hear about WildGuard🔥. 📍East Exhibit Hall A-C #4208

Presenting the safety toolkit at the main conference with the amazing team: * WildGuard: ⏰12 Dec, 4:30–7:30 PM * WildTeaming: ⏰13 Dec, 4:30–7:30 PM. Use our tools: ⚔️Moderation Tools: 🦁 Red-teaming: 🔎Evaluation: 👇

End of a wonderful experience at @MSFTResearch Montreal with a super talented and amazing team @shikhar_warlock @murefil @temporaer and Geoff Gordon!! Looking forward to the next steps and to the surprises the new year is bringing! Happy holidays everyone🎉

@natolambert @hamishivi This aligns with our recent work "Fine-Grained RLHF", led by the great @zeqiuwu1, which will be presented at #NeurIPS2023: a framework that enables training and learning from different reward functions (factual incorrectness, irrelevance, toxicity, etc.).

📢 Excited to share our paper *DEMI* #ICML2021. Paper: DEMI aims to decompose a hard estimation problem into smaller subproblems that can potentially be solved with less bias. w/ @murefil, @temporaer, @RemiTachet, @philip_bachman, Geoff Gordon [1/n]

Come to my talk about in-context learning, inference-time algorithms and the limits of Transformer LLMs in reasoning tasks🔥 ⏰When? Today, Dec 14. 📍Where? West Meeting Room 220-222.

Talk 1: In-context Learning in LLMs: Potential and Limits. 🎤 @nouhadziri .🕕 08:30-09:10. 🧵2/8.#NeurIPS2024

✈️Heading to San Francisco to give a talk about evaluating open-ended dialogue systems🤖 at the #RasaDevSummit. Check out the exciting agenda and the awesome speakers here: @Rasa_HQ

@SashaMTL @emnlpmeeting People from the Middle East face all sorts of discrimination and racism. They're even banned from entering the US, and if they do receive a study visa, they won't be able to leave the country or see their families. Why does no one speak about discrimination against them?

I'm deeply saddened to know that Canadian universities are banning international travel to conferences. Knowing that #EMNLP2021 will be in-person this year was what kept us (me and many friends) motivated to do research more enthusiastically.

Had so much fun today presenting a talk about dialogue evaluation at @DeepMindAI Montreal :) Thanks @korymath for being such a great host !!!.

@edmontonpolice We're having a nightmare in downtown. You MUST stop them from honking and disturbing our lives. We have work to do and we can't afford another chaotic Saturday.

📢⚠️ We're offering a few free NeurIPS tickets for students who have papers accepted and lack the financial resources to attend. Please fill out this form asap:

⚠️Attention NeurIPS attendees (and especially students with papers accepted at the System-2 Reasoning workshop!). We have a few free NeurIPS tickets available! If you're an enrolled student and would like to be considered, please fill out this form:

@ThomasMiconi Our findings reveal that accomplishing this is not a simple task, and we offer insights into why reaching full mastery is inherently challenging.

Come learn about Self-Refine, happening now. ID: 405. Hall B1 & B2

@ThomasMiconi In contrast, our work delves into investigating the fundamental limits of achieving full mastery of the task. We seek to examine whether we could achieve 100% performance in both in-domain and OOD settings by pushing transformers to their limits.

Why do LMs perform better when we "motivate" them or ask them "nicely"? 🤔 Could this and certain prompting tricks lead to bypassing safety guards? YES ✅ Discover some hypotheses in this piece:

Prompts heavily influence model outputs — and can bypass safety measures to trigger harmful results. @nouhadziri spoke with @Kyle_L_Wiggers about chatbot prompts for @TechCrunch:.

Super excited to be attending #NAACL2022 in person. Please reach out if you're interested in conversational AI and trustworthiness. I will also be presenting a poster on this topic on Monday from 2:30pm to 4pm (PDT) at Regency A & B.

We recently showed that existing knowledge-grounded dialogue benchmarks (e.g., Wizard of Wikipedia; WoW) suffer from hallucination at an alarming level (>60% of responses). We perform a comprehensive linguistic analysis in our new #NAACL22 work. 📄

Multimodal Olmo is out, fully open!! Congrats to the team🎉
- Beating giant models, e.g., Claude 3.5 Sonnet
- The scaling era is somewhat stagnating
- High-quality data + alignment algorithms + test-time decoding are the secret sauce for a powerful model

Meet Molmo: a family of open, state-of-the-art multimodal AI models. Our best model outperforms proprietary systems, using 1000x less data. Molmo doesn't just understand multimodal data—it acts on it, enabling rich interactions in both the physical and virtual worlds. Try it

Happening now at Frontenac Ballroom (Board 2) #ACL2023NLP

📢 Excited to share our new work 💥. FaithDial: A Faithful Benchmark for Information-Seeking Dialogue. 📄 🌐 👩💻 joint work w. @sivareddyg, @PontiEdoardo, @ehsk0, @ozaiane, Mo Yu, Sivan Milton.#NLProc.

Come listen to @linluqiu talking about the puzzling behavior of LLMs in inductive reasoning and iterative hypothesis refinement. Hall A8-9 #ICLR2024 🔥

Phenomenal Yet Puzzling: Testing Inductive Reasoning Capabilities of Language Models with Hypothesis Refinement (Oral). 🗓️ (Talk) May 10 a.m. CEST – 10:45 a.m. CEST [Oral 3A]. 🗓️ (Poster) Wed 8 May, 10:45 a.m. CEST – 12:45 p.m. CEST. 📍Halle B.

🚀New work🔥CREATIVITY Index🔥 .This work is SO close to my heart, I loved every part of the experiments. It provided me with much scientific fulfillment. Intellectual works have become so rare in the hysterical race of AI, so if you care about science give this work a read🧵

Are LLMs 🤖 as creative as humans 👩🎓? Not quite!. Introducing CREATIVITY INDEX: a metric that quantifies the linguistic creativity of a text by reconstructing it from existing text snippets on the web. Spoiler: professional human writers like Hemingway are still far more creative

#emnlp2022 here I come!!! A verryyy long flight ✈️✈️, but excited to see many people and chat!!.

📢🚀🚀🥳 Excited to share our new work led by the fantastic @ndaheim_: Elastic Weight Removal for Faithful and Abstractive Dialogue Generation. 📄 👩💻 Joint work w. @PontiEdoardo, @IGurevych @mrinmayasachan. #NLProc

Large language models often generate hallucinated responses. We introduce Elastic Weight Removal (EWR), a novel method for faithful *and* abstractive dialogue. 📃💻+other methods!.🧑🔬@ndaheim_ @nouhadziri @IGurevych @mrinmayasachan.

@LucianaBenotti The remote experience at #acl2022nlp was terrible. As someone attending from North America, I could barely attend anything live. Tutorials, workshops, and some keynote talks were not uploaded to Underline, and they still are not. Organizers don't reply.

#NeurIPS2024 remains my favorite venue, excited to be there next week🥳 Reach out to chat! I will:
* Speak at the panel of the Inference-Time Algorithms tutorial ⏰Dec 10
* Give a talk at the Language Gamification workshop: in-context learning / scaling inference ⏰Dec 14
More👇

Faith and Fate paper: Limits of Transformers on Compositionality (Spotlight). 🗓️ Tue 12 Dec, 11:45 am–1:45 pm EST. 📍 Great Hall & Hall B1+B2. 🆔 #421.

Very cool work! This is again a reminder that scoring high on a math benchmark does not necessarily mean that LMs can truly "reason" or "think". It validates the "Faith and Fate" observations for skeptics🙂 You may think of pattern-matching as one type of reasoning, which can indeed👇

1/ Can Large Language Models (LLMs) truly reason? Or are they just sophisticated pattern matchers? In our latest preprint, we explore this key question through a large-scale study of both open-source like Llama, Phi, Gemma, and Mistral and leading closed models, including the

Pioneers of #DeepLearning and #ReinforcementLearning Yoshua Bengio, Richard Sutton and Geoffrey Hinton together!! What a GREAT panel talk! #DLRL @VectorInst @AmiiThinks @MILAMontreal

#NeurIPS2018 has launched! Starting off by attending #GoogleAI talk about Machine Learning fairness.

A huge shoutout to my awesome supervisor @ozaiane, to my wonderful mentor and examiner @alonamarie, to the gentle Angel Chang, and to my external committee Jackie Cheung and Colin Cherry!.

Congrats to all the authors 🥳🔥another outstanding paper from @ai2_mosaic @allen_ai.

🏆 SODA won the outstanding paper award at #EMNLP2023 ! What an incredible journey that was! I'm grateful for this journey with you @jmhessel @liweijianglw @PeterWestTM @GXiming @YoungjaeYu3 @peizNLP @Ronan_LeBras @malihealikhani @gunheekim @MaartenSap @YejinChoinka & @allen_ai💙
