Bipasha Sen

@bipashasen31

Followers

2K

Following

909

Media

35

Statuses

256

🚀 Introducing Frank 🤖, a whole-body robot control system for day-to-day household chores. Frank has been in the works for the past year: tightly coupled hardware and a remote teleoperation interface, co-led by @michl_wang, @nst372, and @pulkitology. Thanks to @skymanaditya1 and

8

34

244

I'll be starting my Ph.D. at @MIT_CSAIL advised by @pulkitology! I was fortunate to receive offers from amazing labs and I wished - many times - to clone myself and join each of them -- I wouldn't be here if not for my advisors, Prof. C V Jawahar, @vinaypn, and Madhav Krishna!

30

10

595

My personal highlight of #ICRA2023! ICRA was grand in every aspect, be it the exhibitions showcasing state-of-the-art robots, the amazing posters, or the mind-blowing keynotes and plenary talks. And interestingly, this is still just the beginning for robotics!

3

14

95

Excited to share "HyP-NeRF", recently accepted to #NeurIPS2023! HyP-NeRF doesn't do just one thing -- it does many things!! It can generate NeRFs (directly the parameters!) from text, single-view, and multi-view (occluded/non-occluded) images! Page: 📃

1

13

74

Our technical report is here: 🔊🔊 SOUND ON!! Here's a clip of you coming back home to 🤖 Frank 🤖 setting up your dinner table 🫘😋🍲🥘🍢

🚀 Introducing Frank 🤖, a whole-body robot control system for day-to-day household chores. Frank has been in the works for the past year: tightly coupled hardware and a remote teleoperation interface, co-led by @michl_wang, @nst372, and @pulkitology. Thanks to @skymanaditya1 and

0

7

66

I'm presenting HyP-NeRF at #NeurIPS2023 at booth 438 now, along with @melonmusk42 and @skymanaditya1! Come check us out!

Excited to share "HyP-NeRF", recently accepted to #NeurIPS2023! HyP-NeRF doesn't do just one thing -- it does many things!! It can generate NeRFs (directly the parameters!) from text, single-view, and multi-view (occluded/non-occluded) images! Page: 📃

0

3

53

Had such a great last few weeks at @UMontreal / @Mila_Quebec, it feels really sad to leave! I not only got to work on really cool real-world robotic setups, but also made so many memories! Montreal especially is unlike any other city I've lived in -- Au revoir! 👾🤖

2

1

41

We presented our paper "SCARP: Shape Completion in ARbitrary Poses for Improved Grasping" yesterday at #ICRA2023, along with @skymanaditya1 and @melonmusk42, at @ieee_ras_icra

0

4

37

I will be presenting EDMP at the #CoRL2023 Pretraining for Robot Learning Workshop!

📢📢 Introducing EDMP - Ensemble-of-costs-guidance diffusion for motion planning. EDMP combines the strengths of deep learning and classical planning to solve complex plans DIRECTLY AT INFERENCE without ANY specialized training!! Webpage: 🧵

1

3

35

Check out our #IROS2024 work on diffusion-based Constrained 6-DoF Grasp Generation, led by @melonmusk42!

📢 Excited to share that our paper CGDF has been accepted to #IROS2024 🎉 📖 Title: Constrained 6-DoF Grasp Generation on Complex Shapes for Improved Dual-Arm Manipulation. ✨ CGDF is a grasp diffusion model that generates targeted grasps on object parts efficiently. 🚀 🧵1/n

0

2

30

Excited to share that our work, SCARP: 3D Shape Completion in ARbitrary Poses for Improved Grasping, has been accepted at #ICRA2023 @ieee_ras_icra! Get started with SCARP at More details to be out soon! @drsrinathsridha, @skymanaditya1, @melonmusk42

0

5

23

I'll be officially representing @ieee_ras_icra (#ICRA2023) as a Social Media Ambassador! This is super duper exciting in many ways - first time at a robotics conference, my first robotics paper, and my first in-person major conference! See you at ICRA 2023 in London! @ieeeras

1

1

21

FaceOff tries to address a critical problem faced by the movie industry 🎬🎥 -- automating the task of swapping an actor's face and expression onto a double's body, COSTING MILLIONS OF 💲! Explore our code below! Refer to to learn more about the problem!

Happy to announce the code release of our #WACV2023 project that brings the task of video-to-video face-swapping 🎭 to the world of moviemaking! 🎥 🎞️🌟 Lights, camera, face swap! 🎬💫 Give it a go here - Check out the page for interesting future directions!

0

2

19

Perhaps @CVPR should consider making the author rebuttals longer than just one page? So many points are missed or not thoroughly explained because of the 1-page limit for answering complex questions posed by 3-4 reviewers.

1

0

15

Building great hardware is underrated. The right hardware is deeply problem-specific—if there's no strong fit, trusting an off-the-shelf solution can either bottleneck performance or lead to overengineering for a simple problem.

Companies who want to bring AI into the physical world are quickly realizing hardware isn’t a commodity. Building great functioning robot hardware is incredibly difficult. It’s no longer a story of great AI & dumb hardware. This is a story of Great Hardware + Great AI.

0

1

15

Presenting "INR-V" at the Indian Conference on Computer Vision, Graphics and Image Processing! Thank you @iitgn for the amazing platform and for hosting the amazing audience! Check our work here - Code will be out soon! @skymanaditya1 and @vinaypn

1

0

10

Excited to dive deep into discussions on humanoids, robot arms, and general-purpose robotics with anyone around Palo Alto/SF! If you're passionate about hardware, software, or AI in robotics and have strong opinions, let's brainstorm and share insights. @Stanford @UCBerkeley

1

1

10

Wohoo! @benjamin_s70415 and @shankaravaidy are two of the most brilliant minds I know, and I am fortunate to have worked with them!! Super excited to see where this goes 🚀

Robots can do flips and play chess, but they still can’t grab a snack or clean your table. Teleoperation is the key to unlocking real dexterity. It’s not a compromise—it’s a proven, powerful approach that combines human intuition with robotic precision. We’re not just using

0

0

9

🎥 Production teams needed to remove camera and equipment reflections from the mirrors in the shot! Looks like now they'll need to do the same for the retinas too!

Love this problem statement! Reconstruct the world from retinal reflections: The original paper from 2004 by Nishino and Nayar was a super inspiring example of creative research for me: Great to see a 3D version of it!!

0

0

8

Our code is out at - Shout-out to my collaborators - @aks1812, Madhav Krishna, @skymanaditya1, @VishalMandadi, @_ksaha, @Jayaram_Robot10, @Ajitsrikanth!

0

1

7

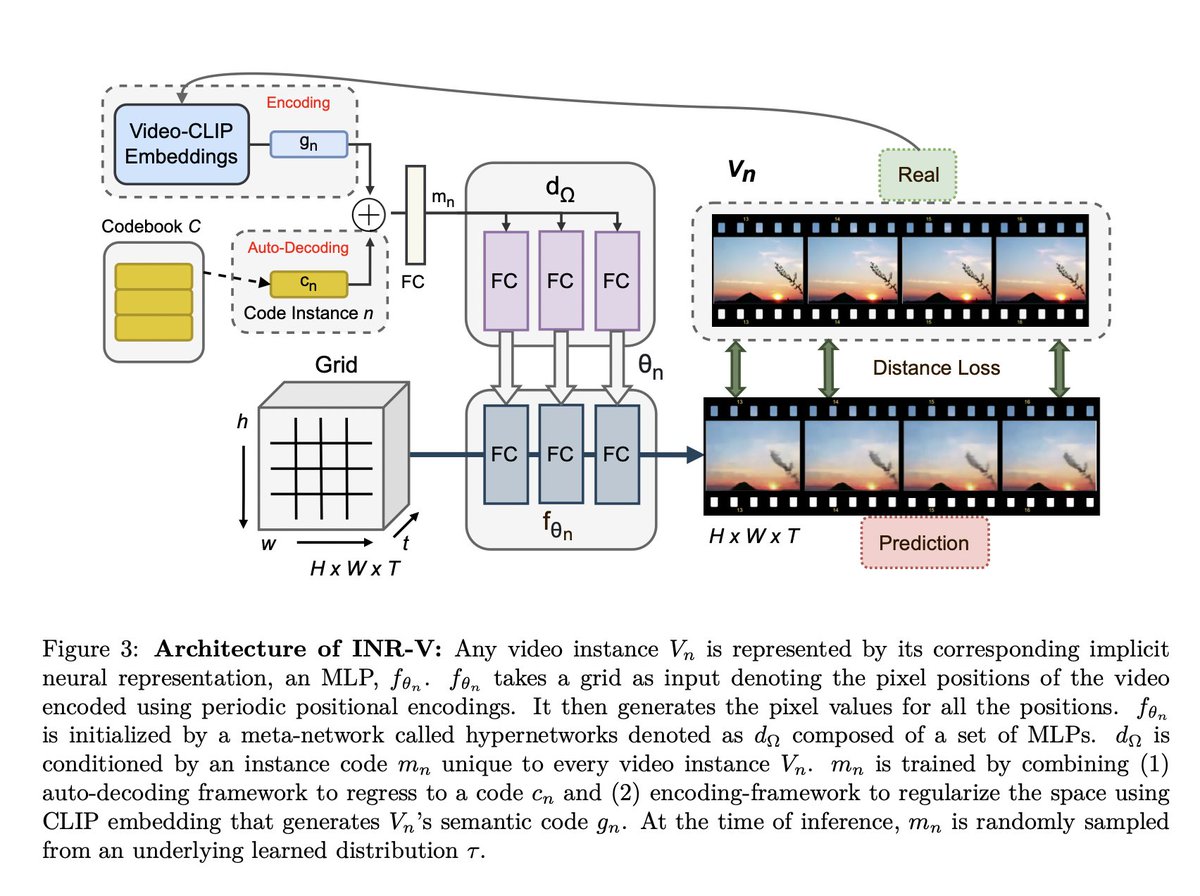

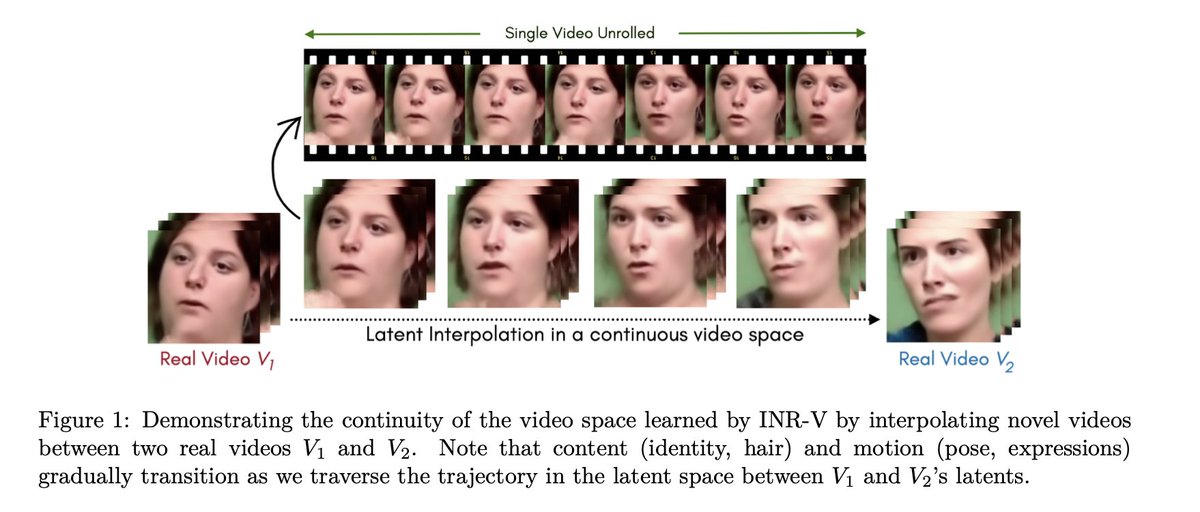

We have seen extensive work on "Image Inversion", but what is "Video Inversion"? In our latest work, INR-V, accepted at @TmlrOrg, we propose a novel video representation space that can be used to invert videos (complete and incomplete!). Project page:

INR-V: A Continuous Representation Space for Video-based Generative Tasks. Bipasha Sen, Aditya Agarwal, Vinay P Namboodiri, C.V. Jawahar.

1

1

6

@ManusMeta gloves are, without a doubt, the best finger-tracking gloves I’ve ever used. Pairing them with @PSYONICinc's Ability Hand made dexterous manipulation feel seamless. Excited to see these advancements paving the way for a robots-filled future we once only.

What if robots could peek around corners or adjust their viewpoint like humans? @MIT’s new research uses MANUS gloves and an actuated neck for intuitive #teleoperation, enabling robots to learn and adapt like never before. Read the full paper:

0

2

5

This is what we did at @icvgip2022, more or less :D With @skymanaditya1, @melonmusk42, and @gsc_2001

0

0

5

@siddancha This is a @RealmanRobotics. It is pretty good for its cost: 5kg payload for a 7.8kg arm! Plus, I know a lot of people who use these arms and have good things to say about them.

0

1

4

We came 3rd in #icra2022's Open Cloud Tabletop Rearrangement and Planning Challenge (OCRTOC). Here is an article about us that summarizes our journey:

0

2

4

Joint work with @vinaypn and @skymanaditya1.

INR-V: A Continuous Representation Space for Video-based Generative Tasks (TMLR 2022). Authors: Bipasha Sen, Aditya Agarwal, Vinay P Namboodiri, C.V. Jawahar. #neuralfieldsoftheday

0

1

4

Work done in collaboration with @qiaogu1997, @alihkw_, @_krishna_murthy, @SachaMori, @skymanaditya1, @duckietown_coo, and @florian_shkurti! Find out more at

0

0

5

@pulkitology @MIT_CSAIL @vinaypn Thank you! :):):) Looking forward to working with you and the group!

0

0

2

@thephotonguy Absolutely. I use it all the time for everything, from my notes to ideas, brainstorming, team meeting MOMs, task lists, etc. 100% recommend it!!

0

0

3

Nothing more rewarding than getting our work recognized!! Thank you, @WeeklyRobotics, for featuring SCARP #ICRA2023 as the "publication of the week"! Find the paper at

Excited to share that our recent work on "SCARP: 3D Shape Completion in ARbitrary Poses" #ICRA2023 has been featured as the "Publication of the Week" at @WeeklyRobotics. Joint work with @NerdNess3195 @melonmusk42 @drsrinathsridha

0

0

2

@ylecun It wasn't just a one-way street though; they gained immensely from the open source contributions and the tools and technologies developed by others. Giving birth to tons of startups was a parallel outcome!

0

0

2

@ShubhamC526 I have heard so many such horror stories here. I am really lucky to have gotten the best advisors in India. I wish profs (if not all, then at least most) would change the mindset of quick publications and actually start caring about the deeper problems and have a long-term vision.

1

0

2

Wohoo way to go robotics! (Although the intent of the post was a bit different).

Checking ICLR final decisions, it made me wonder why audio research is treated so poorly in ML conferences? 🥲 I did a simple keyword search on OpenReview and here is what I found! 👉 @iclr_conf #ICLR2023

0

0

2

. segregate the Indian indie artists into exclusive categories and playlists (ex. Indian indie artists) that may cause the listener's bias to creep in! More details about the work to be out soon! Work in collaboration with @skymanaditya1 and Prof. Vinoo Alluri.

0

0

1

Work in collaboration with @drsrinathsridha, @skymanaditya1, @melonmusk42, @rohithagaram, and K. Madhava Krishna! 👾🤖 Keep an eye out for our code - we are releasing the full version soon!

0

1

2

Sounds like a really exciting opportunity!!

If you have experience in generative modeling and differentiable rendering and are looking to join a fun team, I've recently co-founded a stealth startup in this space and we're looking for 1-2 ML experts still. Reach out w/ email & summary of what you've worked on in the past!

0

0

1

@aks1812 I think this is one thing that computer vision communities got right. People don't release code, and the tasks are often so niche that it is hard to find the exact paper to compare with. Every paper has its own task definition.

0

0

1

@GeorgiaChal Thank you for bringing this up, @GeorgiaChal. Looking forward to chatting with you at CoRL :)

0

0

1

@Stone_Tao I'm sorry about the paper. Although I do agree with your POV, I do think presentation is important. After all, the point of a paper is to allow researchers to read and learn from it, so I would think it is important to get the main points across.

1

0

1

@d3lue @bchesky @GocmenMurat Not true. Reviews help guests more than they do the hosts. I rely very much on reviews when selecting a place, so it is more like we are doing each other a favor.

2

0

1

@Manish__999 @MIT_CSAIL @pulkitology @vinaypn I think, in the end, it is about finding what is most mentally stimulating for you and what excites you the most.

0

0

1

In my #wacv2023 accepted work with @skymanaditya1, "FaceOff: A Video-to-Video Face Swapping System", we automate this process! With our model, one can swap the actor's face onto the double's face while preserving the actor's expressions and the double's pose and scene context!!

1

0

1