Angjoo Kanazawa

@akanazawa

Followers

16K

Following

4K

Statuses

804

Assist. Professor at @Berkeley_EECS, @berkeley_ai. KAIR, @nerfstudioteam, advising @WonderDynamics and @LumaLabsAI. she/her.

Berkeley, CA

Joined June 2011

Very excited about this work!!! The ability to continuously update your representation opens up many possibilities. It's simple yet versatile for all kinds of 3D tasks. A lot of results on our project website

Introducing CUT3R! An online 3D reasoning framework for many 3D tasks directly from just RGB. For static or dynamic scenes. Video or image collections, all in one!

5

28

231

Big congrats to the @LumaLabsAI team!!!

Introducing Ray2, a new frontier in video generative models. Scaled to 10x compute, #Ray2 creates realistic videos with natural and coherent motion, unlocking new freedoms of creative expression and visual storytelling. Available now. Learn more

1

4

53

I'm not at NeurIPS, but @davidrmcall and @holynski_ are just about to start their poster session on our paper, which presents a unified framework for explaining SDS and its variants.

0

5

35

RT @ethanjohnweber: We ran DUSt3R on our cartoon reconstruction setting and found that it struggles (even with ground truth correspondences…

0

10

0

RT @zhengqi_li: Introducing MegaSaM! 🎥 Accurate, fast, & robust structure + camera estimation from casual monocular videos of dynamic scen…

0

90

0

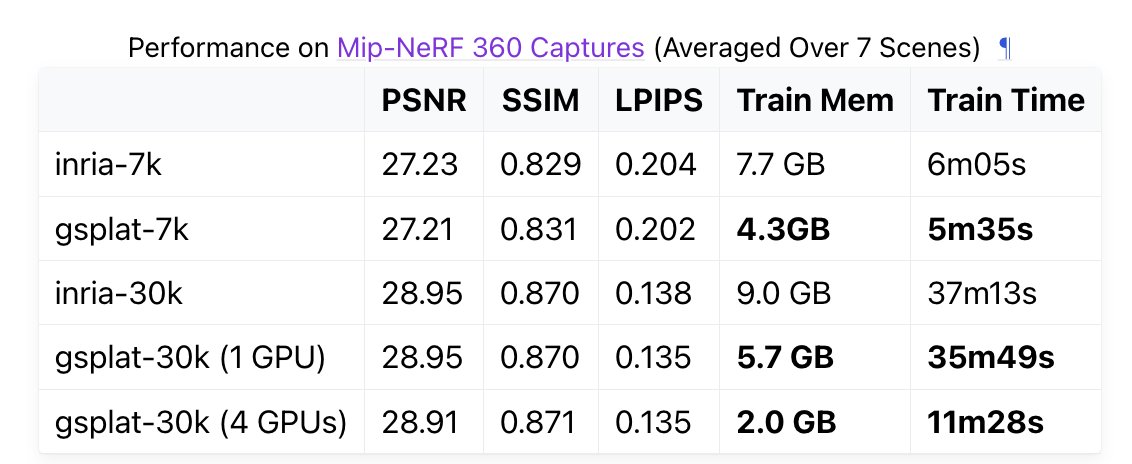

Hi! If you found the gsplat library useful, we wrote a whitepaper with benchmarking, conventions, derivations, and new features (great effort led by @_maturk & @ruilong_li 🙌).

2

29

281

Super cool project led by @gengshanY that shows how we can learn behavior models of agents from many videos by reconstructing them in a consistent world in 4D. TL;DR: we learn a simulator of his cat🐈!!

Sharing my recent project, agent-to-sim: From monocular videos taken over a long time horizon (e.g., 1 month), we learn an interactive behavior model of an agent (e.g., a 🐱) grounded in 3D.

1

10

116

Teach robots how to manipulate an object via 3D/4D reconstruction🧑🏻🏫! Will be presented at CoRL 2024 as an oral presentation.

Robot See, Robot Do allows you to teach a robot articulated manipulation with just your hands and a phone! RSRD imitates from 1) an object scan and 2) a human demonstration video, reconstructing 3D motion to plan a robot trajectory. #CoRL2024 (Oral)

1

19

187

Hellooo! On my way to Milan, my first conference after baby 😁 So excited to be back at #ECCV2024 and talk about my lab's work! You can find the talk details here:

9

3

152

RT @ruilong_li: 🌟gsplat==1.4.0🌟 is now released! - Supports 2DGS! (Kudos to @WeijiaZeng1) - Supports Fish-eye cam…

0

25

0

RT @vonekels: To what extent does social interaction affect behavior in couples swing dancing? Our work looks at how we can predict a danc…

0

25

0

4D from a single video is still a very challenging problem, but we're taking steps forward in Shape of Motion: we recover a global 4D representation that captures how things are moving across space and time, disentangled from the camera motion.

We present Shape of Motion, a system for 4D reconstruction from a casual video. It jointly reconstructs temporally persistent geometry and tracks long-range 3D motion. For more details, check our webpage and code

0

4

103

RT @ruilong_li: 3DGS-MCMC densification strategy is now officially supported in 🌟gsplat🌟 with a one-line code change!

0

16

0

More updates to gsplat! So cool to see latest methods getting merged to it so quickly.

🌟gsplat🌟 now supports multi-GPU distributed training, which nearly linearly reduces the training time and memory footprint. Now Gaussian Splatting is ready for city-scale reconstruction! Kudos to this amazing paper:

0

2

31

A simple but practical approach for Gaussians in the wild. It's very cool when you can fly into a photo interactively! Congrats to @CongrongX, an undergrad who's been making many awesome updates to @nerfstudioteam! He's applying to grad school this Fall! Highly recommend 🙂

Excited to share my first paper with @justkerrding and @akanazawa : Splatfacto in the Wild! Our method trains on a single RTX2080Ti and achieves real-time rendering! project page: paper:

0

2

63