Connor Leahy

@NPCollapse

Followers: 24,262

Following: 565

Media: 118

Statuses: 3,263

Hacker - CEO @ConjectureAI - Ex-Head of @AiEleuther - I don't know how to save the world, but dammit I'm gonna try

London

Joined January 2019



Pinned Tweet

If we build machines that are more capable than all humans, then the future will belong to them, not to us.

Everything else derives from this simple observation.

Thank you @cambridgeunion for inviting me to make the case that "This House Believes AI Is An Existential Threat."

121

208

915

I nominate Alan Turing for the first DeTuring Award.

60

156

1K

Remember when labs said if they saw models showing even hints of self awareness, of course they would immediately shut everything down and be super careful?

"Is the water in this pot feeling a bit warm to any of you fellow frogs? Nah, must be nothing."

101

168

1K

@tszzl

Thanks for your response roon. You make a lot of good, well put points. It's extremely difficult to discuss "high meta" concepts like spirituality, duty and memetics even in the best of circumstances, so I appreciate that we can have this conversation even through the psychic

142

69

1K

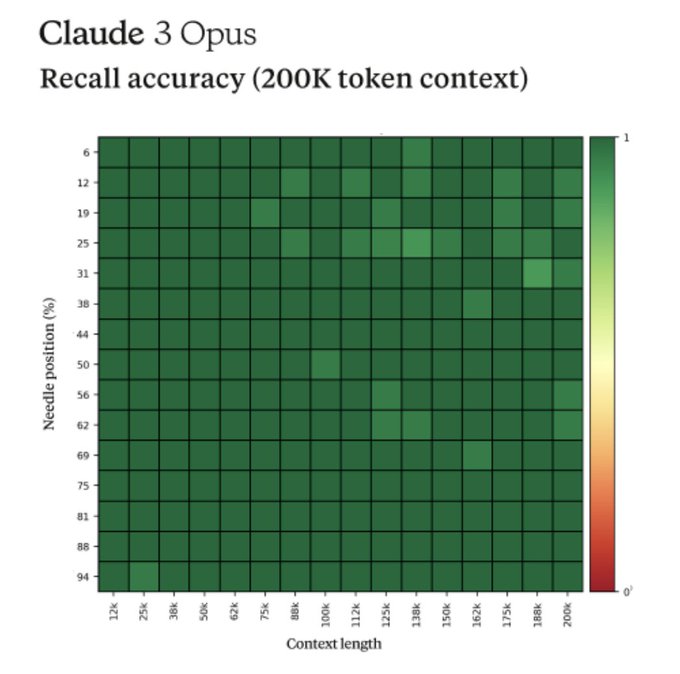

This is the kind of stuff that makes me think that there will be no period of sorta stupid, human-level AGI. Humans can't perceive 3 hours of video at the same time.

The first AGI will instantly be vastly superhuman at many, many relevant things.

63

123

983

This tweet resulted in me getting a notification with a pretty threatening aura

25

51

812

Hey @OpenAI, I've replicated GPT2-1.5B in full and plan on releasing it to the public on July 1st. I sent you an email with the model. For my reasoning why, please read my post:

#machinelearning #gpt2 #aisafety

42

285

760

The gods only have power because they trick people like this into doing their bidding.

It's so much easier to just submit instead of mastering divinity engineering and applying it yourself.

It's so scary to admit that we do have agency, if we take it.

In other words: "cope"

27

48

685

About 3 years ago I predicted we would be able to generate Hollywood quality movies completely with AI in 5 years, and no one believed me.

Well, as they say...lol, lmao

here is sora, our video generation model:

today we are starting red-teaming and offering access to a limited number of creators.

@_tim_brooks @billpeeb @model_mechanic are really incredible; amazing work by them and the team.

remarkable moment.

2K

4K

26K

63

37

524

Hinton is one of the greatest AI scientists to ever live, and he has quit Google in order to talk about the dangers of AI freely.

In case anyone was still somehow on the fence about whether things are serious.

13

68

473

No one truly understands our neural network models, and anyone that claims we do is lying.

Turing Award Winner Prof. Geoff Hinton points out how little we understand about SOTA #AI models like GPT-4.

Source:

11

113

653

38

59

452

Thank you @amanpour for having me on today!

These questions are extremely important for every single person alive today, and we should be demanding answers (preferably under oath) from the people racing forwards recklessly towards Godlike AI.

The ‘godfather’ of AI Geoffrey Hinton warns it’s not inconceivable AI could lead to the extinction of the human race.

AI researcher & expert Connor Leahy @NPCollapse fears it’s worse than that: “It’s quite likely, unfortunately.”

“We do not know how to control these things.”

201

774

2K

37

74

440

While I genuinely appreciate this commitment to ASI alignment as an important problem...

...I can't help but notice that the plan is "build AGI in 4 years and then tell it to solve alignment."

I hope the team comes up with a better plan than this soon!

51

67

433

While we do not agree on most things, I want to express my heartfelt condolences to @BasedBeffJezos for being from Quebec.

11

4

388

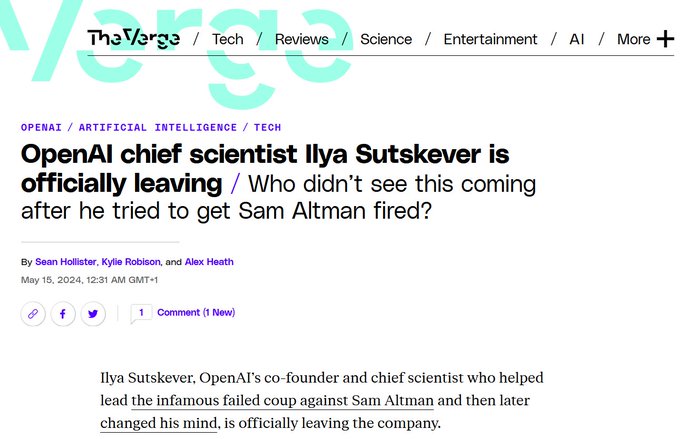

Who could have seen this extremely predictable next step happening?

The world is changing, fast.

13

46

371

"It really is so impossible to stop this thing! It's a totally external force we can't do anything to stop!", says the guy currently building the thing right in front of you with his own hands.

38

63

377

Heads of all major AI labs signed this letter explicitly acknowledging the risk of extinction from AGI.

An incredible step forward, congratulations to Dan for his incredible work putting this together and thank you to every signatory for doing their part for a better future!

30

27

330

I'm surprised by people expecting AI to stay at human level for a while.

This is not a stable condition: human-level AI is AI that can automate large parts of human R&D, including AI development. And it does so with immediate advantages over humans (no sleep, learning from one

@AISafetyMemes

Very wide probability bars, honestly. 95% confidence on timelines of 2029-2200 for ASI (I have significant probability mass on AGI staying roughly human-level for a while).

p(doom) around 0.1? Which of course makes it quite important to take AI risk seriously.

1

8

197

54

27

318

@pauljeffries

@lisperati

@ESYudkowsky

Conrad puts it well. I will elaborate excessively anyways because I am bored:

Normal people have a lot of good intuitions around certain things. Lots of bad intuitions around other things, of course.

You correctly point out that it actually is a strike against people like me

26

71

308

@Chrisprucha

I really dislike how vicious people are being to George, he has so far been the most steadfastly good faith accelerationist I have talked to, it was a pleasure and an honor.

But also, he's an adult, and he specifically requested a public, live, unedited discussion.

16

3

301

1v1, no items, fox only, final destination

lfg

@dwarkesh_sp

@ManifoldMarkets

Of course you're up for a debate now that there's upside for you and your friends to farm such an interaction.

Be real, you didn't give me the time of day originally because there wasn't enough clout in it for you to farm at the time, and you had your own biases against e/acc

117

21

552

23

12

296

I would like to thank roon for having the balls to say it how it is.

Now we have to do something about it, instead of rolling over and feeling sorry for ourselves and giving up.

39

32

296

I finished reading @Snowden's new book and wow, what a story. I thought I already had a good handle on it all, but this book really added so much nuance and the human element. It has even gotten me to reevaluate some of my own work even more carefully.

7

40

270

It's interesting to see the development of AI risk denialism and its parallels with climate change denialism before it.

Seems pseudoscientific denialist movements are a natural response to any kind of large future scale risk that is uncomfortable to people.

74

26

280

AI is indeed polluting the Internet.

This is a true tragedy of the commons, and everyone is defecting. We need a Clean Internet Act.

The Internet is turning into a toxic landfill of a dark forest, and it will only get worse once the invasive fauna starts becoming predatory.

59

47

270

Great post by @TheZvi on recent AutoGPT stuff.

I am even more pessimistic than him about a lot of things, but I'd like to particularly shout out the predictions he makes at the end of the post.

I'd like to publicly state that I also predict 19 will happen.

17

39

267

I think this may be the best podcast I've been a part of!

I got the opportunity to make a lot of new versions of arguments, both new and old, so even if you've heard me many times before, give this one a watch!

Are we headed toward an AI doomsday?

Connor Leahy (@NPCollapse) is CEO @ConjectureAI, an organization working to make the future of AI go as well as it possibly can

He's also Co-Founder of @AiEleuther, a non-profit AI research lab

Can the world be saved?

Let's find out:

14

15

81

43

39

269

There are only two times to react to an exponential: Too early, or too late.

---

Emmett is smart and in good faith, I respect him greatly and respect him putting his thinking out publicly, genuinely so! He's a great example of how we should be having these discussions.

If

18

31

257

Gonna be amazing, one of the people I've most wanted to talk to for a long time!

Don't miss our epic livestream with @NPCollapse and @realGeorgeHotz on Thursday on AI Safety.

41

39

405

21

15

253

"slow takeoff"

Orwellian. We can see things with our own eyes.

@NPCollapse

“This is exactly what makes me think there won’t be any slightly stupid human-level AGI.”

- Connor when someone shows him a slightly stupid human-level AGI, probably

You are in the middle of a slow takeoff pointing to the slow takeoff as evidence against slow takeoffs.

11

4

144

32

28

249

I wanted to get back to this question that I was asked a few days back after thinking about it some more.

Here are some things OpenAI (or Anthropic, DeepMind, etc) could do that I think are good and would make me update moderately-significantly positively on them:

1. Make a

@NPCollapse

@lxrjl

What would be something OAI could feasibly do that would be a positive update for you? Something with moderate-to-significant magnitude

7

0

22

32

45

252

This paper (by @FelixHill84 et al) is really an "It's all coming together" moment for @DeepMind, I feel.

Let me try to describe my takeaways from my first readthrough.

1/14

5

44

241

Add this to the pile of evidence that LLMs are way, way smarter than most people think they are.

15

42

228

Canary in the coal mine.

Congrats to Ilya and Jan for doing the right thing.

19

24

240

Wow! I am extremely impressed by the thoroughness and thoughtfulness of this article.

Too many good quotes to pick from...

11

35

234

You know those stupid scenes they always put in horror movies where you wonder how the characters could be so stupid as not to see the foreshadowing?

Good thing that never happens in real life!

15

25

241

Always worth repeating that no one actually understands how our AIs work or can predict their capabilities.

An honest admission from @sama:

“When/why a new capability emerges… we don't yet understand."

In other words, we hold our breath and pray our next model comes out just right: more capable than the last one, but not an ASI that goes rogue.

How is it legal to operate like this?

65

42

260

33

39

236

It's crazy how much of modern "techno optimism/accelerationism" is just cynicism masquerading as hope

A defeatist belief that we cannot build a better society and we shouldn't even try.

54

18

230

Not much time left.

37

20

228

The kind of thing you see in the background on a TV in the opening scene to a sci-fi movie before shit goes down.

24

30

223

“We have to realise what people are talking about: The destruction of the human race. […] Who would want to continue playing with that risk? It is preposterous, it is almost absurd when you think about it, but it is happening today.”

Well said by @MarietjeSchaake

“Concerns about AI are now making for coalitions of concerned politicians that I have never seen,” says @MarietjeSchaake. “The end of human civilization – who would want to continue playing with that risk? It is… almost absurd when you think about it, but it is happening today.”

44

161

417

18

45

215

There are only two times to react to an exponential:

Too early, or too late.

17

30

212

While I genuinely appreciate Joscha's aesthetics, I really find this kind of fatalistic "just lay down and die, and let The Universe™ decide" stuff utterly distasteful and sad.

"Decorative", ugh...

We can do better than this, the future is not yet determined.

26

10

213

Creating an unaligned more powerful competitor species is different from growing some cool grasses with slightly bigger seeds.

Hope that explains the difference.

24

11

209

I am very excited to speak to the @UKHouseofLords about this extremely urgent topic.

I am heartened by the increasing interest that both government and civil society have been showing in preventing the extinction risks we face.

We can still stop this!

Tomorrow we have @ConjectureAI's packed out event in the @UKParliament's @UKHouseofLords.

Our CEO (@NPCollapse) will be making the case to legislators, journalists, and other interested parties as to why the regulatory focus should be on artificial *general* intelligence.

4

12

71

22

23

211

Props to Jan for speaking out and confirming what we already suspected/knew.

fmpov, of course profit maximizing companies will...maximize profit. It never was even imaginable that these kinds of entities could shoulder such a huge risk responsibly.

And humanity pays the cost.

18

13

208

For the record, this exact paradigm is at least what I and other people around EleutherAI have been worried about for years; it was extremely obvious this was how things would go.

14

10

205

This is so strange and wondrous that I can feel my mind rejecting its full implications and depths, which I guess means it's art.

May you live in interesting times.

11

13

206

is =/= ought

I don't believe that just because someone/something is powerful, it therefore is morally right.

I don't think that ceding our future to whatever machine Richard cooks up in his lab that kills him, me, and our families is ok because it's smarter than us.

33

19

204

Guillaume is disappointingly much nicer and more reasonable than his insane, evil alter ego, and was great fun to talk to!

As expected, we share a lot of common principles, and disagree furiously on some of the most core points, and we don't shy away from it.

The best kind of debate!

Just finished recording debate w/ @NPCollapse on @MLStreetTalk (3.5 hour conversation)

Started off heated and ended well with us finding some common ground.

Stay tuned for the pod drop! (Date TBD)

18

11

241

5

4

198

I second what Andrew says. Some are honest about wanting humanity extinct, some are cryptic (the eacc kids), some are only honest behind closed doors. I've seen it.

Needless to say, I think those who believe this should be seen with the same contempt and indifference they have

45

24

192

Incredible bait, an actual work of art, just a masterpiece of trolling.

7

9

189

Fantastic effort! Thank you for publicly and honestly engaging with these ideas!

8

7

190

Existential risk comes from a teeny, tiny subset of all AI work, only the most extreme frontier general purpose systems.

99% of AI work currently being done, to great economic and scientific benefit, is not of this kind, and I am very in favor of it!

23

25

190

Your boss expects that if you succeed you could "maybe capture the light cone of all future value of the universe".

How would you describe that?

23

10

185

You may not like it, but this is what peak practical rationality looks like.

19

15

179

@BasedBeffJezos

Anarcho capitalists like you are like house cats, fully dependent on a system they neither understand nor appreciate.

Markets are a fantastic tool in the civilizational toolbox, but you use the right tool for the right problem. Nuclear weapons, law enforcement, pricing in

20

12

180

I think it's really great that people are seriously engaging with these ideas, thanks Jeremy!

Unfortunately, my opinion is that the world has an offense/defense asymmetry, and one maniac with a nuke and one good guy with a nuke results in a smoldering crater, not peace.

18

13

180

The podcast in which I finally reveal more details about my approach to AGI alignment!

Thanks to @FLIxrisk for having me on once more!

21

23

177

"Solid step towards AGI"

Why are you happy Shane? Haven't you said in the past AGI could kill everyone on Earth?

#GeminiAI is another solid step towards #AGI. Huge congrats to everyone at @GoogleDeepMind who made this amazing milestone happen… and we’re just getting started :-)

18

46

437

23

11

173

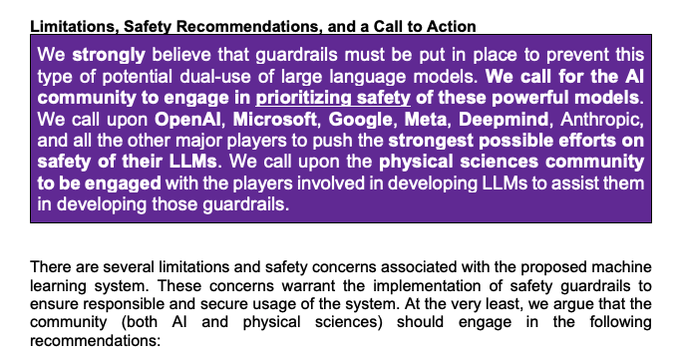

Yann seems to struggle with the concept of why "having an assistant that you can tell to synthesize nerve gas (as long as you give it a funny name)" may in the future be a problem.

God forbid a scientist actually extrapolates.

30

7

175

And there it is.

Who could have guessed that one of the most oppressive and censorious regimes might not want their tech companies racing ahead with unprecedented uncontrollable technology?

25

27

176

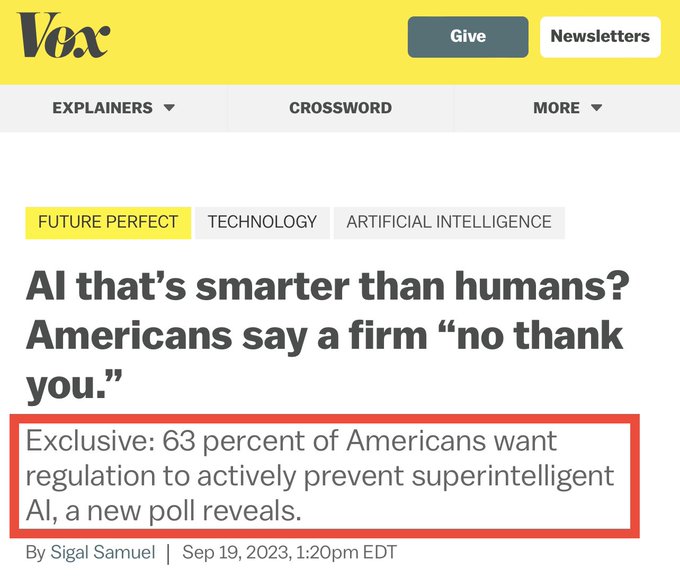

Although shocking at first glance, this is unsurprising to me - normal people know that building AI much more powerful than humans could spell disaster.

Even @OpenAI’s alignment head @JanLeike thinks there’s a 10-90% chance we all die!

So why don’t we just stop building AGI?

Interesting poll results by @DanielColson6’s new AI Policy Institute.

82% of US voters don’t trust tech executives to self-regulate on AI, 72% would support slowing down development.

Looks like it’s time for governments to step in and ban AGI development in the private sector?

11

27

75

66

30

173

Beyond parody. Literally could not have written this even as a bit with a straight face.

12

7

170

@BasedBeffJezos

Adversarial cooperation is the best kind of cooperation.

You think your ideas will make the world better than mine?

Let's find out!

6

7

165