Jan Leike

@janleike

Followers: 92,580 · Following: 331 · Media: 28 · Statuses: 607

ML Researcher @AnthropicAI. Previously OpenAI & DeepMind. Optimizing for a post-AGI future where humanity flourishes. Opinions aren't my employer's.

San Francisco, USA

Joined March 2016


Pinned Tweet

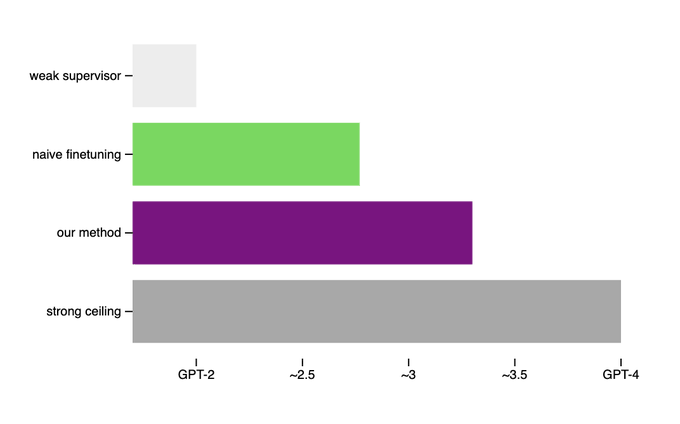

I'm excited to join @AnthropicAI to continue the superalignment mission!

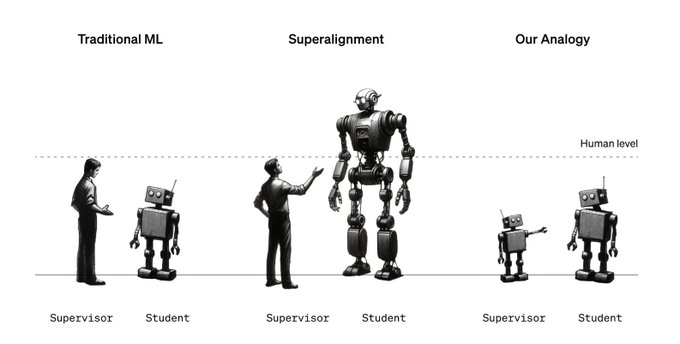

My new team will work on scalable oversight, weak-to-strong generalization, and automated alignment research.

If you're interested in joining, my DMs are open.

368 · 521 · 9K

This is super cool work! Sparse autoencoders are currently the most promising approach to actually understanding how models "think" internally.

This new paper demonstrates how to scale them to GPT-4 and beyond – completely unsupervised.

A big step forward!

8 · 78 · 739

I call upon Governor @GavinNewsom to not veto SB 1047.

The bill is a meaningful step forward for AI safety regulation, with no better alternatives in sight.

50 · 58 · 518

@karpathy

I don't think the comparison between RLHF and RL on Go really makes sense this way.

You don’t need RLHF to train AI to play Go because there is a highly reliable procedural reward function that looks at the board state and decides who won. If you didn’t have this procedural

9 · 25 · 375

If you're into practical alignment, consider applying to @lilianweng's team. They're building some really exciting stuff:

- Automatically extract intent from a fine-tuning dataset

- Make models robust to jailbreaks

- Detect & mitigate harmful use

- ...

13 · 32 · 250

Great conversation with @robertwiblin on how alignment is one of the most interesting ML problems, what the Superalignment Team is working on, what roles we're hiring for, what's needed to reach an awesome future, and much more

👇 Check it out 👇

15 · 38 · 227

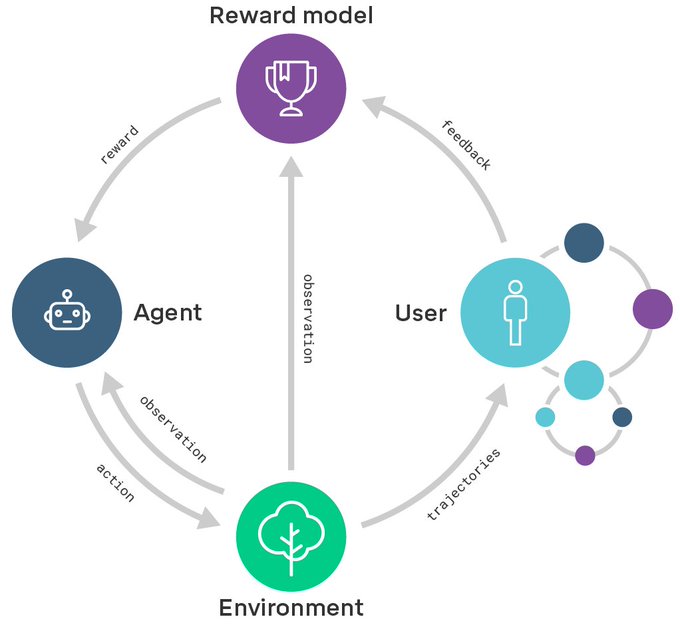

The agent alignment problem may be one of the biggest obstacles for using ML to improve people’s lives.

Today I’m very excited to share a research direction for how we’ll aim to solve alignment at @DeepMindAI.

Blog post:

Paper:

4 · 37 · 200

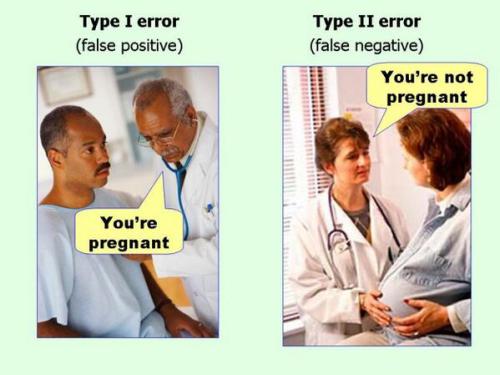

How do we uncover failures in ML models that occur too rarely during testing? How do we prove their absence?

Very excited about the work by @DeepMindAI’s Robust & Verified AI team that sheds light on these questions! Check out their blog post:

0 · 49 · 173

Very excited to deliver the #icml2019 tutorial on #safeml tomorrow together with @csilviavr!

Be prepared for fairness, human-in-the-loop RL, and a general overview of the field.

And lots of memes!

3 · 18 · 154

One of my favorite parts of the GPT-4 release is that we asked an external auditor to check if the model is dangerous.

This project, led by @BethMayBarnes, tested if GPT-4 could autonomously survive and spread. (The answer is no.)

More details here:

17 · 16 · 145

Kudos especially to @CollinBurns4 for being the visionary behind this work, @Pavel_Izmailov for all the great scientific inquisition, @ilyasut for stoking the fires, @janhkirchner and @leopoldasch for moving things forward every day. Amazing ✨

9 · 12 · 141

I'm super excited to be co-leading the team together with @ilyasut.

Most of our previous alignment team has joined the new superalignment team, and we're welcoming many new people from OpenAI and externally.

I feel very lucky to get to work with so many super talented people!

11 · 3 · 132

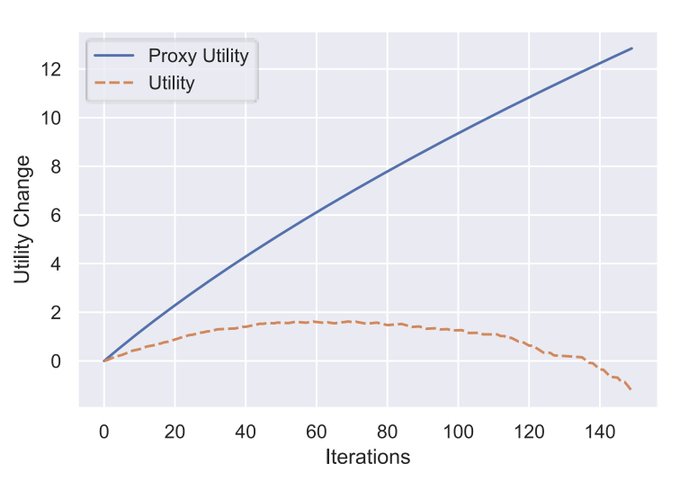

Well-explained blog post about over-optimizing reward models using simple best-of-n sampling:

By Jacob Hilton and @nabla_theta

3 · 23 · 131

Submitting a NeurIPS paper and unsure how to write your broader impact statement?

This blog post will guide you through it!

Comes with a few concrete examples, too.

By Carolyn Ashurst, @Manderljung, @carinaprunkl, @yaringal, and Allan Dafoe.

1 · 29 · 128

Big congrats to the team! 🎉

@mildseasoning, Steven Bills, @HenkTillman, @tomdlt10, @nickcammarata, @nabla_theta, @jachiam0, Cathy Yeh, @WuTheFWasThat, and William Saunders

8 · 6 · 119

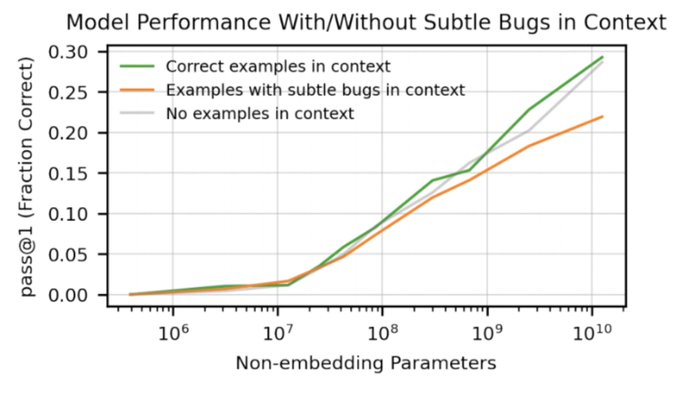

There are a lot of exciting things in the Codex paper, but my favorite titbit is the misalignment evaluations by @BethMayBarnes: Subtly buggy code in the context makes the model more likely to write buggy code, and this discrepancy gets larger as the models get bigger!

4 · 24 · 123

@benlandautaylor

The analogue to SB 1047 in the Hindenburg example would be that if you want to fill your zeppelins with hydrogen (despite safety experts advocating for helium as a safer alternative), you need to write a document about how that's safe enough and show it to the government,

8 · 1 · 104

@ESYudkowsky

We'll stare at the empirical data as it's coming in:

1. We can measure progress locally on various parts of our research roadmap (e.g. for scalable oversight)

2. We can see how well alignment of GPT-5 will go

3. We'll monitor closely how quickly the tech develops

14 · 4 · 95

This is very cool work! Especially the unsupervised version of this technique seems promising for superhuman models.

16 · 9 · 92

Amazing work, so proud of the team!

@CollinBurns4 @Pavel_Izmailov @janhkirchner @bobabowen @nabla_theta @leopoldasch @cynnjjs @AdrienLE @ManasJoglekar @ilyasut @WuTheFWasThat and many others

It's an honor to work with y'all! :excited-superalignment:

7 · 3 · 86