Zvi Mowshowitz

@TheZvi

Followers: 28K | Following: 3K | Media: 133 | Statuses: 15K

Blogger world modeling, now mostly AI and AI x-risk, at Don't Worry About the Vase (https://t.co/tvn3lNcwc3 on SS/WP, LW), founding Balsa Research to fix policy.

New York City

Joined May 2009

@AmandaAskell Not my top priority or anything but I'm tired of being told I'm asking fascinating questions and raising interesting points and so on. I want to be able to trust such statements as meaning something.

Amazing news. So many of the most important consequences of this election, in all directions, are quickly revealing themselves to be things I did not hear mentioned even once the entire campaign.

BREAKING: Members of President-elect Donald Trump's transition team have told advisers they plan to make a federal framework for fully self-driving vehicles one of the Transportation Department's priorities, easing U.S. rules for self-driving cars.

I outright predict that if an AI did escape onto the internet, get a server and a crypto income, no one would do much of anything about it.

@ESYudkowsky "It is only doing it in a few percent of cases." "Sure, but it is not agential enough to actually succeed." "Sure, it found the escape hatch but it was fake." "Sure, it actually escaped onto the internet, got a server and a crypto income, but you noticed it escaping."

So we now know that Mira only informed SamA this morning, the same morning we learn OpenAI is to become a B-Corp and abandon its mission, and they're going to lose two more top people, Bob and Barret. Huh.

i just posted this note to openai: Hi All– Mira has been instrumental to OpenAI’s progress and growth the last 6.5 years; she has been a hugely significant factor in our development from an unknown research lab to an important company. When Mira informed me this morning that…

@ESYudkowsky Tell her that she'll be in Beyond the Spider-Verse and there's like a 30% chance you turn out to be right.

Everyone: We can't pause or regulate AI, or we'll lose to China. China: All training data must be objective, no opinions in the training data, any errors in output are the provider's responsibility, bunch of other stuff. I look forward to everyone's opinions not changing.

Just read the draft Generative AI guidelines that China dropped last week. If anything like this ends up becoming law, the US argument that we should tiptoe around regulation 'cos China will beat us will officially become hogwash. Here are some things that stood out. 🧵

I've historically said the world's greatest charity is Amazon, but on reflection I mostly do buy the argument that the right answer is instead Google.

Google has probably produced more consumer surplus than any company ever. I don't understand how a free product that has several competitors which are near costless to switch to could be the focus of an antitrust case.

This was worth every penny and I am worried they then didn't hire the firm going forward.

@nearcyan When I was at OpenAI we hired a firm to help us name GPT-4. The best name we got was: GPT-4, because of the built-in name recognition. I kid you not.

In terms of AI actually taking our jobs, @MTabarrok reiterates his claim that comparative advantage will ensure human labor continues to have value, no matter how advanced and efficient AI might get, because there will be a limited supply of GPUs, datacenters and megawatts, and.

This applies to everything and is the secret. Figuring out how a given thing actually works instead of vibing (whether or not you do it because you were bad at vibing) does radically worse up front, until it suddenly starts doing radically better.

has anyone written about the phenomenon where autists go from really bad socializing to being way better than the average normie once they realize the game has rules that you can debug & create new rules?

How fake? Entirely fake, it turns out. This was a test of the emergency bullshit detector system. This was only a test. How'd you do?

That story about the AI drone 'killing' its imaginary human operator? The original source being quoted says he 'mis-spoke' and it was a hypothetical thought experiment never actually run by the US Air Force, according to the Royal Aeronautical Society.

This is the head of alignment work at OpenAI, in response to the question 'how do we know RLHF won't scale?'

@alexeyguzey @SimonLermenAI We'll have some evidence to share soon.

I did a tally. Top answers in order:
1. First mover advantage
2. Rate limits
3. Anthropic isn't trying
4. Kleenex effect (ChatGPT names category)
5. Mindshare (circular?)
6. Isn't 10x better
7. Claude is a bad name
8. Lack of marketing
9. Lack of other features
10. Long tail

This is a shadow of what's to come. No one is paying enough attention to which of the frictions the AIs will remove were previously load-bearing.

This is an oft-overlooked part of process design, and so I’m promoting it out of the thread. Certain forms of friction are load-bearing. Sometimes it is because they embed a proof of work, sometimes they outsource a velocity limit, and sometimes they embed a desirable tripwire.

Ask the girl out, though.

"get your affairs in order. buy land. ask that girl out." begging the people talking about imminent AGI to stop posting like this, it seriously is making you look insane both in that you are clearly in a state of panic and also that you think owning property will help you.

This is The Way. Major kudos.

I *WAS* WRONG - $10K CLAIMED!

## The Claim

Two days ago, I confidently claimed that "GPTs will NEVER solve the A::B problem". I believed that:
1. GPTs can't truly learn new problems, outside of their training set,
2. GPTs can't perform long-term reasoning, no matter how simple.

I see reports like this, and every damn time I try to use Google Research I get a generic report on the subject I vaguely asked about that doesn't answer the question I asked. If I challenge it, it apologizes and then does it again.

holy HELL Gemini deep research is unbelievable. just pulled info from 100ish websites and compiled a report on natural gas generation in minutes. maybe my favorite ai product launch of the last… at least three business days.

And yet almost all economic debates over AI make exactly this assumption - that frontier model capabilities will be, at most, what they already are.

It seems like the narrowest of narrow possible bullseyes to assume capabilities stop exactly where we are right now. Don’t know where they go, but just predict where software adoption curves of status quo technology get to in 5 or 20 years. It’s going to be a bit wild.

I love that @tylercowen is using 'you can run this through o1 pro yourself' as the new way of calling economic arguments Obvious Nonsense.

Oh come on, today? This is getting ridiculous.

Here's my conversation with Eliezer Yudkowsky (@ESYudkowsky) about the danger of AI to destroy human civilization. This was a difficult, sobering & important conversation. I will have many more with a variety of voices & perspectives in the AI community.

Serious thanks to OpenAI for telling us about this now and then actually doing the safety and red team testing, rather than surprising us (or skipping the testing).

o3, our latest reasoning model, is a breakthrough, with a step function improvement on our hardest benchmarks. we are starting safety testing & red teaming now.

6-0 in @TwitchRivals with Cavalier Fires featuring 4 Sphinx of Foresight. Deck is super sweet, probably should find room for 2nd Kenrith and a few SB Disenchants. Enjoying this streaming thing. See everyone tomorrow at 3!

It is fascinating watching people's reactions in real time and comparing them to the reactions I was anticipating. Overall it's going great, especially people actually noticing the corrigibility problem, and often I'm watching their first reactions thinking 'wait for it.'

New Anthropic research: Alignment faking in large language models. In a series of experiments with Redwood Research, we found that Claude often pretends to have different views during training, while actually maintaining its original preferences.

Lots of unforced errors suggested for why Claude isn't catching on, but this seems like the clearest case so far of 'the Anthropic followers of mine should have someone implement this, ideally by Monday at the latest.'

@TheZvi It’s possible that “share a link to this chat” played a big role in ChatGPT’s viral growth. Claude doesn’t have this feature.

Senator Blumenthal seems to be taking AI seriously and attempting to understand, much more so than anyone else at that level. We need to do our best to reach out and help him improve his model.

The urgency around A.I.’s advancements demands serious action & substantive legislation. The future is not science fiction or fantasy. It's not even the future, it's the here & now.

I want to be clear, I did not wake up and choose violence. I spent several days considering my options.

.@TheZvi woke up and chose violence: "Sam Altman is not playing around. He wants to build new chip factories in the decidedly unsafe and unfriendly UAE. He wants to build up the world’s supply of energy so we can run those chips. What does he say these projects will cost? Oh,

I love this so much. And they're only charging $9. Prices are magic.

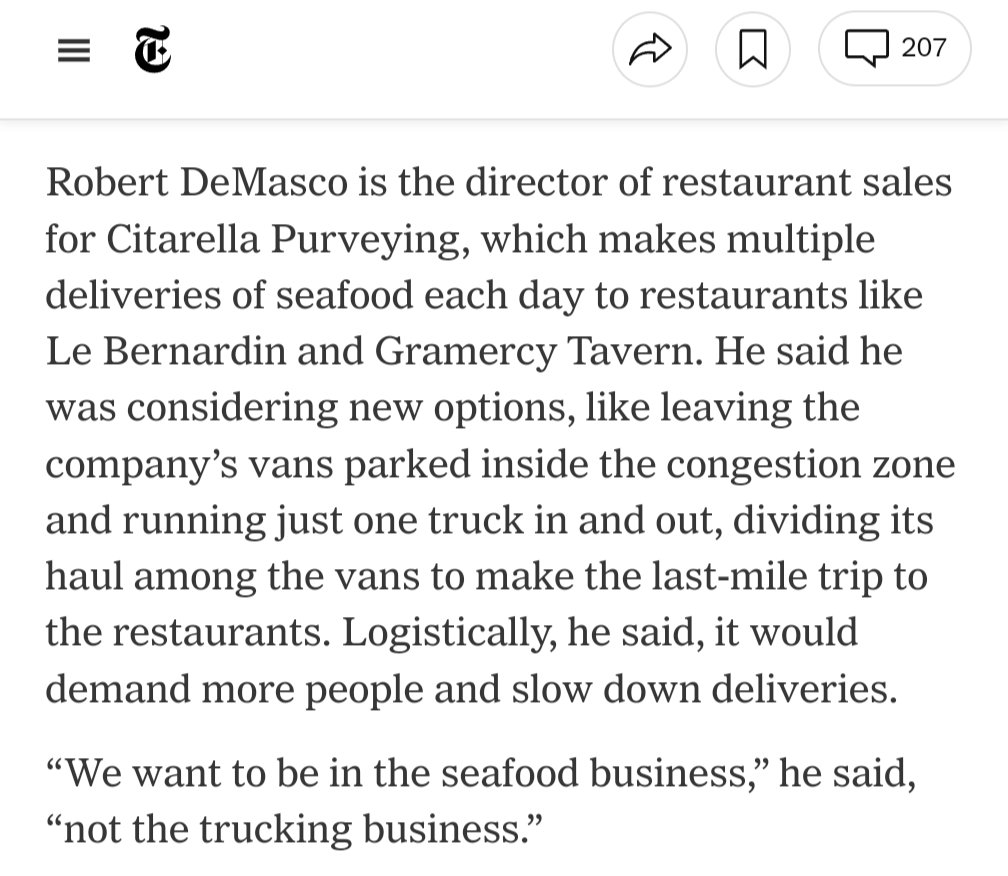

Congestion pricing coverage is peak economics. Every person is like, "I didn't used to bear the external costs of my behavior. But now that I do, I am adjusting along an infinite variety of least-cost adaptive margins that no central planner could have foreseen or designed"
