Chunting Zhou

@violet_zct

Followers

2,641

Following

278

Media

30

Statuses

138

Research Scientist at FAIR. PhD @CMU . she/her.

Seattle, WA

Joined July 2015

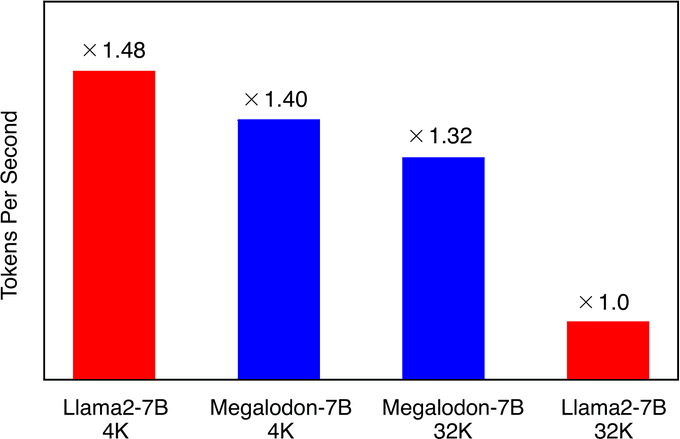

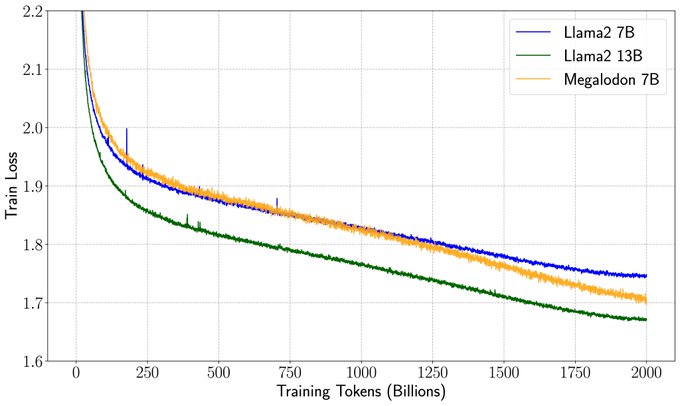

Mega is now open source at:

Feel free to play with it!

@MaxMa1987

@XiangKong4

@junxian_he

@liangkegui

@jonathanmay

@gneubig

@LukeZettlemoyer

2

32

174

Introducing FlowSeq: Generative Flow-based Non-Autoregressive Seq2Seq generation

@emnlp2019

()!

FlowSeq allows for efficient parallel decoding while modeling the joint distribution of the output sequence.

Our code is at .

1

47

170

Check out our recent work on parameter-efficient fine-tuning. We present a unified framework that establishes connections between state-of-the-art methods (e.g. Prefix-Tuning, Adapters, LoRA).

Great collaboration with @junxian_he and others @MaxMa1987, @BergKirkpatrick, @gneubig!

4

25

152

🚀 Excited to introduce Chameleon, our work in mixed-modality early-fusion foundation models from last year! 🦎 Capable of understanding and generating text and images in any sequence. Check out our paper to learn more about its SOTA performance and versatile capabilities!

3

19

112

Does syntactic reordering help in Neural Machine Translation? Check out our @emnlp2019 paper: we reorder target-language monolingual sentences into the source order and use them as an additional source of training supervision. (1/2)

1

25

82

@WenhuChen

@Teknium1

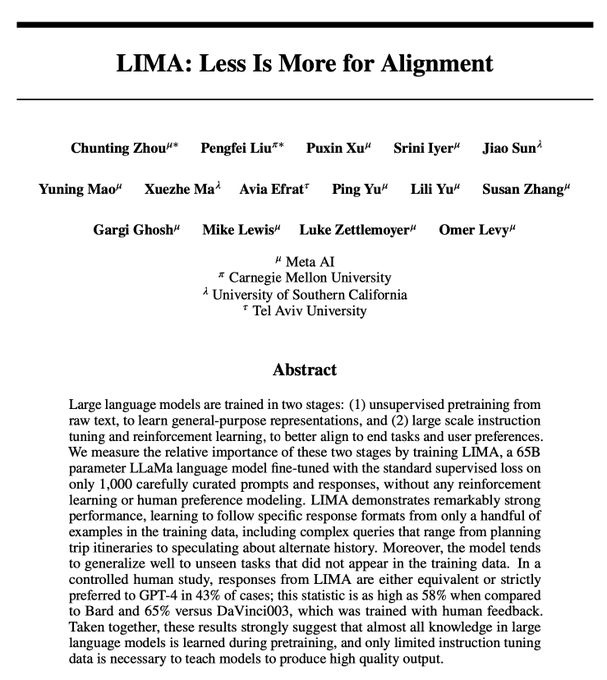

I think the motivation of LIMA is not to quantify the number of SFT examples that are needed but to highlight (1) how important high-quality SFT data is and (2) the superficial alignment hypothesis, where a pretrained LLM stores all the knowledge and can be easily tuned into an

0

4

34

This was my internship project at FAIR last summer. Thank you to my awesome collaborators: @gh_marjan, @LukeZettlemoyer, @thoma_gu, @gneubig, @guzmanhe and Mona Diab

Paper:

Code: (with our annotated test sets in MT) [2/n]

1

3

17

Very clear explanation of the connection between EMA and recent diagonal SSMs! We will see how Mega works by replacing EMA with S4D.

0

0

17

Wonderful collaborations with @MaxMa1987, @_xiaomengy_, @XiongWenhan, @BeidiChen, @liliyu_lili, @haozhangml, @jonathanmay, @LukeZettlemoyer and @omerlevy_.

Paper:

1

3

17

Thanks @pmichelX for tweeting our work! Please check out our work if you'd like to know when group distributionally robust optimization works well (perfect partition) and when it does not (imperfect partitions of groups). 🧐

If you are interested in how models can pick up spurious features from biased training data, and how we can train them to be robust to these spurious correlations, even with imperfect information, I highly recommend that you check out @violet_zct's ICML paper

2

16

70

0

2

15

Great collaboration with my amazing coauthors! @stefan_fee, Puxin Xu, @sriniiyer88, @sunjiao123sun_, @yuning_pro, @MaxMa1987, @AviaEfrat, Ping Yu, @liliyu_lili, @suchenzang, @gargighosh, @ml_perception, @LukeZettlemoyer and @omerlevy_! 🥰

0

1

15

Great collaborations with @MaxMa1987, @XiangKong4, @junxian_he, @liangkegui, @gneubig, @jonathanmay and @LukeZettlemoyer.

Paper:

Code will be released soon; please DM us if you want to try our model now.

3

0

12

Feel free to stop by and say hi at our poster session (Poster session 6, spot B1, 9 PM-11 PM PST, July 22nd).

Code available at: .

0

2

9

Great findings and analysis by @xiamengzhou on the pre-training trajectories (intermediate checkpoints) across different model scales! Please check it out.

0

0

10

Well-executed benchmark on web agents, check out this from @shuyanzhxyc!

0

1

10

We find significant improvements in low-resource MT settings with divergent language pairs.

With @gneubig, @MaxMa1987 and @JunjieHu12! (2/2)

0

1

10

@rasbt

Thanks for tweeting it! Just a note: for the 7B model, I recommend upsampling the author-written data + NLP by a factor of two.

1

0

8

@zimvak

@arankomatsuzaki

We have compared with Transformer-XL, please check out our results on Wikitext-103.

0

0

7

Check it out! Controllable summarization with great results from @junxian_he.

Glad to see the blog post up! I had a wonderful time working on controllable summarization with @SFResearch last year. We explored a keyword-based way to control summaries along multiple dimensions.

Models and demos are available at @huggingface (Credit to @ak92501)

1

5

29

1

0

5

@boknilev

@jonathanmay

I know this benchmark, but unfortunately we don’t have a pre-trained Mega yet. Scrolls is definitely a great benchmark to test on once there is a pre-trained Mega.

0

0

4

@rasbt

Note 3: Directly re-learning the <eos> token as the end-of-turn token may not work for small models (7B). If you don’t want to bother inserting a new token <eot> into the original model weights, you can use other ways to separate the dialogue turns,

0

0

3

@NielsRogge

@MaxMa1987

We are releasing the code soon, please DM us for the code and scripts if you'd like to try it now.

0

0

3

@rajhans_samdani

We’ll definitely release the test set and our generations, not sure if we can release the human ratings, need to check with legal team :-)

1

0

3

@rasbt

Note 2: Using proper regularization (my recommended value: 0.2 for 7B and 30B, 0.3 for 65B) is important when training on small data, but the specific form is not. You can use regular hidden dropout instead of the residual dropout described in the paper.

1

0

2

@yoquankara

@junxian_he

It would be great if you could share the results with us later. Thanks, and no rush!

0

0

2

@KarimiRabeeh

@ak92501

@junxian_he

Hey, thanks for your question. Simply put, Mix-and-Match employs prefix-tuning at the attention layers with a very small bottleneck dimension (the length of the prefix vectors, e.g. 30) and our improved adapters with larger bottleneck dimensions at the FFN layers.
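To make the size difference in this reply concrete, here is a rough per-layer parameter count. It is only a sketch under stated assumptions: the hidden size of 1024 and the FFN adapter bottleneck of 512 are hypothetical values chosen for illustration, biases and scaling factors are ignored, and the helper names are not from the paper or its code.

```python
def prefix_params(prefix_len, d_model):
    """Trainable parameters of one layer's prefix (assumed layout).

    One learned key vector and one learned value vector per prefix position.
    """
    return 2 * prefix_len * d_model


def adapter_params(d_model, bottleneck):
    """Trainable parameters of one bottleneck adapter (biases ignored).

    Down-projection (d_model x bottleneck) plus up-projection (bottleneck x d_model).
    """
    return 2 * d_model * bottleneck


d_model = 1024        # hypothetical hidden size
ffn_bottleneck = 512  # hypothetical FFN adapter bottleneck

print("prefix (length 30):", prefix_params(30, d_model))
print("FFN adapter:", adapter_params(d_model, ffn_bottleneck))
```

Under these assumed sizes, the attention-side prefix is far smaller than the FFN-side adapter, which matches the "very small" versus "larger" bottleneck split described in the reply.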

0

0

2

@_albertgu

Thanks! Yes, we described this connection with S4 and its variants in the last paragraph of Section 3.2

0

0

2

@KarimiRabeeh

@ak92501

@junxian_he

This structure gives the best performance with the fewest additional parameters. This instantiation is derived from our unified framework of different parameter-efficient models, which is reasoned through and explained in the paper.

1

0

2

@Bluengine

EMA can be computed at once for all tokens since we can compute its kernel in advance. GRU, on the other hand, can only be computed sequentially.
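A small sketch of the point in this reply, assuming a plain one-dimensional EMA, y_t = alpha * x_t + (1 - alpha) * y_{t-1}, with a zero initial state. This is a simplification and not Mega's actual EMA variant; the function names are illustrative. Unrolling the recurrence gives a fixed kernel k_j = alpha * (1 - alpha)^j, so each output depends only on the inputs and the kernel, not on the previous output.

```python
def ema_sequential(x, alpha):
    """EMA as a recurrence: each step needs the previous output."""
    y, prev = [], 0.0
    for x_t in x:
        prev = alpha * x_t + (1.0 - alpha) * prev
        y.append(prev)
    return y


def ema_kernel(x, alpha):
    """Same EMA as a causal convolution with a kernel computed in advance."""
    n = len(x)
    # k_j = alpha * (1 - alpha)^j, independent of the input sequence
    kernel = [alpha * (1.0 - alpha) ** j for j in range(n)]
    # y_t = sum_{j <= t} k_j * x_{t-j}; no y_t depends on another y,
    # so all positions could be computed in parallel.
    return [sum(kernel[j] * x[t - j] for j in range(t + 1)) for t in range(n)]


x = [1.0, 2.0, 3.0, 4.0]
a = ema_sequential(x, 0.5)
b = ema_kernel(x, 0.5)
assert all(abs(u - v) < 1e-12 for u, v in zip(a, b))
```

A gated recurrence such as a GRU has no fixed kernel of this kind, because its update weights depend on the inputs and the previous hidden state.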

0

0

1

@simran_s_arora

@srush_nlp

@realDanFu

@SonglinYang4

Thank you Sasha! It was really fun talking and discussing with you 🧠

0

0

1

@xhluca

@MaxMa1987

@XiangKong4

@junxian_he

@liangkegui

@jonathanmay

@gneubig

@LukeZettlemoyer

We have that plan, but before that we’d like to make a more efficient version first.

0

0

1

@SinclairWang1

@gneubig

@junxian_he

@MaxMa1987

@BergKirkpatrick

Thanks! And yes, we are releasing the code soon.

1

0

1

@_ironbar_

For the 7B model, I found it necessary to use this additional token instead of the original <eos>. For larger models, it doesn’t matter too much. But I guess other methods, e.g. <User>: xx <Assistant>:xx, may also work, although I haven’t tested them myself.
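The two ways of separating dialogue turns mentioned in this reply can be sketched as plain string formatting. This is only an illustration: the exact training template is not given here, so the spacing, the `<eot>` placement, and both helper names are assumptions. Registering `<eot>` as a real token in a tokenizer and model is a separate step not shown.

```python
EOT = "<eot>"  # end-of-turn marker string; assumed to be added to the vocabulary elsewhere


def format_with_eot(turns, eot=EOT):
    """Close every turn (user or assistant) with an end-of-turn marker."""
    return "".join(f"{text}{eot}" for text in turns)


def format_with_roles(turns):
    """Alternative: prefix alternating turns with role tags instead of a new token."""
    roles = ("<User>", "<Assistant>")
    return " ".join(f"{roles[i % 2]}: {text}" for i, text in enumerate(turns))


dialogue = ["What is Mega?", "A sequence model that combines EMA with attention."]
print(format_with_eot(dialogue))
print(format_with_roles(dialogue))
```

The role-tag variant avoids changing the vocabulary, which is the trade-off the reply points at, though the reply also notes it was not tested.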

0

0

1

@nbroad1881

@MaxMa1987

@XiangKong4

@junxian_he

@liangkegui

@gneubig

@jonathanmay

@LukeZettlemoyer

That's a good suggestion! We will release the checkpoints soon, and will consider putting them on the hf hub, too.

0

0

1

@srchvrs

Could you clarify which head you are talking about, the attention head or the classification head? We view prompt tuning (prepending vectors before inputs) as an adapter used at the first attention layer.

1

0

1