Lili Yu (Neurips24)

@liliyu_lili

Followers

2K

Following

64

Statuses

95

AI Research Scientist @AIatMeta (FAIR) Multimodal: Megabyte, Chameleon, Transfusion Phd @MIT

Seattle, WA

Joined January 2015

🚀 Excited to share our latest work: Transfusion! A new multi-modal generative training combining language modeling and image diffusion in a single transformer! Huge shout to @violet_zct @omerlevy_ @michiyasunaga @arunbabu1234 @kushal_tirumala and other collaborators.

Introducing *Transfusion* - a unified approach for training models that can generate both text and images. Transfusion combines language modeling (next token prediction) with diffusion to train a single transformer over mixed-modality sequences. This allows us to leverage the strengths of both approaches in one model. 1/5

5

17

103

RT @physical_int: Many of you asked for code & weights for π₀, we are happy to announce that we are releasing π₀ and pre-trained checkpoin…

0

202

0

You did amazing job over the summer !

Stay tuned for more updates and check out our full paper here: 😺 P.S. My internship at Meta has been an incredible experience. Working with the team has been both rewarding and inspiring, and I’ve learned so much from each of my collaborators. I highly recommend this internship opportunity to researchers seeking to grow and make meaningful contributions!

0

0

14

RT @JunhongShen1: Introducing Content-Adaptive Tokenizer (CAT) 🐈! An image tokenizer that adapts token count based on image complexity, off…

0

45

0

Checking out our new work, LlamaFusion: a new approach to empower pretrained text-only LLMs for multimodal (text+image) understanding and generation while *fully preserves* the text capability

Introducing 𝐋𝐥𝐚𝐦𝐚𝐅𝐮𝐬𝐢𝐨𝐧: empowering Llama 🦙 with diffusion 🎨 to understand and generate text and images in arbitrary sequences. ✨ Building upon Transfusion, our recipe fully preserves Llama’s language performance while unlocking its multimodal understanding and generation ability ��

0

19

168

RT @garrethleee: 🚀 With Meta's recent paper replacing tokenization in LLMs with patches 🩹, I figured that it's a great time to revisit how…

0

235

0

Thanks again for the invite. Had very fun discussion and learned a ton from other researchers.

📢 Come join us for our 1st Workshop on Responsibly Building the Next Generation of Multimodal Foundational Models @NeurIPSConf. We have seen great progress in #GenAI and have encountered various challenges in aspects of responsibility. What precautions do we need to take beforehand for the Multimodal future? 🤔 Invited talks & exciting panels from: @anikembhavi, @uiuc_aisecure, @FeiziSoheil, @davidbau, @Qdatalab, @jasonbaldridge, Lijuan Wang, @furongh, and @liliyu_lili. 🔥🔥 More details: #NeurIPS2024 #ResponsibleAI #GenAI

0

0

12

(Wasn't able to join the the workshop in person, but watched the recording afterwards.) I greatly appreciate deep thought about the modality gap and also the deep learning history how we got here. Very inspiring to all the researchers in the fields. I am big fan of simplicity and love the idea "no more two-stage training". Our team were able to kill tokenizer in text llm working from megabyte ( to BLT (. Can't wait to do the same for other modalities.

0

0

2

Thanks for organizing! Had tons of fun there.

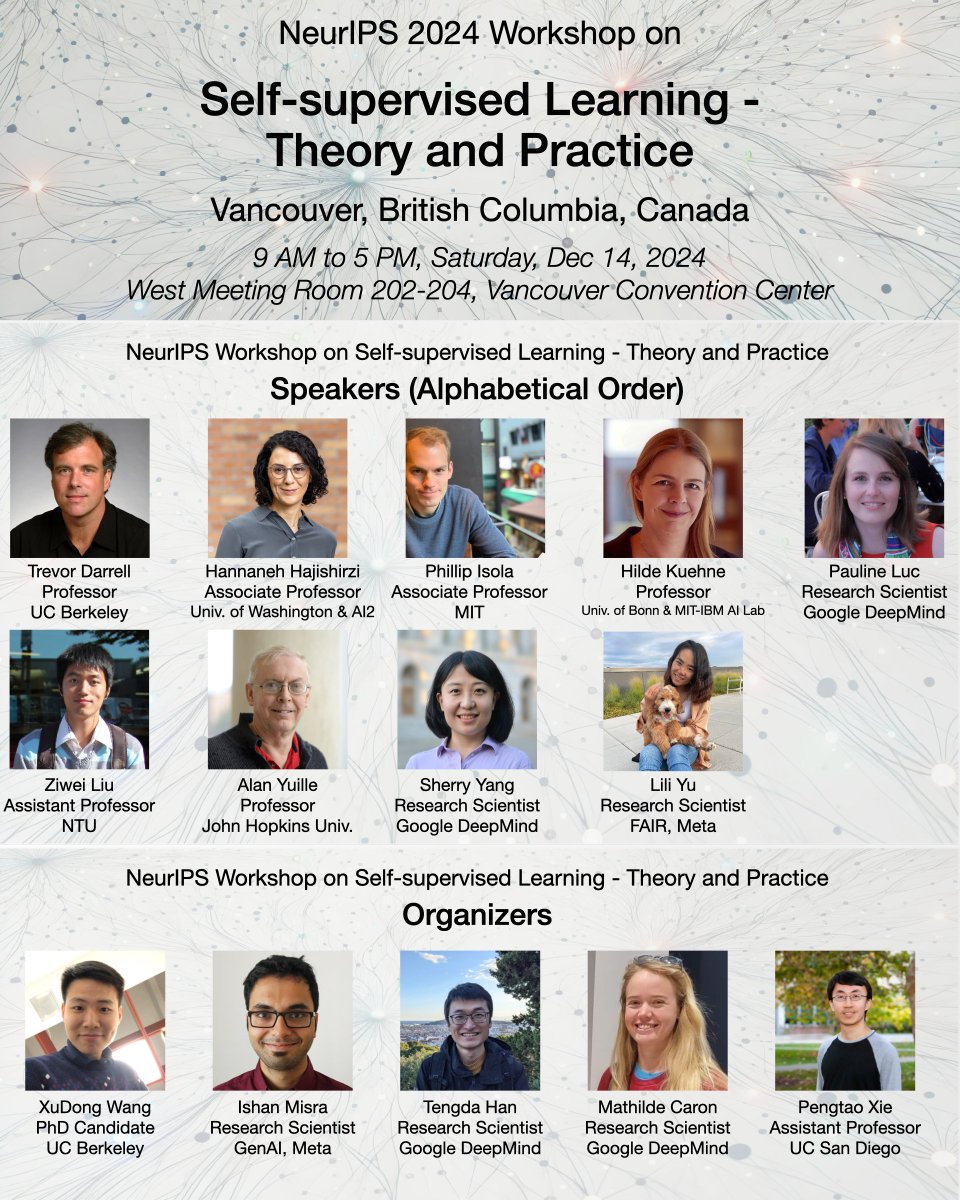

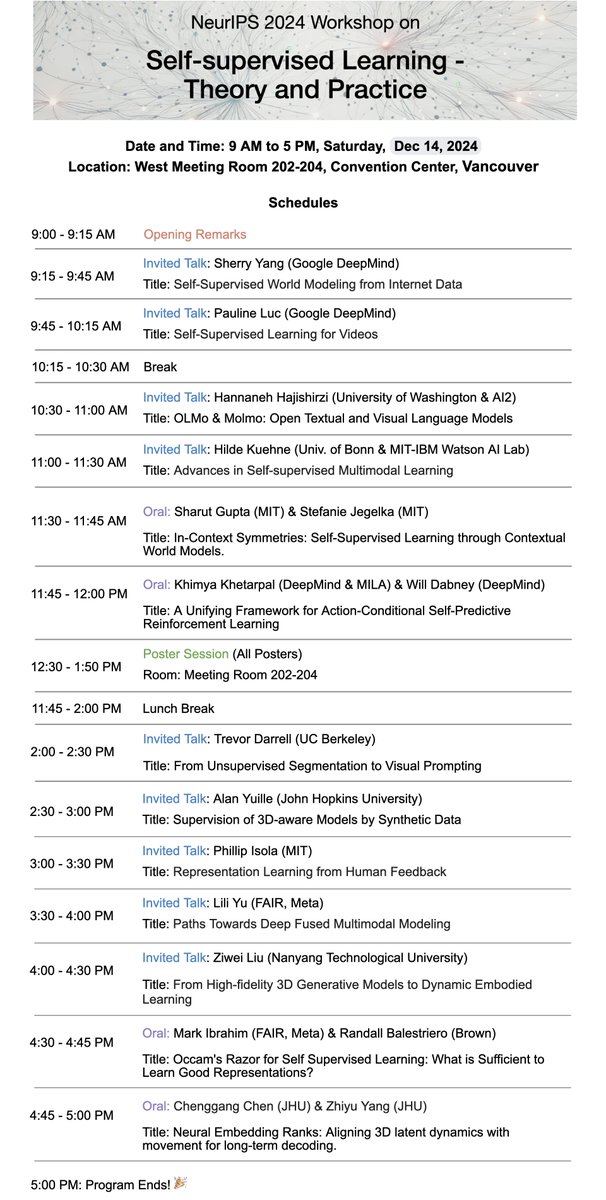

🚨Join us at the NeurIPS2024 Workshop on Self-Supervised Learning - Theory and Practice! Featuring talks from leading researchers at UC Berkeley, MIT, UW, FAIR, DeepMind, MILA, AI2, NTU, JHU, Brown, and UofBonn! 🗓️ Sat, Dec 14, 2024 📍 West Meeting Room 202-204 🧭 📌 See the attached schedule for details

1

0

11

RT @furongh: I saw a slide circulating on social media last night while working on a deadline. I didn’t comment immediately because I wante…

0

185

0

RT @AaronJaech: Maybe if this gets enough retweets, the genai team will use it in their next llama model?

0

4

0

RT @scaling01: META JUST KILLED TOKENIZATION !!! A few hours ago they released "Byte Latent Transformer". A tokenizer free architecture th…

0

730

0

RT @sriniiyer88: New paper! Byte-Level models are finally competitive with tokenizer-based models with better inference efficiency and robu…

0

22

0

We scaled up Megabyte and ended up with a BLT! A pure byte-level model, has a steeper scaling law than the BPE-based models. With up to 8B parameters, BLT matches Llama 3 on general NLP tasks—plus it excels on long-tail data and can manipulate substrings more effectively. The team did excellent work to make it work, check out @ArtidoroPagnoni 's thread for more details.

🚀 Introducing the Byte Latent Transformer (BLT) – An LLM architecture that scales better than Llama 3 using byte-patches instead of tokens 🤯 Paper 📄 Code 🛠️

0

9

71

RT @jaseweston: Byte Latent Transformer 🥪🥪🥪 Introduces dynamic patching of bytes & scales better than BPE

0

38

0

RT @__JohnNguyen__: 🥪New Paper! 🥪Introducing Byte Latent Transformer (BLT) - A tokenizer free model scales better than BPE based models wit…

0

68

0

RT @m__dehghani: Interactive and interleaved image generation is one of the areas where Gemini 2 Flash shines! A thread for some cool examp…

0

10

0

RT @iScienceLuvr: Training Large Language Models to Reason in a Continuous Latent Space Introduces a new paradigm for LLM reasoning called…

0

303

0