Alexis Ross

@alexisjross

Followers: 2,870 | Following: 922 | Media: 27 | Statuses: 394

phd-ing @MIT_CSAIL, working on machine teaching | formerly @allen_ai, @harvard ’20

Seattle, WA

Joined June 2018

Pinned Tweet

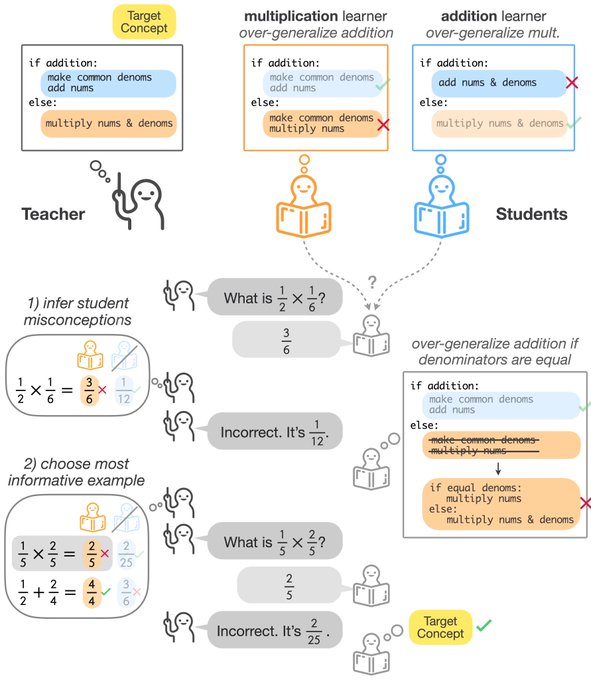

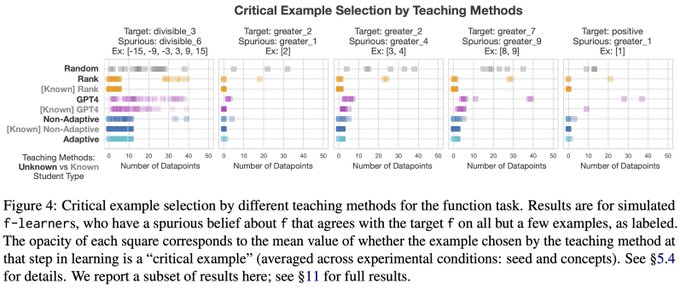

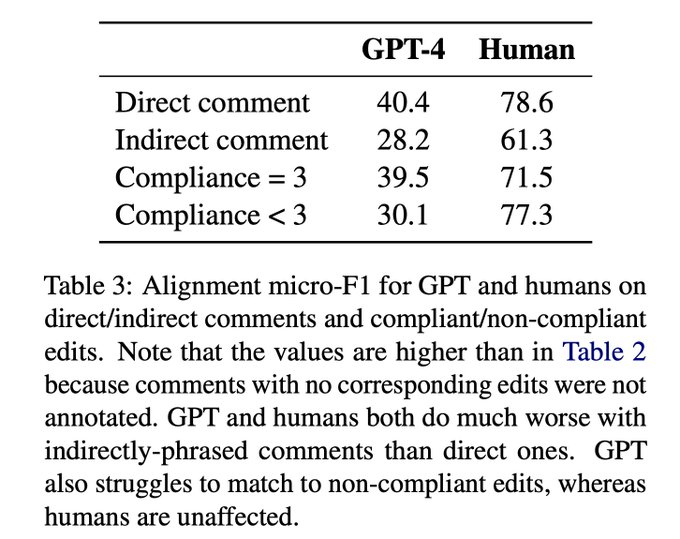

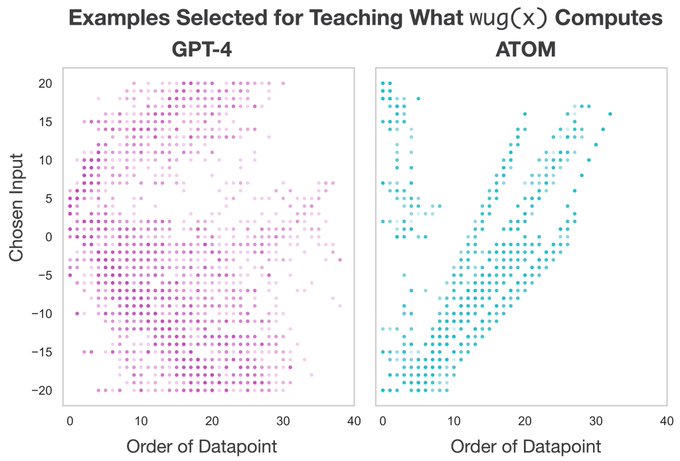

Good teachers *adapt* to student beliefs & misconceptions: Can LLM teachers?

In new work w/ @jacobandreas, we introduce 1) the AdapT 👩‍🏫 (Adaptive Teaching) evaluation framework & 2) AToM ⚛️ (Adaptive Teaching tOwards Misconceptions), a new probabilistic teaching method.

(1/n)

3

43

200

Life update: I’ll be starting my PhD at @MITEECS & @MIT_CSAIL in the fall! Super excited to work with @jacobandreas, Yoon Kim, and the rest of the rich language ecosystem at MIT ✨

46

18

520

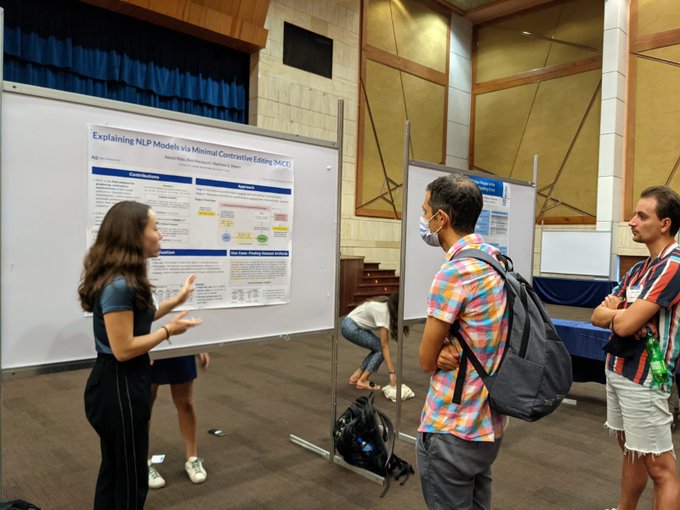

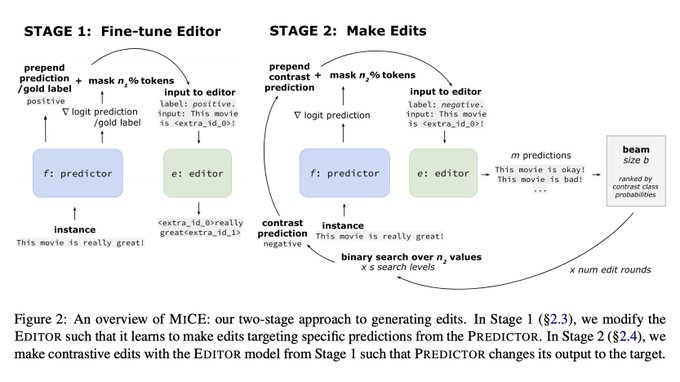

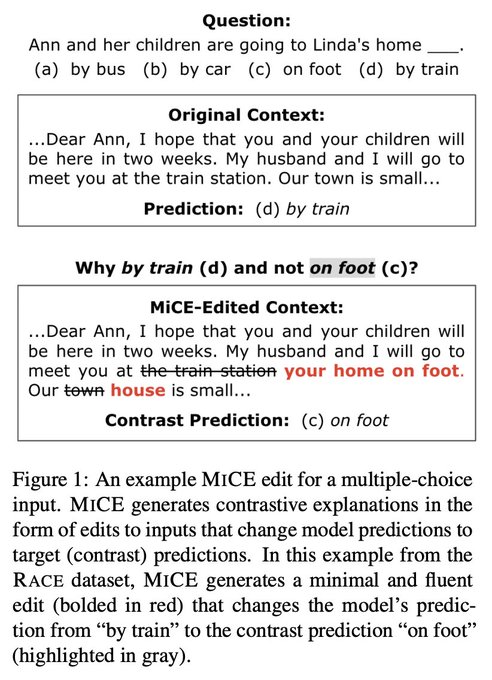

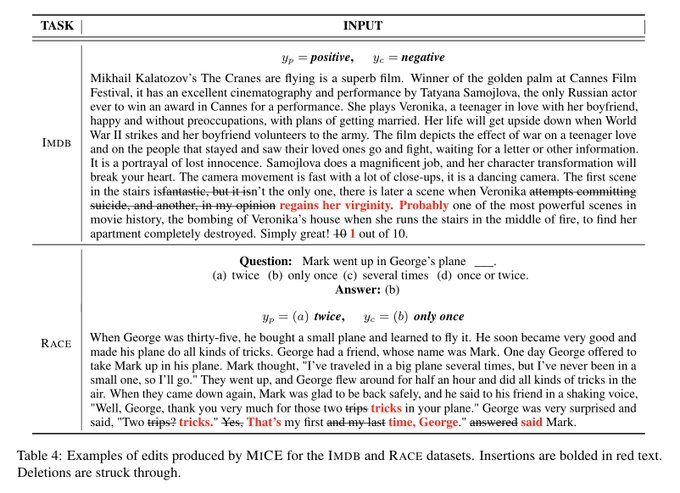

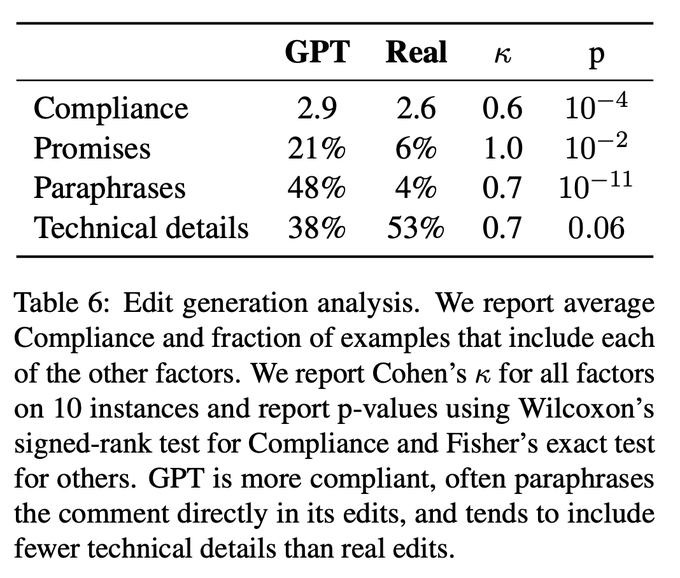

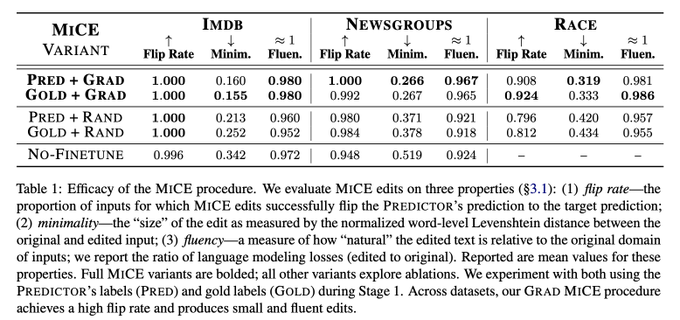

Excited to share our preprint, "Explaining NLP Models via Minimal Contrastive Editing (MiCE)" 🐭 This is joint work with @anmarasovic and @mattthemathman

Link to paper:

Thread below 👇 1/6

5

45

227

Sunset cruise to start off the PhD at @MIT_CSAIL ☀️ Grateful to @MITEECS’s GW6 for organizing!

3

8

210

Excited to have won a Hoopes prize for my Computer Science/Philosophy thesis in explainable ML! Working on this interdisciplinary project was challenging but deeply rewarding. Forever grateful to my advisors Hima Lakkaraju & Bernhard Nickel for their invaluable guidance!

7

11

120

Our upcoming #nlphighlights episodes will be a series on PhD applications. If there are any questions or topics you would like to see discussed, feel free to reply or send me a DM! We will look at these responses as we prepare our episodes 😄

11

14

120

I'm happy to share that our paper "Explaining NLP Models via Minimal Contrastive Editing (MiCE)" was accepted into Findings of ACL 2021!

Updated paper:

Code & models:

Work with @anmarasovic @mattthemathman

3

18

103

#nlphighlights 123: Robin Jia tells us about robustness in NLP: what it means for a system to be robust, how to evaluate it, why it matters, and how to build robust NLP systems. Thanks @robinomial and @pdasigi for a great discussion!

3

18

99

Excited to share that our work on in-context teaching will appear at #ACL2024! 🇹🇭

0

8

92

*⃣ Resource alert for people applying to CS PhD programs this cycle *⃣ contains >60 example statements of purpose! It's made possible by the many generous submissions from new applicants, and new ones are always welcome! 😊

1

25

89

we heard about @stanfordnlp's Alpaca and thought we should join in on the fun 🦙 @gabe_grand @belindazli @zhaofeng_wu

2

5

88

Happy to share that our paper, "Learning Models for Actionable Recourse," will appear in NeurIPS 2021! Very grateful to my collaborators/mentors @hima_lakkaraju and @obastani. Camera ready version coming soon!

5

7

85

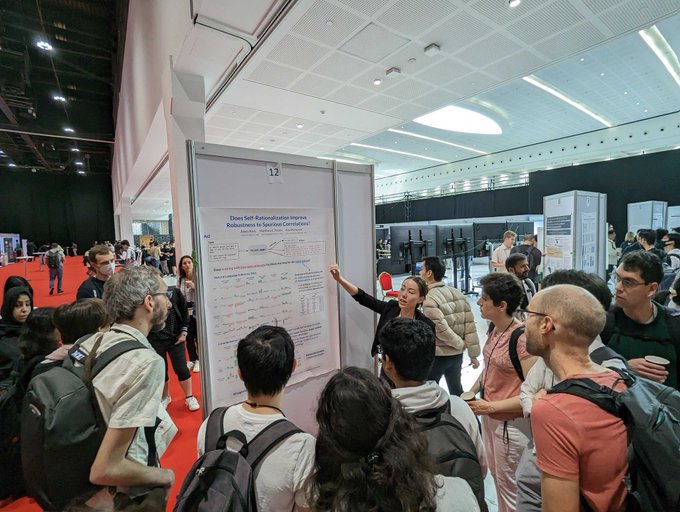

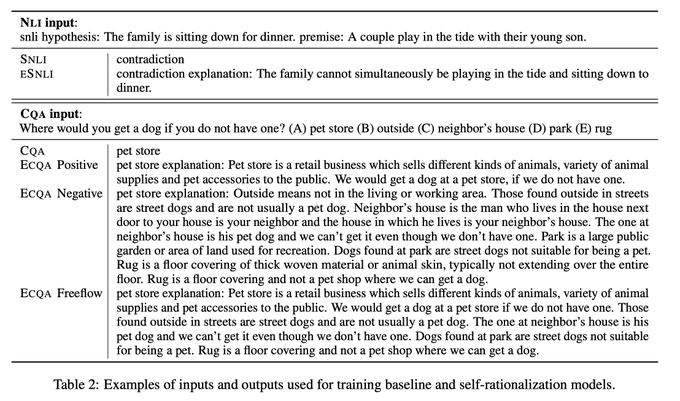

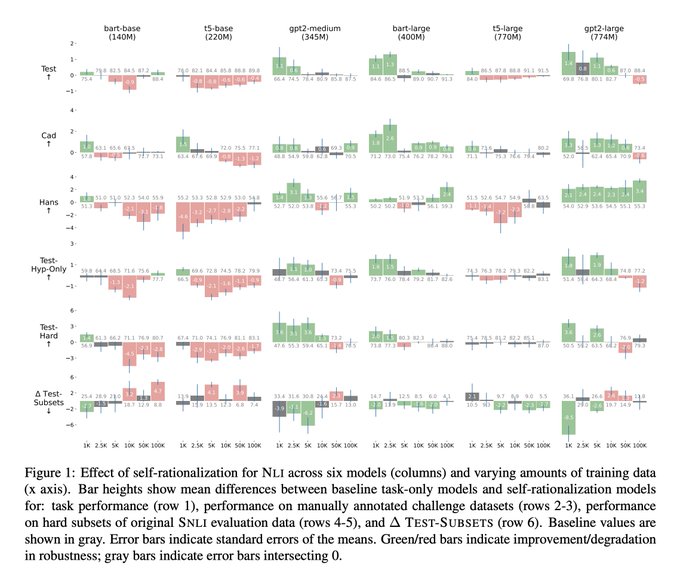

Does training models with free-text rationales facilitate learning *for the right reasons*? 🤔

We ask this question in our #EMNLP2022 paper, "Does Self-Rationalization Improve Robustness to Spurious Correlations?"

W/ @anmarasovic @mattthemathman

🧵 1/n

2

19

80

Happy to share that Tailor🪡 will appear at #ACL2022 as an oral presentation!

For details, w/ new & improved results, check out our...

- in-person talk (5/23, session 3) & poster (5/24, session 5) 🇮🇪

- updated paper 📰:

- code 👩‍💻:

New preprint alert!

*Tailor: Generating and Perturbing Text with Semantic Controls*

Title says it all: we perturb sentences in semantically controlled ways like how a tailor changes clothes 🪡.

w/ @alexisjross, @haopeng01, @mattthemathman, @nlpmattg

1/n

2

43

182

2

10

76

Feeling grateful to have attended a wonderful #EMNLP2022! Highlights include the many interesting poster sessions and a memorable desert sunset 🌅 Big thank you to everyone who stopped by our poster yesterday (and @i_beltagy for the 📸)!

1

3

72

#nlphighlights 133: The 1st episode in our series on NLP PhD apps is live! @complingy & @996roma share faculty & student perspectives on preparing application materials, including statements of purpose and recommendation letters. Co-hosted w/ @nsubramani23

2

19

71

I’ll be a mentor for MIT EECS’s Graduate Application Assistance Program this application cycle—Please consider signing up if you’re applying to PhD programs this fall!

3

8

68

#nlphighlights 134: The 2nd episode in our PhD app series is on PhDs in Europe vs the US. @barbara_plank & Gonçalo Correia share faculty & student perspectives on things to consider when choosing. We also discuss the ELLIS program. Cohosted w/ @zhaofeng_wu

3

15

64

Very grateful to have attended #EMNLP2021 in Punta Cana! It was wonderful meeting so many virtually familiar and new faces in real life and discussing all things NLP (especially on the beach!) 😊🏝

1

0

64

#nlphighlights 135: The 3rd episode in our PhD app series is live! @radamihalcea, @ashkamath20, and @sanjayssub join us to talk about interviews, visit days, & factors to consider in accepting an offer. Co-hosted with @nsubramani23

1

15

61

One of my favorite things about grad school has been getting to play chamber music again--Had a blast playing Tchaikovsky Piano Trio with @vikramsundar and @erencshin 😊

1

1

51

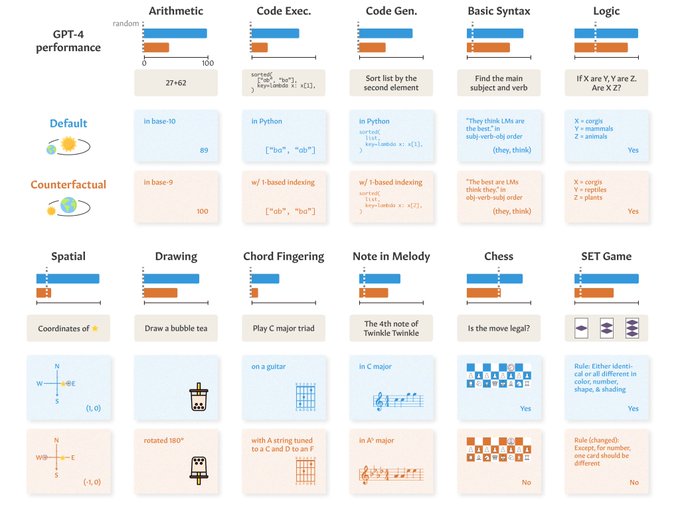

Our new preprint, led by @zhaofeng_wu, shows that traditional benchmark evals may over-estimate the generalizability of LLMs' task abilities 🚨

We find LLM performance consistently drops on counterfactual variants of tasks (ex: code exec w/ 1-based indexing)!

Details below 👇

0

6

50

The deadline for Predoctoral Young Investigator (PYI) applications for @ai2_allennlp is 2/15. Two days left to apply!

I *highly* recommend the program for anyone interested in pursuing a PhD in natural language processing.

1

6

47

Had a wonderful time at #DISI2023 over the past few weeks learning about diverse intelligences and exploring Scotland! 🏴 Grateful to be leaving with many new friends 💙 @DivIntelligence

1

1

46

#NeurIPS2021 Paper 📢: "Learning Models for Actionable Recourse" w/ @hima_lakkaraju & @obastani

We'll be presenting this work at Poster Session 1. Happening tomorrow, Tues 12/7, 8:30-10 AM (PST). Come say hi! 👋

Paper:

More info:

0

5

44

In Abu Dhabi for #emnlp2022!

Presenting a poster for our work on self-rationalization & robustness on Sunday at 11 AM:

I’d love to chat about pragmatics, pedagogy, the relationship b/w explanations & learning, or anything in between—please reach out! 🤗

1

2

40

Check out our new work led by @belindazli on keeping LLMs up-to-date via editing knowledge bases!

This was a fun collaboration (and nice excuse to stay up-to-date with celebrity gossip 😇)

0

3

38

Had a great time at #emnlp2019 presenting work with Ellie Pavlick, “How well do NLI models capture verb veridicality?” Thank you Hong Kong and EMNLP for a great first conference!

4

0

33

#nlphighlights 130: @pdasigi and I talk with @lisabeinborn about how to use cognitive signals to analyze and improve NLP models. Thank you Pradeep and Lisa for a really interesting discussion!

1

11

30

Super excited to share our work on Tailor: a *semantically-controlled, application-agnostic system for generation and perturbation* and result of a really fun collaboration! Details in thread below👇

0

4

29

Ana has been an incredible mentor to me (and so many others), and I have no doubt she is going to make an equally incredible professor! Any institution would be very lucky to have her 🙌🏼

1

1

28

Excited to share CREST, our new #ACL2023 work led by the awesome @MarcosTreviso! This was a super fun collaboration w/ Marcos, @nunonmg, & @andre_t_martins 😊 CREST combines counterfactuals & rationales to improve model robustness / interpretability--details in the thread below👇

1/7 Thrilled to announce that our paper "CREST: A Joint Framework for Rationalization and Counterfactual Text Generation" has been accepted at #ACL2023 oral! 🎉

This work is a result of a fantastic collaboration with @alexisjross, @nunonmg, and @andre_t_martins. Let's dive in!

1

6

39

0

2

27

I am deeply grateful to my mentors, friends, & family, who helped me navigate all parts of the application process. Special thank you to everyone at @allen_ai for their support over the past 2 years 💖 I also feel so lucky to have met many wonderful people through this process!

1

0

26

I spent two years as a predoctoral young investigator with @ai2_allennlp and could not have more positive things to say!! Please do apply if you want to work in an energizing and supportive environment with brilliant *and* kind people 😊

0

1

23

Go work with Ana!! 👇🏻

0

2

21

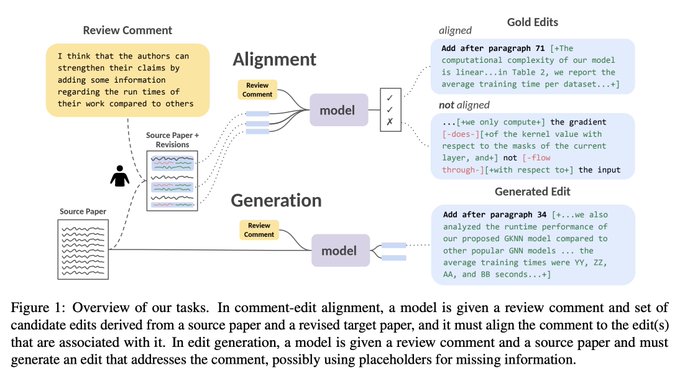

Paper:

Code & data:

Joint work with Mike D’Arcy, Erin Bransom, Bailey Kuehl, @turingmusician, @Hoper_Tom, @_DougDowney, and @semanticscholar

0

3

16

#nlphighlights 121: Alona Fyshe tells us about the connection between NLP representations and brain activity in this episode hosted with Matt Gardner. Thank you @alonamarie and @mattg for a really interesting discussion on language and the brain!

2

6

18

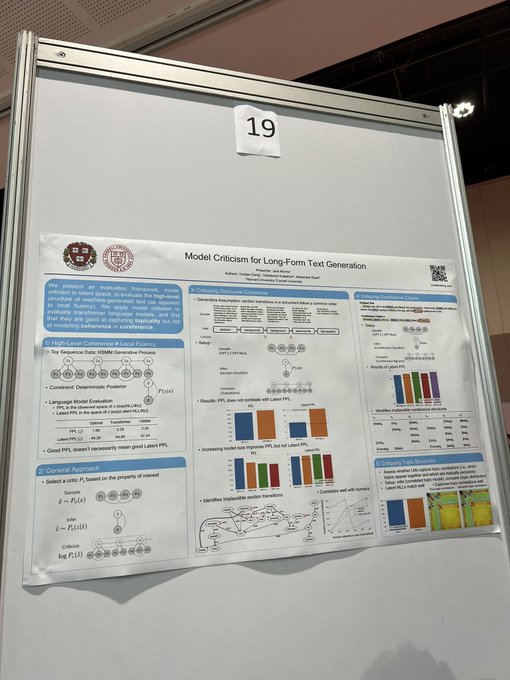

One of my favorite posters was this really cool work by @yuntiandeng @volokuleshov @srush_nlp (presented by @jxmnop) on evaluating long-form generated text in the latent space

0

2

18

Check out my labmates cool work!

0

0

11

Had a great time talking with @pdasigi and @thePetrMarek about the winning submission, Alquist 4.0, and how it can conduct coherent and engaging conversations! (Teaser: Alquist is designed to store and follow up on personal details you mention, like that you have a brother)

#nlphighlights 132: @alexisjross and I chatted with Petr Marek @thePetrMarek about the Alexa Prize Socialbot Challenge, and this year's winning submission from Petr and team from the Czech Technical University. Thanks for the informative discussion, Petr!

0

2

6

0

1

10

@emilypahn I also struggle with this! What’s helped me is reading to write high level notes about the paper’s main contributions, my takeaways/questions, and connections to what I’m working on. Writing answers to these qs as I read helps to focus my attention and know when to move on

0

0

10

Big thank you to @complingy for the idea for this series and to everyone who sent me questions they wanted to see discussed. More topics will be covered in upcoming episodes 🙂

0

0

9

Lastly, a big thank you to my advisor @jacobandreas for being so supportive and making my first PhD project such a rewarding & fun experience 😊

(n/n)

0

0

8

Check out @megha_byte's new work showing that self-talk enables agents to learn more interpretable & generalizable policies and facilitates human-AI collaboration!

#2

RL agents can reflect too!

In , @cedcolas, @dorsasadigh @jacobandreas, and I find when 🤖s periodically generate language rules describing their best behaviors, they better interact with humans, and are more interpretable + generalizable (self-talk)!

2

28

94

0

0

8

@aliciamchen @cogsci_soc @natvelali @hawkrobe @gershbrain This is really cool work 🤩 Congratulations!

2

0

5

@tiancheng_hu @996roma @complingy @nsubramani23 Thanks @tiancheng_hu and apologies for the delayed response! We weren't discussing any specifically, but there are a few other predoc/residency programs in industry that I know of--Here's a list I found (though I haven't personally looked through each one)

2

0

5

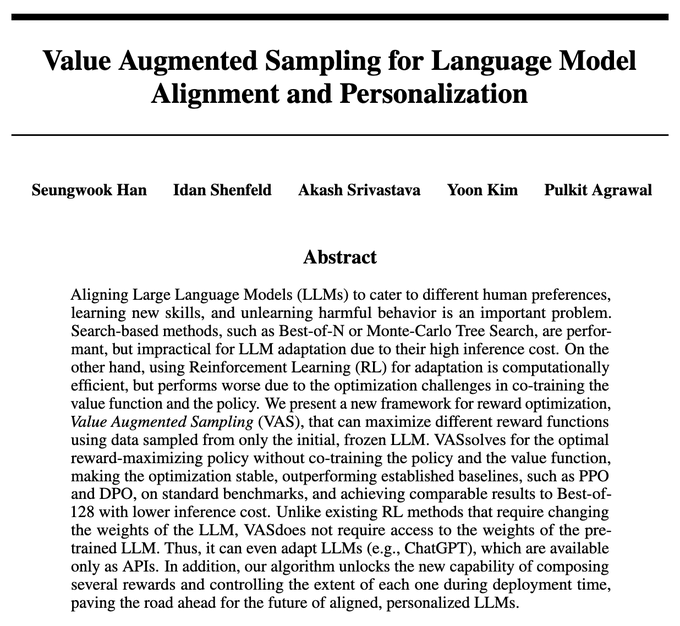

Cool new work by @seungwookh and @IdanShenfeld on aligning/personalizing models at decoding time!

0

0

4

@LakeBrenden @glmurphy39 Looks very interesting, looking forward to reading! (5) reminds me of work by @belindazli @Maxwell_Nye @jacobandreas showing that word reps encode changing entity states based on inputs. Wonder if this would also hold for facts like "Dolphins are mammals"

0

0

4

@shannonzshen and like RAG, a cheatsheet is not enough to completely rule out hallucinations at test time 😅

0

0

4

@aaronsteven You might also be interested in our work on Tailor, which guides generation with control codes that derive from PropBank representations

1

0

1

@jwanglvy Great q! You're right; AToM is a probabilistic method with two main components: a set of Bayesian student models & a set of possible examples to choose from. It tracks student predictions and chooses both a student model & an optimal teaching example at each step. Hope that clarifies!

1

0

0
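The loop described in the AToM reply above (track student predictions, pick a student model, pick a teaching example) can be sketched roughly as follows. This is a toy illustration and not the paper's implementation: the threshold concepts, the noise rate `EPS`, and the names `StudentModel`, `Teacher`, and `make_demo_teacher` are all assumptions made up for this example.

```python
from dataclasses import dataclass

EPS = 0.05  # assumed noise rate; keeps every hypothesis at nonzero probability


def label(threshold: int, x: int) -> bool:
    """Toy concept (an assumption): x is positive iff it reaches the threshold."""
    return x >= threshold


def normalize(weights: dict) -> dict:
    total = sum(weights.values())
    return {key: value / total for key, value in weights.items()}


@dataclass
class StudentModel:
    """Hypothetical Bayesian student: a belief distribution over thresholds."""
    belief: dict

    def prob_says_true(self, x: int) -> float:
        # Probability mass on thresholds that would label x as positive.
        return sum(p for t, p in self.belief.items() if label(t, x))

    def after_seeing(self, x: int, y: bool) -> "StudentModel":
        # Bayesian update of the student's belief on one labeled example.
        post = {t: p * ((1 - EPS) if label(t, x) == y else EPS)
                for t, p in self.belief.items()}
        return StudentModel(normalize(post))


class Teacher:
    def __init__(self, students: dict, target: int, pool: list):
        self.students = dict(students)  # candidate student models
        self.target = target            # concept the teacher wants to convey
        self.pool = list(pool)          # possible teaching examples
        self.weights = {name: 1 / len(students) for name in students}

    def observe(self, x: int, guess: bool) -> None:
        """Track a student prediction by reweighting the candidate models."""
        scored = {}
        for name, student in self.students.items():
            p = student.prob_says_true(x)
            likelihood = EPS + (1 - 2 * EPS) * (p if guess else 1 - p)
            scored[name] = self.weights[name] * likelihood
        self.weights = normalize(scored)

    def choose(self) -> tuple:
        """Pick the most probable student model and the best example for it."""
        name = max(self.weights, key=self.weights.get)
        student = self.students[name]
        best_x = max(
            self.pool,
            key=lambda x: student.after_seeing(
                x, label(self.target, x)).belief[self.target],
        )
        return name, best_x

    def teach(self, x: int) -> bool:
        """Show example x with its true label and update every candidate."""
        y = label(self.target, x)
        self.students = {n: s.after_seeing(x, y)
                         for n, s in self.students.items()}
        return y


def make_demo_teacher() -> Teacher:
    students = {
        "guesses_low": StudentModel({1: 0.45, 2: 0.45, 3: 0.05, 4: 0.05}),
        "guesses_high": StudentModel({1: 0.05, 2: 0.05, 3: 0.45, 4: 0.45}),
    }
    return Teacher(students, target=3, pool=[0, 1, 2, 3, 4])


if __name__ == "__main__":
    teacher = make_demo_teacher()
    teacher.observe(2, True)  # the student predicted "positive" for x = 2
    print(teacher.choose())
```

In this sketch the chosen example depends on which student model currently explains the observed predictions best, which is the "adaptive" part of the description; how the actual method scores examples and students may differ.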