Yikang Shen

@Yikang_Shen

Followers: 1,782

Following: 295

Media: 15

Statuses: 177

Research staff member at MIT-IBM Watson Lab. PhD from Mila.

Boston, MA

Joined September 2012

It often surprises people when I explain that sparse MoE is actually slower than dense models in practice, even though it requires less computation. There are two reasons: 1) the lack of efficient implementations for MoE model training and inference; 2) MoE models require more

3

25

143

Thanks for posting our work!

(1/5) After running thousands of experiments with the WSD learning rate scheduler and μTransfer, we found that the optimal learning rate strongly correlates with the batch size and the number of tokens.

4

27

134
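For readers unfamiliar with the WSD (warmup-stable-decay) scheduler mentioned above, here is a minimal sketch of its general shape. The linear warmup, the linear decay, and every parameter name are assumptions made for illustration; the thread does not specify the exact variant used in the experiments.

```python
def wsd_lr(step, total_steps, peak_lr, warmup_steps=10, decay_steps=100, min_lr=0.0):
    """Warmup-stable-decay learning rate for a given step (illustrative sketch).

    Assumed shape: linear warmup to peak_lr, a constant plateau, then a
    linear decay to min_lr over the final decay_steps steps.
    """
    decay_start = total_steps - decay_steps
    if step < warmup_steps:
        # Warmup phase: ramp linearly up to the peak learning rate.
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        # Stable phase: hold the peak learning rate.
        return peak_lr
    # Decay phase: interpolate linearly from peak_lr down to min_lr.
    progress = min(1.0, (step - decay_start) / decay_steps)
    return peak_lr + (min_lr - peak_lr) * progress


if __name__ == "__main__":
    for s in (0, 9, 500, 950, 1000):
        print(s, wsd_lr(s, total_steps=1000, peak_lr=1e-3))
```

The thread's finding concerns how to choose `peak_lr` as batch size and token count change; this sketch only shows the schedule shape, not that relationship.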

It’s nice to see people start working on MoE for attention mechanisms! A simple fact is that about 1/3 of transformer parameters and more than 1/3 of computation are in the attention layers, so you need something like MoE to scale it up or make it more efficient. Btw, our work,

1

13

125
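The "about 1/3 of parameters, more than 1/3 of computation" figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes a standard transformer block: multi-head attention with four d×d projections (Q, K, V, output), a non-gated MLP with a 4× expansion, and no embeddings, biases, or layer norms counted. These assumptions and the function names are illustrative and not taken from the post.

```python
def attention_param_fraction(d_model, ffn_mult=4):
    """Fraction of per-block parameters that sit in the attention layer."""
    attn = 4 * d_model * d_model             # Q, K, V and output projections
    mlp = 2 * ffn_mult * d_model * d_model   # up and down projections
    return attn / (attn + mlp)


def attention_flop_fraction(d_model, seq_len, ffn_mult=4):
    """Fraction of per-token forward FLOPs spent in attention.

    Counts 2 FLOPs per multiply-add and ignores causal masking, softmax,
    and normalization costs.
    """
    attn_proj = 2 * 4 * d_model * d_model    # the four projections
    attn_scores = 4 * seq_len * d_model      # QK^T plus attention-weighted sum of V
    mlp = 2 * 2 * ffn_mult * d_model * d_model
    attn = attn_proj + attn_scores
    return attn / (attn + mlp)


if __name__ == "__main__":
    print(attention_param_fraction(1024))
    print(attention_flop_fraction(1024, seq_len=2048))
```

Under these assumptions the parameter share is exactly 4/12, and the compute share exceeds 1/3 because the score computation adds a term that grows with sequence length.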

Training LLMs can be much cheaper than your new top-spec Cybertruck!

Our new JetMoE project shows that just 0.1 million USD is sufficient for training LLaMA2-level LLMs.

Thanks to its more aggressive MoE architecture and 2-phase data mixture strategy, JetMoE-8B could

3

12

76

Haha, now we start to talk about who is earlier. Please remember that ModuleFormer also comes earlier with a more sophisticated architecture. It has mixture of experts for both Attention and MLP!

1

5

41

In case you are interested in using the Mixture of Attention heads (MoA) in your next model, here is my implementation of MoA using the Megablock library:

0

6

40

When you consider using MoE in your next LLM, you could ask yourself one question: Do you want a brutally large model that you can't train as a dense model, or do you just want something that is efficient for inference? If you choose the second goal, you may want to read our new

0

6

37

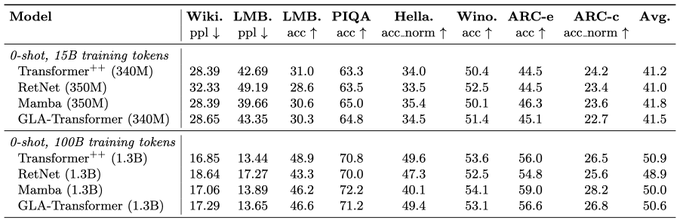

These are our experimental models. We will release something more interesting very soon!

3

4

34

Now accepted as a Spotlight at #NeurIPS2023!

See you in New Orleans!

0

3

27

If you are interested in joining our team as a research intern, please send your resume and a brief statement of interest to yikang.shen@ibm.com.

We look forward to reviewing your application! (4/4)

2

0

25

Yes, our goal is to create really useful code LLMs for real production use cases, not for just getting some kind of sota on HumanEval (but we still get it 😉).

Interested in LLM-based Code Translation 🧐?

Check our CodeLingua leaderboard (). We have updated the leaderboard with newly released Granite code LLMs from

@IBMResearch

. Granite models outperform Claude-3 and GPT-4 in C -> C++ translation 🔥.

3

13

51

0

7

22

@teortaxesTex

@tanshawn

@samiraabnar

I am super excited to have a race like this. We will do our best 😃

1

0

19

@arankomatsuzaki

I mostly agree with this post, except the part claiming that ModuleFormer leads to no gain, or to performance degradation and instability. Could you point me to these results? Our version of MoA made two efforts to solve the stability issue: 1) use dropless MoE, 2) share the kv projection

2

2

15

This is a joint work with

@SonglinYang4

@bailin_28

@rpanda89

and

@yoonrkim

. We (the authors) are also at NeurIPS, and happy to chat more on this! (5/5)

1

0

14

Very important evaluation and insight. The problem with some previous RNN models is that their hidden states are not large or sophisticated enough to memorize the context. Mamba and our GLA tackle this problem by using a large hidden state and an input-dependent gating mechanism.

0

1

12

On my way to #NeurIPS2023. Let me know if you want to chat about MoE, alignment, RNNs (we will release a new architecture soon!), or anything else about LLMs!

3

0

13

@arankomatsuzaki

Ya, I am familiar with the MoE attention results in the switch transformer paper’s appendix. I think the instability is mostly due to too many possible combinations of query and key experts (value and output experts as well). The results motivate us to share kv projection in MoA.

0

3

11

Language models generate self-contradictory responses across different samples. We leverage game theory to identify the most likely response!

0

5

9

Dolomite engine has all the LLM technologies that we have developed so far: ScatterMoE, Power Learning Rate Scheduler, Efficient DeltaNet, and more to come! You can easily use it for your pretraining and sft!

0

1

10

@far__el

Sorry, the link is actually not accessible. We will set up the GitHub repo very soon.

0

0

8

Learn more about JetMoE and access the model:

Research:

GitHub:

Huggingface:

Demo:

Joint work with

@Zhen4good

@tianle_cai

@qinzytech

@myshell_ai

@LeptonAI

0

0

8

This new principle-following reward model is worth millions of dollars! Llama2-Chat collected 1 million human-annotated examples to train its reward model, and we outperform it with no human annotations.

0

1

7

@fouriergalois

Moreover, in practice gateloop used the recurrent form, as opposed to the parallel form, in the actual implementation via parallel scan (potentially due to the numerical issue discussed in section 3), while we rely on our chunk-wise block-parallel form.

1

0

4

This is a joint work with

@mjstallone

,

@MishraMish98

,

@tanshawn

, Gaoyuan Zhang, Aditya Prasad, Adriana Meza Soria,

@neurobongo

,

@rpanda89

1

0

6

Didn’t expect Granite Code model to outperform GPT-4-based solutions. But, wow!

More public leaderboard results 🔥

IBM takes first place on the large-scale text-to-SQL BIRD-SQL leaderboard with ExSL + Granite-20b-Code, beating out a host of GPT-4-based solutions. Huge congrats to the global

@IBMResearch

team that achieved this result.

3

3

14

1

0

6

An excellent explanation of the inference efficiency of MoE models

@MistralAI

model is hot: with mixture-of-experts, like GPT-4!

It promises faster speed and lower cost than model of the same size and quality

Fascinatingly, the speed-up is unevenly distributed: running on a laptop or the biggest GPU server benefit the most. Here’s why 🧵 1/7

7

87

465

0

2

5

@agihippo

@m__dehghani

@tanshawn

Haha, I think that’s because we are gpu-poor and using 2018’s hardware (v100) to do the experiments.

1

0

5

@fouriergalois

Yes, the gateloop is a very interesting related work. The main difference is that our parallel form is more general since gates can be applied in both K and V dimensions, while only the gate for the K dimension is applied in gateloop.

1

0

5

@XueFz

My observation is that MoE can save up to 75% of the computation cost. But saving more computation requires more experts. People have recently become more conservative about the number of experts, so that we don’t suffer too much from the extra cost of having an insane number of parameters.

1

0

4
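One way to read the trade-off above is through a simplified model in which each token is routed to `top_k` of `num_experts` equally sized experts. The function below is a rough illustration under that assumption. It counts expert-layer FLOPs only, ignores router, attention, and communication costs, and compares against a dense layer with the same total expert parameters. The configurations shown are hypothetical and are not taken from the thread.

```python
def moe_compute_saving(num_experts, top_k):
    """Fraction of expert-layer FLOPs saved versus a dense layer of equal size.

    Simplified model: all experts have the same size and every token
    activates exactly top_k of them.
    """
    if not 1 <= top_k <= num_experts:
        raise ValueError("top_k must be between 1 and num_experts")
    return 1.0 - top_k / num_experts


if __name__ == "__main__":
    # Hypothetical configurations: a larger saving needs more experts per active expert.
    for experts, k in ((4, 2), (8, 2), (16, 2)):
        print(experts, k, moe_compute_saving(experts, k))
```

In this model, a larger saving at a fixed `top_k` requires more experts, and therefore more total parameters to store and serve.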

@agihippo

I can’t agree more. Even the possibility that some jobs might crash and leave some nodes sitting idle makes me want to stay awake.

1

1

4

@srush_nlp

I think the iteration of gpt-x will eventually look like human-in-the-loop weakly supervised training. Maybe not a bad thing.

1

0

3

@cloneofsimo

My observation is that this happens along with learning rate decreases. If the learning rate is constant the gradient norm tends to be more stable.

1

0

3

@vtabbott_

Hi Vincent, your robust diagram looks very nice! We are very interested in having SUT diagrammed. It's difficult to explain the architecture (like the Mixture of Attention heads) to those who are not familiar with the context.

1

0

3

Fantastic work by Junyan and

@yining_hong

! The communicative decoding idea enables VLM to do compositional reasoning across different modalities.

0

0

3

@koustuvsinha

@jpineau1

@MetaAI

@mcgillu

@Mila_Quebec

Congrats Dr. Sinha! The thesis is very interesting.

0

0

2

@ElectricBoo4888

@SonglinYang4

@bailin_28

@rpanda89

@yoonrkim

GLA is simple and clean in terms of architecture design, which could potentially make it more robust across different scenarios. It also has a much larger hidden state to store more context information.

0

0

2

@MaziyarPanahi

@erhartford

@_philschmid

@qinzytech

@Zhen4good

@tianle_cai

Ya, our Megablocks fork is here:

3

0

2

@srush_nlp

I think human users of gpt models will probably filter and correct some obvious errors before putting model-generated content on the web. Future models will then use the revised data as training corpus. Thus we have a cycle of model generation, human revision, and model training.

1

0

2

@EseralLabs

Thanks. The current model is trained with far fewer tokens and much less compute, but we are training a larger model to get close to llama-level performance.

0

0

2

@teortaxesTex

It will take some time and experience to put all the pieces together in the right way.

0

0

2

@SriIpsit

Hi Krishna, thanks for your interest! I have received many emails. I will go through them in the next few days.

0

0

1

@ShuoooZ

@mjstallone

@MishraMish98

@tanshawn

@neurobongo

@rpanda89

Several papers discuss how to predict the critical batch size, including

0

0

1

@dallellama_

Sounds great! I will be happy to work together on improving its inference efficiency.

0

0

1

@srush_nlp

Maybe it's because doing CoT and creating a chatbot with BERT is not straightforward?

1

0

1

@XueFz

Totally agree! I also like the OpenMoE. The open sourced training pipeline is a great contribution to the community.

0

0

1

@KoroSao_

The internship will initially be 3 months, but it can be extended depending on progress.

0

0

1

@bonadossou

Ohh, I didn't know that the forum is actually not open... We will set up the GitHub repo very soon.

0

0

1