Simo Ryu

@cloneofsimo

Followers

5,153

Following

476

Media

488

Statuses

1,649

I like cats, math and codes cloneofsimo @gmail .com

Seoul, Korea

Joined May 2022


Yes. Yes!!!! Everyone read this material three times!

7

92

644

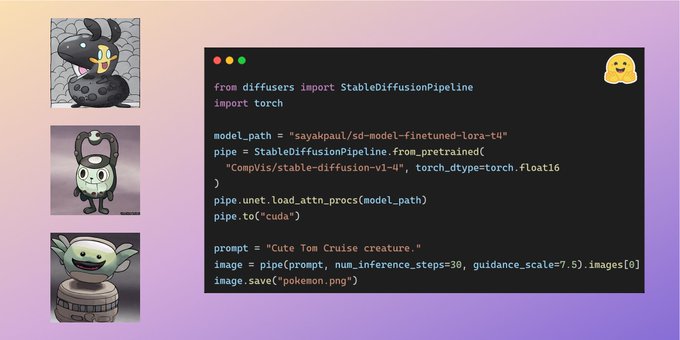

So a year ago I introduced LoRA (which was at the time little known even to the LLM community, it was well before LLAMA / Peft) to the image generation space.

Little did I realize that a year later thousands of deepfake waifu LoRAs would be flooding the web... 🫥

19

29

347
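For readers unfamiliar with the technique named above, here is a minimal sketch of the usual LoRA formulation: a frozen weight W is adapted by a trainable low-rank product, W + (alpha / r) · B · A. The helper names, matrix sizes, and initialisation values are illustrative assumptions, not taken from the author's code.

```python
import random

def matmul(a, b):
    """Plain-Python matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_merge(W, A, B, alpha):
    """Return W + (alpha / r) * B @ A, where r is the number of rows of A."""
    r = len(A)
    scale = alpha / r
    delta = matmul(B, A)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

def num_params(m):
    """Number of entries in a list-of-lists matrix."""
    return len(m) * len(m[0])

random.seed(0)
d_out, d_in, r = 64, 64, 4  # hypothetical layer size and rank
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable
B = [[0.0] * r for _ in range(d_out)]  # trainable, zero-initialised

merged = lora_merge(W, A, B, alpha=4.0)
full, low_rank = num_params(W), num_params(A) + num_params(B)
print(full, low_rank)
```

With these assumed sizes the adapter trains 512 values instead of 4096, and because B starts at zero the merged weight equals W before any training step.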

To fellow solo-working-outsider bros... Don't let comments like this discourage you.

There is *a lot* to do. We *all* need your help.

Ask for grants if you don't have compute (h100s cost like < 3$ / hour anyways, prove in small scale and reach out), like @FAL, or Google TPU

@tilmanbayer

@faustsoli

einstein was able to advance the field just with a pen and paper even when he wasnt able to verify his theories through experiment at the time. you cant do that in ai, at all

3

0

9

6

32

298

So you've had your fun with @karpathy's mingpt. Now it's time to scale: introducing min-max-gpt, a really small codebase that scales with the help of @MSFTDeepSpeed. No huggingface accelerate, transformer. Just deepspeed + torch: maximum hackability

7

35

248

Lavenderflow-pretrained-256x256-6.8B Hybrid MMDiT just reached 0.597 on GenEval! 🥳

It took me and @isidentical less than 7 weeks of part-time effort + 4k h100 hours to get to SDXL-level (and this is just the pretrained model). Are the two of us worth a 1B valuation?

7

13

202

Uhh excuse me wtf LLAMA3 ranking 1st????? in lmsys arena in English? Kudos to team @AIatMeta, based AF 👏👏 for open sourcing a literal GPT-4 level model, (almost) no strings attached 🥳

6

19

177

Fun fact: AuraFlow was < 800 LoC and < one month of training. Code is just open. It's just deepspeed and MDS.

You don't need bloated codebases to make a good model!

4

15

172

Final model 512x512 aesthetic training 😍

btw it's been an absolutely wild run. I've learned SO DAMN much from this process. Not a lot of people get to make a foundation model from scratch with such freedom. I'm so glad @burkaygur from @FAL offered me such a collaboration!!

7

8

158

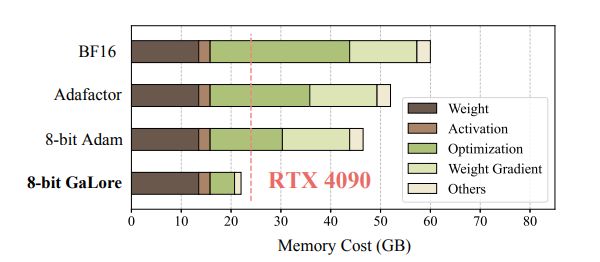

But to be honest, there's been tons of low-rank, quantized gradient-approximation for efficient allgathers that the paper didn't mention for some reason. Like, not citing PowerSGD?? this? …Like man totally not cool 🙄

fig from psgd

3

25

148
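As a rough illustration of the low-rank gradient approximation mentioned above, the sketch below runs one PowerSGD-style power-iteration step on a single process. It assumes the standard rank-r scheme (project, orthonormalise, project back). The all-reduce between workers and the error-feedback buffer are omitted, and all function names and sizes are hypothetical.

```python
import random

def matmul(a, b):
    """Plain-Python matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def orthonormalize(P):
    """Gram-Schmidt on the columns of P."""
    basis = []
    for v in transpose(P):
        for u in basis:
            dot = sum(a * b for a, b in zip(u, v))
            v = [a - dot * b for a, b in zip(v, u)]
        norm = sum(a * a for a in v) ** 0.5
        basis.append([a / norm for a in v])
    return transpose(basis)

def compress(M, Q):
    """One power-iteration step: returns P (n x r) and the updated Q (m x r)."""
    P = orthonormalize(matmul(M, Q))
    Q_new = matmul(transpose(M), P)
    return P, Q_new

def decompress(P, Q):
    """Rebuild the rank-r approximation P @ Q^T."""
    return matmul(P, transpose(Q))

random.seed(1)
n, m, r = 8, 6, 2  # hypothetical gradient shape and rank
U = [[random.gauss(0, 1) for _ in range(r)] for _ in range(n)]
V = [[random.gauss(0, 1) for _ in range(r)] for _ in range(m)]
M = matmul(U, transpose(V))  # a "gradient" that is exactly rank r
Q0 = [[random.gauss(0, 1) for _ in range(r)] for _ in range(m)]

P, Q = compress(M, Q0)
approx = decompress(P, Q)
err = max(abs(a - b) for ra, rb in zip(M, approx) for a, b in zip(ra, rb))
sent, dense = r * (n + m), n * m
```

Here only the two thin factors (28 numbers) would need to be communicated instead of the dense 48-entry matrix. The reconstruction is near-exact only because the toy matrix has rank r; real gradients are approximated with some error.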

Fully fine-tuning SDXL on OW Kiriko images. This took about 10 min. Can you believe this is a fine-tuned Base model? BASE???? @StabilityAI is simply incredible.

9

14

118

Friendly reminder that this is a truly open source t2i model!

Every line of code to reproduce this model has been open sourced from the very beginning!

(But it requires a lot of vram to run this code. You need substantial modification to save a lot

2

9

97

This is the "real" stable diffusion moment for LLMs. Goodbye llama.

📢 Introducing MPT: a new family of open-source commercially usable LLMs from @MosaicML. Trained on 1T tokens of text+code, MPT models match and - in many ways - surpass LLaMa-7B. This release includes 4 models: MPT-Base, Instruct, Chat, & StoryWriter (🧵)

22

215

1K

2

4

96

First looks at training a 0.9B IN1k model: 67k steps in, I'm already getting pretty decent quality images!! minRF is damn scalable with the help of @MSFTDeepSpeed!

👉 [rectified flow, muP, SDXL vae, MMDiT, cfg = 7.0!]

4

5

93

btw Qwen2 has an arena-hard score of 48 (imo the toughest, most relevant benchmark out there), which puts it right beside gemini, gpt4, and claude.

...except that it's truly open (apache2.0), 128k, multilingual, and 70B!! What a day!

4

8

84

Lucky enough to collaborate with @huggingface's diffusers team (more like watching them implement 🤣 I wrote no code) and... huge updates! Now LoRA is officially integrated with diffusers! There are major differences from my implementation, and it's very simple to use!

3

15

79

Btw, this was done on the int8 quantized dataset I shared a couple of weeks ago, which is 26x smaller than the original dataset!!! Imo clever dataset quantization has a lot to offer.

5

2

76

golden apple next to a bronze orange, next to silver grapes

This is quite challenging indeed.. Still slightly better than non-commercial open-weight model out there :)

@cloneofsimo

I've had to do this for specific loras I've trained, and even then you can run into data balance issues. Painful if oranges are usually orange.

BTW, I tried to prompt 'golden apple next to a bronze orange, next to silver grapes' in SD3 Medium and it can only do 1 metal fruit.

0

0

0

3

4

76

I wouldn't have come up with using LoRA for dreambooth if I had a beefy A100 GPU to play around with 😂 Now even the "GPU-rich" use LoRA to fine-tune diffusion models.

1

9

72