Andrew Carr (e/🤸)

@andrew_n_carr

Followers: 16,328

Following: 3,217

Media: 917

Statuses: 6,058

science @getcartwheel AI writer @tldrnewsletter advisor @arcade_ai Past - Codegen @OpenAI, Brain @GoogleAI, world ranked Tetris player

RuntimeError: shape is invalid

Joined July 2015

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

Biden

• 2697337 Tweets

Biden

• 2697337 Tweets

Trump

• 2114301 Tweets

JIMIN JIMIN

• 1839290 Tweets

ROCKSTAR OUT NOW

• 1339048 Tweets

America

• 961081 Tweets

#SmeraldoGardenMarchingBand

• 899434 Tweets

Democrats

• 391388 Tweets

#Jimin_MUSE

• 310478 Tweets

Megan

• 158512 Tweets

Dems

• 133375 Tweets

Newsom

• 104875 Tweets

HYBE Stop Stealing The8

• 99439 Tweets

Kamala

• 91509 Tweets

Medicare

• 54883 Tweets

バイデン

• 45013 Tweets

ホロライブ

• 33586 Tweets

#DelhiAirport

• 27480 Tweets

土砂降り

• 27287 Tweets

約7年間

• 25846 Tweets

#يوم_الجمعه

• 25691 Tweets

Hayırlı Cumalar

• 24222 Tweets

Michelle Obama

• 22329 Tweets

ルックバック

• 22208 Tweets

Aちゃん

• 19253 Tweets

Arequipa

• 16953 Tweets

デンリュウ

• 16891 Tweets

Kerennnn

• 16870 Tweets

MemastikanNKRI MajuSEJAHTERA

• 15942 Tweets

騎乗停止

• 12805 Tweets

えーちゃん

• 12671 Tweets

Happy Birthday Elon

• 12376 Tweets

池添と富田

• 12197 Tweets

Otaku Hot Girl

• 11782 Tweets

人身事故

• 11748 Tweets

Tariq

• 10360 Tweets

Last Seen Profiles

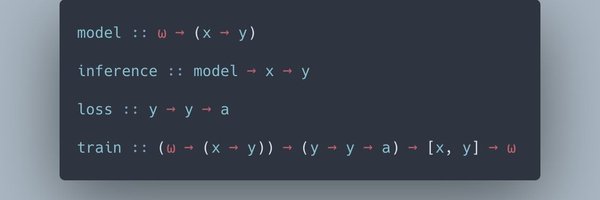

Really cool work out of @Microsoft called hummingbird! You can convert traditional #ML models to #Tensor #computations to take advantage of #hardware acceleration like GPUs and TPUs.

Here they convert a random forest model to @PyTorch

8

234

933

I have never been more stressed than when I worked at OpenAI.

ChatGPT has an ambitious roadmap and is bottlenecked by engineering. pretty cool stuff is in the pipeline!

want to be stressed, watch some GPUs melt, and have a fun time? good at doing impossible things?

send evidence of exceptional ability to chatgpt-eng@openai.com

298

535

7K

14

28

788

✨Life Update✨

I'm joining the research team @OpenAI

I'll be joining to work on the future of ML, programming languages, and more.

To say I'm excited would be an understatement.

30

11

615

I had my yearly rejection from @DeepMind today :)

It was better this year because I actually got an interview (and passed!).

Unfortunately, headcount is way down, but I'll try again next year!

17

7

616

This was my #1 learning from my time at OpenAI. If someone else can't build on top of your work due to complexity - it's likely just a hack and not a fundamental step forward

7

34

338

@n3wb_3 I've been doing it for years and had no idea it wasn't clickable 😫 that's my dumb mistake.

Thanks for posting the link for me

1

1

313

I often say that code based AIs are where I'm extremely optimistic. Interpreters, data analysis, and copilots.

But this may be the coolest application that actually works today.

Generate a full wiki based on your codebase. No more outdated docs, stale comments, or knowledge

4

24

259

The new multimodal model from @AdeptAILabs is pretty neat!

Most of the AI models we work with are pure language models. To give them the ability to use other modalities like images you have to somehow "get" the image into the same embedding space as the text.

Lots of models

9

44

263

My PhD advisor just got like 80 A100s for his lab alone

10

11

230

I know everyone is excited about Mixtral and the new Hyena models - but @ContextualAI just dropped a pile of cool new models and a new alignment framework

4

29

206