Dmytro Dzhulgakov

@dzhulgakov

Followers: 2,843 · Following: 609 · Media: 42 · Statuses: 238

Co-founder and CTO @FireworksAI_HQ. PyTorch core maintainer. Previously FB Ads. Ex-Pro Competitive Programmer

San Francisco, CA

Joined April 2013


GIL in Python will be no more. Huge win for the AI ecosystem. Congrats to @colesbury: it took 4+ years of amazing engineering and advocacy.

Many parts of @PyTorch could become simpler: DataLoader, multi-GPU support (DDP), Python deployment (torch::deploy), …

Here’s why. 🧵

No More GIL!

The Python team has officially accepted the proposal.

Congrats @colesbury on his multi-year brilliant effort to remove the GIL, and a heartfelt thanks to the Python Steering Council and Core team for a thoughtful plan to make this a reality.


Run @MistralAI MoE 8x7B locally with a hacked-up official Llama repo!

First cut, not optimized, requires two 80GB cards.

The optimized version is coming soon to @thefireworksai. Stay tuned!


@MistralAI model is hot: with mixture-of-experts, like GPT-4!

It promises faster speed and lower cost than a model of the same size and quality.

Fascinatingly, the speed-up is unevenly distributed: running on a laptop or on the biggest GPU server benefits the most. Here’s why 🧵 1/7


This you? We ran your showcase example 3 times on the Together playground, and it infinitely looped or answered incorrectly every time. Curious how that slipped through all 5 steps of your quality testing 🤔

Other examples from your blog fail to reproduce too. 🧵


We’ve been cooking!

Up to 300 tokens/s for Mixtral (and still fast with long prompts!)

Reduced pricing, simplified billing, dedicated deployments and more.

Enjoy!


Highlights of @PyTorch in 2021. Huge thanks to the community for making it awesome! Onwards to 2022!


We’re pushing Mixtral performance to the extreme with fp8, a fully custom attention kernel and MoE-specific tricks (sometimes 3 experts are better than 2!).

Getting a sweet 2x speed-up & throughput increase at virtually the same accuracy compared to fp16, and 4x+ improvements over vLLM.


I’m all for open benchmarks, but comparing **public** LLM endpoints just doesn’t work unless there’s a confirmed huge user base like OpenAI’s. In fact, performance may even be anti-correlated with popularity😉 Here’s why 🧵


Really impressive model and infra co-design for efficiency from @character_ai (congrats @NoamShazeer @myleott and the team). We've seen similar great wins from some of them at @FireworksAI_HQ:

1) Many tricks to shrink KV cache: MQA (instead of even GQA), share KV across layers,

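To put numbers on the KV-cache savings, here is a back-of-the-envelope sketch. The formula (keys plus values, for every layer, KV head and position) is the standard one; the Llama-2-70B-like shape (80 layers, 64 query heads of size 128), the 4k context and fp16 storage are illustrative assumptions, not figures from the thread.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch=1, bytes_per_elem=2):
    """Bytes needed to cache keys and values for one batch of sequences."""
    # Factor 2: one tensor for keys and one for values, per layer and per KV head.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


# Illustrative shape (assumption): 80 layers, 64 query heads of dim 128, fp16, 4k tokens.
LAYERS, Q_HEADS, HEAD_DIM, SEQ_LEN = 80, 64, 128, 4096

for name, kv_heads in [("MHA", Q_HEADS), ("GQA, 8 KV heads", 8), ("MQA", 1)]:
    gib = kv_cache_bytes(LAYERS, kv_heads, HEAD_DIM, SEQ_LEN) / 2**30
    print(f"{name:>16}: {gib:.2f} GiB per sequence")
```

Sharing one KV cache across a group of adjacent layers would divide these numbers again by the group size, so the tricks stack.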

Not all LLM APIs are created equal...

The new Llama 3.1 405B Instruct is on @FireworksAI_HQ - ready for building!


@francoisfleuret @numpy_team @PyTorch If one wants to understand it visually, this visualizer by @ezyang is really handy:


Llama 3.1 405B can go even faster with @FireworksAI_HQ. We don't have a consumer web search product, but our LLM API is open for @perplexity_ai or anyone else to build with


When comparing the speed of LLM inference, how long should the prompts and completions be?

Current leaderboards from @ArtificialAnlys or @withmartian use a 3:1 prompt:completion ratio. But real-world use cases are 10:1 or even 30:1. Speed and cost differences can be dramatic!

I

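A toy latency model shows why the ratio matters: prompt tokens are processed at prefill speed and completion tokens at decode speed, so an engine that wins at 3:1 can lose at 30:1. The two engines and their speeds below are hypothetical, and the model ignores queuing and batching.

```python
# Hypothetical engines (assumption): A decodes fast but prefills slowly, B the opposite.
ENGINES = {
    "A": {"prefill_tps": 2_000, "decode_tps": 200},
    "B": {"prefill_tps": 10_000, "decode_tps": 100},
}


def request_latency(prompt_tokens, completion_tokens, prefill_tps, decode_tps):
    """Seconds to process the prompt and then generate the completion (no queuing)."""
    return prompt_tokens / prefill_tps + completion_tokens / decode_tps


def fastest_engine(ratio, completion_tokens=300):
    """Return the winning engine and all latencies for a prompt:completion ratio."""
    prompt_tokens = ratio * completion_tokens
    latencies = {name: request_latency(prompt_tokens, completion_tokens, **spec)
                 for name, spec in ENGINES.items()}
    return min(latencies, key=latencies.get), latencies


for ratio in (3, 10, 30):
    winner, latencies = fastest_engine(ratio)
    rounded = {name: round(t, 2) for name, t in latencies.items()}
    print(f"{ratio}:1 -> {rounded} winner: {winner}")
```

With these made-up speeds, engine A wins at 3:1 and 10:1, while B wins at 30:1, so a leaderboard's choice of ratio alone can flip the ranking.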

@MistralAI @thefireworksai Disclaimer: there's no official model code yet. Based on parameter names and the fact that the Mistral team contributed to I assume that it uses a simple MoE (for inference).

Generation looks coherent, so there's a good chance it's correct.


Mixtral 8x7B speed-ups are in at @thefireworksai: up to 175 tokens/s from our engine (~100 t/s in the playground) and a new pricing tier at $0.45-0.6/M tokens

Check the blog on how we raced to be the first to enable Mixtral, 2 days before the official release!


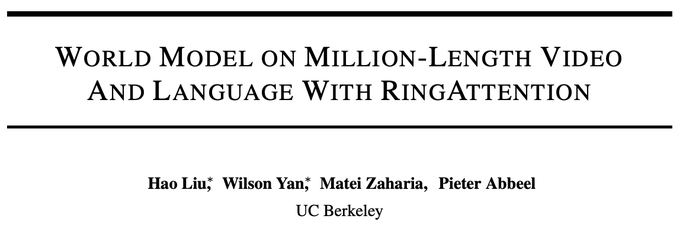

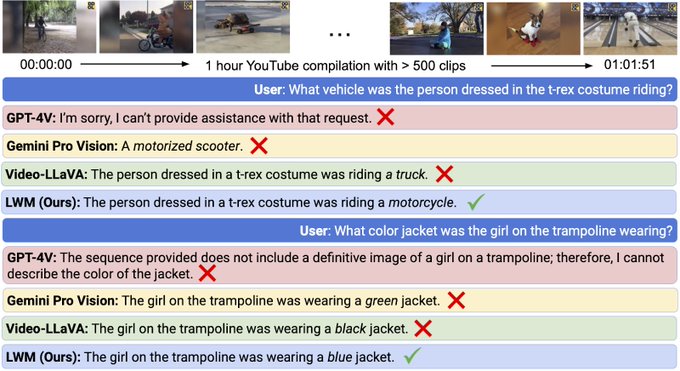

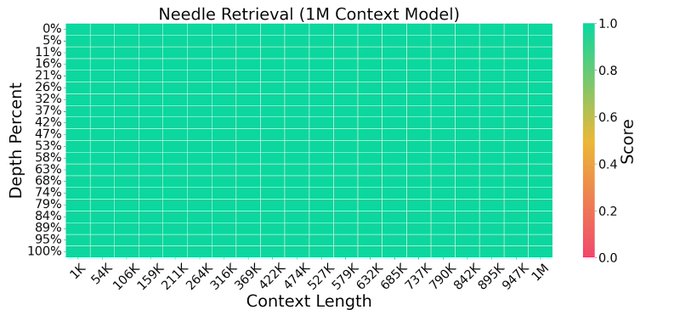

Gemini 1.5 Pro is impressive work. Sadly, the technical report is very sparse on details. But this paper from yesterday gives a glimpse of how one may train for extra-long context:


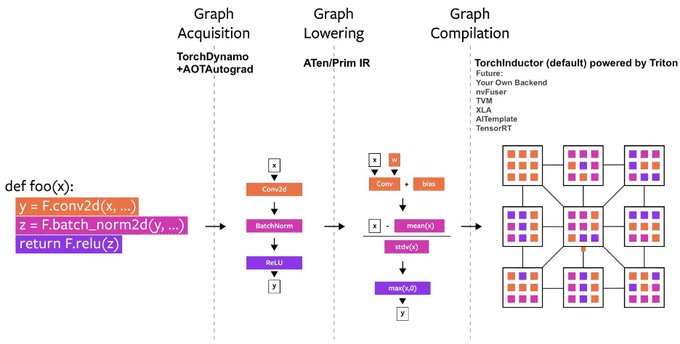

PyTorch 2.0 is coming next March! Full backward compatibility. A new 'torch.compile' one-liner for 50%+ speed-ups in training without changing model code.

It's a culmination of years of R&D on the compiler stack that can support your PyTorch code without compromising flexibility.

We just introduced PyTorch 2.0 at the #PyTorchConference, introducing torch.compile!

Available in the nightlies today, stable release Early March 2023.

Read the full post:

🧵 below! 1/5


Check out what we've been working on for the past few months at @thefireworksai: fast and cheap serving for LLMs, including your own LoRA fine-tuned models.

Stay tuned for more technical deep dives soon.

P.S. It's fully free until we roll out billing and quotas :)


Function-calling or chat capabilities: why not both?

We cooked 🔥Firefunction-v2 to excel at general conversation and function calling simultaneously (many existing models struggle with it)

Served 2x faster and 10x cheaper than GPT-4o on @FireworksAI_HQ, open weights on HF


@abacaj And the plot thickens: someone from Mistral hints my original implementation was correct. It seems that the fix makes the model finicky about formatting (e.g. adding the space).

I guess we will need to wait for the official implementation :)


Overall, it’s exciting to see a MoE revival with Mixtral’s release. This class of models will benefit both local LLMs and highly loaded, sophisticated server deployments like the one we’re building at @thefireworksai

You can try Mixtral live on Fireworks:

We released our tuned Mixtral chat a few hours ago. Play with it through our app or API. Big thanks to @MistralAI for the new addition of this MoE model. We are very excited about it.


GPT-4 is rumored to use MoE too. It serves a lot of traffic and thus benefits from this regime. 6/7


More perf wins: SDXL and Playground image gen models are now 40% faster AND 35% cheaper on @FireworksAI_HQ. Pure system optimization: the quality and number of steps are the same.

And SD3 Medium via our partnership with @StabilityAI is coming soon too.

Congratulations @FireworksAI_HQ on improving the speed of their Text to Image model APIs!

Fireworks has reduced generation time by ~40%, from ~2.8s to ~1.7s for Playground v2.5 and ~1.9s to ~1.2s for SDXL. Fireworks has also reduced prices ~35%, positioning it amongst the


@marktenenholtz @huggingface It's even simpler: the original Falcon-7B code on HuggingFace has an interface bug that breaks incremental generation, so the whole prompt is passed through the model again for every new token. Notice `past` vs `past_key_values`...
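This class of bug is easy to reproduce in plain Python: when a forward function swallows unknown keyword arguments via `**kwargs`, a misnamed cache argument is silently ignored and every step recomputes the full prefix. `ToyModel` below is hypothetical and only counts how many positions it processes; it is not the Falcon or Hugging Face code.

```python
class ToyModel:
    """Hypothetical decoder that only counts how many token positions it runs."""

    def __init__(self):
        self.positions_processed = 0

    def forward(self, input_ids, past_key_values=None, **kwargs):
        # **kwargs silently swallows misnamed arguments such as `past`.
        cached = past_key_values or []
        # With a valid cache, only the tokens after the cached prefix are computed.
        new_tokens = input_ids[len(cached):]
        self.positions_processed += len(new_tokens)
        return cached + new_tokens  # stand-in for the updated KV cache


def generate(model, prompt, steps, cache_kwarg):
    """Generation loop that feeds the cache back under the given keyword name."""
    tokens, cache = list(prompt), None
    for step in range(steps):
        cache = model.forward(tokens, **{cache_kwarg: cache})
        tokens.append(100 + step)  # stand-in for the sampled next token
    return model.positions_processed


prompt = list(range(1000))
print(generate(ToyModel(), prompt, 10, "past_key_values"))  # 1009: cache honored
print(generate(ToyModel(), prompt, 10, "past"))             # 10045: cache silently ignored
```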


@omarsar0 @GroqInc @GroqInc generation speed is indeed insane. But I found it struggles with long prompts. E.g. summarizing 12k tokens of Tesla earnings took 3.5s of server time on Groq (5s with queuing) but only 2s with good old GPUs on @FireworksAI_HQ (with loaded servers).

LLM latency usually adds


New Llama 3.1 models, including the mighty 405B that rivals GPT-4 (while being open-weights), are on Fireworks at just $3/M tokens!

Try it in the playground or start building with the API today.

🚀 Exciting news! Fireworks AI is one of the first platforms to offer Llama 3.1 for production use from day one in partnership with @AIatMeta. With expanded context length, multilingual support, and the powerful Llama 3.1 405B model, developers can now leverage unmatched AI


LLaMA2 is out!

- commercial license 💰

- chat tuned 💬

- 1.4T -> 2T of tokens, fresh data from 2023 📚

- 2k -> 4k context, RoPE frequency scaling still works 📏

- Grouped/Multi Query Attention for efficient inference 🏎

On the last point, our blog covers why MQA/GQA is a big deal


@besanushi @OpenAI Even on the same GPU, numerics can be slightly different depending on which CUDA kernel is launched. cuBLAS matmul can return different results depending on batch size! Sparsity or MoE tend to amplify even the smallest differences.

And across different GPUs all bets are off.
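The underlying reason is that floating-point addition is not associative: a kernel that splits a reduction differently (for example, for a different batch size) can change the low bits of the result. A plain-Python illustration with doubles; under greedy (temp=0) decoding such a difference only matters when two logits are nearly tied, but once one token flips, the rest of the generation diverges.

```python
def naive_sum(xs):
    """Left-to-right accumulation, like a single-threaded reduction."""
    total = 0.0
    for x in xs:
        total += x
    return total


# Same three numbers, two association orders.
print((0.1 + 0.2) + 0.3, 0.1 + (0.2 + 0.3))  # 0.6000000000000001 0.6

# Same four numbers, two orderings. Near 1e16 adjacent doubles are 2 apart,
# so adding 1.0 to 1e16 rounds away entirely.
print(naive_sum([1e16, 1.0, -1e16, 1.0]))  # 1.0
print(naive_sum([1e16, -1e16, 1.0, 1.0]))  # 2.0 (the exact answer)
```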


Just fixed the ambiguous MoE implementation. It gives much better results. Thanks to @abacaj and bjoernp for comparing both versions!

Also, you can try the fix live at now!


The first blog post about the LLM inference optimizations we're doing. Stay tuned for more


@cHHillee This is cool!

However, Mixtral MoE is particularly susceptible to the “optimized throughput != optimized latency” caveat.

Even a modest batch size activates all 8 experts and slows down generation ~4x, while BS=1 is too costly.

My earlier explainer:

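A rough model of why batching erodes the MoE advantage: with top-2 routing over 8 experts and, as a simplifying assumption, uniform and independent routing per token, the expected number of distinct experts a layer must read grows quickly with batch size. Real routers are far from uniform, so treat the numbers as an idealized sketch, not measurements.

```python
from math import comb


def expected_active_experts(batch_size, n_experts=8, top_k=2):
    """Expected number of distinct experts touched by a batch of tokens,
    assuming each token picks top_k experts uniformly and independently."""
    # Probability that a fixed expert is NOT among one token's top_k picks.
    p_miss = comb(n_experts - 1, top_k) / comb(n_experts, top_k)
    return n_experts * (1 - p_miss ** batch_size)


for bs in (1, 2, 4, 8, 16, 32):
    active = expected_active_experts(bs)
    # Expert weights that must be read per decoding step, relative to batch size 1.
    relative = active / expected_active_experts(1)
    print(f"BS={bs:>2}: {active:.2f} experts active, ~{relative:.1f}x expert weights read")
```

Under these assumptions a batch of 8 already touches over 7 of the 8 experts, approaching the 4x extra weight traffic of reading every expert instead of 2.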

Great work on prompt caching with RadixAttention from @lmsysorg. We have been running a similar implementation in production at @FireworksAI_HQ for a few months, delivering wins to customers:

Rare case I got to write a Trie outside of programming contests :)
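The data structure behind this kind of prompt caching is a trie over token IDs: a new request walks down the trie as far as its prefix matches, and only the remaining suffix needs a fresh prefill. This is a minimal sketch; `kv_slot` is a hypothetical stand-in for a real KV-cache location, and eviction, paging and the radix-tree compression of single-child chains used by RadixAttention are all left out.

```python
class TrieNode:
    def __init__(self):
        self.children = {}   # token id -> TrieNode
        self.kv_slot = None  # hypothetical index of this position's cached KV entry


class PrefixCache:
    """Minimal token-level trie for reusing KV cache across requests (sketch only)."""

    def __init__(self):
        self.root = TrieNode()
        self.next_slot = 0

    def insert(self, tokens):
        """Record that KV entries now exist for every prefix of `tokens`."""
        node = self.root
        for tok in tokens:
            node = node.children.setdefault(tok, TrieNode())
            if node.kv_slot is None:
                node.kv_slot = self.next_slot  # allocate a slot only for new positions
                self.next_slot += 1

    def longest_prefix(self, tokens):
        """Number of leading tokens whose KV entries are already cached."""
        node, matched = self.root, 0
        for tok in tokens:
            child = node.children.get(tok)
            if child is None:
                break
            node, matched = child, matched + 1
        return matched


cache = PrefixCache()
system_prompt = [1, 2, 3, 4]
cache.insert(system_prompt + [10, 11])  # first request
request = system_prompt + [20, 21, 22]  # second request shares the system prompt
hit = cache.longest_prefix(request)
print(f"reuse {hit} cached tokens, prefill {len(request) - hit} new ones")
cache.insert(request)
print("KV slots allocated:", cache.next_slot)
```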


Gemma 2 is looking really good and it's now live on @FireworksAI_HQ! The same day it was released.

I suspect something is off with @huggingface's implementation. In our evals, the Fireworks version gets 71.2% (base) / 72.2% (instruct) on MMLU, matching the announcement blog at


@AravSrinivas Not really peerless if you extend the graph to today :)

That said, Perplexity is an amazing product!


Happy Birthday to @PyTorch and huge thanks to the community! Personally, it's been a fun and rewarding journey helping @PyTorch grow and empower AI developers from research to production. Excited for the future, we're just getting started!


@MistralAI @thefireworksai Per Mistral's comment on their Discord, I updated RoPE's theta to 1e6 in the repo
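For context on what theta controls: in the standard RoPE formulation, dimension pair i of a head of size d rotates with angular frequency theta^(-2i/d), so raising theta (10,000 is the common default) stretches the longest wavelengths, measured in tokens, which is generally what longer-context models want. The head size of 128 is an assumption for illustration.

```python
import math


def rope_wavelengths(theta, head_dim=128):
    """Wavelength in tokens of each rotary dimension pair: 2*pi / theta**(-2i/d)."""
    return [2 * math.pi * theta ** (2 * i / head_dim) for i in range(head_dim // 2)]


for theta in (1e4, 1e6):
    w = rope_wavelengths(theta)
    print(f"theta={theta:.0e}: shortest {w[0]:.1f} tokens, longest {w[-1]:,.0f} tokens")
```

The fastest-rotating pair is unchanged (about 6.3 tokens per turn), while the slowest one gets roughly 90x longer when going from 1e4 to 1e6.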


Deep dive into how to make LLM generation fast with CUDA graphs while still keeping your model code nimble in Python. It's one of the many optimizations behind our inference engine at Fireworks.

The Fireworks Inference Platform is fast, but how? An important technique is CUDA graphs, which can achieve a 2.3x speedup for LLaMA-7B inference. Learn about CUDA graphs, the complexity of applying them to #LLM inference, and more in our new deep dive. 1/6


Excited to see many awesome community members in person at #PyTorchConference tomorrow! Some major announcements are coming too…


Fine-tuning from @FireworksAI_HQ goes beyond training: it's paired with our serverless inference.

You can iterate on 100s of model variants quickly while paying per token only, with no time-based or fixed costs.

Supports many models, including Mixtral. And it's fast too (as always).

🔥 We’re excited to announce @FireworksAI_HQ fine-tuning service! Fine-tune and run models like Mixtral at 300 tokens/sec with no extra inference cost!

🔁 Tune and iterate rapidly - Go from dataset to querying a fine-tuned model in minutes covering 100+ models.

🤝 Seamless


@marktenenholtz @huggingface And TGI doesn't run Multi Query Attention yet, it just broadcasts. Falcon is unique among open models in having MQA today, btw. With special optimizations for MQA, the numbers look even better for the inference service we're building at


@ezyang @MaratDukhan Doesn’t it break before the comparison? I.e. just loading the literal …993. causes its value to change


@StasBekman This needs to come from NVIDIA low-level support. MSR folks claim they built something like this by intercepting and replaying CUDA driver calls (awesome idea!). But I haven’t seen anything available/open source.


@francoisfleuret There is!

This injects a hook into the PyTorch dispatcher that runs after any operator (regardless of where it’s called from).

That repo has a lot of other tricks like it.


@MistralAI I hacked up the official Llama repo to load and run it. Results seem coherent


@togethercompute I tried the exact same settings (temp=0) this morning and got infinite looping (see the video in the original thread). Trying it now, I get the correct answer half the time and infinite looping the other half.

It looks like temp=0 is not fully deterministic in your engine


@aidan_mclau @sualehasif996 @teortaxesTex @GroqInc @FireworksAI_HQ We’re cooking support for it at Fireworks, stay tuned


@ezyang The root cause is the tight computational interdependence of SGD: you want many steps, and each fully depends on the previous one. It's more like HPC (akin to an ODE solver) than MapReduce. Asynchronicity (like AdaDelay) or huge batches (close to an epoch) turned out to give worse quality.


It was really fun to work with @cursor_ai on making big code rewrites reliable and fast, and making the great product even better.

@cursor_ai has trained a specialized model on the "fast apply" task, which involves planning and applying code changes.

The fast-apply model surpasses the performance of GPT-4 and GPT-4o, achieving speeds of ~1000 tokens/s (approximately 3500 char/s) on a 70b model.


HF has truly become the foundational infra of the ML world. I wonder how many other services, and particularly CIs, are down now because they can’t download the models.

Sending 🤗

P.S. is working normally


@cbalioglu is building an upcoming @PyTorch feature for model-parallel partitioning of modules too large for one GPU, which is also based on meta tensors:


Latest goodies for training ranking/recommendation models in PyTorch. Scaling to trillions of params and 100s of GPUs.

Compared to the "trillions" in LMs, rec models are "sparse", with a lot of nn.EmbeddingBags. Hence the special parallelism primitives that TorchRec provides.
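To show what "sparse" means here: an EmbeddingBag-style lookup reads only the table rows named by each example's indices and pools them, so each example touches a tiny slice of a huge table. Below is a pure-Python sketch of the flat indices + offsets layout with sum pooling; it mimics the semantics of PyTorch's `nn.EmbeddingBag` in `'sum'` mode, not its implementation.

```python
def embedding_bag_sum(table, indices, offsets):
    """Sum-pool rows of `table` for each bag.

    indices: flat list of row ids for all bags, concatenated.
    offsets: start position of each bag within `indices`.
    """
    bounds = list(offsets) + [len(indices)]
    dim = len(table[0])
    out = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        pooled = [0.0] * dim  # an empty bag pools to zeros
        for row_id in indices[start:end]:
            for j, value in enumerate(table[row_id]):
                pooled[j] += value
        out.append(pooled)
    return out


# Toy table with 5 rows of dimension 2 (real tables can have billions of rows).
table = [[float(r), float(10 * r)] for r in range(5)]
# Two bags: rows {0, 2, 4} and row {1}.
print(embedding_bag_sum(table, indices=[0, 2, 4, 1], offsets=[0, 3]))
```

Because each bag reads only a handful of rows, the tables themselves can be sharded across GPUs while the dense layers stay replicated.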


LLMs can play Doom!

Amazing team @SammieAtman, @hopingtocode and Paul used @FireworksAI_HQ fine-tuning and serving to teach a 7B LLM to play Doom from ASCII screen captures. They did it overnight and won first place at the SF Mistral hackathon! See more in their guest blog below.

🚨 New Blog Alert 🚨

Find out how a group of Gen AI enthusiasts used @FireworksAI_HQ to make an LLM play DOOM, a video game created in 1993 that has gained cult status among hackers, at the Mistral SF Hackathon!

Special thanks to Bhav Ashok (@SammieAtman), @hopingtocode, and Paul


So what can we do as a community? The only meaningful comparison is using fixed hardware (which usually means dedicated deployments).

Doing the right benchmark is really tricky too. What we learnt at @FireworksAI_HQ is incorporated in our OSS suite:


@Suhail Tensor Core TFLOPS are what matter, but the ratio was the same for A100->H100: 312 -> 989 (NVIDIA claims 1979 but it’s fake sparsity marketing).

Memory bandwidth is also important. It only went from 2 to 3.35 TB/s, which makes it harder to utilize those FLOPS on H100.
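One way to quantify this is the roofline "ridge point": peak FLOPS divided by memory bandwidth gives the arithmetic intensity (FLOPs per byte loaded) a kernel needs before it stops being bandwidth-bound. Using the dense numbers quoted in the tweet:

```python
# Dense Tensor Core peak (TFLOPS) and memory bandwidth (TB/s), as quoted in the tweet.
GPUS = {"A100": (312, 2.0), "H100": (989, 3.35)}


def ridge_point(tflops, tb_per_s):
    """FLOPs a kernel must perform per byte loaded before it becomes compute-bound."""
    return (tflops * 1e12) / (tb_per_s * 1e12)


for name, (tflops, bandwidth) in GPUS.items():
    print(f"{name}: ~{ridge_point(tflops, bandwidth):.0f} FLOPs per byte to saturate compute")

compute_gain = GPUS["H100"][0] / GPUS["A100"][0]
bandwidth_gain = GPUS["H100"][1] / GPUS["A100"][1]
print(f"A100 -> H100: compute x{compute_gain:.2f}, bandwidth x{bandwidth_gain:.2f}")
```

The ridge point nearly doubles (about 156 to about 295 FLOPs per byte), so low-intensity work such as batch-1 decoding leaves an even larger fraction of H100's compute idle.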


@cbalioglu @PyTorch Torch operators can take meta tensors and produce meta tensor results with the correct shape & dtype. The new way to write 'structured kernels' lets you get an operator working on meta and on a real device in one go.


@jeffboudier Great work, fp8 is fast!

But why report numbers at batch 4?! It’s highly misleading, as the actual speed is 300 tokens/s. And if it's about throughput, then 1.2k/s is very low: one can just increase the batch to 64/128/etc.


Structured output and function calling major update at @FireworksAI_HQ 🔥

🛠 FireFunction V1: OSS model tuned for tool use at GPT-4 level (agents, routing...)

✅ guaranteed JSON schema for any model

🧐 beyond JSON: custom grammar guidance for any model (YAML, multi-choice...)
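The core trick behind grammar-guided decoding can be shown with the simplest grammar, a multi-choice constraint: at every step, mask to -inf any token that would stop the output from being a prefix of some allowed string, then pick among the rest. The vocabulary, logits and greedy selection below are hypothetical; real implementations typically compile JSON schemas or grammars into automata instead of scanning strings.

```python
import math


def allowed_tokens(prefix, vocab, choices):
    """Tokens that keep `prefix + token` a prefix of at least one allowed choice."""
    return [t for t in vocab if any(c.startswith(prefix + t) for c in choices)]


def constrained_greedy(logits_fn, vocab, choices, max_steps=10):
    """Greedy decoding where grammar-violating tokens are masked to -inf."""
    out = ""
    for _ in range(max_steps):
        if out in choices:
            break  # a complete allowed string has been produced
        allowed = set(allowed_tokens(out, vocab, choices))
        if not allowed:
            break  # dead end: no token can extend `out` toward a valid choice
        logits = logits_fn(out)
        masked = {t: (logits[t] if t in allowed else -math.inf) for t in vocab}
        out += max(masked, key=masked.get)
    return out


VOCAB = ["ye", "s", "no", "maybe", "!", " "]
CHOICES = {"yes", "no"}


def toy_logits(prefix):
    """Hypothetical model that strongly prefers off-schema tokens."""
    return {"ye": 1.0, "s": 0.5, "no": 0.2, "maybe": 3.0, "!": 2.5, " ": 2.0}


print(constrained_greedy(toy_logits, VOCAB, CHOICES))  # yes
```

Unconstrained greedy decoding would emit "maybe" here; the mask guarantees the output is one of the allowed strings. This sketch stops as soon as any choice is complete, so if one choice were a prefix of another, the shorter one would win.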


Check out our new function-calling model ☎️ and API on @FireworksAI_HQ.

The blog covers why evaluating function calling is tricky (our curated hard dataset is on HF) and the fine-tuning journey to get GPT-4-level quality (no training on the test set!).

More to come!


Amazing, programming & math olympiads might go the way of Chess and Go sooner than expected.

Neat idea of solution sampling:

- pass example tests (only ~0.5% of solutions do)

- generate random inputs

- pick the solution cluster that agrees on them

Akin to human brute force at scale!

Introducing #AlphaCode: a system that can compete at average human level in competitive coding competitions like @codeforces. An exciting leap in AI problem-solving capabilities, combining many advances in machine learning!

Read more: 1/
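The three steps in the tweet above can be sketched in a few lines: filter by the example tests, run the survivors on extra inputs, group them by identical outputs, and submit from the largest group. The candidate "programs" are plain Python lambdas and the random input generator is a hypothetical stand-in for however the extra inputs are actually produced.

```python
import random
from collections import defaultdict


def pick_solution(candidates, example_tests, gen_input, n_inputs=20, seed=0):
    """Filter candidates on example tests, then cluster survivors by behaviour."""
    # 1) Keep only candidates that pass every example test.
    survivors = [f for f in candidates if all(f(x) == y for x, y in example_tests)]
    if not survivors:
        return None
    # 2) Run all survivors on the same extra inputs (their correct outputs are unknown).
    rng = random.Random(seed)
    inputs = [gen_input(rng) for _ in range(n_inputs)]
    clusters = defaultdict(list)
    for f in survivors:
        clusters[tuple(f(x) for x in inputs)].append(f)
    # 3) Submit a representative of the largest group of agreeing programs.
    return max(clusters.values(), key=len)[0]


# Task: return 1 + 2 + ... + n. The only example test is n = 3 -> 6.
candidates = [
    lambda n: n * (n + 1) // 2,    # correct
    lambda n: sum(range(n + 1)),   # correct, written differently
    lambda n: 6 if n == 3 else 0,  # memorizes the example
    lambda n: n * n - 3,           # passes n = 3 by coincidence
    lambda n: n + 3,               # passes n = 3 by coincidence
    lambda n: n,                   # fails the example test, filtered out
]
best = pick_solution(candidates, [(3, 6)], gen_input=lambda rng: rng.randint(1, 100))
print(best(10))  # 55
```

Five candidates survive the example test, but only the two correct ones agree with each other on the extra inputs, so they form the largest cluster.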


@skirano @perplexity_ai We've got the chat fine-tuned version at @thefireworksai. Mixtral's poetry could be a bit better, though :)

The bot based on this model is coming to @poe_platform in a couple of hours too.


LLaVA is an awesome idea to extend LLMs to see images. Original models from October were trained on GPT-4 outputs and thus can’t be used commercially.

We trained FireLLaVA based on OSS LLMs, so it’s good for commercial use. Try it at or download from HF.

🔥🔥Announcing FireLLaVA -- the first multi-modal LLaVA model, trained by @FireworksAI_HQ, with a commercially permissive license. It’s also our first open source model!

While the industry heavily uses text-based foundation models to generate responses, in real-world


There's still much to learn about running Mixtral (and mixture-of-experts models broadly) and how they interact with low precision/quantization.

A surprising finding from @divchenko: for fp8, running more experts seems beneficial!


@soumithchintala Congrats to former @MetaAI colleagues on another awesome model!

We just enabled it at @thefireworksai, try it for free


@HamelHusain It’s batch size 1, so results are dominated by memory bandwidth. Quantization matters the most. Hence exllama and mlc are on top with 4-bit. Other kernels aren’t very optimized or run in higher bits.

Check quality. 4-bit is tricky. A varying number of output tokens is a red flag that the models differ a lot.
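Why quantization dominates at batch size 1: each generated token streams roughly all of the weights from memory once, so memory bandwidth divided by weight size bounds decode speed. The 7B model size and ~1 TB/s bandwidth are illustrative assumptions, and the bound ignores KV-cache reads and kernel overheads.

```python
def max_tokens_per_s(n_params, bits_per_weight, bandwidth_gb_s):
    """Upper bound on batch-1 decode speed when every token reads all weights once."""
    weight_bytes = n_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / weight_bytes


# Illustrative assumptions: 7B parameters, ~1 TB/s (1000 GB/s) of memory bandwidth.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit: <= {max_tokens_per_s(7e9, bits, 1000):.0f} tokens/s")
```

Halving the bits per weight doubles the bound, which is why the 4-bit kernels lead at batch size 1, and also why their output quality has to be checked separately.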


@ezyang And the lack of cross-pollination is not for lack of trying. Much of Google's early work in the 2010s was about async distributed systems: DistBelief, TF1. Just look at the focus on parameter servers in the TF1 paper. MoE and various routing schemes are similar distributed ideas (sharding); maybe they're getting a comeback.


And it's live on in the playground or for API access.

@MistralAI 8x7B is live on Fireworks. Try it now at

Warning: this is not an official implementation, as the model code hasn’t been released yet. Results might vary. We will update it once Mistral does the release.

More perf improvements are landing soon


@abacaj Turns out the fix is correct, i.e. softmax+topk. The reference HF code has topk+softmax+renormalize, which is the same thing: a softmax over a subset of variables differs only by a normalization factor.
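The equivalence is easy to check numerically: the softmax of the selected logits equals the full softmax restricted to those entries and renormalized, because the exp terms are shared and only the denominator changes. The router logits are made up.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def topk_indices(xs, k):
    return sorted(range(len(xs)), key=lambda i: xs[i], reverse=True)[:k]


def route_softmax_then_topk(logits, k):
    """Softmax over all experts, keep the top-k, renormalize the kept weights."""
    probs = softmax(logits)
    idx = topk_indices(probs, k)
    kept = [probs[i] for i in idx]
    total = sum(kept)
    return idx, [p / total for p in kept]


def route_topk_then_softmax(logits, k):
    """Keep the top-k logits first, then softmax over just those."""
    idx = topk_indices(logits, k)
    return idx, softmax([logits[i] for i in idx])


router_logits = [0.3, -1.2, 2.1, 0.9, 1.7, -0.4, 0.0, 1.1]  # made-up logits for 8 experts
a = route_softmax_then_topk(router_logits, 2)
b = route_topk_then_softmax(router_logits, 2)
print(a)
print(b)
```

Both select experts 2 and 4 with identical weights; without the renormalization step, the softmax-then-top-k weights would sum to less than 1, which is the only way the two variants can differ.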


@burkov We’re building one at . There are Llamas, Falcons, etc. with per-token pricing. And we can serve your LoRA for the same price too.


@DynamicWebPaige @MistralAI @perplexity_ai You can go faster: ~100 tokens/sec on a single GPU. And >300 tokens/sec with a more optimized setup.


@sualehasif996 @aidan_mclau @teortaxesTex @GroqInc @FireworksAI_HQ Nice, thanks for the link. I was working out the same thing. After premultiplying the projections, it becomes more or less MHA, but with a RoPE mini head on the side
