Zengyi Qin

@qinzytech

Followers: 3K · Following: 164 · Statuses: 103

MIT PhD @MIT | Hardcore GenAI Researcher | MyShell | Homepage: https://t.co/bwtUBzigZD

Boston, MA, USA

Joined December 2023

@krishnakaasyap The source is Huawei employees. BTW, in terms of FLOPS they have already caught up, but communication is still a little behind NVIDIA.


@srivatsamath We will release and open-source a model that significantly outperforms o1 in computer-use agents and release the benchmark at the same time. Stay tuned


@gauranshsoni Also almost 0%, because their pre-training data does not contain enough long-horizon, interactive computer-use decision-making data.


RT @tom_doerr: MeloTTS: A text-to-speech library supporting English, Spanish, French, Chinese, Japanese, and Korean, with various accents an…


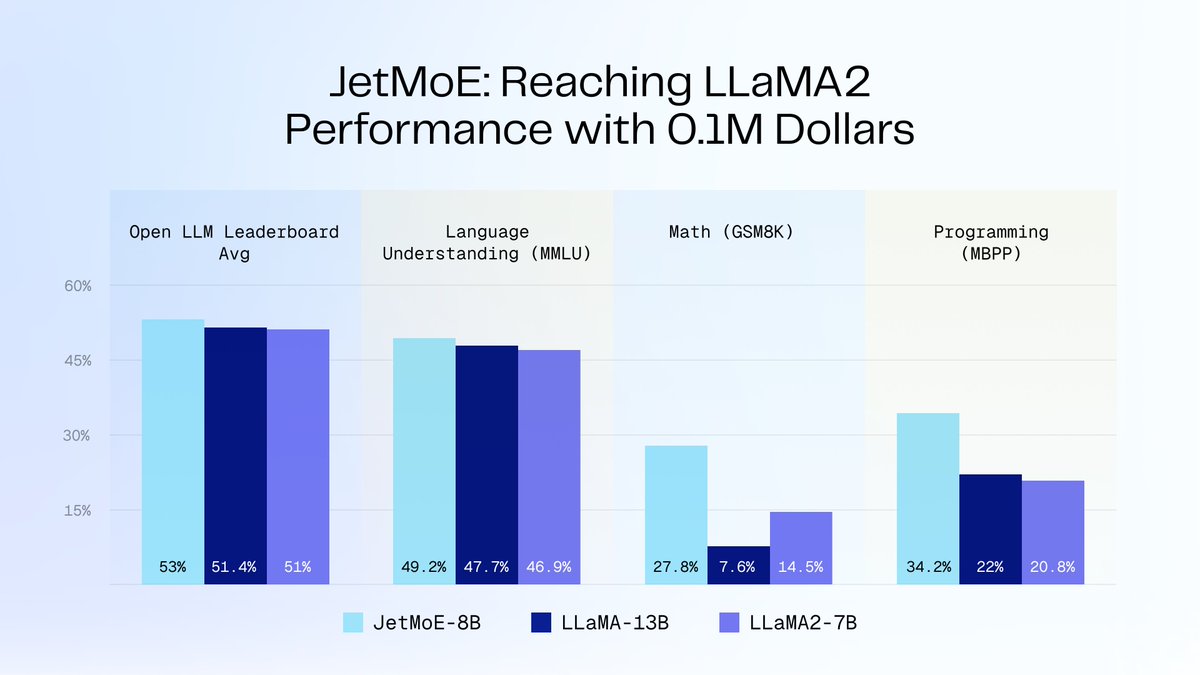

@davidbau Consider this one, which democratizes large-model training and makes it accessible to many research labs. Website: Paper:


Many people think @xai's 100K-GPU cluster is no longer necessary given @deepseek_ai's success with only 2K GPUs. That is not true. The fact is that compute is always limited. If you have 100K GPUs, you can run a lot of LARGE experiments very QUICKLY and iterate on the model very fast.


@ZiqiPang Neither one. We should instead train an agentic one: it should do some bold/risky things that big companies like OpenAI won't release due to safety concerns.
