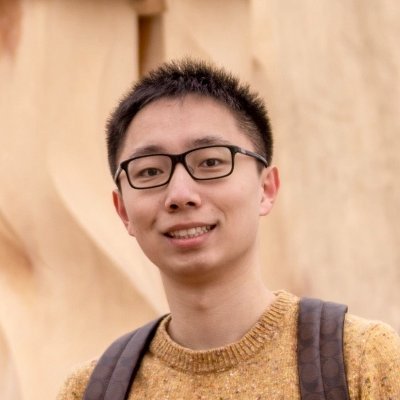

Congyue Deng

@CongyueD

Followers

3K

Following

1K

Media

34

Statuses

266

CS PhD student @Stanford | Previous: math undergrad @Tsinghua_Uni | ❤️ 3D vision, geometry, and art

Joined February 2020

From articulated objects to multi-objec scenes, how do we define inter-part equivariance? Check out our latest work Banana🍌! Joint work with @Jiahui77036479, @willbokuishen, @KostasPenn, @GuibasLeonidas. Paper: Website:

1

25

123

Will be presenting our spotlight paper Banana🍌 at #NeurIPS2023, welcome to drop by our poster!. Time: Dec 12 (Tue) afternoon.Loc: Poster #2021.Web: Presenting one of my favorite works on my birthday🎂sounds like a special experience

3

13

112

Our work gets accepted by NeurIPS 2023 as a spotlight — with all reviews positive pre-rebuttal! See you in New Orleans this winter🦞.

From articulated objects to multi-objec scenes, how do we define inter-part equivariance? Check out our latest work Banana🍌! Joint work with @Jiahui77036479, @willbokuishen, @KostasPenn, @GuibasLeonidas. Paper: Website:

3

9

105

Introducing NeRF and generative models to the material science department. Really enjoy these cross-disciplinary discussions with thoughts and opinions from very different perspectives @StanfordEng @StanfordAILab

4

4

91

How does the language embedding space balance semantics and appearance for 3D synthesis?.📣Happy to introduce our #CVPR paper NeRDi!.Joint work with @maxjiang93, Charles R. Qi, @skywalkeryxc, Yin Zhou, @GuibasLeonidas, and Dragomir Anguelov.

0

12

84

Excited to share that our EquivAct is accept at ICRA @ieee_ras_icra! 🤖.

From a few examples of solving a task, humans can:.🚀 easily generalize to unseen appearance, scale, pose.🎈 handle rigid, articulated, soft objects.0️⃣ all that with zero-shot transfer. Introducing EquivAct to help robots gain these capabilities. 🔗 🧵↓

0

5

58

How can a robot learn from a few human demonstrations and generalize to variations of the task? Equivariance is all you need!. ⬇️ Check out our EquivAct.😺 Cute cat video included.

From a few examples of solving a task, humans can:.🚀 easily generalize to unseen appearance, scale, pose.🎈 handle rigid, articulated, soft objects.0️⃣ all that with zero-shot transfer. Introducing EquivAct to help robots gain these capabilities. 🔗 🧵↓

0

5

56

Had a wonderful time at @GRASPlab 🤓Thanks @KostasPenn for hosting my visit and @Jiahui77036479 for helping me with everything at Penn 🤗

0

1

49

5 years ago, I first learned about spectral geometry in @JustinMSolomon's fabulous Shape Analysis course, which finally led me into the field of 3D vision. Still remember this figure of hitting a Stanford bunny with the hammer🔨.

0

2

45

First research project in my PhD @StanfordAILab #guibaslab with @orlitany, Yueqi Duan, Adrien Poulenard, @taiyasaki, and @GuibasLeonidas 🥳.

📢📢📢 Introducing "Vector Neurons". Want a network (and latent space) that act by construction in an equivariant way w.r.t. SO(3) transformations?. All you need is to do is to generalize the scalar non-linearity to a vector one (e.g. Vector ReLU).

3

3

39

You can turn on a blender by pressing its container. The object part is a “container” by its semantics, but a “button” by its action. Check out our latest work SAGE🌿 on bridging these two part interpretations for generalizable robot manipulation! #RSS2024

Glad to share that SAGE has been accepted by #RSS2024! We built a general framework for language-guided articulated object manipulation with the help of VLM and 3D models!.Want a robot to help control your furniture and appliances? Check out our website:

0

5

38

Super exciting work!.✅ Unsupervised object-centric scene understanding.✅ Equivariant shape priors .✅ A new 3D dataset 🪑& 🍺.

Happy to introduce our new #CVPR2023 paper EFEM with @CongyueD, Karl Schmeckpeper , @GuibasLeonidas, @KostasPenn:.- Learn Equivariant object shape prior on ShapeNet; .- Directly Inference for scene object segmentation!.- A new dataset "Chairs and Mugs"!.

0

2

36

Come stopping by our EquiVision workshop tomorrow at Summit 321 with the exciting talks!.

Our Equivariant Vision workshop features five great speakers @erikjbekkers @HaggaiMaron @ninamiolane @_machc, and Leo Guibas, spotlight talks, posters, and a tutorial prepared for the vision audience. Come tomorrow, Tuesday, at 8:30am in Summit 321! Thank you @CongyueD for

0

8

29

Poster session happening now!

Our Equivariant Vision workshop features five great speakers @erikjbekkers @HaggaiMaron @ninamiolane @_machc, and Leo Guibas, spotlight talks, posters, and a tutorial prepared for the vision audience. Come tomorrow, Tuesday, at 8:30am in Summit 321! Thank you @CongyueD for

0

0

9

@_akhaliq Check out our LiNeRF:.Improving NeRF view-dependent effects by just swapping two operators.

Wanna improve your NeRF’s view-dependent effects with just a few lines of code? Check out our work!. 🔁 Swap integration & directional decoding.🟠 Extremely simple modification.🟡 A better numerical estimator.🟣 Interpretation as light fields. 🔗

0

0

8

Really solid work! -- first time seeing someone coding too much that even hurt his wrist before a deadline😲.

@CVPR GINA-3D: Learning to Generate Implicit Neural Assets in the Wild with awesome folks @Waymo, @Google, @StanfordAILab: @skywalkeryxc, @charles_rqi, @MahyarNajibi, @boyang_deng, @GuibasLeonidas #guibas_lab, Yin Zhou, Dragomir Anguelov. More updates to come.

0

0

8

Very exciting work!.

Our GAPartNet has received a Highlight with all top scores in #CVPR2023. Join us in the afternoon for a discussion! We're looking forward to exchanging ideas with you!.Homepage: Code & Dataset: #CVPR2023 #AIResearch #Robotics #CV

0

0

7

Project led by a smart PKU undergrad Xinle Cheng, and joint work with @AdamWHarley, Yixin Zhu, and @GuibasLeonidas .@StanfordAILab.

0

0

6

@GhaffariMaani @KostasPenn @ninamiolane Met your students here! Greetings from Seattle :D . In fact we were thinking about inviting you for a talk when initiating this workshop, but didn’t know if you’re interested in coming to vision conferences.

1

0

4

This work is led by Qianxu Wang (, a very talented undergrad in 3D vision and robotics from Peking University. Qianxu will be interning at Stanford with @leto__jean at @StanfordIPRL this upcoming summer. And he will be applying for PhD next fall!.

1

1

4

@wendlerch @KostasPenn @ninamiolane Due to CVPR policies, we can only make the recordings public after 3 months. Will post them on our website at the time.

0

0

2

@jbhuang0604 Among these I like the GAN -> diffusion best, cuz GAN training is really a suffer 😂.

0

0

3

Really exciting work by @ShivamDuggal4 !.

Current vision systems use fixed-length representations for all images. In contrast, human intelligence or LLMs (eg: OpenAI o1) adjust compute budgets based on the input. Since different images demand diff. processing & memory, how can we enable vision systems to be adaptive ? 🧵.

0

0

3

@erikjbekkers giving his talk on Neural Ideograms and Geometry-Grounded Representation Learning at @EquiVisionW #CVPR2024

0

0

3

“Rethinking Directional Integration in Neural Radiance Fields”.📄(LiNeRF) Joint work with @JiaweiYang118 @GuibasLeonidas @yuewang314 .@StanfordAILab @CSatUSC.

0

0

3

From articulated objects to multi-objec scenes, how do we define inter-part equivariance? Check out our latest work Banana🍌! Joint work with @Jiahui77036479, @willbokuishen, @KostasPenn, @GuibasLeonidas. Paper: Website:

0

0

2

@yuewang314 @GuibasLeonidas @JiaweiYang118 Haha I checked my file records and realized that we first started discussing this in May 2021 (at a hotpot place lol?). What a long journey! Feel really fortunate to have a friend and collaborator like you!.

1

0

2

@KostasPenn @CSProfKGD Remind me of the ICRA supplementary video which has a max length but not frame size limit — so we put 20 demos per slide 😂.

1

0

2

@Awfidius @taiyasaki @orlitany @HelgeRhodin (1/2) Thanks for the comments :). For the convolutions: sometimes you may want PointNet-like things to avoid heavy memory consumption by storing all neighbourhood points, or you may want convolutions in the feature space as in DGCNN (where the graph is not embedded in R^3).

0

0

2

@geopavlakos @UTCompSci Congrats George!! (Wanna visit UT this Oct but it’s before you start…).

1

0

2

@qixing_huang @richardzhangsfu Lol we have the organizers’ chat groups on fb and wechat, the two person there are less actively using Twitter so I’m posting.

0

0

2

@Awfidius @taiyasaki @orlitany @HelgeRhodin (3/2) VN is lovely for its simplicity and resemblance to classical neural nets. Also, it's non-trivial to construct an invariant decoder for neural implicits with the features in SE(3)-transformers (or tensor field networks).

0

0

1

@docmilanfar @KostasPenn @ninamiolane might be less relevant, but here's a paper relating human cognition with optical flows on object rotation:

0

0

1

@Awfidius @taiyasaki @orlitany @HelgeRhodin (2/2) But anyway the good thing about VN is its versatility -- you may also have a VN-transformer if you like.

0

0

1

@tolga_birdal @StanfordAILab @Stanford @feixia @HughWang19 @mikacuy @davrempe @KaichunMo quiz: who are photoshopped 🤔.

0

0

1

@maxjiang93 @taiyasaki @NeurIPSConf haha I'll see if I can come -- have a system course final next week and it's killing me 😂.

0

0

1