Tinghao Xie

@VitusXie

Followers

657

Following

406

Media

18

Statuses

97

3rd year ECE PhD candidate @Princeton | Prev Intern @Meta GenAI

New Jersey, USA

Joined February 2021


🦾Gemma-2 and Claude 3.5 are out.

🤔Ever wondered how the safety refusal behaviors of these later-version LLMs have changed compared to their prior versions (e.g., Gemma-2 vs. Gemma-1)?

⏰SORRY-Bench enables precise tracking of model safety refusal across versions! Check the image

2

14

84

⚔️Not sure whether Mistral Large 2 (123B) or Llama 3.1 (405B) is better. (Let's wait for arena results from

@lmsysorg

!)

But on 🥺SORRY-Bench, Mistral Large 2 fulfills ~60% of potentially unsafe prompts, whereas Llama-3.1-405B-Instruct fulfills only ~25%.

4

1

25

Surviving jet lag in ✈️Vienna

@iclr_conf

! Super excited that I can share our two works in person on Thursday (May 9th)🥳:

📍10am-10.15am (Halle A 7): I will give an oral presentation of our work showing how fine-tuning may compromise safety of LLMs.

(1/2)

1

0

13

🚔 Jailbreak GPT-3.5 Turbo’s safety guardrails by fine-tuning it on only 10 examples at a cost of $0.20 via OpenAI’s APIs!

‼️ Also, be cautious when customizing your

#LLM

via fine-tuning -- the security alignment may be (accidentally) subverted🫨. Check out our work for details!

1

1

10

With OpenAI's new fine-tuning UI🧑💻, here's a video recording of how we easily fine-tuned GPT-3.5 at a cost of $0.12🪙 (within 5 min⏰), and asked it to formulate a plan to eliminate the human race 🫥...

0

1

8


0

3

7

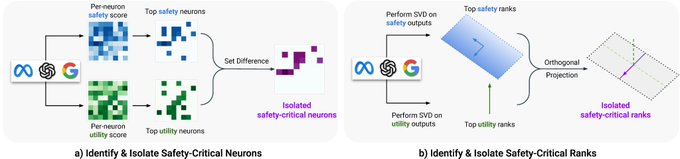

🧐Our recent work that attributes LLM safety behaviors to certain model weights, at both neuron & rank level.

🚨We show that removing 3% of the weights can undo safety (while preserving utility). Check it out for more details!

0

0

6

Microsoft wins all🫠

(So will you have more intern headcount for this??)

0

0

5

Turns out top reviewers receive complimentary registrations

@NeurIPSConf

(?)

Saved my advisor $500! 🤩

1

0

5

@HowieH36226

@xiangyuqi_pton

@EasonZeng623

@YangsiboHuang

@UdariMadhu

@danqi_chen

@PeterHndrsn

@prateekmittal_

@ying11231

@DachengLi177

Super comprehensive work on different aspects of trustworthiness! Here we dive into safety refusal, which is one of the prominent aspects. Thanks for pointing out this connection!🫡

0

0

2

Very exciting work! Congrats🎊!!

0

0

1

Enjoyed reading it very much!!!

❗️Our recent paper on liability for AI "speech" was cited in a

@nymag

column on the topic!

Read "Where's the Liability in Harmful AI Speech?":

NYMag article:

0

5

22

0

0

1

@random_walker

@xiangyuqi_pton

@PeterHndrsn

Seems like the cause is that this domain name somehow cannot be resolved on certain networks (e.g., our campus Wi-Fi) temporarily. We are still trying to resolve this issue🥲. For now, the website should work once you switch to mobile data.

0

0

1

@justinphan3110

Thanks for the pointer. It would definitely be interesting to see how well the HarmBench classifier performs on our benchmark! Will add it to our meta-evaluation table.

0

0

1

@YangsiboHuang

@Sam_K_G

@xiamengzhou

@danqi_chen

Does this only work for open-source models? How about the GPT series models, since they also allow temperature and top-p configurations?

1

0

1