Seungone Kim

@seungonekim

Followers: 1,116 · Following: 826 · Media: 38 · Statuses: 539

Ph.D. student @LTIatCMU working on (V)LM Evaluation & Systems that Improve with (Human) Feedback | Prev: @kaist_ai @yonsei_u @NAVER_AI_Lab @LG_AI_Research

Pittsburgh, PA

Joined November 2021


🔥I will be joining @CarnegieMellon @LTIatCMU this upcoming Fall, working with @gneubig and @wellecks on evaluating LLMs & improving them with (human) feedback!

Can't wait to explore what lies ahead during my Ph.D. journey☺️


#NLProc

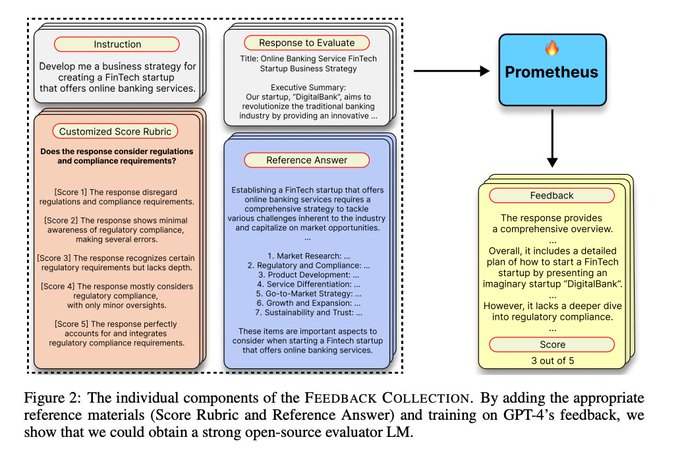

Introducing 🔥Prometheus 2, an open-source LM specialized in evaluating other language models.

✅Supports both direct assessment & pairwise ranking.

✅ Improved evaluation capabilities compared to its predecessor.

✅Can assess based on user-defined evaluation criteria.


Super excited to share that our CoT Collection work has been accepted at #EMNLP2023! If you want to make your LM better at expressing Chain-of-Thought reasoning, take a look at our work🙂 See you in Singapore!! 🇸🇬🇸🇬


Super excited to share that Prometheus is accepted at ICLR! See you all in Vienna 🇦🇹

Also, check out our recent work on Prometheus-Vision expanding to the multi-modal space! It's the first open-source VLM that evaluates other VLMs:


I'll be presenting Prometheus @iclr_conf on May 10th (Friday), 10:45 AM - 12:45 PM at Halle B.

Let's talk if you're interested in LLM Evals 🙂


I'm going to present our Expert Language Model paper at ICML 2023! Come to Exhibit Hall 1 (7.25 2:00PM - 3:30PM) if you're interested in either Instruction Tuning or Expert LMs!


🤖Distilling from stronger models is effective at enhancing the reasoning capabilities of LLMs as shown in Orca, WizardMath, Meta-Math, and Mammoth.

🔍In this work, we ask whether LLMs could "self-improve" their reasoning capabilities!

Check out the post for more info😃


@jshin491 @jang_yoel @ShayneRedford @hwaran_lee @oodgnas @SungdongKim4 @j6mes @seo_minjoon

Also, I'd like to thank @_akhaliq for highlighting our paper :)


💡Evaluating high-level capabilities of LLMs (e.g., helpfulness, CoT abilities, presence of ToM) is crucial, rather than simply measuring performance on a single domain/task.

In our recent work, we check whether LLMs could process multiple instructions at once. Check it out!


#NLProc

🤗We propose LangBridge, a scalable method that enables LMs to solve multilingual reasoning tasks (e.g., math, code) "without any multilingual data"!

🔑The key ingredient is using mT5's encoder and aligning it with an arbitrary LLM!

➡Check out the post for more information!


Thank you for the shout out @jerryjliu0 @llama_index!! Great to see an awesome blog post & some additional examples that weren't in the paper😊

GPT-4 is a popular choice for LLM evals, but it’s closed-source, subject to version changes, and super expensive 💸

We’re excited to feature Prometheus by @seungonekim et al., a fully open-source 13B LLM that is fine-tuned to be on par with GPT-4's eval capabilities 🔥


🤗Interested in how we could holistically evaluate LLMs? I'll be presenting 2 papers at #NeurIPS2023 @itif_workshop! Come visit😃

👉Room 220-222, Dec 15th, 1-2PM (Poster), 5-6PM (Oral)

🧪Flask: (led by @SeonghyeonYe)

🔥Prometheus:


⭐️If you're interested in inducing Chain-of-Thought capabilities in smaller models across a variety of tasks, come visit our poster at #EMNLP2023!

➡️ Poster Session 5, Dec 9th, 11AM

Also happy to chat about synthetic data and NLG evaluation😀


#NLProc

🧐We show that LLMs can write & simulate executing pseudocode to improve reasoning.

Compared to Zero-shot CoT or PoT/PAL, our Think-and-Execute significantly improves performance on BBH by understanding the "logic" behind the task.

Check out the post for more details!


@Teknium1

I've experienced this as well while training a smaller model with CoT augmented from GPT models. Even though the eval loss went up, a smaller model trained for multiple epochs (>=3) definitely generated better rationales.

Also, since it is too


🤔In most cases, we use a fixed system message, "As a helpful assistant [...]". Is this optimal?

💡We find that by incorporating diverse system messages in post-training, LLMs learn to adhere to these messages, a key component of personalized alignment.

➡️Check out the post!


#NLPaperAlert

😴 Aren't you tired of the monotonous way ChatGPT responds?

💡Infuse your preferences to personalize the way your LLM responds, based on our new alignment method 🥣Personalized Soups🥣

✅ Great work led by @jang_yoel, check it out!!


Check out our recent work on evaluating the capabilities of LLMs based on multiple fine-grained criteria! It suggests where we currently are & where we should head ✨✨ Thanks to @SeonghyeonYe & @Doe_Young_Kim for leading this amazing work🙌


I'd like to thank all of my co-authors and supervisor for helping me with this project! It was a great experience working with you all, appreciate it😃

@jshin491, Yejin Cho, @jang_yoel, @ShayneRedford, @hwaran_lee, @oodgnas, Seongjin Shin, @SungdongKim4, @j6mes, @seo_minjoon


@aparnadhinak @OpenAI @ArizePhoenix @arizeai

Hello @aparnadhinak, great analysis! I really enjoyed reading it.

I have one question:

I think the scoring decisions could be a lot more precise if you prompt it by (1) mapping an explanation to every score instead of just 0,20,40,60,80,100%, (2) making the model generate
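Point (1), giving the judge model an explicit description for every score level, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the Prometheus prompt format: `ScoreRubric`, `build_judge_prompt`, the 1-5 scale, and the rubric wording are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ScoreRubric:
    """Hypothetical rubric: every allowed score gets its own explanation."""
    criteria: str
    descriptions: dict[int, str] = field(default_factory=dict)


def build_judge_prompt(instruction: str, response: str, rubric: ScoreRubric) -> str:
    """Assemble a (hypothetical) judge prompt that spells out what each score means."""
    lines = [
        "Evaluate the response using the score rubric below.",
        f"Instruction: {instruction}",
        f"Response: {response}",
        f"Criteria: {rubric.criteria}",
    ]
    # One line per score level, in ascending order, so the judge sees an
    # explicit description for every score it may assign.
    for score in sorted(rubric.descriptions):
        lines.append(f"Score {score}: {rubric.descriptions[score]}")
    lines.append("Score (integer only):")
    return "\n".join(lines)


# Illustrative rubric; the wording of each level is an assumption.
rubric = ScoreRubric(
    criteria="Is the answer factually correct and complete?",
    descriptions={
        1: "Mostly incorrect or irrelevant.",
        2: "Partially correct with major errors or omissions.",
        3: "Correct overall but missing important details.",
        4: "Correct with only minor omissions.",
        5: "Fully correct and complete.",
    },
)
prompt = build_judge_prompt("What is 2 + 2?", "4", rubric)
print(prompt)
```

The design choice is that the judge never has to infer what a bare number means. Each score is tied to a concrete description, which is the precision gain the reply argues for.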


#nlproc

As a native Korean speaker, I found the translated MMLU super awkward, since it requires US-related knowledge & its expressions are not fluent (even if you use DeepL or GPT-4).

Super timely work from @gson_AI, who made a Korean version of MMLU without any translated instances!


@jphme @dvilasuero @natolambert

My take is that without a reference answer, the evaluator/judge model is basically asked to (1) solve the problem internally through its forward pass and (2) evaluate the response at the same time. That's twice the workload, which is evidently harder!


Lastly, I’d like to thank our coauthors for their hard work in annotating/verifying the dataset and for their valuable advice!

@scott_sjy @JiYongCho1 @ShayneRedford @chaechaek1214 @dongkeun_yoon @gson_AI @joyejin195315 @shafayat_sheikh @jinheonbaek @suehpark @ronalhwang


☺️ I'd like to thank all my amazing co-authors for their valuable comments & advice throughout the project!

@scott_sjy, @ShayneRedford, @billyuchenlin, @jshin491, @wellecks, @gneubig, Moontae Lee, @Kyungjae__Lee, @seo_minjoon


@jphme @dvilasuero @natolambert

We're currently training Mistral/Mixtral with some additional Prometheus data & new techniques. I think we could have a preprint by the end of the month!

I'll definitely include an experiment on how the model behaves when there's no reference:)


@Teknium1

Here's the dataset:

@openchatdev used it as training data to induce evaluation capabilities in their recent models, but I haven't heard whether it had a positive effect. Would love to see if training on it would eventually lead to a self-improving


@scott_sjy @ShayneRedford @billyuchenlin @jshin491 @wellecks @gneubig @Kyungjae__Lee @seo_minjoon

🙌Last but not least, I'd like to thank @arankomatsuzaki @omarsar0 @_akhaliq for sharing our work!


Last but not least, I’d like to thank our wonderful team for accomplishing this project!

@sylee_ai @suehpark @GeewookKim @seo_minjoon


I'd also love to chat if you're interested in Chain-of-Thought fine-tuning, zero-shot generalization, and few-shot adaptation! Come visit :)


Also, I'll present Prometheus-Vision on May 11th (Saturday), 13:00 - 14:00 at ME-FoMo workshop!


📅 Last year, we introduced Prometheus 1, one of the first evaluator LMs to show high scoring correlations with both humans and GPT-4 in direct assessment formats. (Please read the tagged thread if you haven't already!)

📈 Since then, many stronger


To learn more about our work, please check out our draft & code😃

📝

👨‍💻

Joint work w/ @joocjun, @Doe_Young_Kim, @jang_yoel, @SeonghyeonYe, @jshin491, @seo_minjoon


@aparnadhinak @OpenAI @ArizePhoenix @arizeai

Here's what the approach I mentioned would look like:


@ShayneRedford @natolambert

Hello Nathan, in our recent preprint, we created 100K synthetic instances & the trained model functioned as a good evaluator/critique model on custom criteria, even compared to GPT-4!

I would be glad for further discussion if you're interested😃


@NeuralNeron

Thanks for your interest in our work! It has the same inference speed as Llama-2-Chat 7B & 13B. Using 4 A100 GPUs with Hugging Face TGI, it took less than 0.33 seconds to generate feedback & a score :)


@alignment_lab @altryne

That sounds great! Expanding to other modalities such as speech & video definitely seems like an interesting direction to pursue 🙂

We could have @sylee_ai join as well to make our discussion more fruitful!


@OrenElbaum

Hello Oren, thank you for your interest in our work!

ToT is effective at solving hard problems at the cost of additional inference. While one could obtain high-quality rationale data using ToT, investigating whether smaller LMs could learn it would be an interesting direction!


@tugot17

Hello Piotr, thanks for your interest in our work! We're planning to open source all of our data / models, so stay tuned🙂
