Mihir Patel

@mvpatel2000

Followers: 3,725 · Following: 439 · Media: 96 · Statuses: 1,288

Research Engineer @MosaicML | cs, math bs/ms @Stanford

Joined November 2020

You know your CTO (@matei_zaharia) got the dog in him when the company is worth 40B+ and he's still looking at data and labeling

9

27

625

Evaluating LLMs is really hard! At @MosaicML, we rigorously benchmark models by asking for vegan* banana bread recipes, baking them, and ranking on taste

*we currently do not penalize for responding with non-vegan, but this will change in future

13

27

329

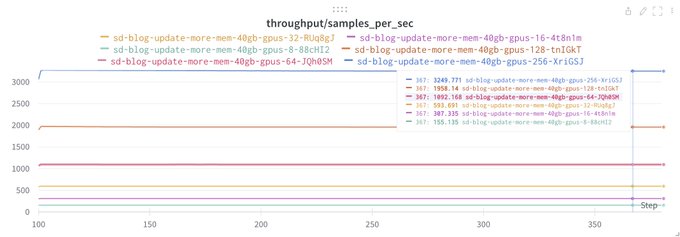

We're back 😈. Stable diffusion in $50k, and all the code is open source. Plus, this time I finally convinced @jefrankle to give me enough GPUs to train it, so we have the model to prove it.

Two weeks ago, we released a blog showing training Stable Diffusion from scratch only costs $160K. Proud to report that blog is already out of date. It now costs 💸 $125K 💸. Stay tuned for more speedup from @MosaicML, coming soon to a diffusion model near you!

3

17

206

6

33

288

Remember when everyone was freaking out about AMD because @realGeorgeHotz quit on it? Turns out it works straight out of the box on @MosaicML's stack on real LLM workloads, and we have the receipts

50-50 odds I could do this in a few hours. I've heard people get pretty good MFU for LLMs on the @MosaicML stack w AMD gpus.

My uninformed take: AMD is aggressively prioritizing PyTorch support (just swap backend), which is why @realGeorgeHotz had a brutal time -- custom is hard

2

4

30

11

15

186

I have found a high correlation between researchers and degenerate gamblers.

I would tag the appropriate coworkers but it's the entire team

7

7

188

At @DbrxMosaicAI, we (and our customers! we actually make money) train custom LLMs for specific use cases. Today, we're sharing a large part of our infra stack and many of the tricks we use to get reliable performance at scale. (1/N)

2

28

185

7B, 1T tokens, llama-quality, 65k+ context length variant, chat variant, open source, commercial license

The crazy part is how easy it was. @abhi_venigalla wanted it, @jefrankle got the GPUs, and someone clicked run. 9 days of us doing normal work and watching the loss go down

5

22

157

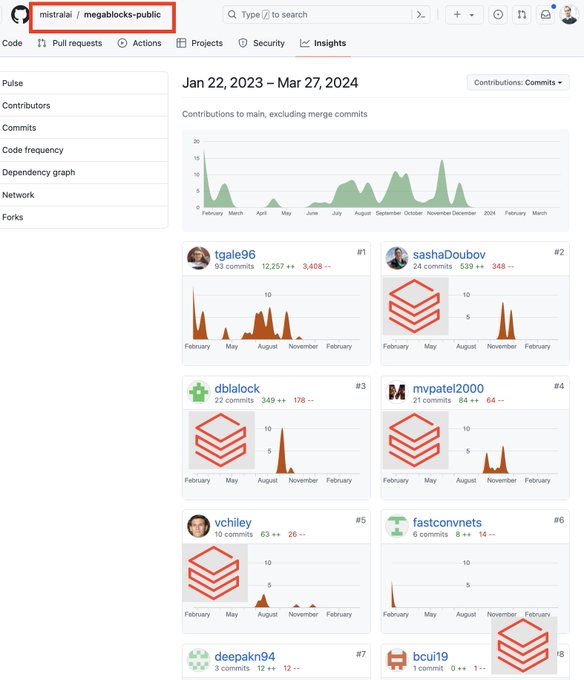

Fun collaboration between @DbrxMosaicAI and @PyTorch team! We've been working hard to scale MoEs and PyTorch distributed to thousands of GPUs, and this is a great summary of a lot of the cool things we've added to PyTorch.

Quick rundown (1/N)

4

26

121

Any method that ranks "transparency" the same for GPT4, where basically nothing is shared, vs. Stable Diffusion 2, a model I personally have reproduced from scratch, is so flawed it's not even worth discussing

The Foundation Model Transparency Index by @StanfordCRFM purports to be an assessment of how transparent popular AI models are. Unfortunately its analysis is quite flawed in ways that minimize its usefulness and encourage gamification

7

34

150

5

7

101

This is true -- but not for long. Things are getting exponentially easier

I didn't know how to train 10b a few months ago. Now, it's just plug-in data and play

"What about loss spikes?" Solved, come talk

"What about node failure?" Solved, come talk

Etc

4

6

101

Abhi is a visionary. I've never worked with someone who so clearly can see the future and move us toward it. Internally, we bet a lot on how the world will evolve, and I've only ever beat Abhi once (and barely).

Very excited to see the hardware chapter play out

Advancing AI: @Databricks NLP Architect, Abhinav Venigalla, discusses the hardware and software advantages from AMD.

4

21

172

0

6

100

New laptop sticker dropped

Last 1.5 years @MosaicML have been absolutely wild. I've learned so much and done so many crazy things

Excited to go even faster @databricks. What's next is gonna be way bigger...

2

1

97

Corollary: Google/Facebook get far less credit than they deserve for driving AI innovation via TensorFlow/PyTorch/JAX. Massive $ investments in software made free which are the bedrock of everything in AI

1

10

85

Do people tweeting even read papers? The results only go to 32k.... I hate these AGI accounts which are all hype and 0 thought

It's a cool idea, but imo long sequence benchmarks are sorely lacking so it's hard to evaluate. I'd bet good money dilated attention has accuracy cost

7

6

84

I was often told raising money is like signing in blood -- you're ride or die till the end.

Turns out you can just leave...? Are the investors not furious? Did something else happen?

Hoping employees who bought the vision didn't get screwed🙏

I’m excited to announce that today I’m joining @Microsoft as CEO of Microsoft AI. I’ll be leading all consumer AI products and research, including Copilot, Bing and Edge. My friend and longtime collaborator Karén Simonyan will be Chief Scientist, and several of our amazing

1K

2K

16K

3

2

75

Very interesting to see differences in feature announcement:

- GDM: corpo blog

- OAI: weird rumors for a week

- Anthropic: dude posts docs and it's out

1

3

74

Badass move to bring a XX petaflop wafer to a party

The Open Source AI Party of the year is lit 🔥 with community love here in NOLA! Thanks @irinarish @togethercompute @laion_ai for your partnership in bringing the #OpenSource AI community together tonight! 🧡

0

3

37

0

3

70

I have found ML very similar to finance.

It is very noisy and confusing. If you reason from first principles, you might find a great insight, or it might be Nope! the system does not operate like that at all

Also working on both makes you a lot of money

7

4

69

Watching @dylan522p break down satellite photos at ICML ES-FOMO is incredible.... Wild to see how well you can predict cluster build out for each frontier lab.

Please hold, scale incoming

2

1

65

Me: Can we implement mixtral from scratch for fine-tuning?

@jefrankle: We have mixtral at home (transformers)

Mixtral at home (transformers):

1

2

59

Heart goes out to all the brilliant people at @OpenAI who aren't involved in and can't control this mess. Godspeed

1

1

54

Interesting to see how much publishing draws talent. Was at a dinner on Tuesday and people were asked if they had to leave their current role, which frontier team they'd be most excited to join.

3/8 said Meta, specifically @ArmenAgha's team

2

4

52

Hidden upside of cultivating waterloo power at @DbrxMosaicAI is how much clout I have with my Canadian cousins. It's the little things like not saying the 2nd t in Toronto

2

5

51

@Suhail A few science ones I like: Scaling laws (Kaplan et al), Chinchilla (DeepMind) for LLM sizes. InstructGPT (OpenAI) for RLHF. ALiBi for large context length future. Switch Transformer for MoE, which imo will become much bigger

2

1

46

ML is not a Popperian science. You can prove something works, but it is very very hard to prove something doesn't work -- maybe you're just doing it wrong!

6

1

47

@jefrankle @landanjs Now, with @MosaicML, it's absurdly easy. Here's the loss curve from the week. I launched the runs and didn't have to mess with the ongoing runs once, including when things broke during my Saturday brunch with @vivek_myers. It crashed, identified failure, restarted by itself 🤯

1

5

44

For the first time, there's a clear scenario for which it seems Gemini is straight up better than GPT.

Tides are turning?

3

0

41

Built a fun text adventure game where an LLM is the storyteller, and every action is evaluated on a likelihood / dice roll D&D style, at the @scale_AI hackathon!

Forgot how enjoyable it is to just build fun mini projects

4

2

42

On language.

Pros:

- New Gemini 1.5 Flash! 7% the cost of GPT-4o (1/10th cost of Pro)

- Gemma 2. More open source AI models!

- PaliGemma. New vision-language model, @giffmana and team keeps cookin

- Gemini Nano on-device

Cons:

- No benchmarks?

4

4

41

There's a lot of companies brute forcing impressive model training. Few are building the tools to make it scalable and repeatable.

This is how we get crazy things like replit training sota code models with 2 people, Stable Diffusion 2 <$50k with 3 people, etc

2

1

41

2 out of the 3 best researchers I know are 2 out of the 3 best engineers I know

3

0

39

The real magic is how easy this was. @vitaliychiley clicked run and loss curves went down. Every now and then it moved clusters but that's about it 🤷‍♂️.

Many people train models. Few make it easy, repeatable, and affordable. Now we can all cook 🧑‍🍳

3

2

38

@abhi_venigalla @jefrankle To be clear, there was an absurd amount of work building our open source stack as well as our amazing platform product. A ton of people did incredible work.

We built this to help everyone train, fine-tune, and deploy LLMs

2

4

38

I harp on this a lot, but the takeaway here is how easy it was. It took @abhi_venigalla <1 hour. Everything works out of the box (Mosaic's stack on PyTorch, PyTorch on AMD). Low switching costs will drive adoption, and that's what everyone else (Cerebras, Habana) needs

Remember when everyone was freaking out about AMD because @realGeorgeHotz quit on it? Turns out it works straight out of the box on @MosaicML's stack on real LLM workloads, and we have the receipts

11

15

186

2

3

38

LLMs are moving beyond pure research to the real world. It's no longer minimize training cost at optimal performance, it's minimize training + inference cost (+ w * inference latency?) at optimal performance. Big fan of what @BlancheMinerva and @AiEleuther are working on

Recently I’ve been harping on how “compute optimal” and “best for use” are completely different things. This plot from @CerebrasSystems shows that really well: their compute optimal models trained on the Pile outperform Pythia for fixed compute but underperform for fixed params

10

43

269

2

2

38

One of the most insane things I heard when I started at Mosaic was @abhi_venigalla telling me we're going to go to 32->16->8->4->1 bit precision over the next few years, and the real blocker is hardware support.

I laughed, but as with most things Abhi says, I'm now convinced...

@karpathy Super excited to push this even further:

- Next week: bitsandbytes 4-bit closed beta that allows you to finetune 30B/65B LLaMA models on a single 24/48 GB GPU (no degradation vs full fine-tuning in 16-bit)

- Two weeks: Full release of code, paper, and a collection of 65B models

39

193

1K

4

0

37

Grandma taking WhatsApp groups by storm after learning to make pictures of Krishna w Stable Diffusion on @playground_ai

1

0

34

@natfriedman @TheSeaMouse You're right! All the links on the bottom are wrong 🤔 I only checked the twitter one. Awkward...

3

0

19

0

1

34

This stuff is super cool but not the right direction imo. No one should be serving 175B param models in production

Hint: solve chinchilla scaling laws = gpt3 quality (or any value) subject to cost minimization. The answer is a LOT smaller than 175B...

4

1

33
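
A rough sketch of the hint above, not the author's own calculation. It assumes the parametric loss fit published in the Chinchilla paper (L(N, D) = E + A/N^alpha + B/D^beta), the common approximations of 6·N·D FLOPs for training and 2·N FLOPs per generated token for inference, GPT-3's reported 175B parameters and 300B training tokens as the quality target, and a purely hypothetical lifetime inference volume of 1T tokens. The candidate sizes are illustrative, and the fitted loss is only a proxy for "GPT-3 quality".

```python
# Chinchilla parametric fit (assumed constants from Hoffmann et al., 2022).
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28


def loss(n_params, n_tokens):
    """Predicted loss for a model with n_params trained on n_tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def tokens_for_loss(n_params, target):
    """Training tokens needed to reach target loss, or None if unreachable."""
    gap = target - E - A / n_params**ALPHA
    if gap <= 0:
        return None  # model too small: no amount of data reaches the target
    return (B / gap) ** (1 / BETA)


def total_cost(n_params, n_tokens, inference_tokens):
    """Approximate lifetime FLOPs: 6*N*D for training plus 2*N per served token."""
    return 6 * n_params * n_tokens + 2 * n_params * inference_tokens


def cheapest_model(target, inference_tokens, sizes):
    """Return (n_params, n_tokens, cost) minimizing total cost at the target loss."""
    best = None
    for n in sizes:
        d = tokens_for_loss(n, target)
        if d is None:
            continue
        cost = total_cost(n, d, inference_tokens)
        if best is None or cost < best[2]:
            best = (n, d, cost)
    return best


TARGET = loss(175e9, 300e9)   # proxy for "GPT-3 quality"
INFERENCE_TOKENS = 1e12       # hypothetical serving volume
SIZES = [1e9 * 2**k for k in range(8)] + [175e9]  # illustrative grid

best = cheapest_model(TARGET, INFERENCE_TOKENS, SIZES)
print(f"params={best[0]:.3g} tokens={best[1]:.3g} flops={best[2]:.3g}")
```

Under these assumptions the cheapest candidate is far smaller than 175B but trained on far more tokens, and raising the assumed inference volume pushes the optimum smaller still.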

Really interesting to see

1. Anthropic release models with simple announcements instead of hype days (developer day, IO)

2. Strong commitment to privacy while still building great models. Will resubscribe just for this

0

3

31

50-50 odds I could do this in a few hours. I've heard people get pretty good MFU for LLMs on the @MosaicML stack w AMD gpus.

My uninformed take: AMD is aggressively prioritizing PyTorch support (just swap backend), which is why @realGeorgeHotz had a brutal time -- custom is hard

@AMD Lisa Su and all her execs should spend 2+ hours every day having to install ROCM Drivers + AMD on a fresh PC. They *all* have to start deep learning projects and try to get SOTA on some LLM task using their own multi-AMD gpu rigs

4

2

89

2

4

30

I'm really excited about this project. This is one of the biggest models ever with shared granular checkpoints and data which makes it an incredible foundation for research. This kind of openness is super critical to helping advance the field.

0

1

29

On assistants and agents.

Pros:

- Mindblowing demo with assistant. Crazy vision-language skills, really cool uses. Feels like what Humane and co aspire to be

Cons:

- Slower than GPT-4o? Hard to tell...

We’re sharing Project Astra: our new project focused on building a future AI assistant that can be truly helpful in everyday life. 🤝

Watch it in action, with two parts - each was captured in a single take, in real time. ↓

#GoogleIO

221

1K

4K

1

1

28

Excited to be at PyTorch conference! Come say hi :)

0

1

27