Honglin Chen

@honglin_c

Followers

610

Following

248

Statuses

65

Research @OpenAI. Previously CS PhD @Stanford @NeuroAILab @StanfordAILab.

Stanford, CA

Joined October 2019

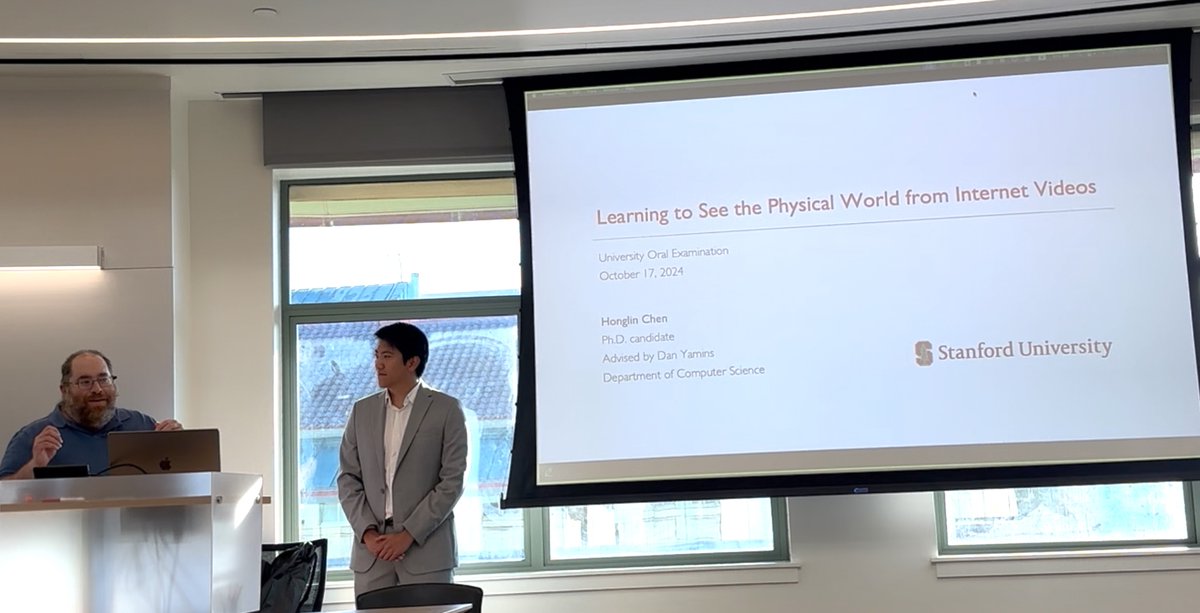

I recently defended my PhD and today marks my last day at Stanford. I am beyond grateful to my advisor @dyamins for his invaluable guidance and mentorship throughout this journey. I also want to thank my committee, @nickhaber @jiajunwu_cs @GordonWetzstein @judyefan, for their support.

17

5

229

@HonglinChen_ @dyamins @nickhaber @jiajunwu_cs @GordonWetzstein @judyefan @NeuroAILab @StanfordAILab Thank you, Honglin! 😄

0

0

1

@GretaTuckute @mitbrainandcog @ev_fedorenko @Nancy_Kanwisher @JoshHMcDermott @yoonrkim @mcgovernmit Congratulations, Greta!

1

0

2

@eshedmargalit @dyamins @nickhaber @jiajunwu_cs @GordonWetzstein @judyefan @NeuroAILab @StanfordAILab Thank you, Eshed!

0

0

0

@recursus @dyamins @nickhaber @jiajunwu_cs @GordonWetzstein @judyefan @NeuroAILab @StanfordAILab Thank you, Dan!

0

0

0

@aran_nayebi @dyamins @nickhaber @jiajunwu_cs @GordonWetzstein @judyefan @NeuroAILab @StanfordAILab Thank you, Aran!!

0

0

0

@dyamins @nickhaber @jiajunwu_cs @GordonWetzstein @judyefan @NeuroAILab @StanfordAILab as well as my awesome collaborators, especially @Rahul_Venkatesh, @KlemenKotar, @Koven_Yu, Wanhee Lee, and Kevin Feigelis.

0

0

4

@dyamins @nickhaber @jiajunwu_cs @GordonWetzstein @judyefan @NeuroAILab @StanfordAILab Huge thanks to senior PhDs, postdocs, and alumni in the lab, @recursus, @ChengxuZhuang, @aran_nayebi, @tylerraye, @sstj389, Damian Mrowca, and Eli Wang, for their support over the years.

1

0

5

RT @zhang_yunzhi: Accurate and controllable scene generation has been difficult with natural language alone. You instead need a language f…

0

64

0

@LakeBrenden Thank you for your interest in our work, Brenden! Yes, first-person video data is definitely on our roadmap moving forward.

0

0

0

Attending #ECCV2024 in Milan? Stop by poster 260 to see our recent work on understanding physical dynamics with world modeling! Unfortunately, I cannot be there due to visa issues, but my amazing collaborator @Rahul_Venkatesh will be there - stop by and chat with him!

Excited to present our #ECCV2024 paper on “Understanding Physical Dynamics with Counterfactual World Modeling” with @honglin_c, Kevin Feigelis, @recursus and @dyamins. Come by poster 260 at the exhibition area from 4:30 to 6:30pm today @eccvconf. TLDR: We introduce Counterfactual World Modeling (CWM), a visual world model that can be prompted to extract zero-shot vision structures such as keypoints, optical flow and segmentation. We demonstrate that these structures are useful for physical dynamics understanding, achieving state-of-the-art performance on the Physion intuitive physics benchmark. In our paper, we also discuss how CWM builds up scene understanding capabilities analogous to Pearl’s ladder of causation. We hope this will help provide a path towards a foundation model of vision with a causal understanding of the world. @yudapearl We’re eager to see others build on top of our work. Code, models and data can be found in the links below. Project page: Paper: GitHub code: Hugging Face demo: 1/🧵

0

2

16

RT @tylerraye: do large-scale vision models represent the 3D structure of objects? excited to share our benchmark: multiview object consis…

0

89

0

RT @mjlbach: I'm super proud of the team @hedra_labs. This was the last model trained on our first gen architecture. The stylize feature is…

0

16

0

RT @Koven_Yu: PhysDreamer has been accepted by ECCV with *Oral* presentation🌹🎉. Check out Tianyuan @tianyuanzhang99 's wonderful introducti…

0

14

0

RT @cogphilosopher: Excited to give a talk on our work (w/ @jvrsgsty @nayebi @luosha @dyamins) on inter-animal transforms at the @CogCompNe…

0

5

0

Thrilled to present our work on Counterfactual World Modeling @CogCompNeuro. Join us this afternoon at poster B109 for more exciting results. #CCN2024

Excited to present at #CCN2024! Join me, @honglin_c and @dyamins today at 1:30-3:30 (B109) for our poster: "Climbing the Ladder of Causation with Counterfactual World Modeling". We build a visual world model with capabilities analogous to Pearl's Ladder of Causation @yudapearl

0

3

10

RT @ChengxuZhuang: Two papers! Can visual grounding help LMs learn more efficiently? 1. We show that algs like CLIP don't learn language b…

0

20

0

RT @KyleSargentAI: I’m really excited to finally share our new paper “ZeroNVS: Zero-shot 360-degree View Synthesis from a Single Real Image…

0

55

0