tyler bonnen

@tylerraye

Followers: 1K
Following: 3K
Statuses: 542

neuroscientist @berkeley_ai. NIH K00 + UC Presidential Postdoctoral Fellow. he/him

Unceded Muwekma Ohlone Land

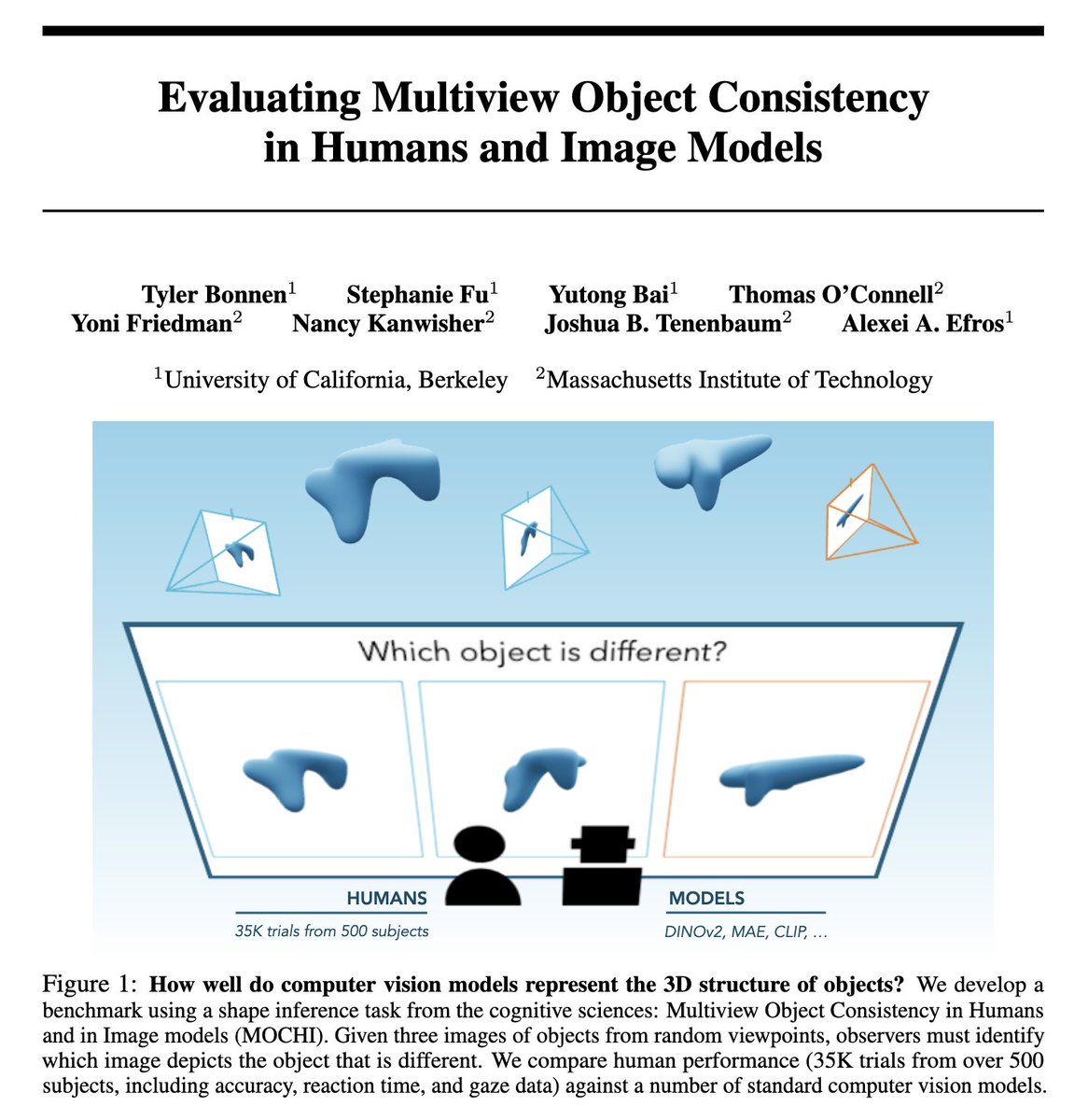

Joined August 2020

do large-scale vision models represent the 3D structure of objects? excited to share our benchmark: multiview object consistency in humans and image models (MOCHI) with @xkungfu @YutongBAI1002 @thomaspocon @_yonifriedman @Nancy_Kanwisher Josh Tenenbaum and Alexei Efros 1/👀

@GretaTuckute @mitbrainandcog @ev_fedorenko @Nancy_Kanwisher @JoshHMcDermott @yoonrkim @mcgovernmit congratulations 🥳 and so excited to hear about what's coming next ✨

i'm in vancouver for #NeurIPS2024 presenting our 3D shape inference benchmark tomorrow! stop by poster #1210 at 4:30 on friday if you're interested, and if you'd like to talk about neuro-ai, human cognition, or suggest nearby hikes, feel free to reach out!

happy to share that we'll be presenting this work at neurips 2024! 🥳 some *surprising* updates coming for the results soon 👀 but all the code/images/data are already available at project page: 🤗: code:

@lukas_mut @xkungfu @YutongBAI1002 @thomaspocon @_yonifriedman @Nancy_Kanwisher perfect! good luck with your submission

@abhinav1kumar i'd guess the ordering relates to different readouts: they use a dense multi-scale decoder while we're using a lightweight classifier. CLIPs are probably better suited for our lightweight readouts than MAEs. but curious what @_mbanani thinks about this!

@StphTphsn1 @xkungfu @YutongBAI1002 @thomaspocon @_yonifriedman @Nancy_Kanwisher thanks Stephane! and of course!!! always happy to point people towards your work; we are some of the very few people who are thinking about how temporal dynamics/processing time relate to human/model mis-alignment ✨

@mariam_s_aly @xkungfu @YutongBAI1002 @thomaspocon @_yonifriedman @Nancy_Kanwisher ah, thank you Mariam!!! the acronym took a while 😅

@recursus @xkungfu @YutongBAI1002 @thomaspocon @_yonifriedman @Nancy_Kanwisher thanks Dan—would love to know what you think!
