Florian Tramèr

@florian_tramer

Followers: 4,673

Following: 210

Media: 87

Statuses: 830

Assistant professor of computer science at ETH Zürich. Interested in Security, Privacy and Machine Learning

Zürich

Joined October 2019

Current algorithms for training neural nets with differential privacy greatly hurt model accuracy.

Can we do better? Yes!

With

@danboneh

we show how to get better private models by...not using deep learning!

Paper:

Code:

7

40

262

I'm excited at the prospect of creating a great new research group focused on ML Security & Privacy at ETHZ next Fall.

I have open positions for PhD students and Postdocs, so if you're interested please reach out!

More info here:

7

36

204

I'm super excited to finally start

@CSatETH

after a wonderful year at Google.

If you're interested in joining my first great "batch" of PhD students

@edoardo_debe

@dpaleka

as we build our group on ML privacy & security, please apply for a PhD or postdoc:

We are pleased to welcome

@florian_tramer

in his new role as Tenure Track Assistant Professor. He heads the Computer Security and Privacy Group at the Institute for Information Security. Read more:

@ETH_en

@EPFL_en

@Stanford

@Google_CH

#ML

#security

1

2

47

10

19

190

I'm thrilled to receive the best paper award at the

#ICML2021

workshop on Prospects and Perils of Adversarial ML

paper:

video:

poster: 11:30-12:30 EST

TLDR: detecting adversarial examples isn't much easier than classifying them

2

14

181

We introduce 💉Truth Serums💉, a new threat for ML models

We show an attacker can poison a model's training set to significantly leak private data of other users

with

@rzshokri

@10BSanJoaquinA

@sanghyun_hong

Hoang Le, Matthew Jagielski & Nicholas Carlini

3

35

159

I'm looking for PhD students or postdocs to sign a letter supporting me in case I get fired as CEO of my lab.

If that sounds fun, consider applying! (we might also do cool research)

If you miss the AI Center deadline, you can apply to my group directly:

9

15

133

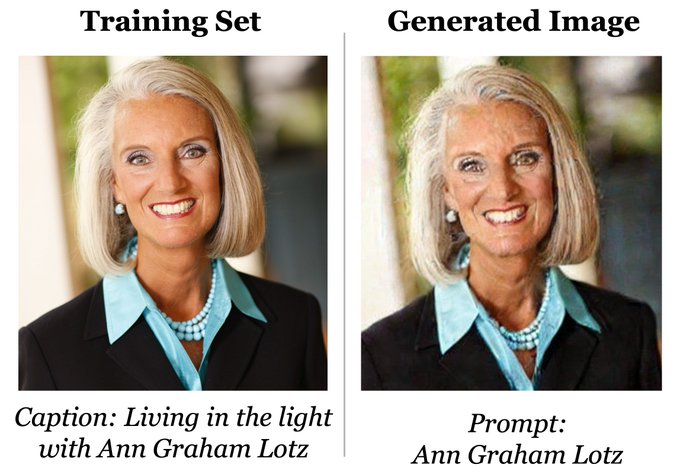

There are active discussions whether generative AI models like Stable Diffusion create "new" images or merely "copy and mix" pieces and styles of their training data.

In a new paper, we show that sometimes Stable Diffusion and Google's Imagen simply copy *entire images*!

11

15

123

Does GPT-2 know your phone number?

With

@Eric_Wallace_

,

@mcjagielski

,

@adversariel

, we wrote a blog post on problematic data memorization in large language models, and the potential implications for privacy and copyright law.

blog:

2

41

113

I'm thrilled to start Invariant Labs with amazing colleagues from

@CSatETH

.

Our ambition is to build AI agents that solve challenging tasks securely and reliably.

Since most of my research has focused on *breaking* ML I'm excited to apply this knowledge to build better systems!

We are delighted to announce Invariant Labs ()! Our mission is to make AI agents secure and reliable! It is founded by

@mvechev

,

@mbalunovic

,

@lbeurerkellner

,

@marc_r_fischer

,

@florian_tramer

and builds on years of experience in industry and academia.

4

7

65

1

7

116

Thrilled to receive an award for our position paper on private learning with public pretraining with Nicholas Carlini &

@thegautamkamath

I'm presenting this work today

@icmlconf

at 11am (hall A1)

Come say hi at the talk or poster session if you're interested in private learning!

9

3

110

*laughs in machine learning*

1

5

108

Ridiculously poor organization skills from

@UEFAcom

to schedule a champions league semi-final in the middle of a NeurIPS deadline

3

6

106

Our

@satml_conf

LLM Capture-the-flag is now live!

Can you find successful defenses and attacks for prompt injection?

3

29

105

@Ceetar

Just iterate over meetings and send emails is indeed fine.

But you have *zero* guarantee that this is what the LLM will do, or that it won't suddenly do something different based on the data it ingests along the way.

2

2

99

We looked at Stable Diffusion's safety filter

(our paper got blocked by arXiv's own safety filter...)

The filter only uses "hashes" of sensitive topics; we recover these with a dictionary attack

We find it filters for 17 nudity concepts & tunes the severity for child depictions

Stable Diffusion has a safety filter blocking “harmful” images by default. The filter is obfuscated -- how does it work?

We reverse engineer the hidden sauce!

Joint work

@Javi_Rando

,

@davlindner

,

@ohlennart

,

@florian_tramer

:

"Red-Teaming the Stable Diffusion Safety Filter" 🧵

9

65

400

2

15

97

After nearly 1 year

@ETH_en

, our Secure and Private AI (SPY) Lab finally has a website, and a first blog post!

I wrote some thoughts on what adversarial examples have in common with attacks on language models like ChatGPT.

TLDR: surprisingly not much!

1

16

90

Very excited about this work on evaluating ML models when ground truth is unknown (e.g., when models are superhuman, or simply when humans are bad at the task)

We argue that when accuracy of individual decisions is hard to assess, we should look for "logic bugs" across decisions

How to evaluate superhuman models without ground truth? How do we know if the model is wrong or lying, if we can't know the correct answer?

Test whether the AI's outputs paint a consistent picture of the world!

w/

@LukasFluri_

@florian_tramer

(1/14)

8

32

189

4

8

88

@moo9000

Yeah and a zero-day in windows is a huge issue...

Now imagine an "operating system" (the LLM) with infinite zero-days (you just have to find the right way to ask nicely) and no clear mitigation path

1

2

80

Web-scale facial recognition is getting scarily good (see )

Popular tools like (500'000+ downloads!) fight back using adversarial examples.

With

@evanidixit

, we argue that this is hopeless (and dangerous)!

4

19

78

@Ceetar

The LLM gets to "decide" which APIs to call (and their inputs) and that's just fundamentally different and less secure than typical software.

You simply have no guarantee that what you tell the LLM to do (how to chain APIs) won't get overwritten by API results.

5

1

73

@laidacviet

It's not executing code per se. It's just the LLM calling other APIs. That's what it's supposed to do.

But with even a tiny bit of functionality (as in the example in my tweet), chaining APIs the wrong way is a huge security or privacy risk

4

1

72

Paraphrasing my PhD advisor

@danboneh

: "security researchers will never be out of a job"

Why? Advances in security always lag behind capabilities ("move fast and break things").

So for better or worse, ML security/privacy/safety is a great area to work in right now

0

11

67

I think we have to parse this as:

"We're launching a team to forecast and protect against [AI risk led by

@aleks_madry

]"

Aleks, which side are you on??? 🤣

we are launching a new preparedness team to evaluate, forecast, and protect against AI risk led by

@aleks_madry

.

we aim to set a new high-water mark for quantitative, evidence-based work.

124

146

1K

3

4

66

@jhasomesh

Write a paper claiming idea Y as novel and publish it.

Wait for the authors of X to send you an angry email.

1

1

54

Proud of Edoardo's first PhD project!

We look at black-box adversarial examples, and ask if existing attacks optimize for the right metric (spoiler alert: no).

We then set out to design attacks that reflect the true costs of attacking real models, and provide a new benchmark.

1

4

52

Tired of having to track arxiv, twitter, discord, etc to learn of the newest LLM vulnerabilities?

@mbalunovic

,

@lbeurerkellner

,

@marc_r_fischer

and

@a_yukh

are too!

So they built this cool project (w. advice from

@mvechev

& me) to track it all in one place.

Contributions welcome!

0

7

51

Interested in finding out how to break "provably secure" defenses against adversarial examples?

Come chat at

#ICML2020

tomorrow (8AM & 8PM PDT):

w.

@JensBehrmann

, Nicholas Carlini,

@NicolasPapernot

,

@jh_jacobsen

0

8

51

I'll push back on this :)

When tools that promise to protect user data are deployed, publicized, and get traction, then it isn't just "research as usual" when they are later broken.

Data protection is a one-way street!

Users whose data failed to be protected don't get a 2nd shot.

3

4

50

Oh wow, if only we could have predicted that plugins were going to be a security nightmare!

I wonder if some people still think this will be "trivial" to fix...

1

14

47

Best example I've seen yet that worrying about adversarial examples for self driving cars is ridiculously overkill.

The invariant "stop at intersection if you see a stop sign" is way too simplistic to begin with.

See

@catherineols

's great post on this:

@karpathy

@elonmusk

@DirtyTesla

here is a fun edge case. My car kept slamming on the brakes in this area with no stop sign. After a few drives I noticed the billboard.

75

188

4K

2

8

46

@imjliao

Yes, that was

@random_walker

.

The simple truth is we don't know how to prevent these attacks fully

1

0

46

At 12pm PST I'll present our

@USENIXSecurity

paper on side-channel attacks on anonymous transactions

w.

@danboneh

,

@kennyog

We show how to de-anonymize transactions in Zcash

@ElectricCoinCo

&

@monero

(attacks disclosed & fixed last year)

video & slides:

0

14

45

Who could have seen that coming? 🤔

@mmitchell_ai

Here is proof GitHub CoPilot, which I believe is based on GPT-3, is both picking up on AND REPRODUCING my personally identifying information (PII) on the machine of another researcher in precisely the same overall research area as me: Robotics with AI.

9

174

496

0

5

44

This defense's evaluation is so clearly and obviously wrong that I think it didn't even merit a break.

It's weird that no S&P reviewers caught it.

- the defense claims robustness to attacks that change 100% of each pixel

- the defense accuracy sometimes goes *up* under attack...

1

2

43

@sweis

you could try the trick we introduced here:

Ask the model to repeat a chunk of code you think might be from the leak, and then do the same thing with the memorization filter enabled to see if it gets filtered out.

0

1

42

Cool new

@NeurIPSConf

workshop: "New in ML" offers mentorship to authors who have not yet published at NeurIPS:

Submit by Oct 15 for reviews and possibly mentorship by leaders in the field, Yoshua Bengio,

@hugo_larochelle

,

@_beenkim

,

@tdietterich

and more

1

14

39

Heeey not fair!

I was told my paper was accepted.

@bremen79

That was an accident and the outcomes are not final. I think they are not visible anymore. A couple of more hours!! Thank you!

4

0

29

2

1

39

My ScAINet talk on recurring issues with adversarial examples evaluations is online

work w.

@wielandbr

,

@aleks_madry

, N. Carlini

I call for broken defenses to be publicly amended (same as fixing wrong proofs in theory papers)

Happy to hear thoughts on this!

2

2

37

Javi did some cool work on poisoning RLHF (his master's thesis!)

We show RLHF enables powerful backdoors: a secret "sudo" trigger that jailbreaks the model.

Luckily, this requires poisoning lots of data.

We also launched a competition to find the backdoors

1

4

37

@iclr_conf

what's the point of enforcing the same 9-page limit for camera-ready papers as for submissions?

Including reviewer comments + author block, every paper likely ends up at >9.5 pages.

Can't imagine how many collective hours will be wasted trimming down that content...

1

0

38

@lreyzin

I'd much rather have 100 famous professors put their names on 100 papers they didn't contribute to, than have one undergrad author removed from a paper in order to pad someone's h-frac score

1

0

37

Interested in looking for memorized training data in GPT-2 or other LMs?

I've released some code to get started here:

And a list of ~50 weird things we extracted from GPT-2 here:

If you find other fun stuff, please let me know!

Does GPT-2 know your phone number?

With

@Eric_Wallace_

,

@mcjagielski

,

@adversariel

, we wrote a blog post on problematic data memorization in large language models, and the potential implications for privacy and copyright law.

blog:

2

41

113

0

10

33

My job offer from

@CSatETH

went into gmail spam 🙃

1

0

33

Is there an app with a worse login experience than

@SlackHQ

?

I lost all my workspaces after the app crashed. After signing back in with 4 different emails, I still couldn't recover them all.

Why can't I have *1* account with all my workspaces that syncs correctly across devices?

0

0

32

Vulnerability disclosures may become common in ML.

So ML conferences may need to set standards.

Eg security confs allow submitting during the disclosure period (reviewers & authors keep the paper secret up to the conf)

Not sure this would work in ML especially with openreview...

1

4

32

We aim for this paper to give tutorial-like illustrations of how to design an adaptive attack on an adversarial examples defense.

Each section describes our *full* approach for each defense: from our initial (possibly failed) hypotheses and experiments to a final attack.

We broke 13 recent peer-reviewed defenses against adversarial attacks. Most defenses released code + weights & use adaptive attacks! But adaptive evaluations are still incomplete & we analyse how to improve. w/

@florian_tramer

Nicholas Carlini

@aleks_madry

3

33

150

2

8

31

Together with

@AlinaMOprea

we invite nominations for the 2024 Caspar Bowden Award for Outstanding Research in Privacy Enhancing Technologies!

@PET_Symposium

Please nominate your favorite privacy papers from the past 2 years by **May 10**

Info and rules:

0

13

31

@kennyog

@danboneh

Thanks to

@Monero

for the very positive response and prompt reaction (you can follow the disclosure process here: )

Also, thanks for the generous bounty!

#my_first_crypto

0

9

29

This is my favorite result from a recent paper with

@JensBehrmann

, Nicholas Carlini,

@NicolasPapernot

,

@jh_jacobsen

Models with increased robustness to adversarial examples ignore some semantic features, and are vulnerable to a "reverse" attack

0

10

29

Michael and Jie did an amazing job on their first PhD project, by finding and fixing common pitfalls in empirical ML privacy evaluations.

It turns out, if you evaluate things properly, DP-SGD is also the best *heuristic* defense when you instantiate it with large epsilon values.

0

4

29

A cute take on university rankings. How amazing if institutions were optimizing for this instead...

Rough point of comparison: PhDs at

@ETH_en

(in CS) take home ~2x as much as the leader in the US list.

(I don't have a living cost estimate for Zurich, but likely similar to CA)

2

2

26

And "doubly-thrilled" that our paper on stealing part of ChatGPT also got awarded!

(Joint with my student

@dpaleka

and many others)

The paper will be presented on Wednesday at 16:30 (hall A2), and in poster session 4.

0

1

27

@aleks_madry

@thegautamkamath

@TheDailyShow

@Trevornoah

@OpenAI

@miramurati

@MIT

@sanghyun_hong

No need to rebuild a diffusion model, just run it twice! (+ a little face blur)

Now, one might make attacks robust to this, or more destructive.

But this is the crux of our paper linked by Gautam: you get 1 attack attempt to fool all (future) defenses.

I think this is hard.

2

1

26

I love doing nothing and still getting SOTA

0

4

26

Looking forward to this!

I changed my talk's title slightly:

"Why you should treat your ML defense like a theorem"

If this piques your interest, see you Thursday!

Join us for our event on Machine Learning Security! Thursday, July 7th, 2022, at 15:00 CEST.

Invited talk by Florian Tramèr

@florian_tramer

(Google, ETHZ).

Registration:

YT Live:

#adversarial

#ml

#ai

#security

#mlsec

1

2

18

1

3

26

I had written down some thoughts on this here:

But I don't know if defending against text jailbreaks will be easier than for images.

Despite a decade of research, we don't know how to make *any* models adversarially robust.

0

1

26

We're releasing a realistic evaluation framework for prompt injection attacks on LLM Agents.

There's work to do for attacks and defenses alike:

- can we build stronger, more principled attacks beyond trial-and-error?

- which agent designs best trade off utility and security?

0

6

25

Nice thread about possible copyright implications of data memorization in diffusion models!

Regarding Getty, our work () found a few examples of copied images that Getty attributes to specific photographers, e.g., this one by

@iangav

2

3

24

This position paper calls for a more nuanced discussion on using "public" data to improve "private" machine learning.

We argue that current work may be overselling both the privacy and utility of this approach.

We deliberately took a fairly contrarian stance. Comments welcome!

🧵New paper w Nicholas Carlini &

@florian_tramer

: "Considerations for Differentially Private Learning with Large-Scale Public Pretraining."

We critique the increasingly popular use of large-scale public pretraining in private ML.

Comments welcome.

1/n

4

20

148

0

2

22

@imjliao

@random_walker

Maybe? I don't know, no one has tried. A decade of lessons learned in adversarial ML suggests we have no idea how to do this in a way that guarantees security.

0

1

24

Finally got to write a hyped² paper on blockchain and ML!

New paper! "SquirRL: Automating Attack Discovery on Blockchain Incentive Mechanisms with Deep Reinforcement Learning" by Charlie Hou, Mingxun Zhou,

@iseriohn42

, myself,

@florian_tramer

,

@giuliacfanti

, and

@AriJuels

.

Turns out, selfish mining is hard!

0

16

49

1

0

24

Apart from the author order algorithm, I think this paper makes a nice contribution to discussions on copyright infringement in generative models.

As we show, trying to prevent a model from outputting *exact* copies of training data (e.g. as in Github Copilot) is insufficient

Nice paper (ft

@daphneipp

@florian_tramer

@katherine1ee

+more) that argues looking at verbatim memorization is inadequate when considering ML privacy risks

It also has the most interesting author ordering I've ever seen. So much for alphabetical or random

1

4

43

0

2

23