Atila (@atiorh)

Followers: 2,165 · Following: 213 · Media: 34 · Statuses: 354

founder @argmaxinc 🥷 ex-Apple

San Francisco, CA

Joined July 2016


Pinned Tweet

Delighted to release our first project @argmaxinc. Real-time Whisper inference on iPhone and Mac! Let us know what you think ❤️🔥

Exciting updates to #stablediffusion with Core ML!

- 6-bit weight compression that brings the model to just under 1 GB
- Up to 30% improved Neural Engine performance
- New benchmarks on iPhone, iPad and Macs
- Multilingual system text encoder support
- ControlNet

🧵
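The 6-bit compression above most likely refers to Core ML weight palettization (a later post mentions mixed-bit palettization recipes). Below is a minimal sketch of the idea, assuming plain 1-D k-means clustering. Each weight is snapped to one of 2^6 = 64 shared values, so storage drops to a 6-bit index per weight plus a small lookup table. This is an illustrative stand-in, not the coremltools implementation, and `palettize` / `depalettize` are hypothetical names.

```python
import random


def palettize(weights, n_bits=6, iters=10):
    """Cluster a flat list of weights into a palette of 2**n_bits shared values.

    Returns (lut, indices): weight i is approximated by lut[indices[i]], so storage
    is n_bits per weight plus one small lookup table.
    """
    k = 2 ** n_bits
    ordered = sorted(weights)
    # Initialize the palette at evenly spaced quantiles of the weight distribution.
    lut = [ordered[i * (len(ordered) - 1) // max(k - 1, 1)] for i in range(k)]
    indices = [0] * len(weights)
    for _ in range(iters):
        # Assignment step: index of the nearest palette entry for every weight.
        for i, w in enumerate(weights):
            indices[i] = min(range(k), key=lambda c: abs(w - lut[c]))
        # Update step: move each palette entry to the mean of its assigned weights.
        sums, counts = [0.0] * k, [0] * k
        for i, w in enumerate(weights):
            sums[indices[i]] += w
            counts[indices[i]] += 1
        lut = [sums[c] / counts[c] if counts[c] else lut[c] for c in range(k)]
    return lut, indices


def depalettize(lut, indices):
    """Reconstruct approximate weights from the lookup table and indices."""
    return [lut[i] for i in indices]


# Usage on synthetic Gaussian "weights" (not real model weights).
rng = random.Random(0)
w = [rng.gauss(0.0, 0.02) for _ in range(1000)]
lut, idx = palettize(w, n_bits=6)
w_hat = depalettize(lut, idx)
mse = sum((a - b) ** 2 for a, b in zip(w, w_hat)) / len(w)
variance = sum(v ** 2 for v in w) / len(w)  # mean is ~0 by construction
print(f"palette size={len(lut)}, relative MSE={mse / variance:.5f}")
```

Real toolchains do this per layer (or per group of weights) and may choose the bit width per layer, which is what a mixed-bit recipe specifies.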

As part of #WWDC22, we are open-sourcing a reference implementation of the Transformer architecture optimized for the Apple Neural Engine (ANE)!

(1/n) 🧵

Delighted to share #stablediffusion with Core ML on Apple Silicon, built on top of @huggingface diffusers! 🧵

My takeaways from Apple's “LLM in a flash” (1/n)

35 TFLOPS of ML compute in your pocket (#iPhone15Pro)! On-device inference is getting interesting... #AppleEvent

Apple Intelligence hits the market in beta today: a pretty impressive 2.6B-parameter on-device LLM running on the Neural Engine, compressed down to ~1 GB. It consumes well below 10 W.

Congrats to my former teammates & colleagues on landing this!

Tech report is also out:
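Here is a quick back-of-envelope check on the numbers above. It assumes 1 GB = 10^9 bytes and that the ~1 GB covers the weights alone. Packing 2.6B parameters into ~1 GB works out to roughly 3 bits per weight on average, about 5x smaller than a 16-bit baseline. Because that average is below 4 bits, at least some weights must be stored with fewer than 4 bits. The helper name is hypothetical.

```python
def avg_bits_per_weight(size_bytes, n_params):
    """Average storage bits per parameter for a model of a given size."""
    return size_bytes * 8 / n_params


N_PARAMS = 2.6e9     # parameter count quoted in the post
COMPRESSED_GB = 1.0  # "~1GB", taken here as 10**9 bytes (an assumption)

bits = avg_bits_per_weight(COMPRESSED_GB * 1e9, N_PARAMS)
fp16_gb = N_PARAMS * 16 / 8 / 1e9  # same model stored as 16-bit weights
ratio = fp16_gb / COMPRESSED_GB
print(f"{bits:.2f} bits/weight on average; fp16 would be {fp16_gb:.1f} GB ({ratio:.1f}x larger)")
```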

Thanks @Apple

Persimmon-8b LLM (@AdeptAILabs) has ~95% activation sparsity in many of its layers, which is crazy! Here is a gist that prints some stats. Most zeros are shared across tokens too:

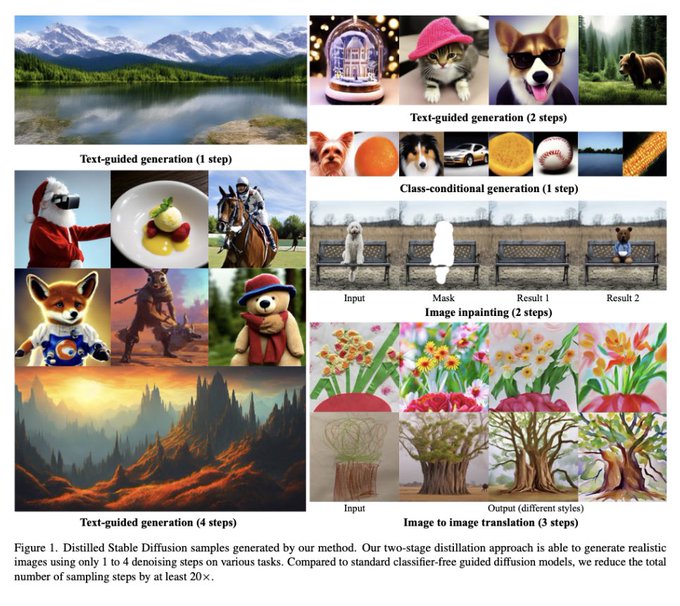
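The gist itself is not reproduced here. Below is a minimal sketch of the kind of statistics it describes. It assumes one layer's activations have already been captured (for example with a PyTorch forward hook) as a tokens × hidden matrix of floats. "Shared across tokens" is interpreted as hidden units that are zero for every token in the sequence. `sparsity_stats` and its output keys are hypothetical.

```python
def sparsity_stats(acts, eps=0.0):
    """Zero statistics for one layer's activations.

    acts: list of per-token activation vectors (tokens x hidden units).
    eps: values with |v| <= eps count as zero.
    """
    n_tokens, hidden = len(acts), len(acts[0])
    zero = [[abs(v) <= eps for v in row] for row in acts]
    total_zeros = sum(sum(row) for row in zero)
    # Hidden units that are zero for every token in the sequence.
    shared_units = sum(
        all(zero[t][j] for t in range(n_tokens)) for j in range(hidden)
    )
    return {
        "frac_zero": total_zeros / (n_tokens * hidden),
        "frac_shared_units": shared_units / hidden,
        # Fraction of all zeros that fall in always-zero units.
        "shared_share_of_zeros": (
            shared_units * n_tokens / total_zeros if total_zeros else 0.0
        ),
    }


# Toy example: 3 tokens x 4 hidden units (made-up values).
acts = [
    [0.0, 1.5, 0.0, 0.0],
    [0.0, 0.0, 2.0, 0.0],
    [0.0, 0.3, 0.0, 0.0],
]
stats = sparsity_stats(acts)
print(stats)
```

When most zeros land in always-zero units, those rows of the following projection can be skipped for the whole sequence. That is the practical payoff of zeros being shared across tokens.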

For distilled #StableDiffusion2, which requires 1 to 4 iterations instead of 50, the same M2 device should generate an image in well under 1 second:

Distilled #StableDiffusion2

Over 20x speed up, convergence in 1-4 steps

We already reduced time to gen 50 steps from 5.6s to 0.9s working with @nvidia

Paper drops shortly, will link, model soon

Will be presented @NeurIPS by @chenlin_meng & @robrombach

Interesting eh 🙃

If you are excited about this field and would like to work on applied R&D in generative models, send me a note or come to the Apple booth at #NeurIPS22 to chat with us!

In our research article case study, the @huggingface DistilBERT model is up to 10x faster with 14x lower peak memory consumption after our optimizations are applied, while consuming as little as 0.07 W of power.

Are you excited about #generativeai? We are looking for experts in this space to join our applied ML R&D team at @Apple! You will be inventing and shipping the next generation of these core technologies with a focused team. Here is the link to apply:

The first podcast about on-device inference and our work at @argmaxinc, enjoy!

Apple Podcasts:

Atila Orhon is the founder of @argmaxinc and was previously at @Apple. He joins the show with @seanfalconer to talk about scaling ML models to run on commodity hardware.

Grok-1 = 314B MoE

Mac Studio with M2 Ultra should be able to host this beast in 4-bit! @awnihannun 👀
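Here is the arithmetic behind the 4-bit claim, counting weights only. At 4 bits each, 314B parameters take about 157 GB. That fits under the 192 GB maximum unified memory of a Mac Studio with M2 Ultra, while 16-bit weights (~628 GB) would not. With an MoE, all experts have to stay resident even though only a subset runs for each token, so the total parameter count is what matters. This sketch ignores quantization scales, the KV cache, activations and OS overhead, so the real headroom is smaller than the ~35 GB it prints. The helper name is hypothetical.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Memory for the weights alone, in GB (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


GROK1_PARAMS = 314e9          # total parameters across all experts
M2_ULTRA_MAX_MEMORY_GB = 192  # largest unified-memory option for Mac Studio (M2 Ultra)

gb_4bit = weight_memory_gb(GROK1_PARAMS, 4)
gb_16bit = weight_memory_gb(GROK1_PARAMS, 16)
headroom_gb = M2_ULTRA_MAX_MEMORY_GB - gb_4bit
print(f"4-bit: {gb_4bit:.0f} GB, 16-bit: {gb_16bit:.0f} GB, headroom at 4-bit: {headroom_gb:.0f} GB")
```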

Apple is marketing M3 Max for "AI developers to work with even larger transformer models with billions of parameters": #AppleEvent

Happy to partner with @StabilityAI on their Stable Diffusion 3 Medium launch!

DiffusionKit now supports Stable Diffusion 3 Medium

MLX (Python) and Core ML (Swift) inference both work great on-device on Mac!

MLX:

Core ML:

Mac App: @huggingface Diffusers App (pending App Store review)

I ❤️🔥 Open + Diffusion + Transformer = Stable Diffusion 3

On-device Stable Diffusion 3

We are thrilled to partner with @StabilityAI for on-device inference of their latest flagship model!

We are building DiffusionKit, our multi-platform on-device inference framework for diffusion models. Given Argmax's roots in Apple, our first step

All mixed-bit palettization recipes, as well as some of the palettized Core ML models, are published on the @huggingface Hub by @pcuenq: . We are looking forward to the community's mixed-bit palettized (MBP) models in the coming weeks!

Check out the @huggingface blog by @pcuenq on Faster Stable Diffusion on Apple Silicon!

Finally, there are extremely useful features in this release, including:

- SDXL refiner Swift inference by @zachnagengast
- SDXL base Python inference by @HectorLopezPhD
- CUDA RNG in Swift by @liuliu
- Karras schedule for DPMSolver by @pcuenq

Great work from @Snap compressing Stable Diffusion to <2 bits! This significantly improves on the mixed-bit technique [1] we published last year to get results of this quality:

[1]

Bringing transformers to Swift! @pcuenq @huggingface

The models used for iOS deployment are hosted on the @huggingface Hub, but you can always export another model version locally by following the README instructions.

Congrats to friends

@FAL

! Even though we champion on-device inference

@argmaxinc

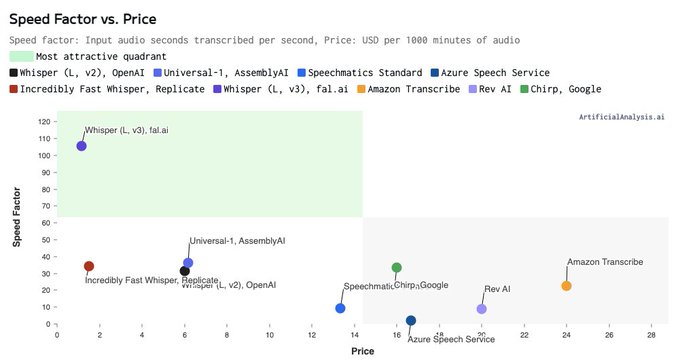

, we believe that server-side inference has a big role to play in a hybrid inference future

We are now benchmarking

@fal

's Speech to Text Whisper endpoint and it is setting new standards in Speed Factor and Price!

fal has raised the bar with a Speed Factor of 105 (105x real time), making it an attractive option for use-cases requiring fast transcription (meeting notes

8

6

40

0

2

16
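This sketch assumes Speed Factor is defined as audio duration divided by processing time, which is what "105x real time" implies. Under that definition the figure converts directly into wall-clock time, and a one-hour recording would take roughly 34 seconds. The post does not say whether network round trips are included. Both helper names are hypothetical.

```python
def speed_factor(audio_seconds, processing_seconds):
    """How many seconds of audio are transcribed per second of processing."""
    return audio_seconds / processing_seconds


def transcription_time(audio_seconds, factor):
    """Wall-clock time needed to transcribe `audio_seconds` at a given speed factor."""
    return audio_seconds / factor


one_hour = 3600.0
t = transcription_time(one_hour, 105)
print(f"1 hour of audio at 105x real time: ~{t:.1f} s")
```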

Dropping some minor improvements to @argmaxinc WhisperKit tomorrow :)

Let's build. ❤️🔥 @generalcatalyst 🤝 @argmaxinc

What does the future of compression techniques & on-device inference software look like?

Enter @argmaxinc.

We're thrilled to lead its $2.6M seed & welcome @atiorh, @bpkeene, @zachnagengast, & team to the GC family!

By @quentinclark & @AlexandreMomeni ↓

"Transformer models" on Apple Silicon were called out 3 times during the #WWDC23 keynote! In case you missed it 🧵

On-device inference is big in Japan 🇯🇵 If only it were clearer that WhisperAX is just a test app and that we want developers to use the underlying library 😅 @argmaxinc

Enjoy! ❤️🔥 We have been brewing this since the initial launch in February. This release is focused on streaming performance, better compression, and addressing developer feedback 🧵

We are actively hiring for a #GenerativeAI Applied Researcher! Feel free to DM me with pointers to exceptional past work 🙏

@pcuenq @zachnagengast @huggingface If this work is interesting to you, you should consider joining us:

Excited about this line of work from @cartesia_ai! @krandiash and team are focused on proving the value of state-space models by building best-in-class models themselves. Looking forward to the on-device implementation.

Fun fact: This demo is from a recent @theallinpod episode where @chamath talks about OpenAI's "microphone" (Whisper) hallucinating "Thank you for watching" given a silent input. In this release, we implemented a hallucination guardrail (h/t @_jongwook_kim):

Whisper distil-large-v3 from @huggingface also landed in this WhisperKit release! Compared to large-v3:

- 6x faster and 50% smaller
- Virtually the same evaluation metrics
- In our evals, slightly better than the OpenAI API

Test it in 2 mins:

- App:
- CLI:

swift-coreml-diffusers from @huggingface built on top of !

@danielgross A base M2 MacBook Air should perform roughly the same for this model on the Neural Engine.

@MaxWinebach If you want to leverage the Neural Engine, give a shot if you haven't used it already.

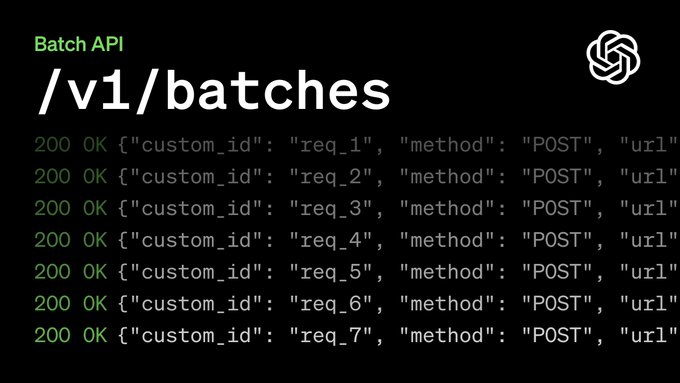

This is the economic sweet spot for server-side inference: operating on predictable, non-urgent workloads to achieve high utilization, albeit with high latency. Curious to see how much traffic this gets and which products pivot to leverage this differential pricing...

⚠️ Extremely important notice for pytorch-nightly users: the dependency chain was compromised: #pytorch

If distilled #StableDiffusion follows verbatim, negative prompts would be disallowed. On the other hand, #StableDiffusion2 seems to rely even more on negative prompts for best results 🤔 @hardmaru

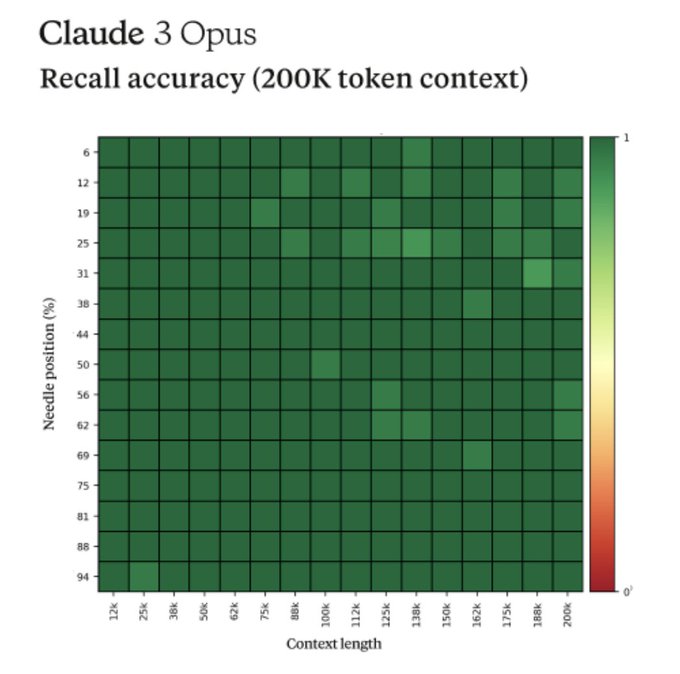

I don't want to contribute to the speculation around this Claude 3 result, but it reminds me of @karpathy's fun experiment: "Do LLMs know they are being evaluated?"

@zacharylipton @togelius I am curious about your thoughts on recent work in differentiable curriculum learning:

Our team (mainly @Arda_Okan97) compressed Whisper down to <1 GB (>3x smaller) without quality loss:

- Setup: LoRA fine-tuning with compressed weights
- Dataset: Random noise
- Loss: Distillation from the uncompressed model

4-bit matches 16-bit with Data-free QLoRA

QLoRA by @Tim_Dettmers et al. is a great technique for recovering quality in a heavily compressed model, but it generally requires a training dataset for fine-tuning. We (@Arda_Okan97) do not use a dataset and demonstrate that QLoRA works
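Below is a toy sketch of the data-free recipe listed above, on a single linear layer. The compressed weights stay frozen and a rank-2 LoRA adapter B·A is trained with plain SGD. The inputs are pure Gaussian noise, and the loss is the squared distance to the uncompressed layer's output. Two assumptions apply: a uniform round-to-nearest quantizer stands in for QLoRA's 4-bit format, and all helper names (`quantize`, `distill_lora`, `distill_error`) are hypothetical. This is not Argmax's training code.

```python
import random


def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def quantize(m, step):
    """Round every weight to the nearest multiple of `step` (toy low-bit stand-in)."""
    return [[round(v / step) * step for v in row] for row in m]


def distill_lora(teacher, base, rank=2, steps=3000, lr=0.01, seed=0):
    """Fit a LoRA adapter B @ A so that (base + B @ A) x matches teacher x.

    `base` (the compressed weights) is never updated; inputs are random noise.
    """
    rng = random.Random(seed)
    d_out, d_in = len(teacher), len(teacher[0])
    a = [[rng.gauss(0.0, d_in ** -0.5) for _ in range(d_in)] for _ in range(rank)]
    b = [[0.0] * rank for _ in range(d_out)]  # zero init: adapter starts as a no-op
    for _ in range(steps):
        x = [rng.gauss(0.0, 1.0) for _ in range(d_in)]  # data-free: noise input
        ax = matvec(a, x)
        pred = [s + u for s, u in zip(matvec(base, x), matvec(b, ax))]
        err = [p - t for p, t in zip(pred, matvec(teacher, x))]
        # Gradients of ||err||^2: dB = 2 err (Ax)^T and dA = 2 (B^T err) x^T,
        # both computed from the current B before either matrix is updated.
        bt_err = [sum(b[i][r] * err[i] for i in range(d_out)) for r in range(rank)]
        for i in range(d_out):
            for r in range(rank):
                b[i][r] -= lr * 2 * err[i] * ax[r]
        for r in range(rank):
            for j in range(d_in):
                a[r][j] -= lr * 2 * bt_err[r] * x[j]
    return a, b


def distill_error(teacher, base, a, b, inputs):
    """Mean squared distance between student and teacher outputs over `inputs`."""
    total = 0.0
    for x in inputs:
        pred = [s + u for s, u in zip(matvec(base, x), matvec(b, matvec(a, x)))]
        total += sum((p - t) ** 2 for p, t in zip(pred, matvec(teacher, x)))
    return total / len(inputs)


# Usage: a random 4x4 "teacher" layer, compressed to a coarse grid.
rng = random.Random(42)
d, rank = 4, 2
teacher = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(d)]
student = quantize(teacher, step=0.5)
eval_inputs = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(200)]
no_adapter = ([[0.0] * d for _ in range(rank)], [[0.0] * rank for _ in range(d)])
before = distill_error(teacher, student, *no_adapter, eval_inputs)
a, b = distill_lora(teacher, student, rank=rank)
after = distill_error(teacher, student, a, b, eval_inputs)
print(f"distillation error: {before:.4f} -> {after:.4f}")
```

In this toy setting the noise inputs are isotropic, so matching the teacher on them pushes B·A toward the best low-rank correction of the quantization error W - Q. That is one intuition for why no real dataset is needed here.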

Delighted to share one of the scene understanding technologies that we have built for the Camera! #AppleEvent #machinelearning

It was a privilege working in @ctnzr’s world-class team for 3 months back in 2017; I had exploding gradients in my career that summer! Regarding soft skills: I still prepare my prez based on his “research communication” principles.