Constantinos Daskalakis

@KonstDaskalakis

Followers

7K

Following

542

Media

5

Statuses

121

Scientist. Computer Science Professor @MIT. Studying Computation, and using it as a lens to study Game Theory, Economics, and Machine Intelligence.

Cambridge, MA

Joined November 2018

There are many algorithms for learning Ising models. Yet there is no sample-efficient and time-efficient one that works without assumptions, even for tree-structured models. We obtain the first such result in fresh work w/ @PanQinxuan.

1

18

154

Identity testing between high-dimensional distributions requires exponentially many samples in the dimension---aka good luck GANs targeting high-dimensional distributions. Joint work with @FeiziSoheil and M. Ding shows how to escape these lower bounds if the target is a Bayesnet!

Q: How to design a GAN for distributions on Bayesian networks? A: check out our paper introducing "SubAdditive GANs". Joint work with Constantinos Daskalakis @KonstDaskalakis and @umdcs student Mucong Ding, started at @SimonsInstitute.

4

18

90

Congratulations to my student, Dr. @NishanthDikkala, who successfully defended today, having done innovative and deep work on statistical inference using dependent data!

Congratulations to my academic brother Dr. Nishanth Dikkala (@NishanthDikkala) who just defended his thesis on statistical inference from dependent data! Another student lucky to be advised by Costis (@KonstDaskalakis)! Check out his work on his website:

1

0

91

Honored to give a lecture in memory of Paris Kanellakis!

Professor @KonstDaskalakis of @MIT will deliver the 19th annual Paris C. Kanellakis Memorial Lecture ("Learning from Censored and Dependent Data") at 4 PM on December 5 in CIT 368. Details at:

0

8

90

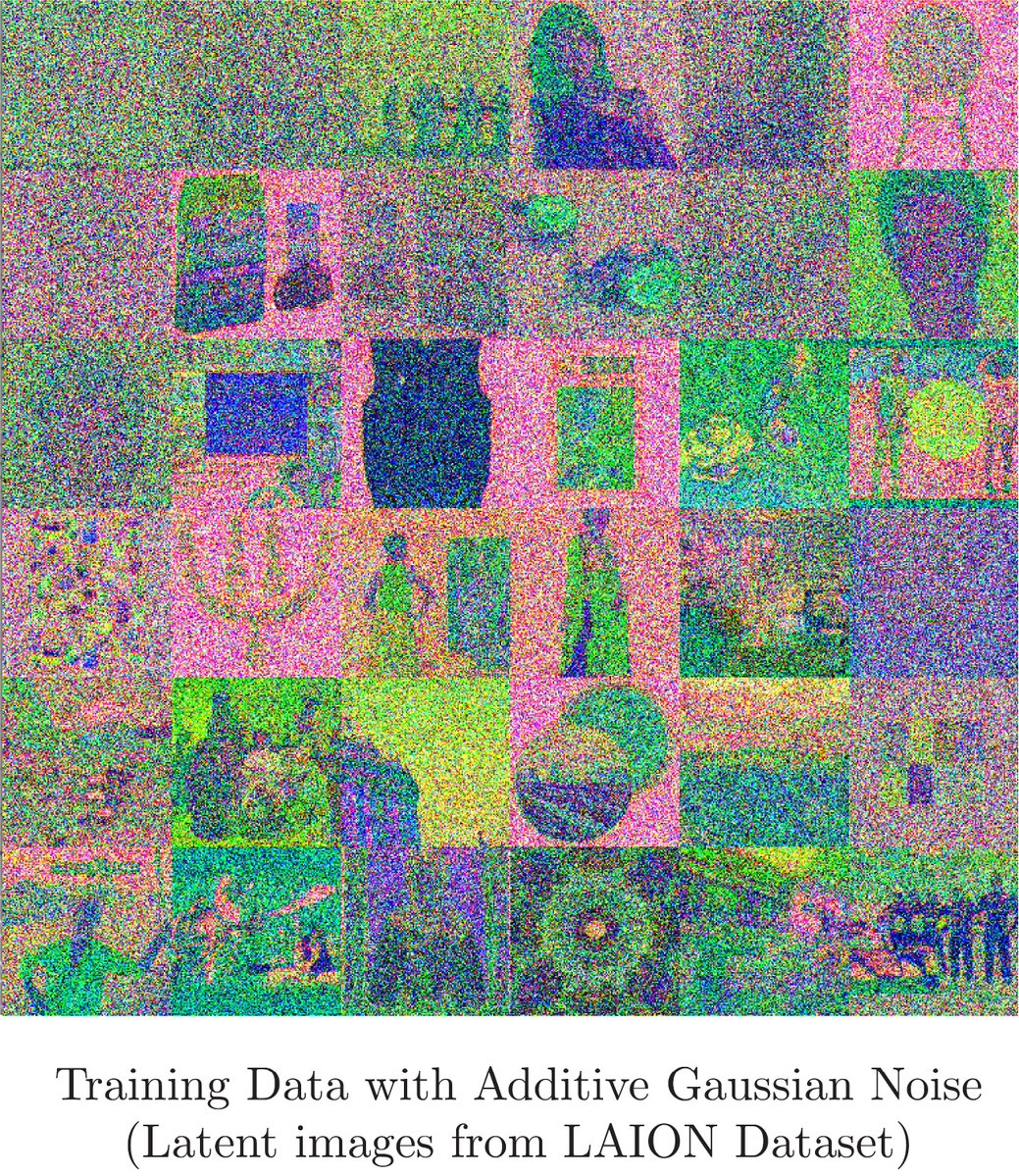

Can you train a generative model using only noisy data? If you can, this would alleviate the issue of training data memorization plaguing certain genAI models. In exciting work with @giannis_daras and @AlexGDimakis we show how to do this for diffusion-based generative models.

Consistent Diffusion Meets Tweedie. Our latest paper introduces an exact framework to train/finetune diffusion models like Stable Diffusion XL solely with noisy data. A year's worth of work: a breakthrough in reducing memorization, with implications for copyright 🧵

1

12

70

Congratulations to Manolis Zampetakis for this richly deserved honor!

The @AcmSIGecom Dissertation Award for 2020 goes to Manolis Zampetakis for his thesis "Statistics in High Dimensions without IID Samples: Truncated Statistics and Minimax Optimization" advised by @KonstDaskalakis at @MIT. Congratulations! More details:

2

3

68

Samples from high-dimensional distributions can be scarce or expensive to acquire. Can we meaningfully learn them from *one* sample?!? In new work w/ @YuvalDagan3, @NishanthDikkala, & Vardis Kandiros, we show how to learn Ising models given a single sample.

1

5

66

How fast does player regret grow in multi-player games? Standard no-regret learners give regret growing as √T in T rounds of interaction. Recent work w/ @MFishelson & @GolowichNoah brings this down to a near-optimal poly(log T)!

2

8

61

A great line-up for the July 13-14 FODSI workshop on ML for Algos! @AlexGDimakis, Yonina Eldar, @annadgoldie, @HeckelReinhard, Stephanie Jegelka, @tim_kraska, Benjamin Moseley, David Parkes, @AlgoSvensson, Tuomas Sandholm, @vsergei, Ellen Vitercik, David Woodruff!

1

18

63

Exciting work w/ @YuvalDagan3 @MFishelson @GolowichNoah on efficient algos for no-swap regret learning and, relatedly, correlated eq when the #actions is exponentially large/infinite. While classical works point in the opposite direction, we show that this is actually possible!

1

9

59

Congratulations Manoli! Proud of the beautiful work in this dissertation!

Congratulations to my academic brother Manolis Zampetakis on successfully defending his PhD thesis! The latest amazing student of @KonstDaskalakis. Huge turnout for his defense (larger than expected). Going to UC Berkeley for a postdoc next. Check him out:

2

3

59

How to use subadditivity properties of probability divergences in GANs to decrease the effective dimensionality of your problem? Check out our oral paper with @FeiziSoheil and M. Ding at AISTATS in a couple of hours!

Q: how can we improve the design of GANs if the underlying independence graph of variables (as a Bayes net or an MRF) is known? A: check out our paper, which will be presented as an oral talk @aistats_conf. Joint work with M. Ding and @KonstDaskalakis

0

12

55

While coarse correlated equilibria (CCE) are very tractable in one-shot games, @GolowichNoah, @KaiqingZhang and I show that, surprisingly, stationary (Markov) CCE are intractable in stochastic games, and thus in Multi-Agent Reinforcement Learning (MARL).

2

11

52

Great lineup of invited speakers at the NeurIPS workshop on the important interface between ML and Game Theory! Submit your interesting papers!

Game theory and deep learning workshop at NeurIPS! Invited talks: Éva Tardos! Asu Ozdaglar! David Balduzzi! Fei Fang! Plus more contributed talks, posters, panels and discussions. Submit your extended abstracts by September 16th!

0

5

38

Happy advisor moment! Congratulations to Matt Weinberg and the other fellows!

The @SloanFoundation fellows for 2020! Non-exhaustive list of some names I recognize in CS & Math: @mcarbin, Sanjam Garg, @zicokolter, Yin Tat Lee, @polikarn, Aaron Sidford, Matt Weinberg, Hao Huang, @weijie444, and Cynthia Vinzant. Congrats to all winners!

1

0

37

Proud of my brother's research!

Very proud and energized for 2025 that our paper on 🧠 molecular pathology in #PTSD and #MDD made it in #NIH Director’s 2024 #ScienceHighlights!

0

4

37

Looking forward to this event on Thursday!

June 20th in Athens 🇬🇷- the Lyceum Project: AI Ethics with Aristotle. With speakers including @alondra, @KonstDaskalakis, @yuvalshany1, @kmitsotakis, Josiah Ober, @mbrendan1, and @FotiniChristia. Places are rapidly filling up. Register now.

0

4

31

Standard no-regret learners, e.g. online GD, converge to equilibria of monotone games like the moon converges to the earth, i.e. their average converges, at a best achievable rate of 1/T. At what rate can their last-iterate converge? See 👇 & find @GolowichNoah at NeurIPS today.

New paper with Sarath Pattathil & Costis Daskalakis: We answer the question: at what rate can players' actions converge to equilibrium if each plays according to a no-regret algorithm in a smooth monotone game?

0

5

27
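The gap between average-iterate and last-iterate behavior mentioned in the tweet above can be seen in a toy example. The sketch below is only an illustration under assumptions of my own choosing, not the setting or method of the paper: it runs plain simultaneous gradient descent-ascent on the unconstrained bilinear game f(x, y) = x * y (equilibrium at the origin) with step size 1/√T. The function name `simultaneous_gda` and all parameter values are hypothetical.

```python
import math


def simultaneous_gda(x0, y0, steps, eta):
    """Run simultaneous gradient descent-ascent on f(x, y) = x * y.

    Returns the last iterate and the average of the iterates played.
    """
    x, y = x0, y0
    sum_x, sum_y = 0.0, 0.0
    for _ in range(steps):
        # Record the point played this round before updating.
        sum_x += x
        sum_y += y
        # x minimizes f (its gradient is y); y maximizes f (its gradient is x).
        x, y = x - eta * y, y + eta * x
    return (x, y), (sum_x / steps, sum_y / steps)


# Illustrative values only (assumptions, not taken from the paper).
steps = 10_000
eta = 1.0 / math.sqrt(steps)
last, avg = simultaneous_gda(1.0, 1.0, steps, eta)

# Distance from the equilibrium (0, 0) for each notion of convergence.
last_norm = math.hypot(*last)
avg_norm = math.hypot(*avg)
print(f"last iterate distance to equilibrium: {last_norm:.3f}")
print(f"average iterate distance to equilibrium: {avg_norm:.3f}")
```

In this toy run the averaged play ends up close to the equilibrium while the last iterate orbits it and drifts slightly outward, which is the "moon around the earth" picture. Getting the last iterate itself to converge generally requires a modified update (for example, optimistic or extragradient-style steps), which this sketch does not implement.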

In follow-up work, joining forces with Ioannis Anagnostides, @gabrfarina and Tuomas Sandholm, we obtain similar bounds for no-internal regret, no-swap regret, and convergence to correlated equilibrium.

1

1

15

Many thanks to Kush Bhatia and @CyrusRashtchian for their excellent article on this talk.

4/n Next up, we have an interview with @KonstDaskalakis and coverage of his keynote talk. Written by Kush Bhatia and @CyrusRashtchian. Thanks a lot to @boazbaraktcs for hosting this one!

0

5

15

@ccanonne_ @FeiziSoheil Inspired by prior work with Qinxuan Pan, this new paper shows how to use subadditivity theorems for probability distances/divergences (including Wasserstein distance) in GAN architectures to improve statistical/computational aspects of GAN training.

1

1

6

@ccanonne_ @FeiziSoheil Namely, subadditivity theorems over Bayesnets imply that the discriminator can be broken into multiple discriminators whose job is only to enforce that small set marginals of the generated distribution match those of the target distribution.

0

1

5

@RadioMalone @thegautamkamath @NPR @planetmoney @nycmarathon It's always fun to talk to you too @RadioMalone and I'm happy with how mechanism design was thoroughly illustrated through a single example!

0

1

4

@marilynika @AthensSciFest Thank you so much Marily for seeding and moderating the conversation!

0

0

3

@roydanroy Nice! Or should I say scary? Maybe she is used to looking at bars as something good like earnings?!?

1

1

2

@NishanthDikkala @roydanroy I agree. Less jokingly, the purpose is pretty clearly manipulation of the public.

1

1

1

@thegautamkamath @mraginsky @SebastienBubeck @ilyaraz2 @ShamKakade6 @aleks_madry @Andrea__M Guilty as charged :)

0

0

1