Wei-Chiu Ma

@weichiuma

Followers: 2K
Following: 472
Statuses: 341

Assistant Professor @Cornell @CornellCIS Prev: Postdoc @allen_ai @uwcse; PhD @MIT_CSAIL; Sr. Research Scientist @UberATG @Waabi_ai

Joined August 2014

Thrilled to share that: 🎓I defended my PhD thesis last week! 👨🏫I’ll be joining @Cornell @CornellCIS as an Assistant Professor in Fall 2024! A huge thank you to my amazing advisors Antonio Torralba and @RaquelUrtasun, my committee members @HoiemDerek and @vincesitzmann, .. 1/2

RT @davidrmcall: Decentralized Diffusion Models power stronger models trained on more accessible infrastructure. DDMs mitigate the networ…

RT @AndrewCMyers: Deep cuts to the National Science Foundation only make sense if you are an enemy of science or of the US. Most basic rese…

RT @hila_chefer: VideoJAM is our new framework for improved motion generation from @AIatMeta We show that video generators struggle with m…

RT @poolio: Brush🖌️ is now a competitive 3D Gaussian Splatting engine for real-world data and supports dynamic scenes too! Check out the re…

RT @_rohitgirdhar_: Super excited to share some recent work that shows that pure, text-only LLMs, can see and hear without any training! Ou…

RT @ma_nanye: Inference-time scaling for LLMs drastically improves the model's ability in many perspectives, but what about diffusion model…

RT @kevin_zakka: The ultimate test of any physics simulator is its ability to deliver real-world results. With MuJoCo Playground, we’ve co…

RT @davnov134: We are releasing uCO3D! Built to supercharge 3D GenAI and digital-twin models, this evolution of CO3D features more and high…

RT @yen_chen_lin: Video generation models exploded onto the scene in 2024, sparked by the release of Sora from OpenAI. I wrote a blog post…

RT @srush_nlp: 10 short videos about LLM infrastructure to help you appreciate Pages 12-18 of the DeepSeek-v3 paper (

RT @JitendraMalikCV: I'm happy to post course materials for my class at UC Berkeley "Robots that Learn", taught with the outstanding assist…

RT @ryan_tabrizi: Teaching computer vision next semester? Hoping to finally learn about diffusion models in 2025? Check out this diffusio…

RT @mattdeitke: Life update: I've decided to drop out of Ph.D. at UW and leave Ai2 to build something new! 2025 is going to be a magical ye…

Thank you everyone for joining us, and a special thanks to all the speakers for their amazing talks! The AFM workshop wouldn't have been possible without you. Of course, thank you @PaulVicol @mengyer @lrjconan @BeidiChen @NailaMurray for inviting me to be part of the team!

🎉Thanks for attending the #NeurIPS2024 Workshop on #AdaptiveFoundationModels! 🚀Speakers: @rsalakhu @sedielem @kate_saenko_ @MatthiasBethge/@vishaal_urao @seo_minjoon Bing Liu @tqchenml 🔥Organizers: @PaulVicol @mengyer @lrjconan @NailaMurray @weichiuma @BeidiChen 🧵Recap!

RT @jbhuang0604: As my kids are singing APT non-stop these days, I did a bit of reverse engineering of the APT music video and tried to und…

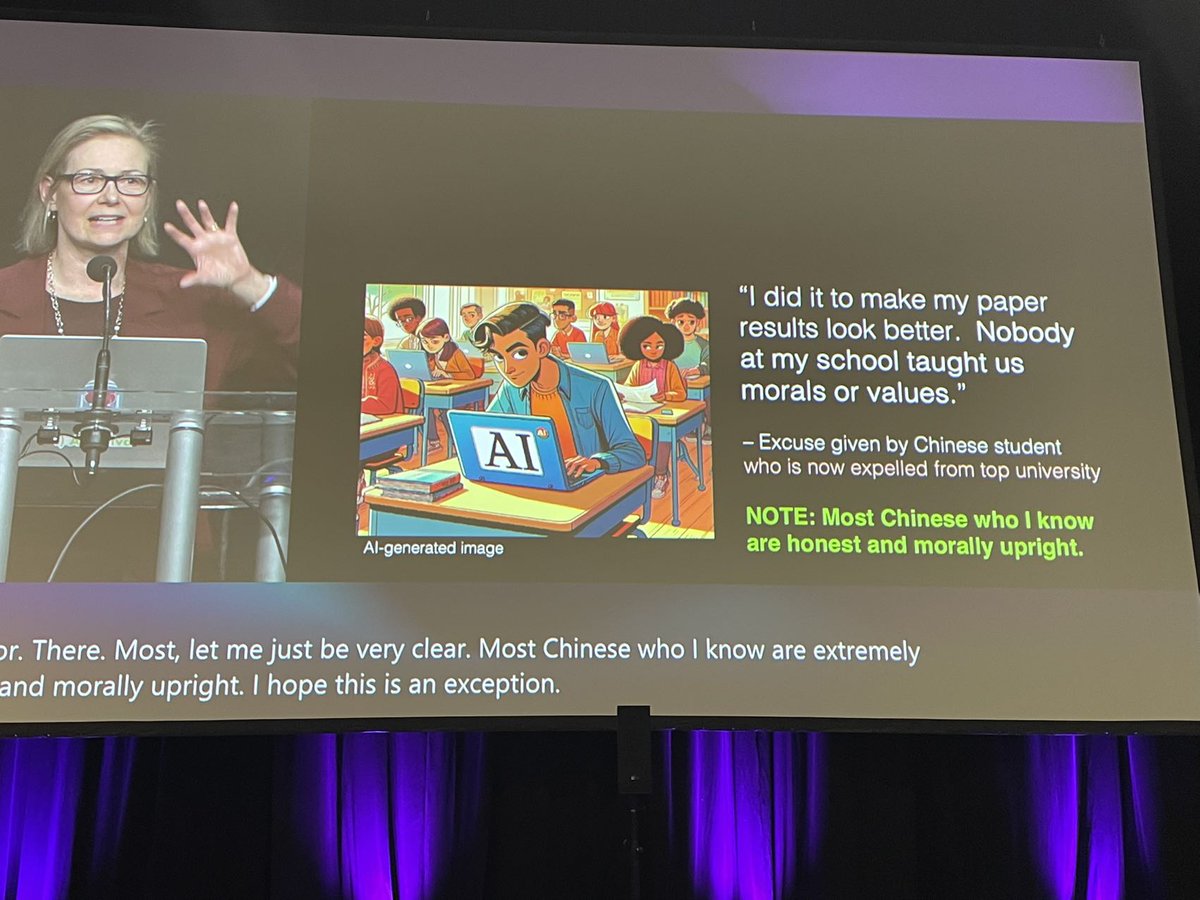

RT @furongh: I saw a slide circulating on social media last night while working on a deadline. I didn’t comment immediately because I wante…

I am deeply disappointed to see this happening @NeurIPSConf. Such behavior must be condemned.

I'm shocked to see racism happening in academia again, at the best AI conference @NeurIPSConf. Targeting specific ethnic groups to describe misconduct is inappropriate and unacceptable. @NeurIPSConf must take a stand. We call on Rosalind Picard @MIT @medialab to retract and apologize for her statement.

RT @wang_jianren: #NeurIPS Let me be direct: This so-called 'disclaimer' only highlights the bias it tries to hide. If Professor Rosalind P…

RT @ZhiyuChen4: I'm shocked to see racism happening in academia again, at the best AI conference @NeurIPSConf. Targeting specific ethnic gr…

RT @jin_linyi: This type of data is ideal for learning the structure and dynamics of the real world. We gave this a shot — extending DUSt3…
