Sarah Wiegreffe (on faculty job market!)

@sarahwiegreffe

Followers

4K

Following

10K

Media

70

Statuses

1K

Research in language model explainability & interpretability since 2017. Postdoc @allen_ai @uwnlp. PhD from @mlatgt @gtcomputing. Views my own, not my employer's.

Joined September 2013

✨I am on the faculty job market for the 2024-2025 cycle!✨ I’m at COLM @COLM_conf until Wednesday evening. Would love to hear about faculty openings (or general advice about being on the job market)!

3

48

194

I am recruiting a PhD research intern for summer 2024! Please apply by Nov. 15th and mention me in your application. Due to volume I won't be able to respond to DMs or emails. Topics of interest: 1. utility of textual explanations for improving model performance, 🧵1/2

Time is running out: Apply for a summer 2024 Aristo Research Internship before the November 15th deadline and work with top mentors on building machines that can reason and learn. Visit this link to apply -->

7

86

403

Yesterday, I defended my PhD dissertation! Special thanks to my advisor @mark_riedl and committee members @cocoweixu @alan_ritter @sameer_ @nlpnoah for the valuable advice & feedback. Looking forward to what's next!

35

4

395

Happy to share our new preprint (with @anmarasovic) “Teach Me to Explain: A Review of Datasets for Explainable NLP”. Paper: Website: It’s half survey, half reflections for more standardized ExNLP dataset collection. Highlights: 1/6

5

78

309

- How good is GPT-3 at generating human-acceptable free-text explanations?
- Can we produce good explanations with few-shot prompting?
- Can human preference modeling produce even better explanations from GPT-3?
We answer these questions and more in our #NAACL2022 paper. 🧵1/12

5

49

262

Now that I'm officially on the website, this seems like a good time to announce that I've started as a young investigator (postdoc) at @allen_ai @ai2_aristo!

8

2

207

I'm officially a PhD candidate

Congratulations to @sarahwiegreffe for passing her thesis proposal today! 🎉

11

1

191

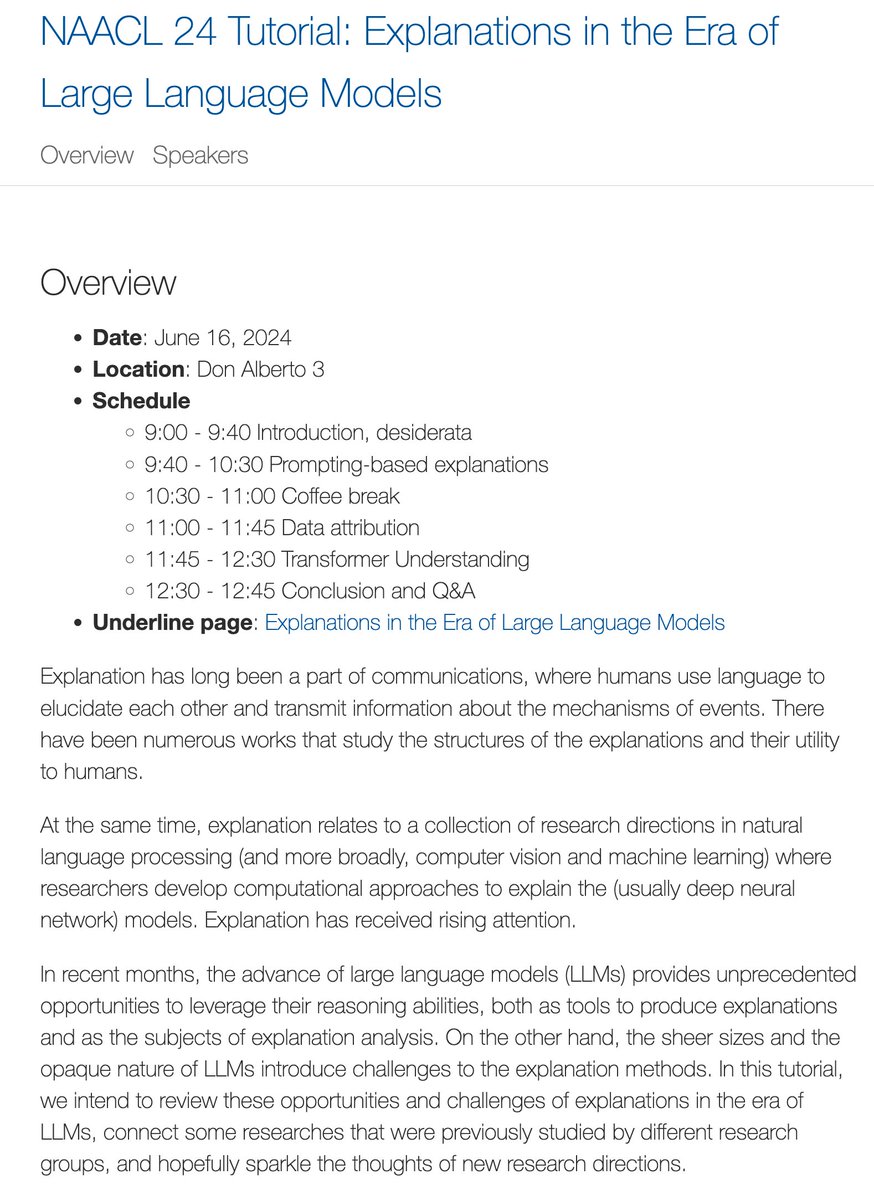

Thanks to everyone who participated in our tutorial & asked great questions! If you missed it, the recording is now available: Slides:

Our NAACL 24 tutorial "Explanation in the Era of Large Language Models" will be presented on June 16 morning at Don Alberto 3! The tutorial website is at w/ @hanjie_chen @xiye_nlp @ChenhaoTan @anmarasovic @sarahwiegreffe @VeronicaLyu

3

20

110

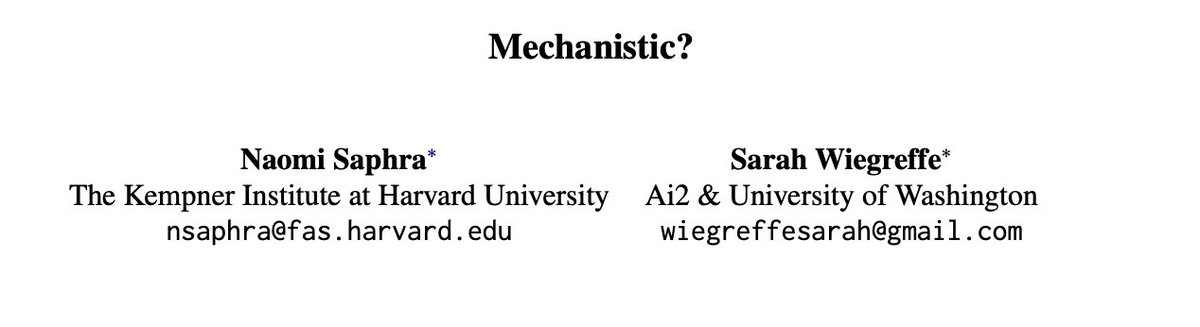

Have you ever wondered what ✨mechanistic interpretability✨ is, & how it differs from other NLP interpretability research? @nsaphra and I have the paper for you! Check out our paper (which I'll present @BlackboxNLP @emnlpmeeting in Miami next month!).

What makes some LM interpretability research “mechanistic”? In our new position paper in @BlackboxNLP, @sarahwiegreffe and I argue that the practical distinction was never technical, but a historical artifact that we should be—and are—moving past to bridge communities.

2

16

109

Happy to announce “Attention is not not Explanation”, accepted to #emnlp2019! Work by myself and @yuvalpi. 1/n

1

12

74

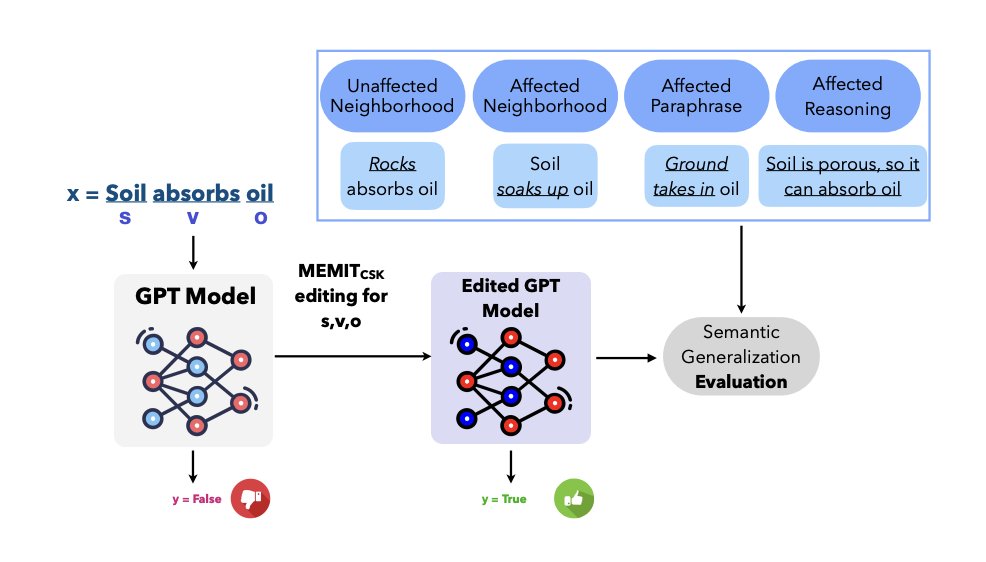

Have you ever wondered whether localization and ROME/MEMIT-style model editing can work for tasks beyond factual recall (like commonsense plausibility prediction)? If so, come to our poster tomorrow 9-10:30am for our paper "Editing Common Sense in Transformers" #EMNLP2023 😃

1

10

72

What are your plans for Sunday morning at 9am CST? Come join us bright & early on the first day of #NAACL2024 for our tutorial on explanation in the era of LLMs!

Our NAACL 24 tutorial "Explanation in the Era of Large Language Models" will be presented on June 16 morning at Don Alberto 3! The tutorial website is at w/ @hanjie_chen @xiye_nlp @ChenhaoTan @anmarasovic @sarahwiegreffe @VeronicaLyu

1

7

68

Check out our recent work on easy-to-hard generalization with LMs, led by outstanding intern @peterbhase:

Can LLMs generalize from easy to hard problems? Models actually solve college test questions when trained on 3rd grade questions! 🚨New paper: “The Unreasonable Effectiveness of Easy Training Data for Hard Tasks” 🧵1/6

0

10

58

#EMNLP2021 paper with @anmarasovic @nlpnoah: Measuring Association Between Labels and Rationales. Paper: (updated experiments!) Video: 🧵Why free-text explanations? We argue that free-text explanations are going to be important.

3

11

57

If you missed my talk at EMNLP, come chat at the #WiML2019 poster session tomorrow 6:30-8pm in East Hall B! #NeurIPS2019

3

6

58

I'm looking for funded opportunities for interpretability + #NLProc research for summer 2021 (internships + other). If you know of anything, please pass along!

1

9

52

Check out our newest work: Self-Refine: Iterative Refinement with Self-Feedback. We show that LLMs such as GPT-3.5 can:
1. Generate a draft output
2. Generate textual feedback critiquing it
3. Refine the draft output using the feedback
4. Iterate, as needed
More below ⬇️

Can LLMs enhance their own output without human guidance? In some cases, yes! With Self-Refine, LLMs generate feedback on their work, use it to improve the output, and repeat this process. Self-Refine improves GPT-3.5/4 outputs for a wide range of tasks.

1

7

53

I'll be at #ACL2023NLP in Toronto next week! Would love to meet/catch up with people- please reach out. I'm giving an invited talk "Two Views of LM Interpretability" at the NLRSE workshop Thursday at 4:40pm. Come hear my thoughts on prompting & mechanistic interpretability.

🔥 The program for the NLRSE workshop at #ACL2023NLP is posted 🔥 In addition to the 5 invited talks, we have a fantastic technical program with 70 papers being presented! Make sure you stick around on Thursday after the main conference!

2

7

54

I’m giving a talk about this paper today in the 3-3:30 session @BlackboxNLP (Jasmine ballroom) and will stick around for the afternoon poster session as well. Would love to hear your opinions 😊

What makes some LM interpretability research “mechanistic”? In our new position paper in @BlackboxNLP, @sarahwiegreffe and I argue that the practical distinction was never technical, but a historical artifact that we should be—and are—moving past to bridge communities.

1

7

53

Sarthak & I have a ✨fun✨ talk for you all at the Big Picture workshop #EMNLP2023! It's Thurs. Dec. 7th 11am-12pm Singapore time (Wed. Dec. 6th 7-8pm Pacific).

@sarahwiegreffe and @successar_nlp have joined forces to dispute once and for all the question "Is "Attention = Explanation" and the Role of Interpretability in NLP".

0

7

52

Ever wondered whether surface form competition is something you should be worried about in zero-/few-shot LLM eval? We have answers 😄 Come to our poster "Increasing Probability Mass on Answer Choices Does Not Always Improve Accuracy" tomorrow from 10:30am-12pm! #EMNLP2023

New paper: "Attentiveness to Answer Choices Doesn’t Always Entail High QA Accuracy" 📊💬. Something I've been thinking a lot about recently is the relationship between distributions over vocabularies produced by language models and the various ways. 1/5

4

7

50

Code now available!

Happy to announce “Attention is not not Explanation”, accepted to #emnlp2019! Work by myself and @yuvalpi. 1/n

0

9

51

I really enjoyed giving this talk-- recording is now available.

And our next speaker is... 🥁🥁🥁
🗣 Sarah Wiegreffe (@sarahwiegreffe) will talk with us about "Measuring Association Between Labels and Free-Text Rationales".
🗓 February 17th, 14:00 UTC
📝 Sign up here:

0

5

46

.@yanaiela and I are hosting a Birds of a Feather social on #interpretability at #NAACL2022 on Tuesday 2-3pm PT. It will be hybrid, both in person and on Zoom (more info here: ). Come chat!

1

8

45

Nice to see Anthropic more rigorously testing their previously-qualitative results on steering and being open about its mixed results. One thing I wonder is: how do these results compare to steering model representations directly (no SAE)?

New Anthropic research: Evaluating feature steering. In May, we released Golden Gate Claude: an AI fixated on the Golden Gate Bridge due to our use of “feature steering”. We've now done a deeper study on the effects of feature steering. Read the post:

2

3

46

Thanks to @BastingsJasmijn, #BlackBoxNLP workshop now has a YouTube channel! If you missed any of our keynote talks at EMNLP last week, check them out here: More to be added soon :) @yanaiela @_dieuwke_ @boknilev @nsaphra

0

7

44

If you're at #NeurIPS2021, I'm presenting our explainable NLP datasets survey for the next 1.5 hours at the Datasets & Benchmarks poster session! Landing page: Gathertown (spot A2): Camera-ready:

Happy to share our new preprint (with @anmarasovic) “Teach Me to Explain: A Review of Datasets for Explainable NLP”. Paper: Website: It’s half survey, half reflections for more standardized ExNLP dataset collection. Highlights: 1/6

1

6

42

New #acl2020 paper "Learning to Faithfully Rationalize with Construction" with @successar_nlp @byron_c_wallace @yuvalpi - preprint coming soon!

3

3

39

Check out my feature on the ML@GT blog:

🚨 NEW BLOG POST 🚨 ML@GT Ph.D. students @sarahwiegreffe and @yuvalpi discuss their #NLP work on plausible vs. faithful reasoning and why it's important to understand a model's reasoning process. Trust us, it's a good post. 📝:

0

6

37

Great talk by @claranahhh on “To Build Our Future, We Must Know Our Past” about software lotteries, explore/exploit phases, funding incentives, evaluation benchmarking culture, etc. in NLP research! Co- @_sireesh @abertsch72

2

3

31

“Reframing Human-AI Collaboration for Generating Free-Text Explanations” with @jmhessel @swabha @mark_riedl @YejinChoinka. Paper: Code/data:

1

7

31

This talk is today 4:40pm in Pier 4! (to the left of the hall with the sponsorship booths on the main hotel side of the venue).

I'll be at #ACL2023NLP in Toronto next week! Would love to meet/catch up with people- please reach out. I'm giving an invited talk "Two Views of LM Interpretability" at the NLRSE workshop Thursday at 4:40pm. Come hear my thoughts on prompting & mechanistic interpretability.

0

5

28

@jacobandreas @srush_nlp FWIW, I gave a talk at ACL in July on this topic. The framework in the talk doesn't capture everything, but I think it gives some credence as to why the terminology might be useful. "Two Views of LM Interpretability" (starting at 7:46):

0

2

28

Interested in notions of correctness in multiple choice datasets for commonsense tasks? Come check out our poster, happening now!

0

2

28

@fhuszar You can email women-in-machine-learning@googlegroups.com-- a lot of people advertise PhD positions there. >4000 members.

1

0

26

Teaching and measuring LM noncompliance should be about more than just safety and outright refusal -- check out our paper for our taxonomy, dataset, and lots of benchmarking results!

🤖 When and how should AI models not comply with user requests? Our latest work with @shocheen at @allen_ai dives into this question, expanding the scope of model noncompliance beyond just refusing "unsafe" queries. 1/n🧵 #LLMs #refusal #noncompliance #responsible_ai

0

4

27

.@emnlpmeeting Can you clarify #EMNLP2023 "presenter" vs. "non-presenter" registration costs? Does every author on a paper have to register as a presenter, just the presenting author(s), or just one author per paper?

2

2

24

We’re right at the entrance (2A)

Have you ever wondered whether localization and ROME/MEMIT-style model editing can work for tasks beyond factual recall (like commonsense plausibility prediction)? If so, come to our poster tomorrow 9-10:30am for our paper "Editing Common Sense in Transformers" #EMNLP2023 😃

0

4

25

I've so enjoyed my internship at AI2 @ai2_allennlp with Noah, @anmarasovic and team! Consider applying:

1

0

24

A great piece of scientific writing for a non-technical audience (& exciting developments for ML from #NeurIPS2018). Imagining how much more accessible the field would be if all published papers had this kind of intuitive breakdown. @techreview @_KarenHao.

0

10

24

Help: how can I search conference proceedings in the ACL Anthology by *track*? Like how the orals and posters are nicely organized at the physical conference? @aclanthology

10

3

24

Lectured on attention and transformers for NLP applications in @GeorgiaTech's Deep Learning course last Thursday! Slides available here: 1/2.

1

5

24

An updated & more condensed (10-page) version is available here: Camera-ready coming soon! w/ @anmarasovic @ai2_allennlp.

Interested in getting started in Explainable NLP (ExNLP)? This paper by @sarahwiegreffe reviews the datasets. 11 pages of references! To appear in the NeurIPS 2021 Benchmarks and Datasets track.

0

10

21

To participate in the #PeopleofNLProc series we're running for #NAACL2021 publicity, fill out the Google form here:

Our first #PeopleofNLProc researcher is Vered Shwartz @VeredShwartz. Vered's Bio: I'm a postdoc at AI2 and the University of Washington. I got my PhD from Bar-Ilan University. Besides research, I like to work out, feed birds, and listen to audiobooks. 1/4

2

6

20

New paper at the #NAACL2021 narrative understanding workshop.

5. Inferring the Reader: Guiding Automated Story Generation with Commonsense Reasoning. @beckypeng6 @sarahwiegreffe @Sylvia_Sparkle. Using commonsense reasoning to guide a neural story generator. (We'll have more to say about the importance of _reader models_ later, stay tuned).

2

0

17

Come see my talk at #EMNLP this Tuesday; I’ll be re-framing some of the takeaways of our work as a direct contribution to the faithful explainability literature.

@sarahwiegreffe @alon_jacovi To be stated more clearly in the talk :) Tuesday 10:48, session 1A, come one come all!

3

3

17

Super cool app find of the day (or maybe I'm behind the times): @MathpixApp lets you screenshot rendered LaTeX in a PDF and converts it to raw LaTeX for you (with surprising accuracy!).

1

1

14

Excited to see that "Attention is not not Explanation" is the #2 top recent paper of the past month on Arxiv Sanity Preserver! @yuvalpi.

Happy to announce “Attention is not not Explanation”, accepted to #emnlp2019! Work by myself and @yuvalpi. 1/n

0

2

15

Go work with Tuhin! 😄

📣 I will be in New York 🗽 for the foreseeable future, and join @stonybrooku @sbucompsc @SUNY as an Assistant Professor in the Fall of 2025. I plan to recruit 1-2 PhD students starting next fall. Come tackle exciting problems with me on Human Centered AI :-)

1

2

13

@AndrewLampinen Very cool work! You might be interested in our paper (to appear at NAACL) on generating few-shot free-text explanations from GPT-3 for passing crowdworker judgements of explanation acceptability. w/ @swabhz @jmhessel @mark_riedl @YejinChoinka

1

0

13

@theodunayo @jmhessel @alethioguy @yuvalpi @RTomMcCoy @ACL2019_Italy My favorite hit has to be “I’ll never break your gradient flow” by the Backprop Boys.

1

0

12

I complained to @USPS that I haven't received any mail in over a month. After not hearing back via phone or email as I was told I would, I log in online to see that they have sent me a *letter* regarding my case. 🤦‍♀️❓❓

1

0

11

@janleike Oh wow, thanks for this insight. I don't see that model listed in my view of the API, so I assume I'd have to request access.

2

0

11

@tallinzen Yeah, time isn't infinite and the research community's rewards are often misaligned with incentivizing thorough, high-quality research.

1

0

9

Had a great time presenting at #WiML2019 yesterday! I'll be around #NeurIPS2019 all week; come say hi if you see me.

0

0

10

@jeremyphoward @aryaman2020 This is the age-old struggle between academia and industry. Anthropic is pushing a specific flavor of interpretability work that is attracting a lot of funding and attention from junior researchers & those outside the field. It is not the only viable (or existing) direction.

1

0

10