Bharath Ramsundar

@rbhar90

Followers

12K

Following

95K

Media

299

Statuses

15K

Founder and CEO @deepforestsci. Creator of @deep_chem. Author @OReillyMedia. @stanford CS PhD. https://t.co/7LDcegrCsc

Joined June 2015

This picture has been sticking in my brain. This is a good exemplar of why LLMs as computing platforms don't make sense to me. They learn the input distribution biases and all, and not necessarily any underlying structure or meaning. It's the hallucination problem in another form.

79

96

944

Respectfully, I strongly disagree with this view on multiple fronts. OpenAI is not the right entity to shoulder a burden on my behalf or much less on all of humanity's behalf. Open source and sunlight will do much better for responsible AI than secretive opaque entities. Hubris.

Building smarter-than-human machines is an inherently dangerous endeavor. OpenAI is shouldering an enormous responsibility on behalf of all of humanity.

33

103

871

I'm excited to announce that my book with @Reza_Zadeh, "TensorFlow for Deep Learning" will be out on March 1st! Check it out for an introduction to the fundamentals of deep learning that focuses on conceptual understanding

13

81

418

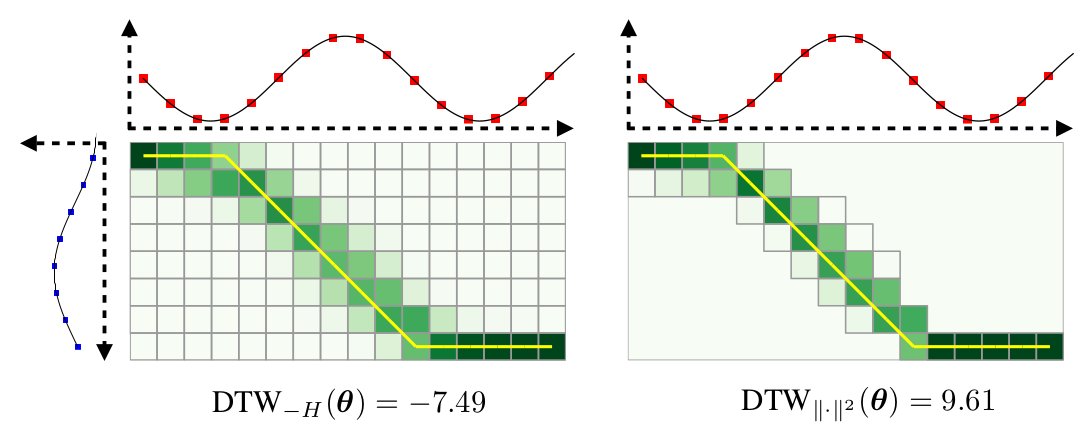

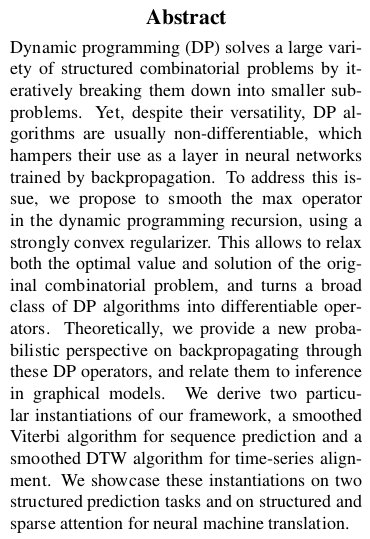

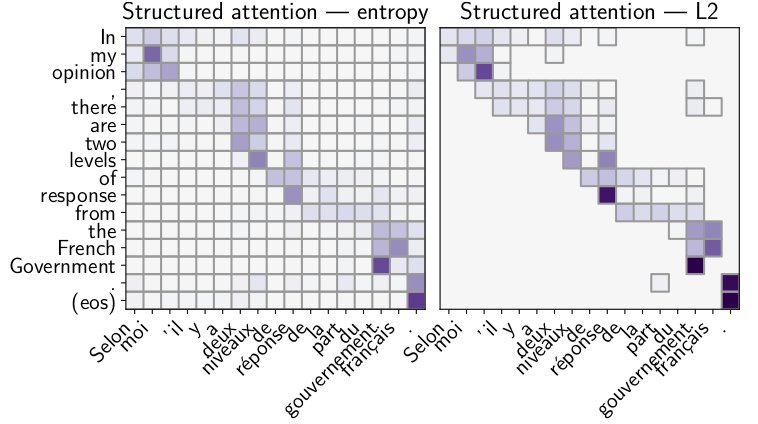

This is one of the most innovative deep learning papers I've seen in a while. Uses @PyTorch to construct differentiable dynamic programs. Allows for the use of backprop for PGM inference in a structured fashion

Our work w/ @mblondel_ml 'Differentiable Dynamic Programming for Structured Prediction and Attention' was accepted at @icmlconf ! Sparsity and backprop in CRF-like inference layers using max-smoothing, application in text + time series (NER, NMT, DTW)

1

79

282

This is a good example showing LLMs are basically powerful autocomplete tools backed by large databases. I think 23 triggered a numerical flow and it kept rolling. The world model is limited at best. This isn't AGI.

20

30

201

I'm sure this blog post is excellent, but @Medium's paywall is preventing me from reading it. Proliferating paywalls on blog articles is an unpleasant future. It feels like inviting academic paywalls back into the tech world. Let's start avoiding paywalled services like @Medium.

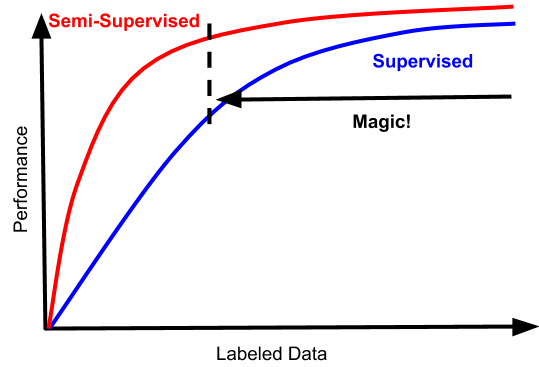

Nice blog post titled "The Quiet Semi-Supervised Revolution" by Vincent Vanhoucke. It discusses two related works by the Google Brain team: Unsupervised Data Augmentation and MixMatch.

15

24

204

I'm starting to worry that silly fears around AI doom could cut off societally useful scientific AI. An LLM trained on a trillion molecules is by no means superintelligent or even intelligent outside chemistry but could fall afoul of bad regulation.

I'm opposed to any AI regulation based on absolute capability thresholds, as opposed to indexing to some fraction of state-of-the-art capabilities. The Center for AI Policy is proposing thresholds which already include open source Llama 2 (7B). This is ridiculous.

17

30

202

I strongly disagree with this report. Those of us who disagree and don't think AGI is coming immediately need to get word out there more and push back against bad policy recommendations by Doomers. There are real world repercussions to bad geopolitical policy.

🚨Exclusive: a report commissioned by the U.S. government says advanced AI could pose an "extinction-level threat to the human species" and calls for urgent, sweeping new regulations.

12

35

189

I loved @fchollet's recent paper. One of the few papers about AGI that actually makes you think. Definitely recommend a careful read.

François Chollet’s core point: We can't measure an AI system's adaptability and flexibility by measuring a specific skill. With unlimited data, models memorize decisions. To advance AGI we need to quantify and measure ***skill-acquisition efficiency***. Let’s dig in👇

0

30

181

Scientists at @OpenAI, if you really see evidence of apocalyptic capabilities, you have a moral duty to publish asap. Prove it so us skeptics don't keep fighting you foolishly. If you don't have evidence, why are you raising public fears? Show proof or stop hyping please.

If this is true, please publish openly. I don't see evidence provided for these claims from any published material. I've tried open versions of ChatGPT etc. It's nowhere near this capable.

8

21

162

OpenAI does a lot of damage with AGI mythologizing. Failure to be honest about what's in the training data and how that explains seemingly intelligent behavior is core. This is why we need open LLMs. Lying about AGI will damage the field and prevent useful applications.

Goes to @ylecun's point that LLMs do well when they are fed the answer. I'm being a little unfair but the hype cycle needs to deflate a bit.

2

18

152

I'm excited to announce that @deep_chem 2.0 has just been released! We've significantly refactored and extended our TensorGraph framework (built on @TensorFlow) to let us support more deep learning chem/bio/science applications. Please check it out!

3

45

154

I've put together a @deep_chem model wishlist with a collection of models we'd like to see added to DeepChem. Molecular ML, materials ML, physics ML and more needed. Please take a look and contribute!

3

30

151

Doing 0.1 seconds of molecular dynamics is crazy. For context, integration steps happen at the femtosecond scale. That's 100 trillion integration steps!

@nvidia We have analyzed our dataset (capturing 0.1 seconds of simulation, the largest in history!) to identify over 50 novel pockets across many #COVID19 proteins! These “cryptic pockets” are candidates for targeting with antivirals.

5

25

145
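The step count in the molecular dynamics post above follows from dividing the simulated time by the integration timestep. Here is a minimal sketch of that arithmetic, assuming a timestep of exactly 1 femtosecond (the post only says "femtosecond scale"; the variable names are illustrative):

```python
from fractions import Fraction

# Simulated time quoted in the post: 0.1 seconds.
simulated_time_s = Fraction(1, 10)

# Assumption: one integration step covers exactly 1 femtosecond (1e-15 s).
timestep_s = Fraction(1, 10**15)

# Number of integration steps needed to cover the simulated time.
steps = simulated_time_s / timestep_s

print(f"{int(steps):,} integration steps")
```

Exact fractions avoid floating-point rounding here. With a larger timestep of a few femtoseconds, the count would shrink by the same factor but stay in the tens of trillions.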

I think one of the biggest mistakes I made when starting research was trying to become a "great researcher". It's often more useful to just explore for fun or curiosity. The paper I worked hardest on so far still has 0 citations and that's OK.

My student sent me this list saying they have to improve themselves in many areas. Such a list can do more harm than good. While I appreciate the author's intention to motivate one for greatness, I don't think it can be planned. But you can plan to be a "good researcher."

6

14

143

Join the new @deep_chem Discord server if you are interested in open source scientific machine learning! We're building up a good community to discuss scientific ML, LLMs and more

3

25

143

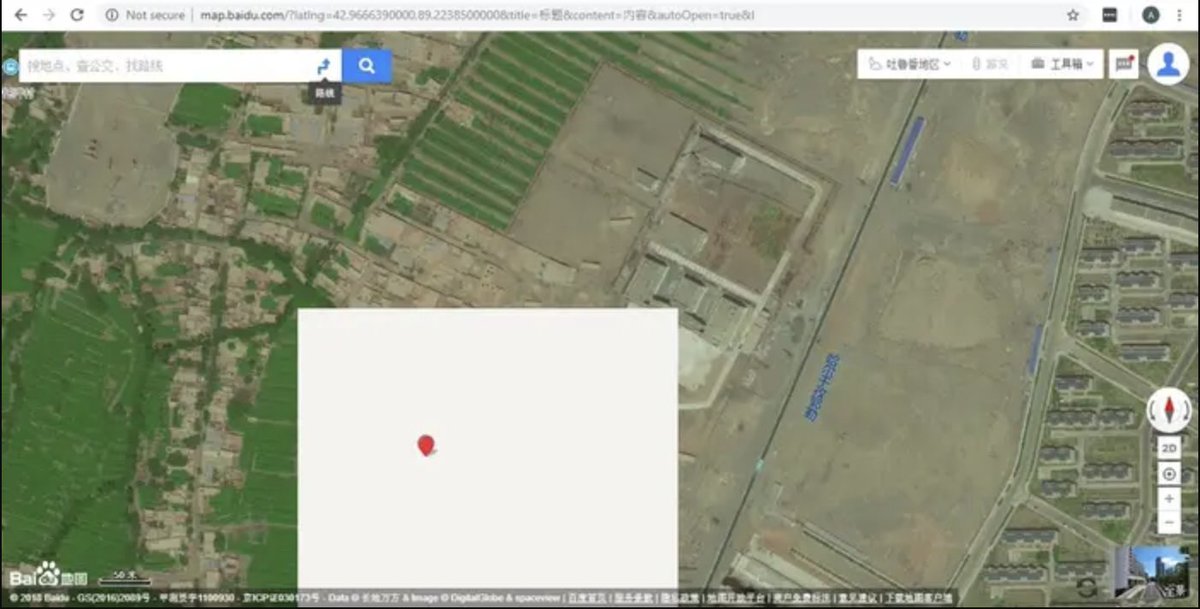

Something worth noting here is that Baidu is actively covering up the infrastructure of genocide. If you're accepting a computer vision paper from Baidu researchers, consider what that technology could be used for.

How were we able to do this analysis? .@alisonkilling, Christo Buschek and I stumbled upon a strange phenomenon on China's Baidu Maps — light gray tiles appearing over known Xinjiang camp locations. By finding more gray tiles, @alisonkilling thought we could find more camps.

1

42

137

Another excellent take. Most of us sharing skepticism love AI and have been working on it for over a decade. Being honest is sustainable. Hype and pump and dump craters careers and misleads the public.

Of course factualism doesn't sell -- if I wanted to be more of an AI influencer I would have to be constantly tweeting about how AI is going to replace all programmers and doctors and so on in less than a year. That sounds exciting and positive, and it gets great engagement!

5

18

92

My two cents, rumors like this have been circulating for a while. It's excellent marketing but I'm not buying it. I think OpenAI has far less than they imply.

I’ve heard the same (~“most papers are bad; very behind actual SOTA”) from someone at a top lab @dwarkesh_sp . @tszzl has said similar things. GPT-4 finished training *1.5 years ago*. What do we expect world-class researchers at OAI have done since then, twiddled their thumbs?

10

6

140

The first two chapters of my and @Reza_Zadeh's book "TensorFlow for Deep Learning" are available for free on the @matroid blog

0

52

135

This is an important debate. I am nowhere near as qualified but I also respectfully disagree with the doomer position. It's important for scientists who disagree to speak up so there isn't the impression of scientific consensus on AI doom.

Yann LeCun thinks the risk of AI taking over is minuscule. This means he puts a big weight on his own opinion and a minuscule weight on the opinions of many other equally qualified experts.

7

8

129

Excited to announce that @deep_chem has crossed 1,000 stars on GitHub! Lots of interest in deep learning for the life sciences. Excited to see how our users leverage these tools to discover cures for diseases that have no treatments today!

1

29

117

I really recommend this paper. Reading it highlights just how many details are left out in most "open" LLMs. The ML community should return to its strong open source roots and make more fully open LLMs to advance the fundamental science of the space.

LLM360: Towards Fully Transparent Open-Source LLMs. paper page: @AurickQ, @willieneis, @HongyiWang10, @BowenTan8, @ZhitingHu, @preslav_nakov, @mbzuai , @PetuumInc , @Meta @MistralAI @huggingface .

2

16

103

@schwabpa The conversational behavior really creates a powerful illusion of presence. It's fascinating how it enables us to mirror ourselves onto the chatbot. Also why these things are so dangerous as therapy agents etc. They tell us completions we want to hear pseudo-authoritatively.

5

10

112

This has triggered a lot of controversy. To share a more positive take, I got my start in research in high school with Intel STS. My high school didn't have supporting programs so I emailed a lot of faculty and researchers. A couple were kind enough to give me pointers which.

14

12

108

A brief personal announcement: I’ve departed my role as CTO at @computable_io. It’s been a pleasure working with a talented team to develop and ship the world's first protocol for decentralized data cooperatives!

1

2

98

Deep learning developers should be concerned about Google's extensive patents in the deep learning space. If the patents were truly for defensive purposes, Google could open source them. Note that they haven't.

Serious question: if Google changed its license on future versions of TensorFlow, and a company using an older Apache 2.0 licensed version reimplemented newer functionality, would that violate copyright? Precedent set by Oracle vs Google over the Java API?

3

37

92

I'm really excited to be able to announce that @deep_chem 2.4.0 is out! Over a year's worth of hacking from an entire team of superb developers. DeepChem has been basically rewritten at this point and supports many new use cases.

DeepChem 2.4.0 is out!! This release features over a year's worth of development work. Support for TensorFlow 2 / PyTorch. Significantly improved production readiness. Faster datasets. More MoleculeNet datasets, materials science support and more!

5

17

89

Check out the first two chapters of "TensorFlow for Deep Learning", my new book with @Reza_Zadeh and @OReillyMedia!

5

27

92

I'm excited to announce @deep_chem 2.2 has just been released! This new version contains improvements to protein structure handling, better support for image datasets, and brings @deep_chem closer to being a general library for the deep life sciences

1

22

84

This is an excellent summary of logical holes in AGI fears. Why do we expect recursive self improvement? Usually iterating systems hit a fixed point fairly rapidly. Exponentials are usually S curves. AGI fear mongering seems self serving for organizations that benefit from it.

@Simeon_Cps what's particularly strange about this discourse is that so much of it is counter to what we know about systems:
- complex systems hit diminishing marginal returns
- even a billion years of evolution has not evolved the ability to auto-improve intelligence
- centralized.

15

12

81