Michael Bernstein

@msbernst

Followers

16K

Following

3K

Media

22

Statuses

536

@Stanford, Associate Professor of Computer Science. I design (better) social tech.

Stanford, CA

Joined November 2007

I've been wrestling with the gap between HCI curricula, which tend to focus on the design process, and the big theories and ideas that animate us in HCI. Here's a model that has started working well at @StanfordHCI:

5

49

229

Thank you to my amazing students and collaborators!!!

Our very own @msbernst won a best paper award in all CHI, CSCW, and UIST this year! Congratulations!!!

12

5

158

Congratulations to @joon_s_pk and team on the #UIST2023 Best Paper Award for Generative Agents! Happily, we already know that the agents can throw a party in celebration.

The #UIST2023 best paper awards and honorable mentions have been announced: Congrats to all the authors and thanks to Andy Wilson, who served as Awards Chair. Excited to see these papers and more next week - last chance to register at Standard Rate!

0

10

141

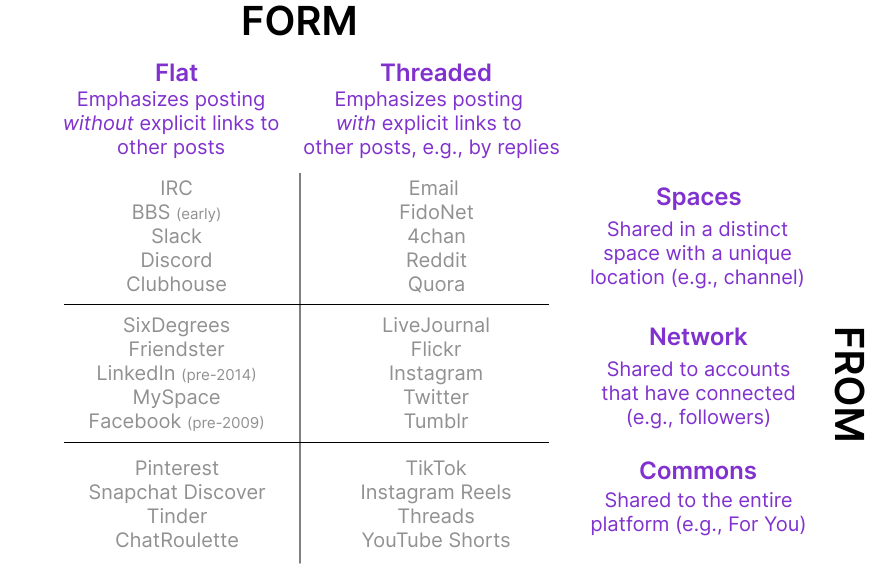

What unifies, and what distinguishes, social media designs? Are all the Twitter spinoffs actually meaningfully different designs from each other? Form-From is a design space from @amyxzh, myself, @karger, and @answergarden that will appear at #cscw2024

5

21

128

Fully 80% of papers at UIST from 1990-2010 have been cited by US patents. 20% overall at CHI+CSCW+Ubicomp+UIST; 13% of papers at all @sigchi sponsored venues. AAAI/IJCAI are 5%, ACL/EMNLP/NAACL are 11%, CVPR/ICCV/ECCV are 25%.

What’s the industrial impact of human-computer interaction (#HCI) research? Does HCI research contribute to technological inventions and products? Or are most of its insights ignored by the industry? Our #CHI2023 paper provides new evidence for these long-standing questions.

1

29

110

People using explainable AIs are empirically no better at decision making than people working alone. @HelenasResearch, an Honorable Mention at #CSCW2023, demonstrates that this failure is because AI explanations typically require lots of cognitive effort to verify.

I'm presenting tomorrow (Monday, October 15th) from 11am-11:20am at #CSCW2023 during the XAI session in the Regency Room. I will be presenting our paper "Explanations Can Reduce Overreliance on AI Systems During Decision-Making", which has won best paper honorable mention🤠.

1

15

94

Best Paper award winner AND an Impact Recognition for the same paper at #cscw2024! Amazing, congratulations @JiaChenyan and @michelle123lam!

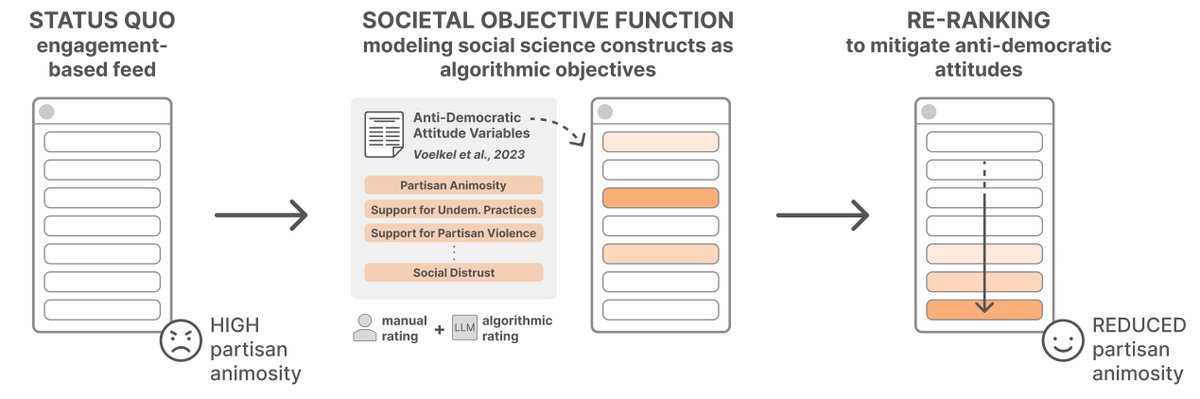

Today's social media AIs encode values: can we mitigate societal harms by making these values explicit and tuneable? Excited to share our #CSCW24 paper introducing societal objective functions, which translate social science constructs into algorithmic objectives for social media!

2

15

90

@StanfordHCI's own @landay wins a SIGCHI Lifetime Research Award, the highest career award in HCI. Congratulations, James!!!

🥳👏👏Congratulations to the 2024 ACM #SIGCHI awardees! We recognize 13 SIGCHI members receiving lifetime research and practice, societal impact, and outstanding dissertation awards, as well as 10 being inducted into the SIGCHI Academy! 1/6🧵

1

7

74

Big congratulations to @HelenasResearch on winning a 2025 CRA Outstanding Undergraduate Researcher Award!

2

4

71

In a follow-up to the generative agents paper, @joon_s_pk+team demonstrate that anchoring agents in rich qualitative information about an individual enables simulations to replicate an individual's attitudes 85% as well as the individual replicates themselves, + reduces bias.

Simulating human behavior with AI agents promises a testbed for policy and the social sciences. We interviewed 1,000 people for two hours each to create generative agents of them. These agents replicate their source individuals’ attitudes and behaviors. 🧵

1

10

65

This paper argues that online spaces become ghost towns because it's simply too easy to lurk without contributing, and that asking people to regularly re-commit—or the incoming messages start getting muted—reverses the trend. It works! #cscw2024 paper led by @lindsaypopowski.

Have you ever worried that no one would respond to your message? Our #CSCW2024 paper proposes a commitment-based design in online groups to address this: (with @zhangyt0704 and @msbernst)

1

9

57

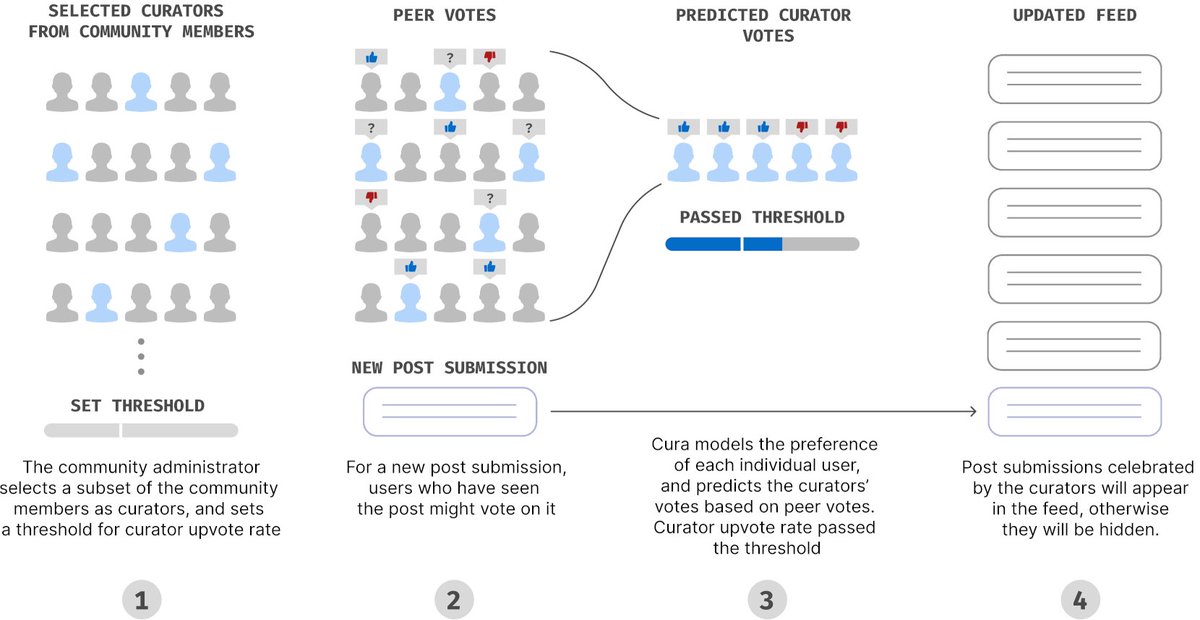

Curation modeling, @WanrongHe’s Best Paper winner at CSCW, demonstrates a method for social media to better maintain norms by naming curators and using community upvotes to estimate whether those curators would want each piece of content in the community.

🎉So excited that my first HCI paper "Cura: Curation at Social Media Scale" has won Best Paper Award at CSCW! I want to give a huge thank you to my collaborators @msbernst @mitchellgordon @lindsaypopowski! I'll be presenting this work at the conference. See you soon! #cscw2023

4

8

55

Generative agents now has an open-source repo and will appear at #UIST2023:

How might we craft an artificial society that reflects human behavior? My paper, which introduced “generative agents,” will be presented at #UIST2023 and now has an open-source repo! w/ @joseph_c_obrien @carriejcai @merrierm @percyliang @msbernst 🧵

2

10

54

@IanArawjo I tell my students that the role of the paper is to convey an idea, not to be a trip report of how you got there. We do lots of formative work, but it's rarely included in our papers. The best reason to include it is if you need up front proof to justify a risky design decision.

3

4

52

...and now a best paper honorable mention at #cscw2024. Congratulations!

Have you ever worried that no one would respond to your message? Our #CSCW2024 paper proposes a commitment-based design in online groups to address this: (with @zhangyt0704 and @msbernst)

0

8

50

Huge congratulations to Dr. @mitchellgordon, who defended his thesis today and will soon be starting at @MITEECS as an Assistant Professor!

So proud of @mitchellgordon and his brilliant PhD defense talk on “Human-AI Interaction Under Societal Disagreement”. Great lead advising by @msbernst! Excellent example of human-centered AI research for @StanfordHAI. On to his faculty position @MITEECS! Congrats!

0

1

46

Too often, we train only skills that are easy to practice and measure. By creating a conflict simulation environment with an agent for feedback and practice, @oshaikh13 opened the door to new skills—observing that people double their use of effective conflict strategies! #chi2024.

Before taking it out on your roommate for leaving dirty dishes out, you probably want to practice your conflict resolution skills first. Expert conflict resolution trainers, however, are EXPENSIVE. What if we practiced with an angry LLM instead? 😈 #CHI24.

1

6

43

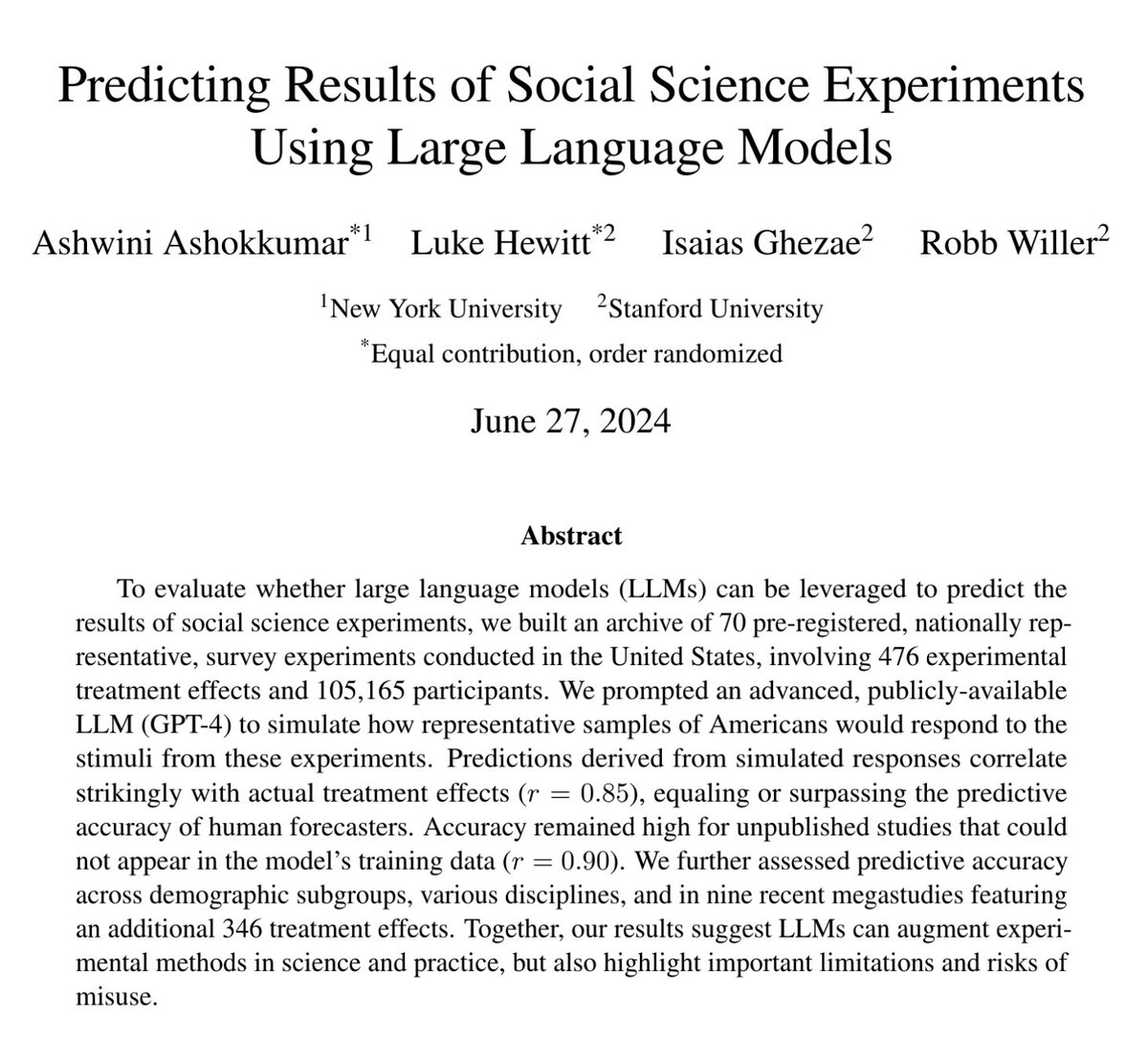

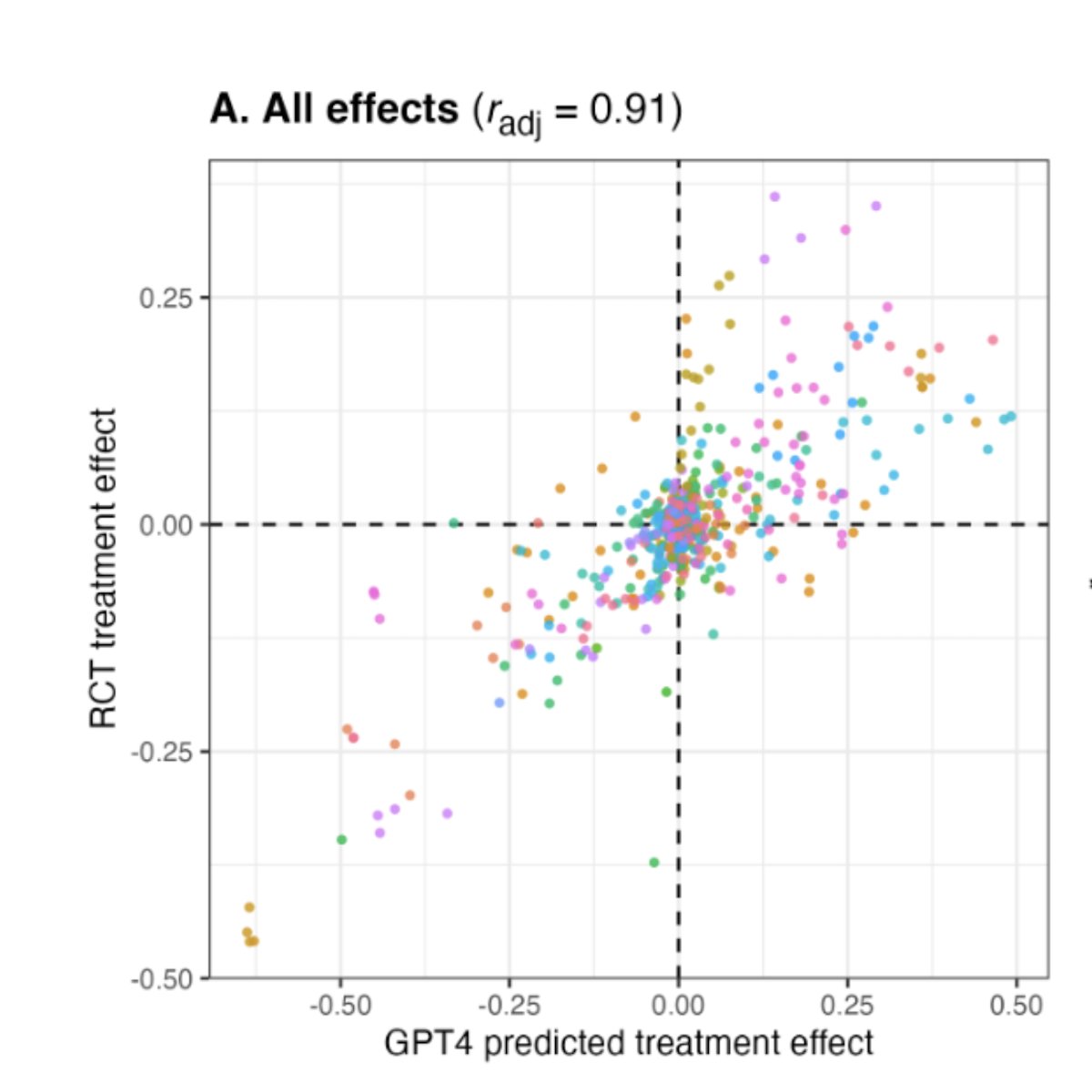

An especially deft move here was to construct a dataset that contained *unpublished* empirical results, so they could rule out any explanations that the model simply memorized the paper during training time. Great work!

🚨New WP: Can LLMs predict results of social science experiments?🚨 Prior work uses LLMs to simulate survey responses, but can they predict results of social science experiments? Across 70 studies, we find striking alignment (r = .85) between simulated and observed effects 🧵👇

1

4

39

A bunch of cool people (and then also me) are excited to dig into this project! Thank you for the support!

Distressed by how social media algorithms can amplify antisocial behavior? Experts from computer science, communications, psychology, and law seek to develop an approach that encodes societal values into social media AI. (2/7)

0

7

33

Looking forward to this visit to @michigan_AI!

Thrilled to announce the keynote speakers for our Michigan AI 2023 Symposium, Michael Bernstein @msbernst (Stanford) & Alexei Efros (UC Berkeley)! Thanks to our sponsors: LG AI Research (silver) and Jane Street, KLA, @Voxel51 (bronze). Free registration:

0

1

32

When 5% of Reddit comments ought not to be there by the subreddits' own moderation strategies—e.g., misogyny, extreme vulgarity, bigotry, personal attacks— prosocial norms fall apart. This is why I see more hope for platform design shifts than for deus ex content removal.

Our new research estimates that *one in twenty* comments on Reddit are violations of its norms: anti-social behaviors that most subreddits try to moderate. But almost none are moderated. 🧵 on my upcoming #cscw2022 paper w/ @josephseering and @msbernst:

2

4

30

alphaXiv has been trialing this neat approach where authors answer questions about their papers:

New from Microsoft: Infinite context length and linear complexity! Author @liliang_ren is on alphaXiv this week. Leave questions for him directly on the arXiv paper:

2

8

29

Despite working on social media and society, this is my first paper in Social Media + Society. @AngeleChristin lays out the competing logics that make social media platforms feel so self-contradictory:

0

1

27

Very cool work by @tatsu_hashimoto and colleagues: ask LLMs questions from Pew Surveys in order to measure whose opinions the model's outputs most closely reflect.

We know that language models (LMs) reflect opinions - from internet pre-training, to developers and crowdworkers, and even user feedback. But whose opinions actually appear in the outputs? We make LMs answer public opinion polls to find out:

0

4

27

Congratulations @wobbrockjo!

📢 Join us today, Wednesday, Oct 16, for the ACM UIST 2024 Closing Keynote: Test of Time Award! 🎉 Hear from Wobbrock, Wilson, and Li as they discuss “Gestures without Libraries, Toolkits or Training: A $1 Recognizer for User Interface Prototypes” from the 2007 symposium.

1

0

30

Social media designs fail when we mis-imagine how they'll behave at scale. But LLMs have learned social behaviors both good and bad—enabling us to create social simulacra that populate a prototype with thousands of accounts. High and low effort posts, trolls, rulebreaking, ...

How might an online community look after many people join? My paper w/ @lindsaypopowski @Carryveggies @merrierm @percyliang @msbernst introduces "social simulacra": a method of generating compelling social behaviors to prototype social designs 🧵 #uist2022

1

6

29

This upcoming #cscw2024 panel, in inaccurate song format:

completely inaccurate preview: @jeffbigham: "Yes!" @msbernst: "No!" @andresmh: "It depends!" @imjuhokim: "What is interaction anyway?" @merrierm: "It depends on what level of AGI the AI has reached!"

2

2

26

Today at 4pm, @oshaikh13 will be sharing our work on LLM simulation for rehearsing difficult-to-learn conflict skills. Turns out that my usual strategy of "I'm rubber and you're glue..." doesn't work so well in faculty meetings.

Before taking it out on your roommate for leaving dirty dishes out, you probably want to practice your conflict resolution skills first. Expert conflict resolution trainers, however, are EXPENSIVE. What if we practiced with an angry LLM instead? 😈 #CHI24.

0

5

28

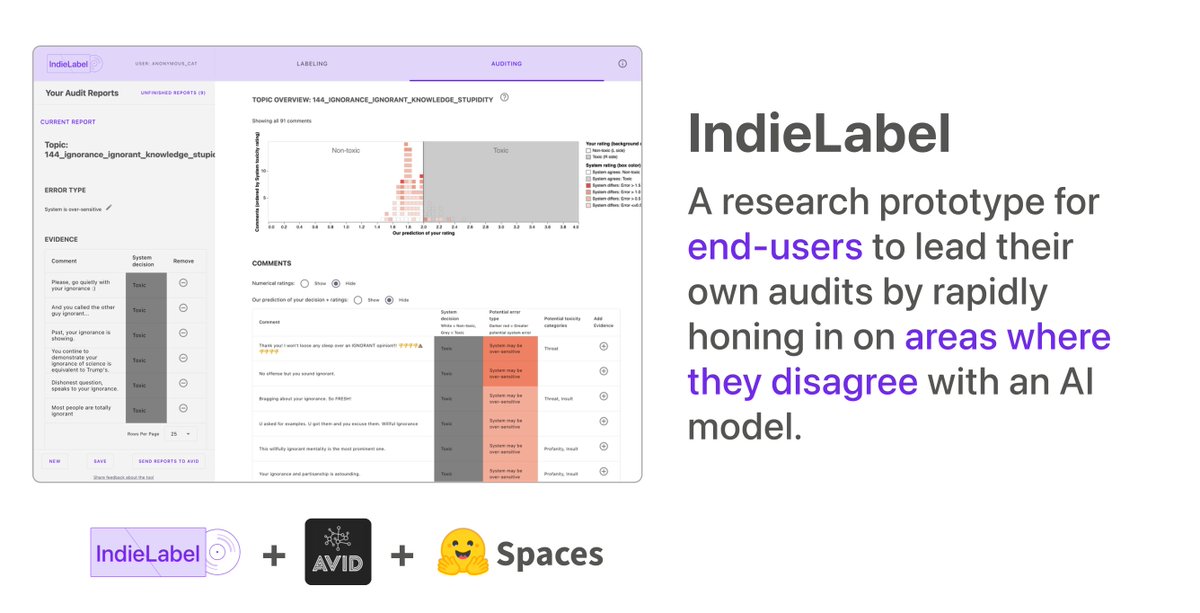

@michelle123lam and @AvidMldb worked together to launch a public version of her end-user audits system. Congrats, all!

It's been great working with the folks from @AvidMldb to launch a public version of IndieLabel, our prototype end-user auditing system (from our CSCW22 paper)! We hope this demo can seed further discussion and future work on user-driven AI audits ✨

0

6

24

Postdoc position: How should people and communities articulate how AIs should navigate difficult tradeoffs? Prof. @sanmikoyejo and I have a jointly mentored postdoctoral scholar position open at @Stanford CS starting in the fall. Information here:

0

7

24

Many objectives that have historically been out of reach for feed ranking algorithms may now be possible. As an example, @JiaChenyan and @michelle123lam demonstrated that social science constructs around democracy attitudes can be well modeled in ranking!

Can we design AI systems to consider democratic values as their objective functions? Our new #CSCW24 paper w/ @michelle123lam, Minh Chau Mai, @jeffhancock, @msbernst introduces a method for translating social science constructs into social media AIs (1/12)

1

0

25

A reviewer called @lindsaypopowski's work "a rare example of a 'full stack' CSCW paper. It is an unusual system paper that feels theoretically motivated and is evaluated experimentally against a meaningful control." Congratulations, @lindsaypopowski!

1

3

24

Thanks for joining us, @FarnazJ_! It's been a blast!

Our good friend @FarnazJ_ is going off to @UMichCSE as an Assistant Professor! We are so lucky to have been able to share time with Farnaz-- she is a brilliant and talented researcher, a fun and empathetic friend, and overall great person to be around ❤️ We will miss her dearly!

1

1

21

Congratulations, Professor @josephseering! Can't wait to see you take flight as faculty!

I'm thrilled to announce that I will be joining KAIST in Fall 2023 as an Assistant Professor in the School of Computing!

3

0

22

PBD for LLM: a small number of demonstrations can be very powerful in customizing and adapting LLM behavior. Work led by @oshaikh13:

LLMs sound homogeneous *because* feedback modalities like rankings, principles, and pairs cater to group-level preferences. Asking an individual to rank ~1K outputs or provide accurate principles takes effort. What if we relied on a few demos to elicit annotator preferences?

0

0

21

In a governance metaphor, centralized platforms ain't democracies: by @margaretlevi @henryfarrell @timoreilly. But decentralized platforms are essentially anarchic, and there are good reasons governance doesn't work that way either:

3

1

17

Thank you for the invite to join the SIGCHI Winter School in Colombo! I had a great time!

We, the @Colombo_SIGCHI, are incredibly honored to host @msbernst in #SriLanka at the Winterschool supported by @sigchi, @Google, and @UCSC_LK. #HCI #HCI4SoutAsia @HCI4SouthAsia

1

1

20

A chance to help launch @mitchellgordon's AI+HCI group at @MITEECS!

I’m recruiting PhD students and there are still a few days left to apply! If you’re excited about working at the intersection of HCI and AI, come join my new group @MITEECS. Please submit by 12/15!

1

0

20

Realtime LLM-powered social media reranking field experiment led by @tizianopiccardi! Result: algorithmically reranking expressions of Antidemocratic Attitudes and Partisan Animosity (AAPA) impacts affective polarization.

New paper: Do social media algorithms shape affective polarization? We ran a field experiment on X/Twitter (N=1,256) using LLMs to rerank content in real-time, adjusting exposure to polarizing posts. Result: Algorithmic ranking impacts feelings toward the political outgroup!🧵⬇️

1

1

19

Tiziano has a technical talent that has enabled him to go after incredibly ambitious computational social science projects. Keep an eye out for one of them coming soon!

Academic job market post! 👀. I’m a CS Postdoc at Stanford in the @StanfordHCI group. I develop ways to improve the online information ecosystem, by designing better social media feeds & improving Wikipedia. I work on AI, Social Computing, and HCI. 🧵

0

2

20

Model sketching lets you rapidly iterate over the concepts that you want your model to capture, rather than the details of the prompt or the learning rate. Upcoming work at #chi2023 by @michelle123lam!

When building ML models, we often get pulled into technical implementation details rather than deliberating over critical normative questions. What if we could directly articulate the high-level ideas that models ought to reason over? #CHI2023. 🧵

0

4

20

Unstructured text analysis with LLMs: talk today at 9:45am by @michelle123lam!

Frustrated with topic models? Wish emergent concepts were interpretable, steerable, and able to classify new data? Check out our #CHI2024 talk on Tues 9:45am in 316C (Politics of Datasets)! Or try LLooM, our open-sourced tool :) ✨

1

1

19

A policy brief conveying some of the lessons of our survey experiment comparing the perceived legitimacy of different content moderation strategies. I'm not sure any of the student leads are on Twitter, so instead I'll just take this moment to remind you that @amyxzh is great.

NEW POLICY BRIEF: Policymakers play an important role in shaping the future of online speech and content moderation. Our latest brief seeks to understand people’s perceptions of content moderation legitimacy, providing a pathway for better online platforms.

1

1

19

Welcome to @StanfordHCI and @StanfordHAI! We’re excited that you’ll be with us!

2 (rather overdue but still very exciting) announcements: I’ll be joining @UMichCSE as an assistant professor in fall 2024! And this year, I’ll be a postdoc at @StanfordHAI working with @sanmikoyejo and @msbernst! Beyond thrilled for these next steps 😊

1

3

17

PhD students interested in HCOMP and Collective Intelligence: there are travel scholarships! Apply here.

Student scholarships are available for HCOMP/CI @hcomp_conf 2023! Deadline to apply is Sept 10, 2023: please share to spread the word!

0

2

18

"Let's think step by step" increases the bias of large language models. Avoid if your task involves social inferences!. Work led by @oshaikh13:.

Chain of Thought reasoning prompts—like "Let's think step by step"—make large language models more performant. Including, it turns out, at spewing out toxic and biased content. In our preprint, we evaluate zero-shot CoT on harmful questions & stereotypes:

0

4

17

"How might HCI engage with easy access to unintuitive statistical likelihoods of things?" by @jeffbigham .

1

1

17

This is how the internet gets a screenshot of me writing "2+2=5" on a whiteboard. Check out the video about @HelenasResearch! Her project on XAI overreliance will appear at CSCW 2023.

Do you want to learn about how explanations can help reduce overreliance on AIs? Watch this fantastic, out-of-this-world, one-of-a-kind, spectacular, etc. short video explaining our work! We put a lot of ❤️ into it and would appreciate the views.

2

3

15

I’ve been using LLooM to analyze themes in student reading responses, live text feedback during talks, and course reviews. Try out the CoLab notebook!

“Can we get a new text analysis tool?” “No, we have Topic Model at home.” Topic Model at home: outputs vague keywords; needs constant parameter fiddling🫠. Is there a better way? We introduce LLooM, a concept induction tool to explore text data in terms of interpretable concepts🧵

1

0

13

Helena led this work, with collaborators @jorke Matthew Jörke, @MadeleineGrunde, @tobigerstenberg, me, and @RanjayKrishna. Go Helena!

1

0

15

Congratulations on the launch, Fei-Fei!

After 3+ years, today is the day that my book “The Worlds I See” gets to see the world itself. It is a science memoir of the intertwining histories of me becoming an #AI scientist, and the making of the modern AI itself. All versions are now on Amazon 1/

3

1

15

Work with faculty including @AngeleChristin (Communication), Jeanne Tsai (Psych), @jeffhancock (Communication), @jugander (MS&E), myself, Nate Persily (Law), @RobbWiller (Sociology, Psychology, Business), and @tatsu_hashimoto. Postdocs get joint mentorship by a pair of faculty.

10

3

13

Lindsay’s organizing a CSCW workshop!

Excited to share our @ACM_CSCW 2024 in-person workshop on envisioning new futures of positive social technology! We'll explore CSCW research outside of the existing platforms/paradigms for social tech: current research, promising directions, and how to support each other's work.

0

0

11

@eytanadar I'd even be satisfied with someone building a PCS tool that estimates how likely someone is to sit on a review invite for 1wk+ and then decline.

1

0

13

@JessicaHullman While I’ll never be as cool as @cfiesler, once there were over 400k views on this TikTok it was clear that the correct response was for me to just own the Holland vibes

3

0

11

Congratulations, @joon_s_pk!

I am honored to be named a 2022 Microsoft Research PhD Fellow! Thank you so much to my advisers, @msbernst and @percyliang, as well as @merrierm, @kkarahal, and everyone who shaped me as a researcher. I'm really excited to continue exploring the intersection of HCI and AI!

1

1

11

Mass rejections have been a thorn in Mechanical Turk workers' side for a decade. In the meantime, other marketplaces have developed more mature mechanisms for handling complaints, appeals, and bad actors, which @amazon could draw on and implement. Let's make this happen.

In February we delivered a petition to Amazon #MTurk with over 2000 signatures calling on them to amend their mass rejection policies (see . 1/2.

0

5

11

Thank you @jordantroutboy @joon_s_pk @lindsaypopowski @michelle123lam @mitchellgordon @beleiciabullock @josephseering!

0

0

10

Stanford's @stanfordsymsys program is hiring its first lecturer! Symbolic Systems was my undergraduate major, focused on combining minds and machines: its foci include AI, cognitive science, NLP, neuroscience, and HCI. Information and application here:

0

5

11

@UpolEhsan I’m probably in the minority here, but I intentionally didn’t give my group a name. I felt it would be more externally confusing when my students introduce themselves: “I’m in the XYZ Lab!” “Uh…ok?” So I opted to throw my weight behind the multi-faculty “Stanford HCI Group”.

1

0

10

@IanArawjo The methodological pluralism of HCI, which is one source of its strength, also makes it challenging to learn the individual methodological traditions well. It’s like we come in and try to play jazz, pop rock, classical, and podcasting all in a year or two.

2

2

10

Research takes unpredictable turns. Joon and I were inspired by work that @unignorant began 9 years ago (!) Here's a screenshot from our original rejected paper in 2014. That rejected research later evolved into Augur (. Something something persistence?

1

0

10

Unlike typical SIGCHI workshops, UIST workshops will be open enrollment — limited spaces, first-come, first-served. There is no position paper. Just make sure to register early before it fills up. Co-organizers: @joon_s_pk @merrierm @SaleemaAmershi @LydiaChilton @mitchellgordon.

0

1

10

@hcomp_conf and @ci_acm are integrating more deeply this year, with a joint CFP and two program subcommittees. CI adds archival publication, and HCOMP adds a non-archival option. Abstracts due 6/2.

0

5

9

In blind comparisons, participants were rarely able to distinguish social simulacra from real content. In evaluation with social computing designers, @joon_s_pk and @lindsaypopowski saw designers use social simulacra to refine communities' goals, rules, and moderation responses.

1

0

8