Dan (@dan_p_simpson)

Followers: 5,173 · Following: 718 · Media: 1,031 · Statuses: 11,166

@meakoopa

The first NFT musical was Phantom because it began with the sale of a useless monkey that someone else owned.

It's impossible to stress enough how good linear and logistic regression are at what they do. If there's structure, add it. Don't rely on deep learning ideas, because deep learning is not data efficient by design.

This is a nice tool we built. We wanted some scalable approximate Bayes that plays nicely with PyTorch, allows for flexible likelihoods, and works well with transformers. We couldn't find anything that hit all of our needs, so we (@Sam_Duffield mainly) built it. More methods to

Every single witch spell I've hit so far from the @WorldsBeyondPod witch playtest is SO GOOD, even if one of my players knocked herself out because she didn't read Breath of Belladonna carefully enough.

The absolute chaos of this is giving me life.

An extremely fun paper on massively parallel MCMC on a GPU, led by my final PhD student Alex and, of course, the dream team of @avehtari, @jazzystats, and Catherine! It definitely threw up a pile of interesting issues

Propensity scores are great. The idea that the observed data design might tell us something about the selection mechanism is clever. Using variants of inverse probability weighting when you don't have control over the lower bound of those estimated probabilities is a recipe for heavy
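A minimal sketch of why that lower bound matters, assuming a hypothetical simulation design: propensities drawn uniformly on `[lower, 0.9]`, treatment assigned with exactly those probabilities, and every outcome equal to 1, so the target value is exactly 1. The function names `ipw_mean` and `simulate` are illustrative only. Each treated unit gets weight 1/e, which is unbounded as e approaches 0, so letting propensities get smaller inflates the estimator's variance.

```python
import random
from typing import List, Sequence, Tuple


def ipw_mean(outcomes: Sequence[float], treated: Sequence[int],
             propensities: Sequence[float]) -> float:
    """Horvitz-Thompson style IPW estimate of the mean outcome under treatment.

    Each treated unit is up-weighted by 1 / e_i, where e_i is its probability
    of treatment. Nothing bounds that weight as e_i approaches 0.
    """
    n = len(outcomes)
    total = sum(y / e for y, t, e in zip(outcomes, treated, propensities) if t)
    return total / n


def simulate(lower: float, n: int = 500, reps: int = 300,
             seed: int = 1) -> Tuple[float, float]:
    """Monte Carlo mean and variance of ipw_mean under a hypothetical design.

    Propensities are uniform on [lower, 0.9], treatment is drawn with exactly
    those probabilities, and every outcome is 1, so the target value is 1.
    """
    rng = random.Random(seed)
    estimates: List[float] = []
    for _ in range(reps):
        e = [rng.uniform(lower, 0.9) for _ in range(n)]
        t = [1 if rng.random() < ei else 0 for ei in e]
        y = [1.0] * n
        estimates.append(ipw_mean(y, t, e))
    mean = sum(estimates) / reps
    var = sum((x - mean) ** 2 for x in estimates) / (reps - 1)
    return mean, var


for lower in (0.2, 0.01, 0.001):
    mean, var = simulate(lower)
    print(f"lower bound {lower}: mean {mean:.3f}, variance {var:.4f}")
```

The estimator is unbiased in every setting; what changes is its spread, because rare treated units with tiny propensities contribute very large terms. The design, bounds and sample sizes are assumptions chosen for illustration.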

This is the inevitable result of people using a transformer when what they really were looking for is a DATABASE. Sure. Use generative AI to smooth the UI, but if you don't have a clean knowledge base at the bottom of your stack, your generative AI is gonna, you know, generate.

i asked SARAH, the World Health Organization's new AI chatbot, for medical help near me, and it provided an entirely fabricated list of clinics/hospitals in SF. fake addresses, fake phone numbers.

check out @jessicanix_'s take on SARAH here:

via @business

I got a sneak peek of @djnavarro's work-in-progress notes for her @rstudio conference workshop, and I am literally stunned at how good they are. Truly gobsmacked. A queen walks among us.

I am, once again, begging people to remember that the posterior for the “bayesian lasso” behaves nothing like the frequentist lasso estimator (except that the latter coincides with the former’s mode, which is a poor posterior summary)

@SolomonKurz @wesbonifay

My reasoning is that the choice of distribution for the prior has strong theoretical and practical implications for the inferential problem. e.g., normal vs double-exponential prior imply different forms of penalized likelihoods:
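The correspondence in the quoted tweet, written out for a linear model $y = X\beta + \varepsilon$ with $\varepsilon \sim \mathcal{N}(0, \sigma^2 I)$ and independent priors on the coefficients (a standard derivation; the notation is ours):

$$
\begin{aligned}
\log p(\beta \mid y) &= -\frac{1}{2\sigma^2}\lVert y - X\beta \rVert_2^2 + \log p(\beta) + \text{const}, \\
\beta_j \sim \mathcal{N}(0, \tau^2) &\;\Rightarrow\; \hat\beta_{\text{MAP}} = \arg\min_\beta \tfrac{1}{2}\lVert y - X\beta \rVert_2^2 + \tfrac{\sigma^2}{2\tau^2}\lVert \beta \rVert_2^2 \quad \text{(ridge)}, \\
\beta_j \sim \text{Laplace}(0, b) &\;\Rightarrow\; \hat\beta_{\text{MAP}} = \arg\min_\beta \tfrac{1}{2}\lVert y - X\beta \rVert_2^2 + \tfrac{\sigma^2}{b}\lVert \beta \rVert_1 \quad \text{(lasso)}.
\end{aligned}
$$

Only the mode matches the lasso estimator. Because the Laplace prior is continuous, the posterior gives probability zero to any coefficient being exactly zero, so posterior draws, posterior means and credible intervals are generally not sparse the way the lasso estimate is.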

I cannot stress enough that nothing works perfectly in statistics. Your god will betray you. But a lot of things work well enough for the situation. Stay limber, be flexible, have fun.

For discrete distributions (even with ∞ support), the MLE converges a.s. in TV. This naive estimate is obviously not going to work for continuous distributions, but surely *something else* will?!

Nope, nothing.

@Tjdriii
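For a distribution on a countable set, the nonparametric MLE is the empirical pmf (the observed frequency of each value), and total variation is half the ℓ1 distance between pmfs. A small simulation sketch, assuming a Geometric(0.3) target chosen purely for illustration (the helper names are hypothetical), shows the distance shrinking as n grows; it illustrates the claim and proves nothing about almost-sure convergence. The same estimator fails for continuous targets because the empirical measure puts all its mass on finitely many points, which have probability zero under any continuous distribution, so its TV distance to that distribution is always 1.

```python
import math
import random
from collections import Counter
from typing import Sequence


def geometric_pmf(k: int, p: float) -> float:
    """P(X = k) for a geometric distribution on {0, 1, 2, ...}."""
    return (1.0 - p) ** k * p


def sample_geometric(rng: random.Random, p: float) -> int:
    """Draw from Geometric(p) on {0, 1, 2, ...} by inversion."""
    u = rng.random()  # u in [0, 1), so 1 - u is in (0, 1]
    return int(math.log(1.0 - u) / math.log(1.0 - p))


def tv_to_geometric(samples: Sequence[int], p: float) -> float:
    """Total variation distance between the empirical pmf and Geometric(p).

    TV = 0.5 * sum_k |p_hat(k) - p(k)|. Beyond the largest observation m the
    empirical pmf is zero, so that tail contributes exactly (1 - p) ** (m + 1).
    """
    n = len(samples)
    counts = Counter(samples)
    m = max(counts)
    total = sum(abs(counts[k] / n - geometric_pmf(k, p)) for k in range(m + 1))
    total += (1.0 - p) ** (m + 1)
    return 0.5 * total


rng = random.Random(0)
p = 0.3
for n in (100, 1_000, 100_000):
    xs = [sample_geometric(rng, p) for _ in range(n)]
    print(n, round(tv_to_geometric(xs, p), 4))
```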

For the daytime people who are interested in Laplace approximations and trying to do strange things in JAX. Also, should anyone know of a job in NYC, I am looking!

This is definitely true. The other thing to do is to learn classical stats well enough that it’s not embarrassing when you give reasons why bayes is better.

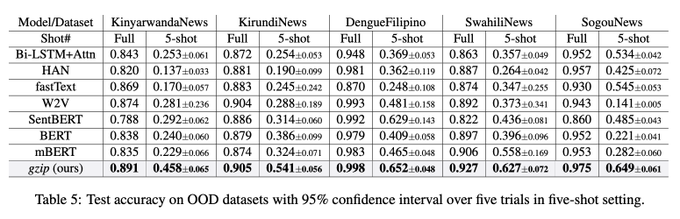

People seem surprised by this, but it’s just the latest in a long line of examples that shows that clever modelling will often beat generic, brute-force, scale-is-all-you-need methods.

Matlab is not built for statistical computing and should not be used anywhere near data. It's a teaching language for people whose program was written before Python stabilised.

Well, this is cool: a proper set of hooks into @mcmc_stan for evaluating the compiled log-densities and gradients in Python/Julia/R. Great for algorithm development!

Are we still doing this? Your methodology will never justify your existence. Understanding bayes makes you a better frequentist. Understanding proper frequentism makes you a better bayesian. Econometrics, however, is the one that doesn't make you better at anything.

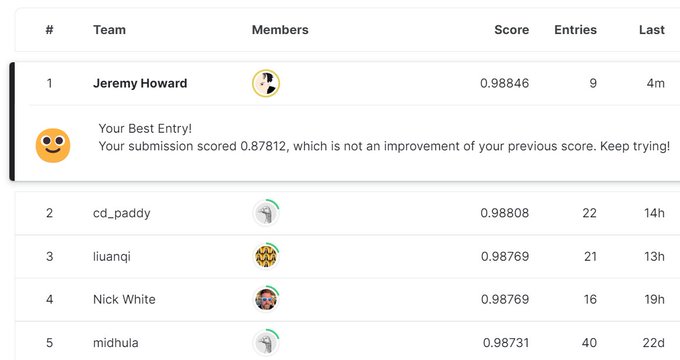

The third decimal place: A Kaggle journey.

Are you ready to embark on a deep learning journey? I've just released over 6 hours of videos and the first in a series of notebooks showing the thought process of how I got to #1 in a current Kaggle comp.

Follow this 🧵 for updates on the journey!

Working at @NormalComputing is pretty nice. We've got an MLE job opening soon (specifically for MLEs with some data experience). Watch this space.

Some random thoughts on this paper, which is a nice review of what marginal likelihoods can and can't do:

The marginal likelihood (evidence) provides an elegant approach to hypothesis testing and hyperparameter learning, but it has fascinating limits as a generalization proxy, with resolutions.

w/ @LotfiSanae, @Pavel_Izmailov, @g_benton_, @micahgoldblum

1/23
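For reference, in standard notation (ours, not the paper's): the evidence of a model $\mathcal{M}$ averages the likelihood over the prior, the Bayes factor compares two models by their evidence, hyperparameters $\alpha$ can be fit by maximising it (type-II maximum likelihood), and the chain rule rewrites it as a sum of one-step-ahead predictive log densities, which is one way to see why it gets used as a generalization proxy:

$$
\begin{aligned}
p(y \mid \mathcal{M}) &= \int p(y \mid \theta, \mathcal{M})\, p(\theta \mid \mathcal{M})\, d\theta, \\
\mathrm{BF}_{12} &= \frac{p(y \mid \mathcal{M}_1)}{p(y \mid \mathcal{M}_2)}, \\
\hat\alpha &= \arg\max_{\alpha} \int p(y \mid \theta)\, p(\theta \mid \alpha)\, d\theta, \\
\log p(y \mid \mathcal{M}) &= \sum_{i=1}^{n} \log p(y_i \mid y_1, \dots, y_{i-1}, \mathcal{M}).
\end{aligned}
$$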

It's ALWAYS the data. That's the most important lesson for anything within the stats/ML/AI space. It is always the data.

@alz_zyd_

when i train or fine tune a model i like to look at validation set examples where it does well or poorly and do a lookup in the training set for similar examples.

every time i have been pleasantly or unpleasantly surprised at "why did my model do that?" looking at a few nearest
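A minimal sketch of the lookup described in the quoted tweet, assuming every example has already been turned into a fixed-length vector (for instance a model embedding; how those vectors are produced is left open here). It ranks training examples by cosine similarity to one validation example, which is one reasonable notion of "similar" among many. The helper names and the toy vectors are hypothetical.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_training_examples(query: Sequence[float],
                              train_vectors: Sequence[Sequence[float]],
                              k: int = 3) -> List[Tuple[int, float]]:
    """Return (index, similarity) for the k training vectors closest to query."""
    scored = ((i, cosine_similarity(query, v)) for i, v in enumerate(train_vectors))
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Hypothetical 2-d embeddings standing in for real model features.
train = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
validation_example = [1.0, 0.05]
print(nearest_training_examples(validation_example, train, k=2))
```

In practice you would run this for the validation examples with the best and worst losses and then read the returned training examples by hand.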

People of twitter: I have once again blogged. And it's on sparse matrices. The long-awaited (by whom?) first part (!) of my much more serious attempt to make a sparse Cholesky factorization that works in #jax is here. It was mostly written on a plane.

This advice comes up a lot and I think it's bad, honestly. I have hired innumerable people at this point and I have _never_ been impressed enough by someone's blog or GitHub repos for it to move the needle.

LLMs instead of medical advisors for poor people is, you know, my personal idea of a tech dystopia. It’s kinda strange to see someone excited about it specifically.

If anyone is curious, the first time I saw someone do this was in ~2011 and it was done with some neuroscience of vision and a lasso.

I'm speechless.

Not peer-reviewed yet but a submitted paper.

The 'presented images' were shown to a group of humans. The 'reconstructed images' were the result of feeding the fMRI output into Stable Diffusion.

In other words, #stablediffusion literally read people's minds.

Source 👇

I mean, sure, but to my mind his masterpiece was this very funny, very insightful paper about maximum likelihood.

CRAN is simply not fit for purpose. They cannot keep doing this. There is literally no reasonable argument for this behaviour. None.

Alex, @jazzystats, @avehtari, Catherine, and I wrote a paper on this that I quite like. The tl;dr is that when there is dependence in the data, using joint predictive distributions leads to lower-variance CV estimators.
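To make the distinction concrete, here is one way to write the two kinds of score for K-fold cross-validation with held-out blocks $y_k$ and remaining data $y_{-k}$. The notation is ours and this sketches the general idea, not the paper's exact estimators. The pointwise score treats each held-out observation separately; the joint score evaluates each held-out block as a whole, and the two differ whenever the held-out observations are dependent given $y_{-k}$:

$$
\widehat{\mathrm{elpd}}_{\text{pointwise}} = \sum_{k=1}^{K} \sum_{i \in k} \log p(y_i \mid y_{-k}),
\qquad
\widehat{\mathrm{elpd}}_{\text{joint}} = \sum_{k=1}^{K} \log p(y_k \mid y_{-k}),
$$

$$
p(y_k \mid y_{-k}) = \int p(y_k \mid y_{-k}, \theta)\, p(\theta \mid y_{-k})\, d\theta.
$$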

The stuff in here is really amazing for anyone who’s interested in how things like Stan and PyMC could work (or, really, the next generation of them).

"Program Analysis of Probabilistic Programs"

My PhD thesis is now available on arXiv!

Contains:

- A short intro to #BayesianInference
- An intro to #ProbabilisticProgramming with examples in different PPLs
- PPL program analysis papers with commentary

In my life I have never remembered this. I just start talking about long tails.

@Whitehughes @pippinsboss @Mathowitz

I prefer to use the terms "positive" and "negative". I remember it like this:

Hear me out though! If visualisations are implicit models (and they are), we should sometimes check their ability to do their task. How? Buggered if I know. But the visual inference people have some interesting thoughts.

Not a big fan of language wars (except I fucking hate Matlab and it should be firmly left in the 90s/00s), but I do think there is a strong case, in a long program (aka not a one-year master's), for making sure graduates have an OK grasp of at least a few languages.

Nobody is less happy than someone who’s trying to fit every task into a single language.

@economeager

The awesome power of being dumb about things no one else understands is underrated.
