alex peysakhovich 🤖

@alex_peys

Followers

5,546

Following

792

Media

150

Statuses

1,268

Explore trending content on Musk Viewer

梅雨明け

• 196735 Tweets

BRANDS AI TALK x FOURTH

• 131411 Tweets

Raila

• 122601 Tweets

黒人奴隷

• 100733 Tweets

Halal

• 42781 Tweets

パワプロ

• 42607 Tweets

カルストンライトオ

• 34846 Tweets

#EnflasyonMuhasebesi

• 34716 Tweets

#TheBoys

• 31648 Tweets

Yunan

• 29115 Tweets

Harmony

• 23794 Tweets

Haas

• 20657 Tweets

Colabo

• 19263 Tweets

暇空敗訴

• 16039 Tweets

BarengPRABOWO MakinOPTIMIS

• 15420 Tweets

SEMANGATbaru EKONOMItumbuh

• 14850 Tweets

#WeMakeSKZStay

• 13235 Tweets

悪魔の証明

• 11817 Tweets

全員再契約

• 11268 Tweets

CROWN PRINCE SUNG HANBIN

• 11209 Tweets

Last Seen Profiles

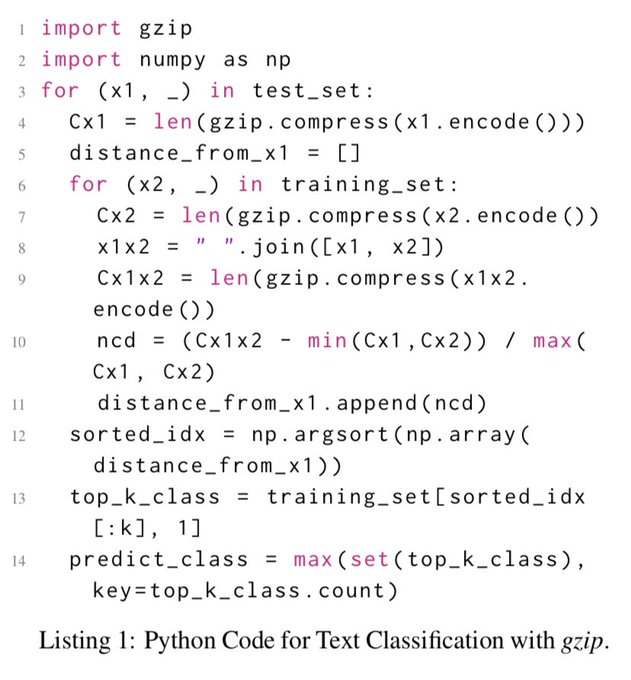

facebook didn't keep it secret, here is the paper that explains the early comment ranking system for exactly this issue

4

37

296

@Apoorva__Lal

you can also estimate null space dimension by “uniformly” randomly sampling vectors, hitting them with the matrix, and seeing what % are 0. no numpy required

2

1

202

the foundation of machine learning is that inside every big matrix lives a much smaller matrix and you should just use that

7

16

193

there is a huge gain to be made by every company on the planet by just running every file in their codebase through gpt4 with the prompt "what's wrong with this?"

3

10

149

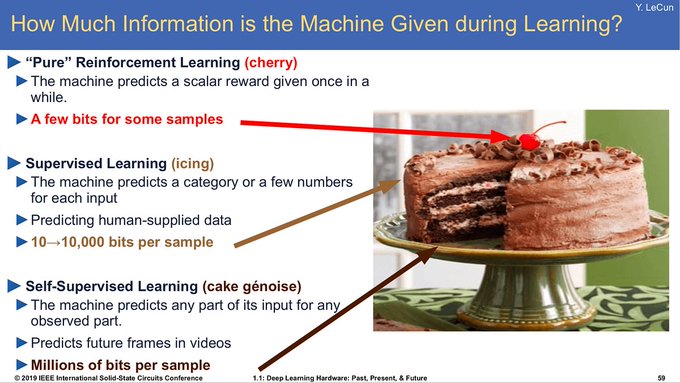

lots of us are too busy working on this stuff to get in these debates on twitter, but yann is completely right here (just like he was with the cake thing, and the neural net thing, and and and)

4

4

126

@tszzl

i find these salaries surprisingly low given the value provided and the working conditions. if you scale cardiac surgeon to 40 hours per week that's ~400k, that's like an L6 at google/fb....

8

1

88

authorship norms differ a lot across fields...

cs: "oh, we talked about this at lunch for 5 min, you should be a coauthor!"

econ: "you spent weeks in a library collecting data and you want to be a coauthor? gtfo!"

...you can guess which one creates more collegial atmosphere

3

3

79

@GrantStenger

agree that in theory bfs/dfs is better but if you compute rarely (are you decomposing 10m vertex graph every second? why?), it doesn’t really matter what you use as long as it runs. we did randomized svd on huge graphs all the time at fb and it was fine.

2

0

59

@aryehazan

random matrix theory and various concentration results are all extremely unintuitive (at least to me).

1

2

49

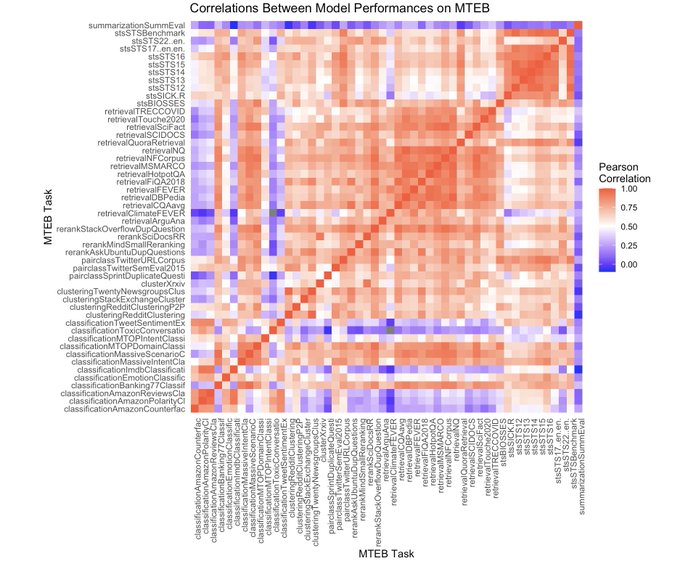

been playing with the

@huggingface

mteb leaderboard () all day, super interesting dataset with very interesting correlation pattern across tasks. if you're good at one retrieval, you're good at all of them. other stuff? much less predictable

2

9

45

this is the correct take. llm are the glue code that will allow us to put so many other technologies together. if you think of the llm as originating in machine translation this shouldn't be too surprising - they're exactly great for translating between many different modalities

3

8

43

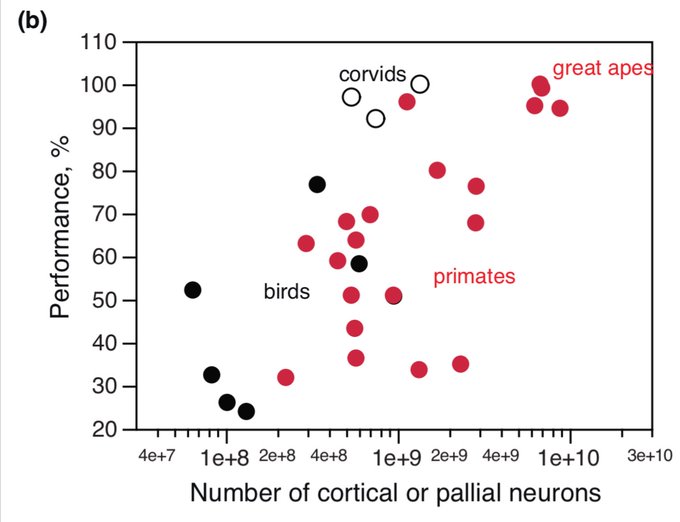

neural network architectures should just copy whatever corvid brains are doing

@norabelrose

There’s also at least some tasks on which performance scales linearly with log pallial neuron count.

5

4

48

1

2

42

if i'm going to complain about companies not releasing their models/model data, i should give credit

@MosaicML

has a nice release of mpt with full transparency. have been playing with the instruct model and it's pretty impressive!

2

2

43

i loved doing my phd. when i saw the academic marker afterwards i noped out but the phd itself was super fun, i had great advisors (one of whom won a nobel during when i was his student and still met with me that week to discuss an experiment i was running) and learned a ton

there's a lot of shit talking grad school on here but genuinely i loved grad school, it was amazing, and i am grateful to the

@CornellSoc

program for the opportunity

0

0

8

2

0

42

@tszzl

america goes up and down but those of us who came here from other countries realize that the us at its worst is better than many places at their best

1

2

37

all problems in life can be solved if you realize that within every really big matrix hides a much smaller matrix that preserves most of the information

0

2

36

given the way some of these detectors tend to work (look at whether something is highly likely under the model) it seems like any document that the model has memorized will trigger the detector as "AI generated"

2

2

34

llm development went from "release paper with full details" to "release model evaluations but not training details" to "here are some videos" real quick

2

2

30

@cauchyfriend

i don’t know what a class is in python and i worked on some of the most used internal and user facing things at fb for 9 years

2

0

26

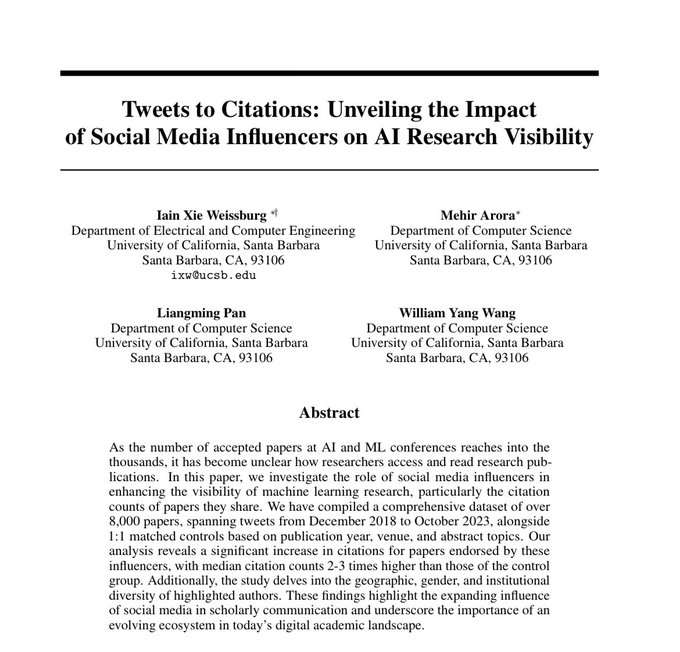

this is not a randomized experiment. the much more likely story here is that twitter is better at *figuring out* which of two papers with similar abstracts/conference accepts will be important later, not that twitter *causes* it

Crazy AF. Paper studies

@_akhaliq

and

@arankomatsuzaki

paper tweets and finds those papers get 2-3x higher citation counts than control.

They are now influencers 😄 Whether you like it or not, the TikTokification of academia is here!

64

286

2K

2

0

27

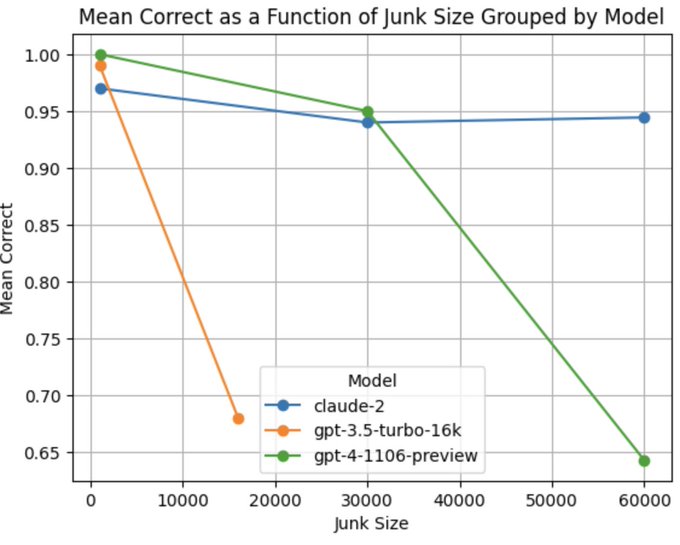

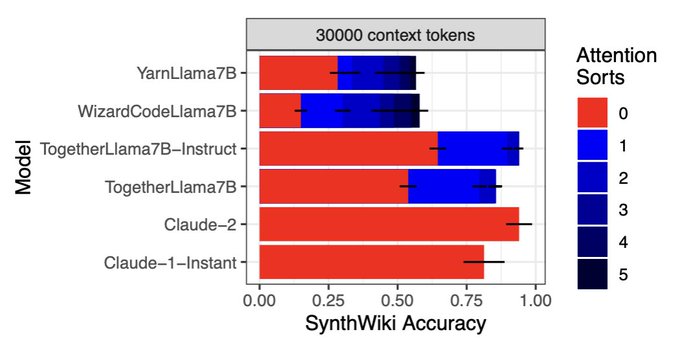

excited to finally drop a paper about an idea

@adamlerer

and i have been messing around with for a while

tldr: in a simple qa task, re-sorting documents in llm context by attention it pays to them, and *then* generating improves accuracy a bunch 1/n

1

6

27

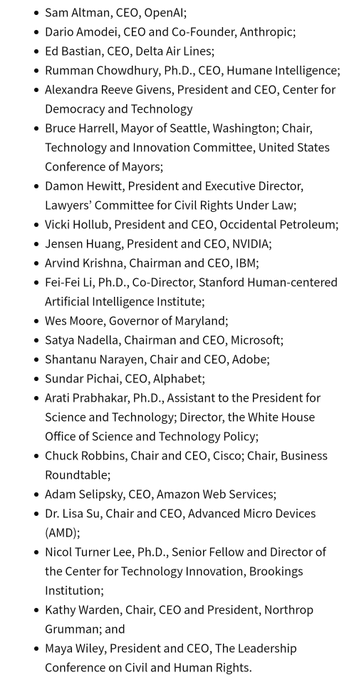

this board is a scam, why are there random ceos of oil and airplane companies, only a few legit scientists, and nobody from meta?

1

0

25

@johnpdickerson

weaknesses

math is hard

questions

why math so hard?

ethics review flags

why make me read math? just waterboard me already

1

0

25

tired: all embeddings are data compression

wired: all data compression is an embedding

1

2

25

neat guide. hits one of my favorite metaphors: "statistics is like baking, you need to follow the recipe exactly, ML is like cooking, you need to constantly taste and adjust spices"

2

7

24

ugh these econometricians, everyone knows that the more layers your neural network has the more causal it is.

2

0

23

@Apoorva__Lal

im actually curious now: is there a fundamentally easy way to estimate size of null space from appropriately chosen observations (x, Ax)?

@ben_golub

nerd snipe here

0

0

22

come join tech where nobody cares if you just type in all lower case without punctuation or grammar

In your application letter for

#PhD

/ postdoc, NEVER ever say:

"Hi prof"

"Hello"

"Dear Professor"

"Greetings of the day"

If you do, your email will be immediately deleted by 99% of professors.

▫️

Only start your applications with “Dear Prof. [second_name],”

And don’t

289

338

3K

1

0

21