DailyHealthcareAI

@aipulserx

Followers: 807 | Following: 103 | Statuses: 1K

Sharing research papers and news on AI applications in radiology, pathology, genetics, protein design and many more. Let's learn together!

San Mateo, CA, USA

Joined April 2024

scGPT trained on 30 million spatial single-cell profiles!

🚀 Introducing scGPT-spatial! 🧬🌍 A spatial-omic foundation model built on the scGPT framework with a mixture-of-experts (MoE) architecture and continually pretrained on 30 million spatial single-cell profiles! 🧠 What's the challenge? Spatial transcriptomics is especially complex: we must model single-cell/spot profiles, capture intricate spatial relationships, and handle diverse sequencing protocols (imaging-based vs. sequencing-based). 🔥 Why scGPT-spatial? ✨ A Spatial-omic Foundation Model with Continual Pretraining: built on scGPT's initialization, it unlocks spatial context in tissues. ✨ SpatialHuman30M Dataset: the largest curated dataset, with 30M profiles from Visium, Visium HD, Xenium, and MERFISH across 821 slides. ✨ MoE Decoders: a Mixture of Experts architecture for protocol-aware gene expression decoding. ✨ Spatially-Aware Training Strategy: a neighborhood-based masked reconstruction approach to capture complex cell-type colocalization. ✨ Multi-Modal & Multi-Slide Integration: clustering & spatial domain identification across slides and modalities. ✨ Cell-Type Deconvolution & Gene Imputation: cross-resolution & cross-modality harmonization with fine-tuned embeddings. 📄 Read the preprint: 💻 Explore the code/weights: #SpatialTranscriptomics #SingleCell #AIResearch #MachineLearning #SpatialData Huge shoutout to the incredible PhD students Chloe (@ChloeXWang1) and Haotian (@HAOTIANCUI1) for leading this project! 🎉 Massive thanks to our amazing co-authors Andrew, Ronald, and Hani (@genophoria) from @arcinstitute; this work wouldn't have been possible without you! 👏
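
A generic softmax-gated mixture-of-experts decoder can be sketched as follows. This is not scGPT-spatial's code: the gate and expert matrices, the function names, and the choice to let the gate read only the cell embedding are illustrative assumptions; the post only says the MoE decoders are protocol-aware.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = Sequence[Sequence[float]]


def linear(weights: Matrix, x: Sequence[float]) -> Vector:
    """Matrix-vector product: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_decode(embedding: Sequence[float], gate: Matrix, experts: Sequence[Matrix]) -> Vector:
    """Blend per-expert gene-expression predictions with softmax gate weights.

    gate:    one row per expert, scoring that expert for this embedding (hypothetical).
    experts: one linear decoder per expert, mapping embedding -> genes (hypothetical).
    """
    weights = softmax(linear(gate, embedding))  # one weight per expert, sums to 1
    outputs = [linear(w_expert, embedding) for w_expert in experts]
    n_genes = len(outputs[0])
    return [sum(g * out[j] for g, out in zip(weights, outputs)) for j in range(n_genes)]


# Two toy experts over a 2-D embedding and 2 "genes"; an all-zero gate weights them equally.
pred = moe_decode([1.0, 0.0], gate=[[0.0, 0.0], [0.0, 0.0]],
                  experts=[[[2.0, 0.0], [0.0, 0.0]], [[4.0, 0.0], [1.0, 0.0]]])
print(pred)  # [3.0, 0.5]
```

In a protocol-aware variant, one could append a one-hot protocol indicator (e.g. Visium vs. Xenium) to the gate input so that different technologies route to different experts; how scGPT-spatial actually conditions its gate is not described in the post.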

Can self-supervised machine learning help identify genetic modifiers of neuronal activity in neurodegenerative diseases by analyzing complex calcium imaging data? @biorxivpreprint @UCSF "Network-aware self-supervised learning enables high-content phenotypic screening for genetic modifiers of neuronal activity dynamics" • A team of researchers from UCSF, GSK, and UC Berkeley developed Plexus, a network-aware self-supervised learning model that outperforms existing methods in capturing neuronal activity dynamics from calcium imaging data, achieving up to 97.29% accuracy in classifying simulated phenotypes versus 85.68% for traditional approaches. • The study addresses the challenge of identifying genes involved in complex neuronal diseases with an integrated experimental and computational framework that combines iPSC-derived neurons carrying GCaMP6m calcium indicators and CRISPRi machinery with a novel self-supervised learning model for analyzing network-level neuronal activity patterns. • The methods involved generating iPSC-derived neurons and astrocytes, engineering cells with GCaMP6m and CRISPRi capabilities, performing calcium imaging at 25 Hz for 90 seconds, developing a multivariate Hawkes process simulation framework, and building the Plexus model, which processes network-level neuronal activity with transformer architectures and 768-dimensional embeddings. • The study validated the approach through multiple experiments: 97.29% accuracy in classifying simulated data, superior phenotype detection with neuroactive compounds, 1.95x more phenotypes identified in a 52-gene CRISPRi screen across two cell lines, and discovery of potential genetic modifiers (MAT2A, BIN1, GRIA2) of frontotemporal dementia-associated neuronal activity patterns. Authors: @parkergrosjean et al., @MartinUCSF Link:
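
The post mentions a multivariate Hawkes process simulation framework for generating synthetic network activity. As a minimal sketch (not the Plexus simulator; the exponential kernel, parameter names, and values are assumptions), here is Ogata's thinning algorithm for a multivariate Hawkes process in which each spike of neuron j transiently raises the firing intensity of neuron i by alpha[i][j] * beta * exp(-beta * dt).

```python
import math
import random
from typing import List, Sequence, Tuple

Event = Tuple[float, int]  # (spike time, neuron index)


def intensities(t: float, events: Sequence[Event], mu: Sequence[float],
                alpha: Sequence[Sequence[float]], beta: float) -> List[float]:
    """Conditional intensity of every neuron at time t given past spikes (s <= t)."""
    lam = list(mu)  # baseline rates
    for s, j in events:
        if s <= t:
            kernel = beta * math.exp(-beta * (t - s))  # integrates to 1 over dt >= 0
            for i in range(len(mu)):
                lam[i] += alpha[i][j] * kernel
    return lam


def simulate_hawkes(mu: Sequence[float], alpha: Sequence[Sequence[float]],
                    beta: float, horizon: float, seed: int = 0) -> List[Event]:
    """Ogata thinning. With exponential kernels the total intensity only decays
    between spikes, so its value at the current time bounds it from above
    until the next accepted spike."""
    rng = random.Random(seed)
    events: List[Event] = []
    t = 0.0
    while True:
        lam_bar = sum(intensities(t, events, mu, alpha, beta))
        t += rng.expovariate(lam_bar)            # candidate spike time
        if t >= horizon:
            return events
        lam = intensities(t, events, mu, alpha, beta)
        total = sum(lam)
        if rng.random() * lam_bar <= total:      # accept with prob total / lam_bar
            u = rng.random() * total             # choose a neuron proportionally to its intensity
            i = 0
            while i < len(lam) - 1 and u > lam[i]:
                u -= lam[i]
                i += 1
            events.append((t, i))


# Neuron 0 excites neuron 1; branching ratios stay below 1 so activity is stable.
spikes = simulate_hawkes(mu=[0.5, 0.2], alpha=[[0.0, 0.0], [0.6, 0.0]],
                         beta=5.0, horizon=100.0, seed=42)
```

Here alpha[i][j] is the expected number of extra spikes one spike of neuron j triggers in neuron i; keeping the spectral radius of alpha below 1 keeps the simulated network from running away.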

How can protein structure information be leveraged to better predict the pathogenicity of missense variants in genetic diseases? @biorxivpreprint "Utilizing protein structure graph embeddings to predict the pathogenicity of missense variants" (v2) • Rare diseases affect 5% of the global population, and 80% of them have genetic causes, but identifying pathogenic variants among millions of genetic variations remains challenging; variants of uncertain significance (VUS) are particularly problematic among missense variants. • While computational methods exist to predict variant pathogenicity, current approaches rarely use three-dimensional protein structure data directly, even though protein structure prediction models such as AlphaFold and ESMFold have made such information widely available. • The researchers developed a machine learning workflow that uses ESMFold to predict protein structures for 63,914 missense variants, converts them into graph representations at the atomic and residue levels, generates embeddings with graph autoencoders, and combines these with population metrics to train XGBoost classifiers for pathogenicity prediction. • The best-performing model achieved an AUROC of 0.924 by combining atomic- and residue-level structural embeddings (128 dimensions each); adding evolutionary conservation scores (phyloP) raised performance to 0.932 AUROC, showing that protein structure information adds complementary value to existing variant classification approaches. Authors: Martin Danner et al., Jeremias Krause Link:
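
One common way to turn a predicted structure into a residue-level graph is to connect residues whose Cα atoms lie within a distance cutoff. The sketch below assumes an 8 Å cutoff and plain coordinate tuples; the post does not say which edge definition or cutoff the authors used, and parsing ESMFold output is left out.

```python
import math
from typing import Dict, List, Set, Tuple

Coord = Tuple[float, float, float]


def residue_contact_graph(ca_coords: List[Coord], cutoff: float = 8.0) -> Dict[int, Set[int]]:
    """Adjacency sets: residues i and j are linked if their C-alpha atoms are <= cutoff Å apart."""
    graph: Dict[int, Set[int]] = {i: set() for i in range(len(ca_coords))}
    for i in range(len(ca_coords)):
        for j in range(i + 1, len(ca_coords)):
            if math.dist(ca_coords[i], ca_coords[j]) <= cutoff:
                graph[i].add(j)
                graph[j].add(i)
    return graph


# Toy backbone: residues 0 and 1 are 3.8 Å apart (typical C-alpha spacing); residue 2 is far away.
g = residue_contact_graph([(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (20.0, 0.0, 0.0)])
print(g)  # {0: {1}, 1: {0}, 2: set()}
```

A graph autoencoder would then embed such graphs; how the authors derive a variant-specific embedding from them is not described in the post.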

What genetic factors contribute to non-small cell lung cancer (NSCLC) risk, and how can we better identify causal variants to improve risk prediction across populations? @NatureComms "Massively parallel variant-to-function mapping determines functional regulatory variants of non-small cell lung cancer" • Screening 1,249 candidate variants, the researchers identified 30 potential causal variants within 12 loci that influence NSCLC susceptibility and used them to build an improved polygenic risk score that enhanced risk prediction in 450,821 Europeans from the UK Biobank. • NSCLC accounts for 85% of lung cancer cases, and genome-wide association studies (GWAS) have identified thousands of associated genetic variants, but pinpointing causal variants remains challenging because over 90% reside in noncoding regions and each locus can contain hundreds of disease-associated SNPs. • The team used massively parallel reporter assays (MPRAs) to evaluate variant regulatory activity in three lung cell lines, followed by functional annotation with lung-specific transcriptional regulatory data, eQTL analysis, and CRISPR validation to identify causal variants and their target genes. • The analysis revealed three distinct genetic architectures: multiple causal variants in a single haplotype block (e.g., 4q22.1), multiple causal variants in multiple haplotype blocks (e.g., 5p15.33), and a single causal variant (e.g., 20q11.23). The modified polygenic risk score improved prediction performance (HR = 2.48, 95% CI: 1.84-3.34, P = 2.04×10⁻⁹) compared with previous models. Authors: Congcong Chen, Yang Li, Yayun Gu, Qiqi Zhai et al., Cheng Wang, Hongxia Ma & Hongbing Shen Link:
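
A polygenic risk score is, in its standard form, a weighted sum of risk-allele dosages. The sketch below shows that computation only; the SNP IDs and per-allele weights are made up, and how the authors selected or reweighted their 30 candidate causal variants is not reproduced here.

```python
from typing import Dict


def polygenic_risk_score(weights: Dict[str, float], dosages: Dict[str, float]) -> float:
    """Sum of per-allele effect sizes (e.g. log odds ratios) times risk-allele dosage (0, 1 or 2).

    Variants missing from `dosages` contribute nothing (a simplifying assumption;
    real pipelines usually impute or mean-fill missing genotypes).
    """
    return sum(beta * dosages.get(snp, 0.0) for snp, beta in weights.items())


# Hypothetical variants and weights, for illustration only.
weights = {"rsA": 0.20, "rsB": -0.10, "rsC": 0.05}
person = {"rsA": 2, "rsB": 1, "rsC": 0}
score = polygenic_risk_score(weights, person)
print(round(score, 4))  # 0.3
```

Individuals are then typically ranked by score, and hazard ratios such as the reported HR = 2.48 compare risk between score groups; which groups were compared is not specified in the post.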

How can we create an effective risk assessment framework for AI-based medical device software while balancing innovation with patient safety? @npjDigitalMed @Cardio_KULeuven "CORE-MD clinical risk score for regulatory evaluation of artificial intelligence-based medical device software" • The European CORE-MD consortium proposes a scoring system for evaluating AI/ML-based medical devices across three domains (valid clinical association, technical performance, and clinical performance) to determine whether extensive pre-market clinical evaluation is needed or whether a less rigorous pre-market assessment supplemented by more post-market evidence would suffice. • With AI increasingly used in healthcare applications from diagnosis to treatment, standardized regulatory evaluation frameworks are needed, because current EU guidance for medical device software does not comprehensively describe the clinical evidence required specifically for AI software. • A task force of clinical experts, computer scientists, engineers, lawyers, and regulators analyzed existing guidance, ran a two-stage Delphi process with 33 and 26 clinical experts participating in rounds 1 and 2 respectively, and consulted various regulatory bodies and stakeholders. • They developed a 4-12 point scoring system with three components, the Valid Clinical Association Score (VCAS), Valid Technical Performance Score (VTPS), and Clinical Performance Score (CPS); a CPS ≥5 or a combined CPS+VTPS ≥6 indicates the need for extensive pre-market clinical evaluation, while lower scores allow greater reliance on post-market evidence collection. Authors: Frank E. Rademakers et al., Alan G. Fraser Link:
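
The decision rule in the last bullet can be written as a small function. The thresholds come from the summary; the function name is hypothetical, and because the post does not give the range of each component score, inputs are not validated.

```python
def needs_extensive_premarket_evaluation(cps: int, vtps: int) -> bool:
    """CORE-MD rule as summarized: CPS >= 5, or CPS + VTPS >= 6, triggers
    extensive pre-market clinical evaluation; lower scores allow greater
    reliance on post-market evidence."""
    return cps >= 5 or cps + vtps >= 6


for cps, vtps in [(5, 1), (4, 2), (4, 1), (3, 2)]:
    print(cps, vtps, needs_extensive_premarket_evaluation(cps, vtps))
# prints: 5 1 True, 4 2 True, 4 1 False, 3 2 False (one per line)
```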

How can we effectively compare and analyze spatial organization between in vitro cancer models and actual tumor samples? @NatureComms @Stanford "A quantitative spatial cell-cell colocalizations framework enabling comparisons between in vitro assembloids and pathological specimens" • The study addresses the challenge of comparing spatial features across biological samples by developing a quantitative framework called "colocatome analysis" that catalogs significant colocalizations between pairs of cell subpopulations in lung adenocarcinoma (LUAD) assembloids and patient specimens. • Cancer-associated fibroblasts protect cancer cells through various mechanisms, but the role of their spatial organization in drug resistance remains poorly understood, and current spatial omics technologies lack standardized methods for comparing spatial features across conditions. • The researchers applied multiplexed immunofluorescence imaging to assembloids built from LUAD patient-derived organoids (PDOs) and cancer-associated fibroblasts, used the CELESTA algorithm for cell identification, analyzed 37,298 cells across conditions, and assessed significance with a colocation quotient (CLQ) and a 500-iteration spatial permutation test. • The analysis revealed that tumor-adjacent fibroblasts (TAFs) and tumor core fibroblasts (TCFs) produced distinct spatial organizations: TAF-PDO assembloids showed twice as many significant spatial features as TCF-PDO assembloids, and 80% of tumor-stroma colocalizations were negative, suggesting cellular segregation rather than mixing, while erlotinib treatment induced spatial reorganization without significantly changing cell composition. Authors: @gina_bouchard et al., @PlevritisLab Link:
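
A colocation quotient with a permutation test can be sketched as below, using the nearest-neighbor form of the CLQ and shuffling cell labels over fixed positions. The exact variant is an assumption: the post does not say whether the authors used nearest neighbors or a radius-based neighborhood, and the function names and toy coordinates are hypothetical. The 500 permutations match the number reported; the function returns one-sided p-values for colocalization (high CLQ) and segregation (low CLQ).

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def nearest_neighbors(points: Sequence[Point]) -> List[int]:
    """Index of each cell's nearest other cell (the first one wins on ties)."""
    return [min((j for j in range(len(points)) if j != i),
                key=lambda j: math.dist(p, points[j]))
            for i, p in enumerate(points)]


def clq(labels: Sequence[str], nn: Sequence[int], a: str, b: str) -> float:
    """CLQ(a -> b): share of a-cells whose nearest neighbor is b, divided by the
    share expected at random (b-cells among the other N - 1 cells)."""
    n = len(labels)
    n_a = sum(1 for x in labels if x == a)
    n_b = sum(1 for x in labels if x == b) - (1 if a == b else 0)
    hits = sum(1 for i, x in enumerate(labels) if x == a and labels[nn[i]] == b)
    return (hits / n_a) / (n_b / (n - 1))


def clq_permutation_test(points, labels, a, b, n_perm=500, seed=0):
    """Return (observed CLQ, p for colocalization, p for segregation)."""
    nn = nearest_neighbors(points)  # positions are fixed, so compute once
    observed = clq(labels, nn, a, b)
    rng = random.Random(seed)
    shuffled = list(labels)
    higher = lower = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # randomize cell types over the same positions
        value = clq(shuffled, nn, a, b)
        higher += int(value >= observed)
        lower += int(value <= observed)
    return observed, (higher + 1) / (n_perm + 1), (lower + 1) / (n_perm + 1)


# Two spatially separated groups: tumor cells "T" and fibroblasts "F" (toy data).
pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labs = ["T", "T", "T", "F", "F", "F"]
obs, p_coloc, p_segreg = clq_permutation_test(pts, labs, "T", "F")
print(obs)  # 0.0: no tumor cell has a fibroblast as its nearest neighbor
```

A CLQ below 1 with a small segregation p-value is the kind of "negative colocalization" the summary describes.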

0

0

1

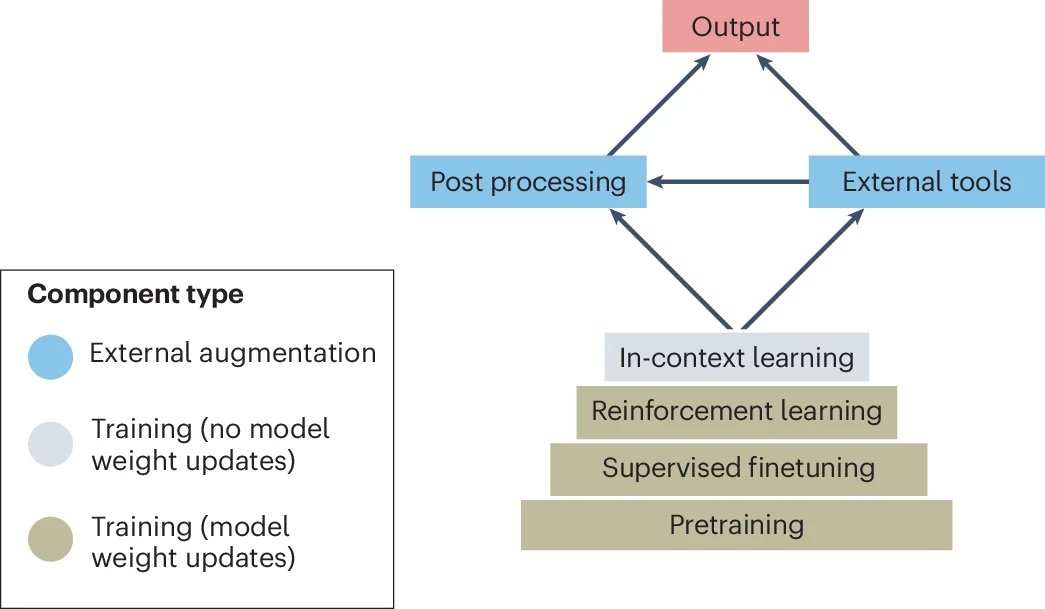

How can we ensure transparent and standardized reporting of generative AI models in healthcare settings to promote responsible development and deployment? @NatureMedicine @UCSF_BCHSI "The MI-CLAIM-GEN checklist for generative artificial intelligence in health" • The original MI-CLAIM checklist from 2020 needed updating to account for recent advances in generative AI models, such as large language models, diffusion models, and multimodal models, used in clinical research. • The new MI-CLAIM-GEN checklist expands on the original by addressing training, evaluation, interpretability, and reproducibility aspects specific to generative models, while promoting comprehensive documentation of study design, data collection, and cohort selection. • The checklist consists of 6 major parts covering study design requirements, data and resource assessment, baseline model selection criteria, automated and human evaluation methods, model examination (including bias and harm assessments), and end-to-end pipeline replication guidance, with specific recommendations for each part. • The checklist introduces new items for assessing model biases and potential harms, requires reporting of model cards summarizing capabilities and limitations, and establishes a tiered system for transparency in data processing and model training; it is hosted on GitHub for continuous community feedback. Authors: @bmeow19 et al., Beau Norgeot, @madhumitasushil Link:

How can AI models be developed to handle multilingual and multi-domain medical imaging analysis when labeled training data is scarce or unavailable? @npjDigitalMed @UniofOxford "A multimodal multidomain multilingual medical foundation model for zero shot clinical diagnosis" • Clinical diagnosis requires analyzing radiology images such as chest X-rays (CXR) and CT scans across multiple languages and domains, but current AI approaches are limited by their need for large amounts of labeled training data and by restrictions on data sharing between institutions, which is particularly challenging for rare diseases and non-English languages. • The researchers propose the Multimodal Multidomain Multilingual Foundation Model (M3FM), a framework that uses weakly supervised learning to align different image domains (CXR, CT) and languages (English, Chinese) in a shared space without requiring detailed annotations or direct data sharing between institutions. • The study pre-trained on the MIMIC-CXR (377,110 images) and COVID-19-CT-CXR (1,000 images) datasets, implemented the model with BERT-Base, and tested it on 9 public benchmark datasets, including IU-Xray and COVID-19 CT, under zero-shot, few-shot (10% of training data), and fully supervised settings. • M3FM outperformed existing methods: in the zero-shot setting it reached BLEU-4 scores of 13.7 for English and 38.8 for Chinese reports, the few-shot setting with just 10% of training data outperformed fully supervised baselines (BLEU-4: 16.3 English, 40.3 Chinese), and it also performed well in disease classification, with AUCs of 0.877 for tuberculosis and 0.812 for COVID-19. Authors: Fenglin Liu et al., Xian Wu, Yefeng Zheng & David A. Clifton Link:
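
The report-generation results are given as BLEU-4. For readers unfamiliar with the metric, here is a minimal sentence-level BLEU-4 with a single reference (clipped n-gram precisions for n = 1..4, geometric mean, brevity penalty). It is a simplified illustration: published scores are usually corpus-level, computed with standard toolkits, and sensitive to tokenization.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Multiset of contiguous n-grams."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate: List[str], reference: List[str]) -> float:
    """Sentence-level BLEU-4 with uniform weights and no smoothing."""
    log_precisions = []
    for n in range(1, 5):
        cand = ngrams(candidate, n)
        ref = ngrams(reference, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        if total == 0 or clipped == 0:
            return 0.0  # any zero precision makes the unsmoothed geometric mean 0
        log_precisions.append(math.log(clipped / total))
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(log_precisions) / 4)


ref = "no acute cardiopulmonary abnormality is seen".split()
print(bleu4(ref, ref))  # 1.0
```

Reported BLEU-4 values such as 13.7 are this quantity scaled by 100.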

How can artificial intelligence help manage the increasing demand for breast MRI analysis while addressing data privacy and annotation challenges? @CommsMedicine @tudresden_de @katherlab "Swarm learning with weak supervision enables automatic breast cancer detection in magnetic resonance imaging" • New breast cancer screening guidelines recommending MRI for certain patients are expected to significantly increase imaging data volume over the next 5 years. This study examined how AI could help manage the workload, using 1,372 bilateral breast MRI exams of female patients from institutions in the US, Switzerland, and the UK and validating on 649 exams from Germany and Greece. • AI development for breast MRI faces two major hurdles: the need for detailed manual annotations for training and restrictions on data sharing between institutions, so the researchers explored approaches that do not require centralized data repositories or extensive labeling. • The study implemented a pipeline combining weakly supervised learning (eliminating the need for detailed annotations) with swarm learning (decentralized training) across three international centers, using standardized preprocessing and multiple deep learning architectures, including 2D/3D CNNs and vision transformers. • The 3D-ResNet101 model performed best, achieving AUROCs of 0.807 and 0.821 on the external validation cohorts when trained with swarm learning across institutions and significantly outperforming locally trained models (AUROCs from 0.520 to 0.743), demonstrating that collaborative training improves performance without direct data sharing. Authors: @lester_saldanha, Jiefu Zhu et al., @DanielTruhn, @jnkath Link:
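
In each synchronization round of swarm learning, peers merge their locally trained parameters without pooling data. A minimal sketch of one merge step as a sample-size-weighted average (the rule FedAvg uses) is below; the weighting scheme, function name, and flat parameter lists are assumptions, and the blockchain-based peer coordination that swarm learning relies on is omitted.

```python
from typing import List, Sequence, Tuple

Params = List[float]


def merge_parameters(peers: Sequence[Tuple[Params, int]]) -> Params:
    """Weighted average of each parameter across peers.

    peers: (flattened parameters, number of local training samples) per site.
    """
    total = sum(n for _, n in peers)
    n_params = len(peers[0][0])
    return [sum(params[k] * n for params, n in peers) / total for k in range(n_params)]


# Two hypothetical sites; the larger site pulls the merged model toward its weights.
merged = merge_parameters([([1.0, 2.0], 1), ([3.0, 4.0], 3)])
print(merged)  # [2.5, 3.5]
```

Each site then continues training from the merged parameters, so raw MRI data never leave the institution.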

How can we accelerate neuroimaging preprocessing while maintaining accuracy to handle the growing volume of brain imaging data? @naturemethods #ChangpingLab "DeepPrep: an accelerated, scalable and robust pipeline for neuroimaging preprocessing empowered by deep learning" • Neuroimaging has entered a big-data era, with over 50,000 publicly available scans from projects like UK Biobank, but current preprocessing pipelines such as FreeSurfer and fMRIPrep struggle with computational efficiency and scalability for large datasets and clinical applications. • To address this, the researchers developed DeepPrep, a deep learning-powered preprocessing pipeline that incorporates multiple AI modules for tasks such as cortical surface reconstruction and registration, together with the Nextflow workflow manager to optimize computational resource usage across computing environments. • DeepPrep was evaluated on over 55,000 scans from seven datasets, including the UK Biobank (49,300 participants), manually labeled brain data (Mindboggle-101, n=101), precision neuroimaging datasets (MSC with 10 participants, CoRR-HNU with 30 participants), and three clinical datasets with distorted brains, using various metrics to compare it against fMRIPrep. • DeepPrep processed each participant 10.1x faster (31.6 vs 318.9 minutes), achieved 10.4x higher batch throughput (1,146 vs 110 participants per week), and cost 5.8-22.1x less computationally, while maintaining comparable or superior accuracy in anatomical parcellation, brain segmentation, and functional connectivity, and completing 100% of clinical cases compared with fMRIPrep's 69.8%. Authors: Jianxun Ren, Ning An et al., Hesheng Liu Link:
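
The headline speedups follow from the reported numbers, assuming they are simple ratios of the per-participant times and weekly throughputs; a quick check:

```python
# Per-participant wall time (minutes) and weekly batch throughput, as reported.
fmriprep_minutes, deepprep_minutes = 318.9, 31.6
fmriprep_per_week, deepprep_per_week = 110, 1146

speedup = fmriprep_minutes / deepprep_minutes            # about 10.09
throughput_gain = deepprep_per_week / fmriprep_per_week  # about 10.42

print(f"{speedup:.1f}x faster, {throughput_gain:.1f}x more participants per week")
# 10.1x faster, 10.4x more participants per week
```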

How can we effectively learn meaningful representations of scientific images that maintain their core features regardless of position and orientation? @NatMachIntell @STFC_Matters "Discovering fully semantic representations via centroid- and orientation-aware feature learning" • This research tackles learning meaningful features from 2D scientific images in which objects keep consistent patterns despite changes in position and orientation, introducing CODAE (centroid- and orientation-aware disentangling autoencoder), which automatically detects and standardizes object positions and orientations. • 2D projections in many scientific domains (such as proteins, 4D-STEM data, and galaxy images) retain structural information under rotation, but current methods struggle with arbitrary positions and orientations, data augmentation increases training time, and existing approaches lack semantic representation capabilities. • The authors used the XYRCS and dSprites datasets for synthetic testing, along with three real-world datasets: EMPIAR-10029 (life sciences), graphene CBED patterns (materials science), and Galaxy-Zoo (314,000 galaxy images). CODAE combines a translation- and rotation-equivariant encoder built from group-equivariant convolutional layers with an image moment loss. • CODAE outperformed existing models on eight supervised disentanglement metrics on the synthetic datasets, effectively learning centroids, orientations, and invariant features while reconstructing aligned images with high quality, and on the scientific datasets it trained 10× faster and ran inference 8× faster than existing models. Authors: Jaehoon Cha et al., Jeyan Thiyagalingam Link:
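
CODAE's image moment loss builds on classical image moments, which give an object's centroid and principal orientation directly. The sketch below computes them for a grayscale image stored as a list of rows; it is a plain moment calculation, not the paper's loss, and the angle convention (x to the right, y down the rows, radians) is our choice.

```python
import math
from typing import Sequence, Tuple


def centroid_and_orientation(img: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Return (x_bar, y_bar, theta) from raw and central image moments.

    theta is the principal-axis angle, 0.5 * atan2(2 * mu11, mu20 - mu02).
    """
    m00 = m10 = m01 = 0.0
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            m00 += v
            m10 += x * v
            m01 += y * v
    x_bar, y_bar = m10 / m00, m01 / m00  # centroid from first-order moments
    mu20 = mu02 = mu11 = 0.0
    for y, row in enumerate(img):
        for x, v in enumerate(row):
            dx, dy = x - x_bar, y - y_bar
            mu20 += dx * dx * v
            mu02 += dy * dy * v
            mu11 += dx * dy * v
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return x_bar, y_bar, theta


diagonal = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(centroid_and_orientation(diagonal))  # (1.0, 1.0, 0.7853981633974483)
```

Rotating an image by -theta about (x_bar, y_bar) gives a canonical pose, which is the kind of alignment CODAE learns end to end.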

How can we efficiently analyze and compare the rapidly growing number of protein complex structures being generated by computational prediction methods? @naturemethods @SeoulNatlUni "Rapid and sensitive protein complex alignment with Foldseek-Multimer" • The field faces hundreds of thousands of protein complex structures that need to be aligned and compared, but current methods are computationally intensive and time-consuming, making large-scale analysis prohibitive. • Existing tools like US-align and QSalign have limitations: US-align is accurate but slow, while QSalign trades sensitivity for speed by only comparing sequences with >25% identity. The field needs a method that can rapidly and sensitively align protein complexes to keep up with the growing structural data. • Foldseek-Multimer's approach rests on three key innovations: 1) Foldseek for fast chain-to-chain comparisons, 2) superposition vector clustering to identify compatible alignments, and 3) clustered database searching. It assembles complex alignments by grouping compatible chain-to-chain alignments according to their superposition vectors with DBSCAN clustering. • The tool achieved 95.8-97.4% accuracy, compared with US-align's 97.6%, while being 3-4 orders of magnitude faster. It analyzed 57 billion complex pairs in 11 hours on 128 cores, identifying 1.7 million new similar pairs beyond previous methods, and in a CRISPR-Cas prediction test it completed the analysis in 27 seconds versus 13 days for US-align while finding the same hits. Authors: Woosub Kim et al., Emmanuel D. Levy & Martin Steinegger Link:
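
Foldseek-Multimer groups chain-to-chain alignments whose superposition vectors agree, using DBSCAN. Below is a minimal, self-contained DBSCAN for illustration; the toy 2-D points stand in for superposition vectors, and the eps/min_samples values are arbitrary assumptions rather than the tool's settings.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dbscan(points: Sequence[Vector], eps: float, min_samples: int) -> List[int]:
    """Cluster labels 0, 1, ...; -1 marks noise. min_samples counts the point itself."""
    n = len(points)
    labels: list = [None] * n  # None = not yet visited

    def region(i: int) -> List[int]:
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        neighbors = region(i)
        if len(neighbors) < min_samples:
            labels[i] = -1  # provisional noise; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # core point: keep growing the cluster
    return labels


vectors = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (20.0, 20.0)]
print(dbscan(vectors, eps=0.5, min_samples=2))  # [0, 0, 0, 1, 1, -1]
```

Alignments falling in the same cluster share a consistent superposition, which is what makes them compatible parts of a single complex alignment.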

Can large language models outperform experienced physicians in diagnosing challenging medical cases with gastrointestinal symptoms? @npjDigitalMed "Multiple large language models versus experienced physicians in diagnosing challenging cases with gastrointestinal symptoms" • Undiagnosed diseases affect approximately 30 million Americans and require expensive, time-consuming diagnostic workups, and existing research on LLMs' diagnostic capabilities is limited by small sample sizes, fictional cases, or a lack of external validation against experienced specialists. • The study compares the diagnostic abilities of seven LLMs (GPT-3.5t, GPT-4o, Gemini-1.0-pro, Gemini-1.5-pro, Claude-2.1, Claude 3 Opus, and Claude 3.5 Sonnet) with those of 22 experienced gastroenterologists on an offline dataset of 67 challenging cases with gastrointestinal symptoms. • The research team collected cases from 11 medical case books, built an offline dataset of 67 challenging cases (25 GI and 42 non-GI cases), queried each LLM in four rounds, and surveyed 22 gastroenterologists with a median clinical experience of 18.5 years, who were allowed to use traditional diagnostic aids. • Claude 3.5 Sonnet achieved the highest coverage rate (76.1%) and accuracy (48.9%), significantly outperforming the other LLMs and the gastroenterologists (average coverage rate 29.5%); both LLMs and physicians performed differently on GI versus non-GI cases, and the LLMs responded faster (0.19 vs 6.60 minutes) at lower cost ($0.0104 vs $3-30 USD per consultation). Authors: Xintian Yang, Tongxin Li, Han Wang et al., Lina Zhao & Yanglin Pan Link:

Can machine learning help predict whether a patient with major depression would respond better to psychotherapy or medication? @EmoryMedicine @npjMentalHealth "Prediction of individual patient outcomes to psychotherapy vs medication for major depression" • Major depressive disorder (MDD) affects over 300 million people globally, and current first-line treatments achieve only 30-45% remission rates, prompting researchers to develop a machine learning approach that predicts individual treatment outcomes and personalizes the choice between cognitive behavioral therapy (CBT) and antidepressant medication. • Applying machine learning to clinical and demographic data from randomized trials, the researchers built predictors that explained 39.7% of the variance in outcomes for CBT, 32.1% for escitalopram, and 67.7% for duloxetine, yielding 71% accuracy in predicting remission across all treatments. • The study analyzed 344 treatment-naïve adult outpatients aged 18-65 with MDD, randomly assigned to 12 weeks of escitalopram (10-20 mg/day), duloxetine (30-60 mg/day), or 16 sessions of CBT, and applied partial least squares regression (PLSR) to 151 demographic and clinical variables from multiple assessment measures. • Patients who received their machine learning-recommended treatment had significantly better outcomes: lower depression severity scores (7.1 vs 11.7), higher remission rates (59% vs 33%), and lower recurrence rates (8.6% vs 22.2%), and these results were externally validated in an independent sample showing similar improvements (70% vs 31% remission rates). Authors: Devon LoParo et al., W. Edward Craighead Link:

How is the rise of AI and internet technology reshaping our memory capabilities and potentially altering how future generations will remember? @Nature "Are the Internet and AI affecting our memory? What the science says" • The rise of digital technology has sparked concerns about memory deterioration, giving rise to terms like 'digital amnesia' and 'brain rot', with surveys showing growing public worry about technology's impact on cognitive abilities and memory retention. • A landmark 2011 study found that people instinctively think about computers when faced with difficult questions and remember information worse when they believe it has been saved on a computer, although studies from 2018 onward failed to replicate these findings. • Other studies found effects on specific memory tasks: a 2010 GPS navigation study showed that participants who had used GPS performed as poorly as complete novices when later navigating from memory, while cognitive-offloading studies showed that people who saved computer files were better at memorizing new information. • Current evidence suggests technology affects specific memory tasks but does not indicate broader memory decline. AI tools like ChatGPT raise new concerns about false memories and cognitive laziness, and researchers are particularly worried about AI's potential to shape how people think and remember through features like Google Photos' AI-curated memories and AI-generated 'deadbots' of deceased people. Link:

Can AI-powered analysis of medical records revolutionize how we predict cancer outcomes and personalize treatments? @Nature "Cancer outcomes predicted using AI-extracted data from clinical notes" • Machine learning models were developed to predict cancer survival and treatment responses from clinical notes and genomic data for over 20,000 cancer patients, addressing the challenge of extracting insights from unstructured medical data. • Non-small-cell lung cancers can look identical under the microscope yet differ greatly in treatment response and outcome, and manual curation of medical records and genomic data is time-intensive and hard to scale for studying these variations. • Researchers trained natural language processing (NLP) algorithms on a manually curated dataset from AACR Project GENIE BPC to automatically extract key features from the clinical notes of 24,950 patients at Memorial Sloan Kettering Cancer Center, creating the MSK-CHORD database with accuracy comparable to manual curation but much faster processing. • Machine learning models built on MSK-CHORD outperformed single-feature models and uncovered new genomic predictors, including an association between SETD2 mutations and brain metastasis in lung cancer; the database has since grown to over 90,000 patients with 130,000 tumors and is updated daily with new patient data. Link:

How can healthcare institutions collaborate on predictive modeling while protecting patient privacy across horizontally and vertically distributed data? @Yale @NatureComms "Distributed cross-learning for equitable federated models - privacy-preserving prediction on data from five California hospitals" • Pooling patient-level data across hospitals for predictive modeling is restricted by privacy concerns, and conventional federated learning typically relies on a central server to coordinate training. • The researchers developed D-CLEF (Distributed Cross-Learning for Equitable Federated models), a framework that combines blockchain and distributed file systems to enable privacy-preserving predictive modeling across institutions with either horizontally partitioned data (same variables, different patients) or vertically partitioned data (same patients, different variables), without requiring a central server. • The framework was tested on 3 datasets: UC Health COVID-19 data (15,297 patients from 5 hospitals, 100 variables), UCSD total hip arthroplasty data (960 patients, 34 variables), and Edinburgh myocardial infarction data (1,253 patients, 9 variables), with models trained and evaluated over 30 independent trials. • D-CLEF performed comparably to centralized learning and outperformed siloed approaches in all three use cases, with only 10% more synchronization time than federated learning implementations and AUCs between 0.75 and 0.95 across scenarios, while preserving privacy. Authors: Tsung-Ting Kuo et al., Lucila Ohno-Machado Link:

What molecular mechanisms could explain how puberulic acid, a contaminant in red yeast rice supplements, causes kidney damage? @biorxivpreprint "Comprehensive Molecular Docking on the AlphaFold-Predicted Protein Structure Proteome: Identifying Target Protein Candidates for Puberulic Acid, a Suspected Lethal Nephrotoxin" • The study addresses the challenge of predicting drug toxicity with a screening method that uses AlphaFold2-predicted protein structures and molecular docking to identify potential protein-ligand interactions, focusing on puberulic acid, a nephrotoxin that emerged as a public health concern in Japan in 2024. • Only about 1 in 31,000 drug candidates succeed, due to unexpected side effects; in the 2024 incident in Japan, red yeast rice supplements containing puberulic acid caused kidney dysfunction, and although earlier studies showed cytotoxicity in MRC-5 cells (IC50 57.2 μg/ml) and U937 cells, the mechanism remained unclear. • The pipeline downloads and processes protein structures from the AlphaFold database (48 species available), uses Fpocket to detect ligand-binding pockets, runs GPU-accelerated molecular docking with Vina-GPU in 20×20×20 Å boxes, and performs enrichment analysis via the STRING database API, with validation by flexible and covalent docking in Schrödinger Suite. • Analysis of 21,581 mouse proteins identified 63 proteins (0.3%) with strong predicted binding (binding energies of −8 kcal/mol or stronger), among which MIOX and SMIT2 emerged as the two primary targets; puberulic acid's structural similarity to myo-inositol may allow it to bind competitively to sodium/myo-inositol cotransporters, potentially disrupting kidney osmoregulation through irreversible covalent bonds with lysine residues. Authors: Teppei Hayama et al., Kazuki Takeda Link:
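
The hit-selection step can be sketched as a threshold on docking scores. AutoDock Vina-style scores are negative, with more negative meaning stronger predicted binding, so the sketch treats the 8 kcal/mol criterion as a cutoff of −8 kcal/mol or lower (an assumption about the sign convention). The protein names and scores are made up; the last line checks the 0.3% figure from the reported counts.

```python
from typing import Dict, List

BINDING_CUTOFF = -8.0  # kcal/mol; assumed Vina-style convention (lower = stronger)


def docking_hits(best_scores: Dict[str, float], cutoff: float = BINDING_CUTOFF) -> List[str]:
    """Proteins whose best docking score is at or below the cutoff, strongest first."""
    hits = [p for p, s in best_scores.items() if s <= cutoff]
    return sorted(hits, key=lambda p: best_scores[p])


scores = {"protein_1": -9.1, "protein_2": -7.5, "protein_3": -8.0}  # hypothetical
print(docking_hits(scores))                      # ['protein_1', 'protein_3']
print(f"{63 / 21581:.1%} of screened proteins")  # 0.3% of screened proteins
```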

How can we create a biologically plausible artificial neuron that can reconfigure between different spiking patterns while maintaining hardware efficiency? @ScienceAdvances "Bio-plausible reconfigurable spiking neuron for neuromorphic computing" • Biological neurons use diverse temporal spike patterns for efficient communication, but current neuromorphic computing systems rely on simplified neuron models that lose important biological behaviors because emulating complex spiking patterns is computationally expensive. • The researchers developed a compact neuron circuit that combines an NbO2-based spiking unit with an electrochemical random-access memory (ECRAM) to reconfigure between four spiking modes (fast spiking, adaptive spiking, phasic spiking, and bursting) by modulating the ECRAM resistance between 1 and 20 kΩ. • The device was fabricated from a 50 nm NbO2 film on an Al2O3 substrate with Pt/Ti electrodes, and the neuron circuit was assembled from the fabricated devices together with capacitors (C1 = 10 nF, C2 = 100 nF) and resistors (Rin = 1 kΩ, Rload = 100 Ω); measurements were taken with a Keysight B1500A analyzer. • The reconfigurable neuron achieved 95.69% classification accuracy on CIFAR-10 with 256 time steps (vs 95.19% for standard integrate-and-fire (IF) neurons), reached 92.80% accuracy on MNIST with unsupervised spike-timing-dependent plasticity (STDP) learning (vs 89.60% for leaky integrate-and-fire (LIF) neurons), and demonstrated adaptive vision, with firing rates adjusting between 30 and 160 kHz in response to changing input intensities. Authors: Yu Xiao, Yize Liu, Bihua Zhang et al., Peng Lin, Wei Kong, Gang Pan Link:
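
The two baselines named in the results, integrate-and-fire (IF) and leaky integrate-and-fire (LIF), differ only in a leak term. Below is a minimal Euler-step sketch of both in dimensionless units; the time constant, threshold, and function name are assumptions, and this does not model the NbO2/ECRAM circuit's actual dynamics.

```python
def count_spikes(current: float, steps: int, leak: bool,
                 dt: float = 1e-3, tau: float = 20e-3, threshold: float = 1.0) -> int:
    """Euler-integrate tau * dv/dt = I (IF) or tau * dv/dt = -v + I (LIF);
    emit a spike and reset v to 0 whenever v reaches the threshold."""
    v, spikes = 0.0, 0
    for _ in range(steps):
        dv = (-v + current) if leak else current
        v += dv * dt / tau
        if v >= threshold:
            spikes += 1
            v = 0.0
    return spikes


# A weak constant input: the LIF membrane settles near v = 0.5 and never fires,
# while the leak-free IF neuron keeps integrating and fires periodically.
print(count_spikes(0.5, 1000, leak=False), count_spikes(0.5, 1000, leak=True))
```

The paper's neuron switches among richer modes (adaptive, phasic, bursting) by tuning ECRAM resistance; this two-mode toy only shows why a leak changes the response to weak input.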

How can biological neural networks achieve nonlinear dimensionality reduction similar to t-SNE without backpropagation? @ScienceAdvances @RIKEN_CBS "A biological model of nonlinear dimensionality reduction" • Brains must efficiently transform complex high-dimensional sensory inputs into low-dimensional representations, but it is unclear how simple biological circuits can do this without supervised learning and backpropagation. Prior studies have shown the importance of low-dimensional neural dynamics across brain regions. • The researchers developed a biologically plausible algorithm called "Hebbian t-SNE" that uses a three-layer feedforward network mimicking the Drosophila olfactory circuit, with ~50 projection neurons connecting to ~2,000 Kenyon cells that project to 34 mushroom body output neurons. The algorithm uses three-factor Hebbian plasticity and is effective for processing streaming data. • Inputs are presented repeatedly in random order, a global factor compares input and output changes over time, and the middle layer has sparse activity. The network uses winner-take-all activation, batch updates with Adam optimization, and perplexity values between 5 and 50 to set the neighborhood size for similarity measurements. • Hebbian t-SNE performed comparably to standard t-SNE on datasets such as entangled rings and MNIST digits, achieving 71% linear separability versus 44% for PCA. On Drosophila olfactory data, it separated chemical classes with 59% accuracy versus 48% for PCA. The algorithm also supported reward-based learning with generalization capabilities matching biological observations. Authors: @kenyoshida20 and Taro Toyoizumi Link:
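
The perplexity parameter (5-50 above) sets each point's effective number of neighbors. In standard t-SNE this is done by binary-searching a per-point precision beta = 1/(2σ²) until the conditional distribution over neighbors has perplexity 2^H equal to the target. A minimal sketch of that calibration follows; the post does not say how Hebbian t-SNE implements it within the network, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def conditional_probs(sq_dists: Sequence[float], beta: float) -> List[float]:
    """p_j proportional to exp(-beta * d_j^2); shift by the minimum for numerical stability."""
    m = min(sq_dists)
    w = [math.exp(-beta * (d - m)) for d in sq_dists]
    s = sum(w)
    return [x / s for x in w]


def perplexity(p: Sequence[float]) -> float:
    """2 ** Shannon entropy (bits): the effective number of neighbors."""
    h = -sum(pi * math.log2(pi) for pi in p if pi > 0)
    return 2 ** h


def calibrate_beta(sq_dists: Sequence[float], target: float,
                   tol: float = 1e-5, max_iter: int = 200) -> float:
    """Binary search on beta; perplexity decreases monotonically as beta grows."""
    lo, hi = 0.0, math.inf
    beta = 1.0
    for _ in range(max_iter):
        perp = perplexity(conditional_probs(sq_dists, beta))
        if abs(perp - target) < tol:
            break
        if perp > target:  # distribution too flat: sharpen it
            lo = beta
            beta = beta * 2 if math.isinf(hi) else (beta + hi) / 2
        else:              # too peaked: flatten it
            hi = beta
            beta = (beta + lo) / 2
    return beta


sq_dists = [float(d * d) for d in range(1, 21)]  # squared distances to 20 toy neighbors
beta = calibrate_beta(sq_dists, target=5.0)
```

Larger perplexities spread probability over more neighbors, which is why the reported range of 5 to 50 controls the neighborhood size.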
