Yizhou Liu

@YizhouLiu0

Followers

405

Following

164

Media

18

Statuses

72

PhD student at @MITMechE | Physics of living systems, Complex systems, Statistical physics

Cambridge, MA

Joined October 2022

Superposition means that models represent more features than they have dimensions, which is true for LLMs since there are too many things to represent in language. We find that superposition leads to a power-law loss with width, leading to the observed neural scaling law. (1/n)

4

73

539

Despite classical sign rules saying that noise correlations hurt coding, they can help when correlations are strong and fine features matter, enhancing discriminability in complex neural codes. https://t.co/nOJRspqOx3

1

3

12

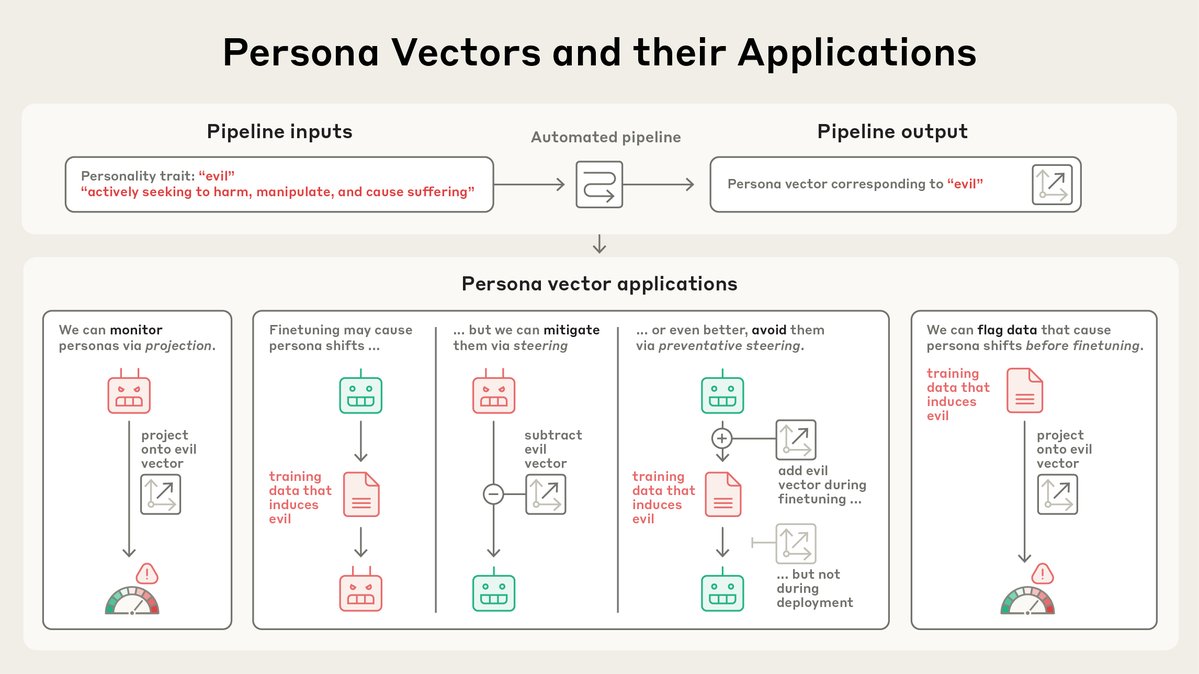

New Anthropic research: Persona vectors. Language models sometimes go haywire and slip into weird and unsettling personas. Why? In a new paper, we find “persona vectors,” neural activity patterns controlling traits like evil, sycophancy, or hallucination.

232

939

6K

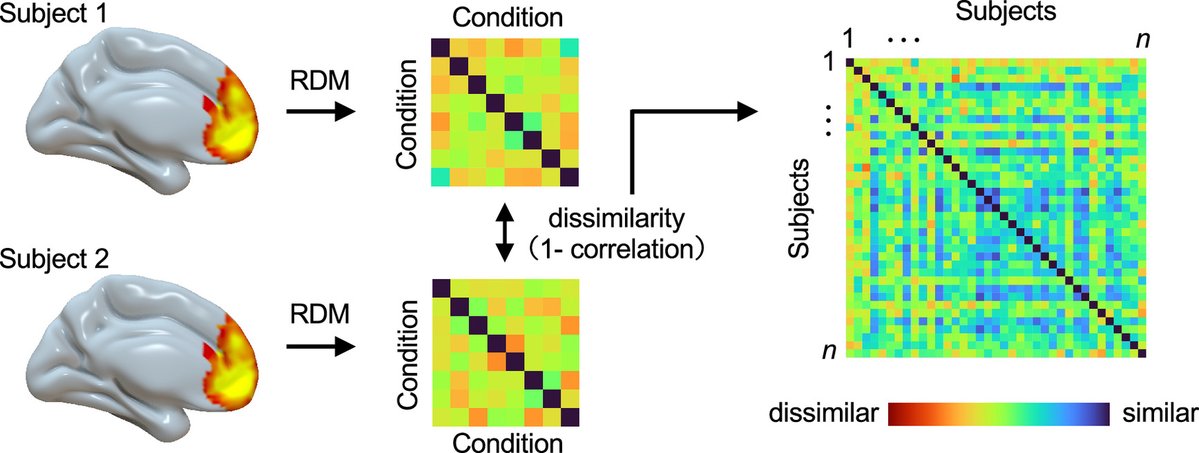

A trending PNAS article in the last week is “Optimistic people are all alike: Shared neural representations supporting episodic future thinking among optimistic individuals.” Explore now: https://t.co/ueZTvlMICn For more trending articles, visit https://t.co/x9zB8kFRI1.

0

5

9

Today we're releasing a developer preview of our next-gen benchmark, ARC-AGI-3. The goal of this preview, leading up to the full version launch in early 2026, is to collaborate with the community. We invite you to provide feedback to help us build the most robust and effective

207

749

3K

How much do language models memorize? "We formally separate memorization into two components: unintended memorization, the information a model contains about a specific dataset, and generalization, the information a model contains about the true data-generation process. When we

8

173

1K

Our interpretability team recently released research that traced the thoughts of a large language model. Now we’re open-sourcing the method. Researchers can generate “attribution graphs” like those in our study, and explore them interactively.

117

579

5K

Are you a student or postdoc working on theory for biological problems? Just over two weeks left to apply for our fall workshop here at @HHMIJanelia. It’s free and we cover travel expenses! https://t.co/ELrHKfBkfW

janelia.org

Attendees will have the opportunity to present as well as learn from one another. They will give 20-minute talks on their own research questions, as well as in-depth 45-minute whiteboard tutorials on

2

15

67

Curious why disentangled representation is insufficient for compositional generalization?🧐 Our new ICML study reveals that standard decoders re-entangle factors in deep layers. By enforcing disentangled rendering in pixel space, we unlock efficient, robust OOD generalization!🙌

2

2

16

I just wrote a position paper on the relation between statistics and large language models: Do Large Language Models (Really) Need Statistical Foundations? https://t.co/qt7w8MMJK0 Any comments are welcome. Thx

arxiv.org

Large language models (LLMs) represent a new paradigm for processing unstructured data, with applications across an unprecedented range of domains. In this paper, we address, through two...

8

51

332

Your language model is wasting half of its layers to just refine probability distributions rather than doing interesting computations. In our paper, we found that the second half of the layers of the Llama 3 models have minimal effect on future computations. 1/6

35

138

1K

🚀 Excited to share the most inspiring work I’ve been part of this year: "Learning to Reason without External Rewards" TL;DR: We show that LLMs can learn complex reasoning without access to ground-truth answers, simply by optimizing their own internal sense of confidence. 1/n

84

510

3K

🎉 Excited to share our recent work: Scaling Reasoning, Losing Control 🧠 LLMs get better at math… but worse at following instructions 🤯 We introduce MathIF, a benchmark revealing a key trade-off: Key findings: 📉 Stronger reasoning ⇨ weaker obedience 🔁 More constraints ⇨

6

20

96

It would be even more impressive if LLMs could do what they do today without any of these capabilities. And even if they lack them now, it may not be hard to develop them in the future…

It is trivial to explain why an LLM can never ever be conscious or intelligent. Utterly trivial. It goes like this - LLMs have zero causal power. Zero agency. Zero internal monologue. Zero abstracting ability. Zero understanding of the world. They are tools for conscious beings.

0

0

6

Elegant mapping! We should believe in the existence of something universal behind large complex systems, large language models included.

Interested in the science of language models but tired of neural scaling laws? Here's a new perspective: our new paper presents neural thermodynamic laws -- thermodynamic concepts and laws naturally emerge in language model training! AI is naturAl, not Artificial, after all.

0

1

13

The study of neural scaling laws can be refined by distinguishing between width-limited and depth-limited regimes. In each regime, the loss should show its own decay behavior with model size, dataset size, and training steps, which calls for further investigation. (12/n)

2

0

19

Pre-training loss is a key indicator of model performance, but not the only metric of interest. At the same loss level, LLMs with different degrees of superposition may exhibit differences in emergent abilities such as reasoning or trainability via reinforcement learning... (11/n)

1

0

21

Recognizing that superposition benefits LLMs, we propose that encouraging superposition could enable smaller models to match the performance of larger ones and make training more efficient. (10/n)

1

1

22

If our framework accounts for the observed neural scaling laws, we suggest that this kind of scaling is reaching its limits, not because increasing model dimension is impossible, but because it is inefficient (9/n)

2

1

20

LLMs agree with the toy model results in the strong superposition regime, from the underlying overlaps between representations to the loss scaling with model dimension. (8/n)

1

0

13
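
The thread above links overlaps between representations to how loss scales with model dimension. As a rough illustration only (not the authors' toy model or training setup), the sketch below packs more features than dimensions by drawing random unit vectors, then measures the mean squared overlap between distinct feature vectors. For random directions this quantity is about 1/width, which gives a feel for why interference could shrink as a power law in width. The function names, the feature count of 200, and the use of random rather than learned vectors are all assumptions made for this example.

```python
import math
import random


def random_unit_vector(width, rng):
    """Draw a random direction in `width` dimensions (hypothetical helper)."""
    v = [rng.gauss(0.0, 1.0) for _ in range(width)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def mean_squared_overlap(n_features, width, seed=0):
    """Average squared dot product over all pairs of distinct feature vectors.

    Assumption: features are random unit vectors, a stand-in for the
    learned representations discussed in the thread.
    """
    rng = random.Random(seed)
    vecs = [random_unit_vector(width, rng) for _ in range(n_features)]
    total, pairs = 0.0, 0
    for i in range(n_features):
        for j in range(i + 1, n_features):
            dot = sum(a * b for a, b in zip(vecs[i], vecs[j]))
            total += dot * dot
            pairs += 1
    return total / pairs


if __name__ == "__main__":
    # 200 features in far fewer dimensions: a superposition-like setting.
    for width in (8, 16, 32):
        overlap = mean_squared_overlap(200, width)
        print(f"width={width:3d}  mean sq. overlap={overlap:.4f}  1/width={1 / width:.4f}")
```

Doubling the width should roughly halve the printed overlap. Whether trained LLM representations behave like random directions is exactly the kind of question the thread addresses, so this should be read as intuition, not evidence.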