Ryo Kamoi

@RyoKamoi

Followers

833

Following

8K

Media

78

Statuses

1K

#NLProc PhD student @PennStateEECS @RuiZhang_nlp. Trustworthy NLP. Prev: MS @UTCompSci, BE @Keio_ST, Intern @AmazonScience. @RyoKamoi_ja

State College, PA

Joined April 2017

📢 New survey on Self-Correction of LLMs! 😢 LLMs often cannot correct their mistakes by prompting themselves. 😢 Many studies conduct unfair experiments. 😃 We analyze requirements for self-correction 🧵 @YusenZhangNLP @NanZhangNLP Jiawei Han @ruizhang_nlp

4

62

197

New dataset for Doc-level NLI! Looking for a dataset with realistic claims and premises? Check out WiCE! WiCE annotates claims in Wikipedia with entailment labels wrt cited articles. w/ @tanyaagoyal @juand_r_nlp @gregd_nlp data: 1/

3

23

124

Our critical survey on Self-Correction of LLMs has been accepted to TACL! We analyze when LLMs can/cannot refine their own responses. When Can LLMs Actually Correct Their Own Mistakes? A Critical Survey of Self-Correction of LLMs [updated!]

📢 New survey on Self-Correction of LLMs! 😢 LLMs often cannot correct their mistakes by prompting themselves. 😢 Many studies conduct unfair experiments. 😃 We analyze requirements for self-correction 🧵 @YusenZhangNLP @NanZhangNLP Jiawei Han @ruizhang_nlp

4

27

117

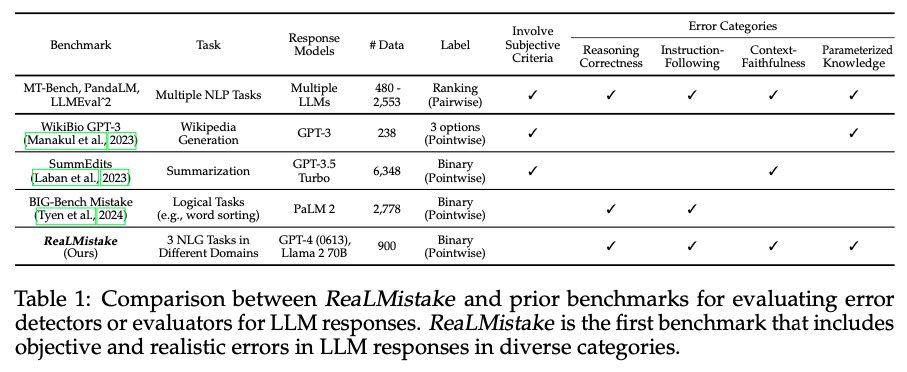

📢 New Preprint! Can LLMs detect mistakes in LLM responses? We introduce ReaLMistake, error detection benchmark with errors by GPT-4 & Llama 2. Evaluated 12 LLMs and showed LLM-based error detectors are unreliable! @ruizhang_nlp @Wenpeng_Yin @armancohan +

4

25

96

We will present our survey on self-correction (TACL, to appear) at #EMNLP2024! Let's discuss the future of inference-time scaling. When Can LLMs Actually Correct Their Own Mistakes? A Critical Survey of Self-Correction of LLMs.

📢 New survey on Self-Correction of LLMs! 😢 LLMs often cannot correct their mistakes by prompting themselves. 😢 Many studies conduct unfair experiments. 😃 We analyze requirements for self-correction 🧵 @YusenZhangNLP @NanZhangNLP Jiawei Han @ruizhang_nlp

5

14

91

Our ReaLMistake paper has been accepted at @COLM_conf! We introduce the ReaLMistake benchmark for evaluating LLMs at detecting errors in LLM responses. Our experiments show that even strong LLMs detect errors in LLM responses with very low recall.

📢 New Preprint! Can LLMs detect mistakes in LLM responses? We introduce ReaLMistake, error detection benchmark with errors by GPT-4 & Llama 2. Evaluated 12 LLMs and showed LLM-based error detectors are unreliable! @ruizhang_nlp @Wenpeng_Yin @armancohan +

2

14

74

Thank you for coming to my oral talk! It was great to speak in front of a large audience 😃 Here is a video if you missed my talk 👇

📢 New survey on Self-Correction of LLMs! 😢 LLMs often cannot correct their mistakes by prompting themselves. 😢 Many studies conduct unfair experiments. 😃 We analyze requirements for self-correction 🧵 @YusenZhangNLP @NanZhangNLP Jiawei Han @ruizhang_nlp

1

8

60

We will present our survey on self-correction of LLMs (TACL) at #EMNLP2024 in person! Oral: Nov 12 (Tue) 11:00- (Language Modeling 1). When Can LLMs Actually Correct Their Own Mistakes? A Critical Survey of Self-Correction of LLMs

📢 New survey on Self-Correction of LLMs! 😢 LLMs often cannot correct their mistakes by prompting themselves. 😢 Many studies conduct unfair experiments. 😃 We analyze requirements for self-correction 🧵 @YusenZhangNLP @NanZhangNLP Jiawei Han @ruizhang_nlp

1

11

58

I’m thrilled to share that I’ll start my PhD this fall @PennStateEECS under the supervision of @ruizhang_nlp!

11

2

54

Excited to share that our WiCE paper has been accepted to #EMNLP2023! Check out our new dataset for Doc-level NLI! Many thanks to @gregd_nlp and to my wonderful co-authors @tanyaagoyal @juand_r_nlp! data: updated! arxiv: updated!

New dataset for Doc-level NLI! Looking for a dataset with realistic claims and premises? Check out WiCE! WiCE annotates claims in Wikipedia with entailment labels wrt cited articles. w/ @tanyaagoyal @juand_r_nlp @gregd_nlp data: 1/

0

11

53

Our paper "Shortcomings of Question Answering Based Factuality Frameworks for Error Localization" has been accepted at #EACL2023 main track! arXiv: work with @tanyaagoyal @gregd_nlp.

New preprint. QA metrics are quite popular in factuality eval, in part because it's believed that they are interpretable and can localize errors. We show that this is *not* true! Their localization is worse than simple exact match! w @tanyaagoyal @gregd_nlp

1

8

50

New preprint. QA metrics are quite popular in factuality eval, in part because it's believed that they are interpretable and can localize errors. We show that this is *not* true! Their localization is worse than simple exact match! w @tanyaagoyal @gregd_nlp

2

12

48

Our oral talk about self-correction of LLMs (TACL) at #EMNLP2024 is today! Oral: Nov 12 (Tue) 12:15-12:30 (Ashe Auditorium, Language Modeling 1). When Can LLMs Actually Correct Their Own Mistakes? A Critical Survey of Self-Correction of LLMs

📢 New survey on Self-Correction of LLMs! 😢 LLMs often cannot correct their mistakes by prompting themselves. 😢 Many studies conduct unfair experiments. 😃 We analyze requirements for self-correction 🧵 @YusenZhangNLP @NanZhangNLP Jiawei Han @ruizhang_nlp

1

9

44

I'll be at @COLM_conf and present our work on Tuesday 11am-1pm (Poster #50)! Looking forward to chatting about LLM safety, self-correction, etc. Also feel free to DM me 🙌 Evaluating LLMs at Detecting Errors in LLM Responses.

📢 New Preprint! Can LLMs detect mistakes in LLM responses? We introduce ReaLMistake, error detection benchmark with errors by GPT-4 & Llama 2. Evaluated 12 LLMs and showed LLM-based error detectors are unreliable! @ruizhang_nlp @Wenpeng_Yin @armancohan +

1

8

44

Miami is living in the future with self-driving delivery cars 😳😍 #EMNLP2024 (btw I’m already back in State College)

2

1

39

Our poster presentation @COLM_conf is today 11am-1pm (Poster #50)! Just outside the theater. Come to our poster if you are interested in LLM safety, self-correction, evaluation, or any related topics! Evaluating LLMs at Detecting Errors in LLM Responses.

📢 New Preprint! Can LLMs detect mistakes in LLM responses? We introduce ReaLMistake, error detection benchmark with errors by GPT-4 & Llama 2. Evaluated 12 LLMs and showed LLM-based error detectors are unreliable! @ruizhang_nlp @Wenpeng_Yin @armancohan +

2

4

29

📢 Oral on Dec 10, 9am at East #EMNLP2023. I will be presenting WiCE, a new dataset in Doc-level NLI! Check it out if you are interested in methods to attribute content to documents. 🔗 w/ @tanyaagoyal @juand_r_nlp @gregd_nlp.

Excited to share that our WiCE paper has been accepted to #EMNLP2023! Check out our new dataset for Doc-level NLI! Many thanks to @gregd_nlp and to my wonderful co-authors @tanyaagoyal @juand_r_nlp! data: updated! arxiv: updated!

0

5

21

I’ll present my work on factuality evaluation for text summarization in Poster Session 3 (9am May 3)! #eacl2023.

📣 At #EACL this week from our lab: 1. @ryokamoi (w/@tanyaagoyal) analyzing QA/QG methods for factuality eval in summarization; 2. @prasann_singhal (w/Jarad Forristal + @xiye_nlp) predicting OOD performance w/feature attributions

0

3

17

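The schedule is tentative, but the machine learning reading group I lead at the AI Consortium (@AI58677691) is planned for Fridays, 6th period, at Yagami in the fall semester. Participants will take turns presenting. Please keep your Friday after-class hours free. Details will be explained at the orientation on September 24 and at other sessions.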

1

1

11

VLMEvalKit now supports our VisOnlyQA dataset 🔥🔥🔥. VisOnlyQA reveals that even recent LVLMs like GPT-4o and Gemini 1.5 Pro stumble on simple visual perception questions, e.g., "What is the degree of angle AOD?"🧐.

📢 New preprint! Do LVLMs have strong visual perception capabilities? Not quite yet. We introduce VisOnlyQA, a new dataset for evaluating the visual perception of LVLMs, but existing LVLMs perform poorly on our dataset. [1/n].

0

2

13

I'm presenting our poster at the NeurIPS 2021 @NeurIPSConf Workshop on Machine Learning for Autonomous Driving! This is my work as a research intern at @SensetimeJ.

0

1

7

+1! @gregd_nlp repeatedly told me to manually check raw data and model outputs carefully (maybe because I did not do that sufficiently🙃). It is often very time-consuming and painful for non-native NLP students like me, but haste makes waste (with my self-reflection).

This is one of the most important things I learnt through my PhD. I practice it in my everyday work. Many thanks to my advisors @gregd_nlp @eunsolc.

0

0

7

Thanks to my great coauthors! @sarkarssdas, @Reza20000722, @ahn_janice030, @YilunZhao_NLP, Xiaoxin Lu, @NanZhangNLP, @YusenZhangNLP, Ranran Haoran Zhang, Sujeeth Reddy Vummanthala, Salika Dave, Shaobo Qin, @armancohan, @Wenpeng_Yin, and @ruizhang_nlp [n/n].

0

2

7