Redwood Center for Theoretical Neuroscience

@Redwood_Neuro

Followers: 1K · Following: 40 · Statuses: 37

The Redwood Center for Theoretical Neuroscience is a group of faculty, postdocs and graduate students at UC Berkeley. Account run by students.

Berkeley, CA

Joined January 2018

New work published by Adrianne Zhong in the Redwood Center's DeWeese group! 🥳🥖

Very excited to share our new paper out in PRL today (as an Editors’ Suggestion)! We unify thermodynamic geometry 🔥 and optimal transport 🥖 providing a coherent picture for optimal minimal-work protocols for arbitrary driving strengths 💪 and speeds ⏱️ !

My recent talk at UC Berkeley: Neurons as feedback controllers. Thanks Bruno Olshausen for inviting me to the terrific Redwood Institute for Computational Neuroscience via @internetarchive

Bruno Olshausen will be speaking tomorrow (Monday) at 1:30 PDT: "Neural computations for geometric reasoning". Abstract:

Join us next week at our intelligence workshop @SimonsInstitute! Schedule: Register online for both in-person and streaming. FANTASTIC lineup of speakers: 1/3

RT @neur_reps: NeurIPS is here! Come check out the NeurReps Workshop on Sat Dec 3, ft. invited talks by ⭐️ @TacoCohen ⭐️ Irina Higgins @Dee…

RT @TrentonBricken: Super super excited for my first day as a visiting researcher at @Redwood_Neuro. Will be here and living in the Bay are…

Congrats! We’re very excited about this workshop @NeurIPSConf

Our workshop on Symmetry and Geometry in Neural Representations has been accepted to @NeurIPSConf 2022! We've put together a lineup of incredible speakers and panelists from 🧠 neuroscience, 🤖 geometric deep learning, and 🌐 geometric statistics.

Exciting new research from recent RCTN grads @DylanPaiton, @charles_irl, and others on how neuroscience-inspired recurrent layers for image processing with sparse coding grant increased selectivity and robustness to adversarial inputs

Hey twitter, check out my new paper! We investigate how competition through network interactions can lead to increased adversarial robustness and selectivity to preferred stimulus. paper: explainer 🧵: 1/8

RT @charles_irl: new paper with @DylanPaiton and others in @Redwood_Neuro now out in @ARVOJOV -- connecting ideas in visual neuroscience wi…

Preprint spotlight: Charles Frye (@charles_irl) et al. demonstrate a surprising weakness in critical point-finding methods used to support the "no bad local minima" hypothesis re: neural net losses. See 🧵 for a quick explainer, or dive deep 🏊‍♀️ in the paper:

There's a theory out there that neural networks are easy to train because their loss f'n is "nice": no bad local minima. Recent work has cast doubt on this claim on analytical grounds. In new work, we critique the numerical evidence for this claim. 🧵⤵

0

3

9

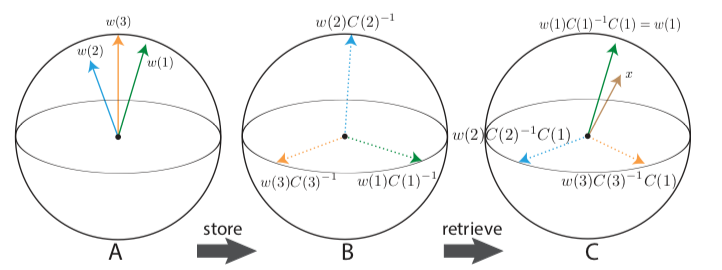

Our weekly CompNeuro journal club is back up and running for the quarter. Today, we discussed Cheung et al.’s preprint "Superposition of many models into one" from @berkeley_ai and @Redwood_Neuro. Led by AJ Kruse and Satpreet Singh.

We are looking forward to hearing from our latest seminar speaker, @pkdouglas16, who is coming up from UCLA! Her talk on modeling latency and structural organization of connections in the brain is at noon tomorrow.

New preprint spotlight: Brian Cheung (@thisismyhat), Alex Terekhov, Yubei Chen (@Yubei_Chen), Pulkit Agrawal (@pulkitology) and Bruno Olshausen report a simple but effective technique for building neural networks that can solve many different tasks:

New preprint spotlight: Charles Frye (@charles_irl), Neha Wadia, Mike DeWeese, and Kris Bouchard tell us about how to estimate the critical points of a deep linear autoencoder, complete with gotchas, tricks, and how this might be extended to other models.
