Dylan Paiton

@DylanPaiton

Followers: 305 · Following: 2K · Media: 9 · Statuses: 188

data pipelining @bonkbot_io. Former: head of research @delv_tech; dev @weights_biases; phd neuroscience uc berkeley @redwood_neuro

Oakland, California, USA

Joined October 2014

RT @karpathy: This is interesting as a first large diffusion-based LLM. Most of the LLMs you've been seeing are ~clones as far as the core…

RT @HKydlicek: 🚀 We've boosted MATH benchmark scores for popular models by 65% —no training or model changes needed! The secret? Math-Ve…

RT @mark_riedl: Open Source Initiative (OSI) says AI models aren’t “open source” unless data, weights, hyperparameters, and executable code…

RT @gottapatchemall: 1/ I did my own little hackathon last weekend designing EGFR binders for @adaptyvbio's protein design competition. I w…

RT @charles_irl: since folks care about sparse autoencoders and friends now thanks to Golden Gate Claude, TBT to a poster from a few years…

This is awesome -- checking ML training validity in GH Actions!

Added a fun new feature to the @modal_labs LLM fine-tuning repo's CI yesterday, bringing together my @weights_biases logging days and ideas from @full_stack_dl 2022 with serverless infrastructure: 👩‍🏫 memorization testing 👩‍🏫

RT @databricks: #DBRX sets a new standard for efficient open source LLMs. While it has 132B total parameters, with its fine-grained MoE arc…

RT @CeciliaZin: 🧵 The historic NYT v. @OpenAI lawsuit filed this morning, as broken down by me, an IP and AI lawyer, general counsel, and…

RT @emilymbender: You know what's most striking about this graphic? It's not that mentions of people/cities/etc from different continents c…

RT @charles_irl: Over the past month, I've been working to grok RWKV, one of the most successful challengers to Transformers for language m…

RT @charles_irl: I keep coming back to the "Python is Two Languages Now" article by @tintvrtkovic from February. It has durably changed my…

These lectures are a super valuable resource. Great job FSDL team, and thanks for releasing them!

🥞🦜 Full Stack LLM Bootcamp 🦜🥞
tl;dr We're releasing our lectures on building LLM-powered apps, for FREE.
🚀 Launch an LLM App in One Hour
✨ Prompt Engineering
🗿 LLM Foundations
🔨 Augmented LLMs
🤷 UX for LUIs
🏎️ LLMOps
🔮 What's Next?
👷 Project Walkthrough
Learn more:

RT @gottapatchemall: We're looking for an RA to help us develop new cutting-edge optogenetic tools! Ephys and lots…

RT @alighodsi: Free Dolly! Introducing the first *commercially viable*, open source, instruction-following LLM. Dolly 2.0 is available for…

databricks.com

Introducing Dolly, the first open-source, commercially viable instruction-tuned LLM, enabling accessible and cost-effective AI solutions.

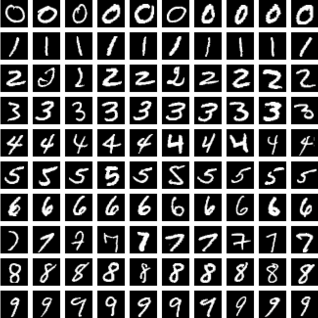

the linear baseline accuracy is quite good (not inherently problematic, but also makes it harder to know if solutions will scale to less linearly separable datasets)
