muhtasham

@Muhtasham9

Followers: 1,359 · Following: 849 · Media: 232 · Statuses: 1,628

In my pre-training years

Latent Space

Joined March 2020

A short thread about changes in the transformer architecture since 2017.

Reading articles about LLMs, you can see phrases like “we use a standard transformer architecture.”

But what does "standard" mean, and have there been changes since the original paper?

(1/6)

Evaluating abstractive summarization remains an open area for improvement. If you have ever dealt with large-scale summarization evaluation, you know how tedious it is.

Inspired by @eugeneyan's post on this topic, I hacked something together over the weekend to streamline this

@_jasonwei @arankomatsuzaki

Might contain a lot of subtle issues; see the Clever Hans effect, which is always hard to debug. The law of leaky abstractions in action, as my supervisor says

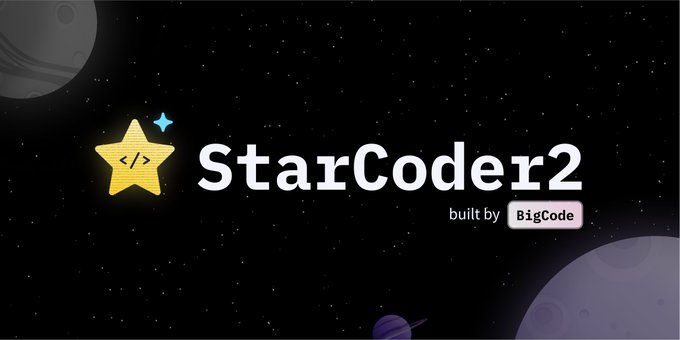

The 🤗 MLX community is amazing

Quantized StarCoder2 model variants available here:

A small guide on running and training StarCoder2 locally:

pip install -U mlx-lm

To run inference on a quantized model:

python -m mlx_lm.generate --model

Happy to show Pod-Helper:

⚡️ Lightning-speed transcription with Whisper

🔧 Built-in audio repair with good old RoBERTa

🧊 Checks your content's vibe effortlessly

See the demo below, running on TensorRT-LLM

#GenAIonRTX

#DevContest

#GTC24

@NVIDIAAIDev

If you missed out on the @full_stack_dl LLM bootcamp, don't worry! I've written a blog post about it.

I hope you find the post as informative and enjoyable as I found attending the bootcamp.

🚀 Pod-Helper now supports real-time streaming

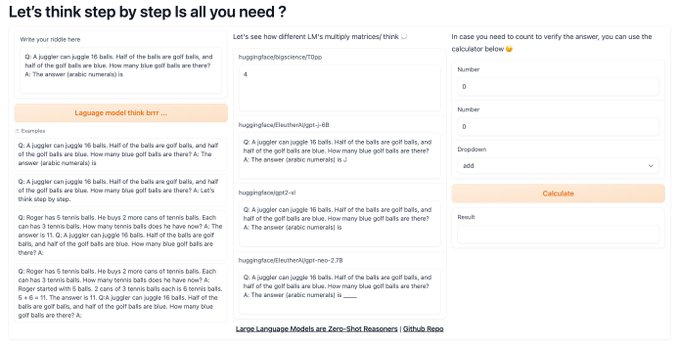

Let's see how different LMs multiply matrices / think 💭 using this Space

GPT-J-6B, I see what you did there 👀

Built using the amazing @Gradio Blocks 🧱 API; you can also use the new @huggingface 🤗 Community Tab to make suggestions and collaborate

📢 Just published: how traditional computing concepts like branch prediction and virtual memory paging shape today's Large Language Models (#LLMs).

LLMs = CPUs of early computing?

Feedback welcome!

🔗
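
One plausible reading of the paging analogy is KV-cache paging in the style of vLLM's PagedAttention. This is an assumption here, since the post itself is not reproduced. In that scheme, each sequence's attention cache lives in fixed-size physical blocks. A per-sequence block table maps logical token positions to those blocks, much as a page table maps virtual pages to physical ones. Below is a toy Python sketch with hypothetical class names that stores no actual tensors.

```python
class BlockAllocator:
    """Hands out fixed-size physical block ids from a free list (toy 'physical memory')."""

    def __init__(self, num_blocks: int):
        # Stored in reverse so that pop() hands out block 0 first.
        self.free = list(range(num_blocks - 1, -1, -1))

    def allocate(self) -> int:
        if not self.free:
            raise MemoryError("out of KV-cache blocks")
        return self.free.pop()

    def release(self, block: int) -> None:
        self.free.append(block)


class BlockTable:
    """Hypothetical per-sequence 'page table': token position -> (physical block, offset)."""

    def __init__(self, allocator: BlockAllocator, block_size: int):
        self.allocator = allocator
        self.block_size = block_size
        self.blocks: list[int] = []
        self.num_tokens = 0

    def append_token(self) -> None:
        # Allocate a new physical block only when the current one is full (on demand).
        if self.num_tokens % self.block_size == 0:
            self.blocks.append(self.allocator.allocate())
        self.num_tokens += 1

    def translate(self, position: int) -> tuple[int, int]:
        """Translate a logical token position into (physical block id, offset in block)."""
        if not 0 <= position < self.num_tokens:
            raise IndexError(position)
        return self.blocks[position // self.block_size], position % self.block_size

    def free_all(self) -> None:
        """Return every block of this sequence to the shared free list."""
        for block in self.blocks:
            self.allocator.release(block)
        self.blocks.clear()
        self.num_tokens = 0
```

Blocks come from a shared free list on demand. Two sequences can therefore interleave in physical memory, and neither has to reserve space for its maximum length up front.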

@alex_valaitis @MosaicML

Was going to skip this, but it's not correct! @MosaicML is not an open-source LLM startup, it's a platform. And don't sleep on them yet: they just released this today, with 2x the context length of LLaMA!

When your model is training and you see live footage of forward and back prop via @weights_biases

Except it’s called AI engineering now

Come to the @aiDotEngineer conf to learn more

It all started with the GPT-2 moment, but an internal model was trained only last week; it did well, and fine-tuning made it 50% better.

@amasad

MLX weights below

Happy to share the latest Zephyr recipe based on @Google's Gemma 7B 🔷🔶!

Outperforms Gemma 7B Instruct on MT Bench & AGIEval, showing the potential of RLAIF to align this series of base models 💪

🧑‍🍳 I hope this recipe enables the community to create many more fine-tunes!

“The thing that determines whether you’re the product isn’t whether you’re paying for the product: it’s whether market power and regulatory forbearance allow the company to get away with selling you.” —

@doctorow

@swyx

Shameless plug, but this would make it easier to compare

Machine learning is low-precision linear algebra

While developing the TPU, Google cut the mantissa down from 23 bits to 7 bits and invented bf16

Fast forward to now: we have 1.58-bit LLMs
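
For reference, float32 has 1 sign bit, 8 exponent bits and 23 mantissa bits. bf16 keeps the sign and all 8 exponent bits, so it has the same dynamic range, but only 7 mantissa bits. The Python sketch below shows that truncation. It also includes a ternary quantizer, assumed here to follow the absmean scheme described for BitNet b1.58: scale by the mean absolute weight, then round and clip to {-1, 0, +1}. Three levels carry log2(3) ≈ 1.58 bits, hence the name. The function names are hypothetical. Real bf16 conversion usually rounds to nearest-even rather than truncating.

```python
import math
import struct


def to_bf16_bits(x: float) -> int:
    """Convert x to float32, then keep its upper 16 bits as a bfloat16 bit pattern.

    float32: 1 sign | 8 exponent | 23 mantissa bits
    bf16:    1 sign | 8 exponent |  7 mantissa bits
    Plain truncation is used here for simplicity (hardware typically rounds).
    """
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    return bits32 >> 16


def from_bf16_bits(bits16: int) -> float:
    """Widen a bf16 bit pattern back to float32 by zero-filling the low 16 bits."""
    (value,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return value


def ternary_quantize(weights: list[float], eps: float = 1e-8) -> tuple[list[int], float]:
    """Absmean ternary quantization (assumed BitNet b1.58-style).

    Scale by gamma = mean(|w|), then round and clip each weight to {-1, 0, 1}.
    Returns the ternary weights and the scale gamma needed to dequantize.
    """
    gamma = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma


# Information content of one three-valued weight.
BITS_PER_TERNARY_WEIGHT = math.log2(3)

if __name__ == "__main__":
    b = to_bf16_bits(3.14159265)
    print(hex(b), from_bf16_bits(b))  # pi survives with only ~2-3 significant digits
    print(ternary_quantize([0.9, -0.05, -1.3, 0.4]))
```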

Top recommendation:

A beautifully written, in-depth explanation of these concepts, which I failed to deliver in my initial blog post

High-quality tokens: future LLMs could boost their reasoning and pick up a sense of humor from @charles_irl if this blog ends up in their dataset

PSA if you need GPUs for your research: hit these companies up, they have compute grants.

@PrimeIntellect, @dstackai, @fal

@fal especially if you work on diffusion models

It´s here

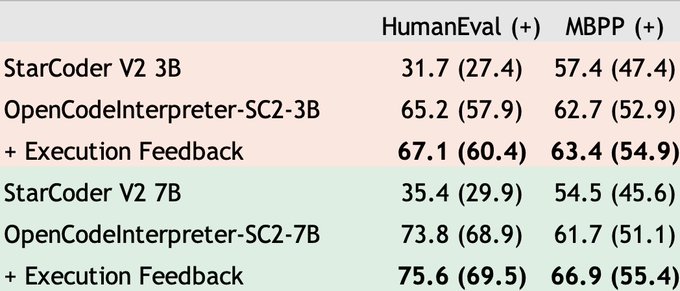

Accelerate your coding tasks, from code completion to code summarization with StarCoder2, the latest state-of-the-art, open code

#LLM

built by

@HuggingFace

,

@ServiceNow

, and NVIDIA.

Learn more 👉

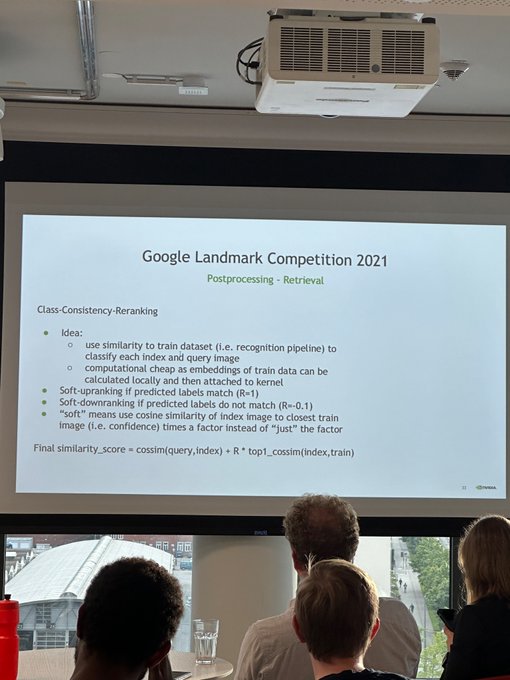

Reminder: join the amazing Transformers lecture by @giffmana tomorrow

🥨NEW EVENT🥨

Transformers in all their glorious detail: @GoogleAI Brain Team scientist Lucas Beyer (@giffmana) will explain the currently most dominant deep learning architecture for natural language processing in an exclusive event with @MunichNlp.

Details below👇

Sharing a @huggingface collection of older models, from RoBERTa all the way to GPT-2, pre-trained and fine-tuned on the Tajik language. Stay tuned for more: Mistral-7B, Llama-2-7B, and others are on the way

"Flops are cheap, bandwidth is adding more pins, and latency is physics. Deal with it."

@vrushankdes

Great read! My experience is that you’re fighting physics but also the NVIDIA compiler and the stack overall, and even after pulling *a lot* of tricks we still can’t achieve more than ~80-90% of memory bandwidth on many kernels that you’d naively think should reach ~100%. And the rabbit hole
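
Here is a back-of-the-envelope roofline sketch in Python that makes "~80-90% of memory bandwidth" concrete. The peak numbers and the 1.9 ms timing are hypothetical placeholders, not measurements of any real GPU. The kernel is an idealized elementwise add that reads two float32 arrays and writes one.

```python
from dataclasses import dataclass


@dataclass
class Hardware:
    peak_flops: float      # FLOP/s, hypothetical placeholder
    peak_bandwidth: float  # bytes/s, hypothetical placeholder


def arithmetic_intensity(flops: float, bytes_moved: float) -> float:
    """FLOPs performed per byte moved to or from DRAM."""
    return flops / bytes_moved


def roofline_bound(hw: Hardware, intensity: float) -> float:
    """Attainable FLOP/s in the roofline model: min(compute roof, bandwidth roof)."""
    return min(hw.peak_flops, intensity * hw.peak_bandwidth)


def bandwidth_utilization(hw: Hardware, bytes_moved: float, seconds: float) -> float:
    """Fraction of peak memory bandwidth a kernel actually achieved."""
    return (bytes_moved / seconds) / hw.peak_bandwidth


if __name__ == "__main__":
    hw = Hardware(peak_flops=100e12, peak_bandwidth=2e12)
    n = 1 << 28               # number of float32 elements
    flops = n                 # one add per element
    bytes_moved = 3 * 4 * n   # read a, read b, write c
    ai = arithmetic_intensity(flops, bytes_moved)
    print(f"intensity = {ai:.3f} FLOP/byte")
    print(f"bound     = {roofline_bound(hw, ai) / 1e12:.2f} TFLOP/s")
    # 1.9 ms is a made-up example timing, not a benchmark result.
    print(f"bw util   = {bandwidth_utilization(hw, bytes_moved, 1.9e-3):.0%}")
```

At 1/12 FLOP per byte, the add is memory-bound by a wide margin, so FLOPs really are "cheap" here. The only lever left is how close the kernel gets to peak bandwidth.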

Great tune! Runs smoothly on an M2 with 8 GB

python -m mlx_lm.generate --model mlx-community/OpenCodeInterpreter-SC2-3B-4bit --prompt "Write a quick sort in C++" --temp 0.0 --colorize

💫 StarCoder, released today by @BigCodeProject, is a prime example of open source outcompeting

Big shout-out to @lvwerra, @harmdevries77, @Thom_Wolf, @huggingface, and @ServiceNowRSRCH

Image and prompt by yours truly

@marksaroufim's teaching style is like a casual conversation with a senior engineer on your team

CUDA-MODE 8: CUDA performance gotchas

How to maximize occupancy, coalesce memory accesses, and minimize control divergence? Sequel to lecture 1, with a focus on profiling.

Speaker: @marksaroufim (today in ~45 mins)

Sat, Mar 2, 20:00 UTC
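
Here is a toy model of why coalescing matters, written as a Python sketch. It counts how many memory sectors one warp touches when thread `i` loads the element at index `base + i * stride`. The model makes several simplifying assumptions: 32 threads per warp, 4-byte elements, 32-byte sectors, and a sector-aligned array. Real behavior also depends on caches and on the GPU architecture.

```python
WARP_SIZE = 32     # threads per warp (assumed)
ELEM_BYTES = 4     # e.g. float32
SECTOR_BYTES = 32  # assumed memory transaction granularity


def sectors_touched(stride: int, base: int = 0) -> int:
    """Distinct sectors hit when thread i reads element base + i * stride."""
    addresses = [(base + i * stride) * ELEM_BYTES for i in range(WARP_SIZE)]
    return len({addr // SECTOR_BYTES for addr in addresses})


def load_efficiency(stride: int, base: int = 0) -> float:
    """Bytes the warp actually needs divided by bytes fetched in whole sectors."""
    useful = WARP_SIZE * ELEM_BYTES
    fetched = sectors_touched(stride, base) * SECTOR_BYTES
    return useful / fetched


if __name__ == "__main__":
    for stride in (1, 2, 8, 32):
        print(f"stride={stride:2d} sectors={sectors_touched(stride):2d} "
              f"efficiency={load_efficiency(stride):.1%}")
```

Under these assumptions, unit stride needs 4 sectors at 100% efficiency. Stride 2 wastes half of every sector. Strides of 8 or more touch one sector per thread, at 12.5%. Even a one-element misalignment (`base=1`) spills into a fifth sector.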

“LLMs are not databases, and they are not up to date; think of them as a reasoning engine, and some sort of retriever will solve the issue of up-to-date knowledge”

@sama
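
Below is a minimal Python sketch of that "reasoning engine plus retriever" split. Scoring documents by word overlap is a deliberately crude stand-in for a real retriever such as BM25 or embedding search. `build_prompt` only assembles the text; the LLM call itself is left out. All function names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased alphanumeric words, as a set (order and counts ignored)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the query.

    sorted() is stable even with reverse=True, so ties keep their input order.
    """
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Place freshly retrieved context in front of the question for the LLM to reason over."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "StarCoder2 was released by BigCode",
        "Paris is the capital of France",
    ]
    print(build_prompt("Who released StarCoder2?", docs, k=1))
```

Keeping knowledge in the document store means it can be updated without retraining; the model only has to reason over what is retrieved.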

Beating OpenAI's Whisper large-v2 with a fine-tuned *medium* model: from 85.8 WER down to 23.1 WER

Special thanks to @LambdaAPI and the @huggingface team, especially @sanchitgandhi99 and @reach_vb
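
WER (word error rate) is the word-level edit distance, that is substitutions + deletions + insertions, divided by the number of reference words. The 85.8 and 23.1 figures above are therefore percentages. Below is a minimal Python sketch. It applies no text normalization such as casing or punctuation stripping, which real evaluation pipelines usually do before scoring, so its numbers need not match any particular toolkit exactly.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference prefix seen so far and hyp[:j];
    # it starts as the row for an empty reference prefix.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: ref word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra hypothesis word
                prev[j - 1] + cost,  # substitution (or match when cost == 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

In the example, one substitution ("sat" to "sit") and one deletion ("the") over six reference words give a WER of 2/6 ≈ 33.3%. Because insertions count too, WER can exceed 100%.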

TIL: @lexfridman hails from Buston, Tajikistan 🇹🇯

When our paths cross, I'll be ready with a friendly, "What's up, homie?"

Thanks @dk21 and @jefrankle for this amazing session; can’t wait for the upcoming ones

We are LIVE🎉

Tune in for Lesson 3 of the Training & Fine-Tuning LLMs Course with @MosaicML 📚

You will learn data scaling laws to construct custom datasets, & dive deep into data curation, ethics, storage, & streaming best practices.

Stream now🔗

With the swarm of users experimenting with @bing Chat, aka Sydney, I get vibes similar to those of the “OMG, LaMDA is sentient” guy. Again, many things could be said, but before folks start posting Terminator images, let me leave this here…
