Leandro von Werra

@lvwerra

Followers: 8K · Following: 5K · Media: 298 · Statuses: 2K

Machine learning @huggingface: co-lead of @bigcodeproject and maintainer of TRL.

Bern, Switzerland

Joined March 2019

Super proud of what the @BigCodeProject community achieved. Building best-in-class code LLMs in an open and collaborative way is no easy feat and is the result of the hard work of many community members!

Introducing StarCoder2 and The Stack v2 ⭐️! StarCoder2 is trained on 4T+ tokens with a 16k-token context and repository-level information. It is all built on The Stack v2, the largest code dataset, with 900B+ tokens. All code, data, and models are fully open!
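Training "with repo-level information" roughly means that files from the same repository are packed into one training sequence, so the model can attend across files. Below is a minimal Python sketch under stated assumptions: the separator tokens `<repo_name>` and `<file_sep>` are our assumption about StarCoder2's format (check the tokenizer and model card before relying on them), and a character budget stands in for the 16k-token limit, since real truncation needs the actual tokenizer.

```python
from dataclasses import dataclass

# Assumed separator tokens; verify against the StarCoder2 tokenizer before use.
REPO_TOKEN = "<repo_name>"
FILE_SEP = "<file_sep>"


@dataclass
class SourceFile:
    path: str
    content: str


def build_repo_sample(repo: str, files: list[SourceFile], max_chars: int) -> str:
    """Pack the files of one repository into a single training string.

    `max_chars` is a crude character-level stand-in for a token budget
    (e.g. a 16k-token context); a real pipeline would count tokens.
    Files are kept in the order given.
    """
    parts = [f"{REPO_TOKEN}{repo}"]
    used = len(parts[0])
    for f in files:
        chunk = f"{FILE_SEP}{f.path}\n{f.content}"
        if used + len(chunk) > max_chars:
            break  # stop at the first file that would overflow the budget
        parts.append(chunk)
        used += len(chunk)
    return "".join(parts)


sample = build_repo_sample(
    "octo/demo",  # hypothetical repository name
    [SourceFile("utils.py", "def add(a, b):\n    return a + b\n"),
     SourceFile("main.py", "from utils import add\nprint(add(1, 2))\n")],
    max_chars=16_000,
)
print(sample)
```

Because `main.py` follows `utils.py` in the same sequence, a model trained on such samples can see the definition of `add` when predicting its use.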

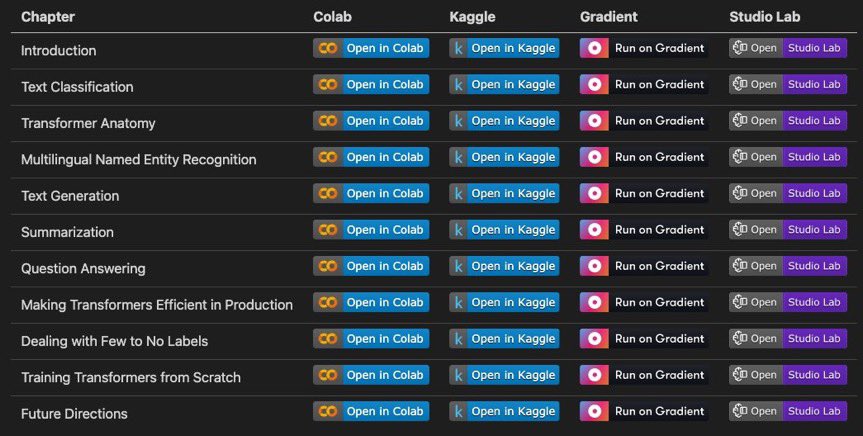

Thanks to @_lewtun, all chapters from our book "NLP with Transformers" are now just one click away! And you can run them on your favourite platform:

- @Google Colab
- @kaggle Notebooks
- @HelloPaperspace Gradient
- @awscloud StudioLab

#transformersbook

I built my first Python package: jupyterplot! Plot real-time results in Jupyter notebooks. It is built on @andreas_madsen's excellent python-lrcurve library. Effortless development and publishing with #nbdev by @jeremyphoward and @GuggerSylvain.

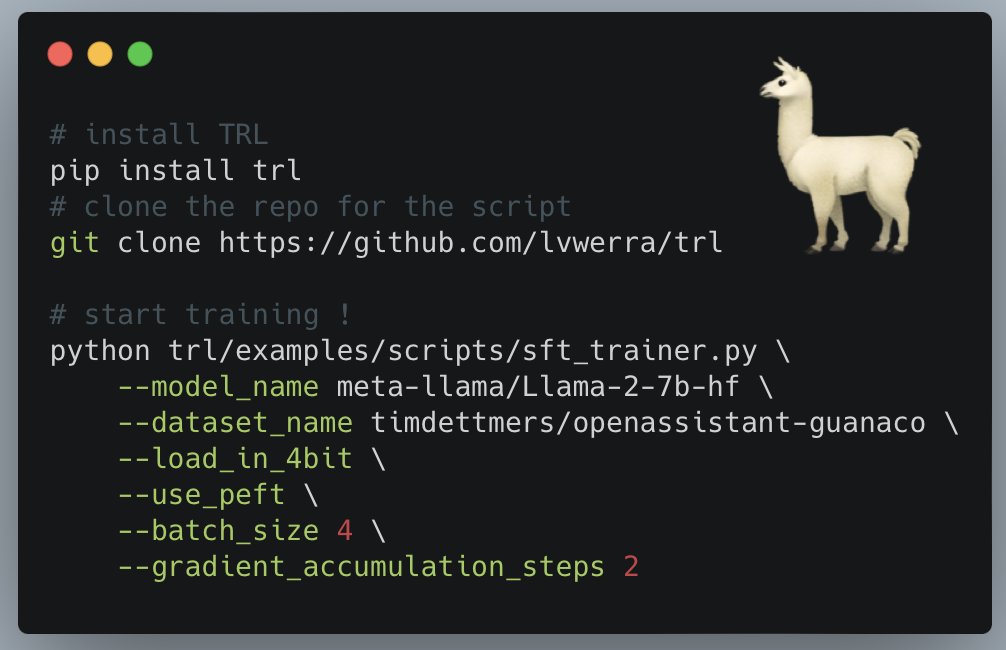

Excited to share my quarantine work of the past couple of weeks: The Transformer Reinforcement Learning (trl) library. Train @huggingface transformer language models with sparse rewards via reinforcement learning (PPO).
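PPO updates the policy while keeping it close to the policy that generated the samples by clipping the probability ratio between the two. Here is a minimal sketch of the standard clipped surrogate objective (Schulman et al., 2017) in plain Python. It is not trl's implementation: per-action log-probabilities and advantage estimates are assumed to be precomputed, and with sparse rewards those advantages are what spread a single end-of-sequence reward back over the generated tokens.

```python
import math


def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Mean clipped surrogate objective L^CLIP (to be maximized).

    logp_new:   log-probabilities of the taken actions under the current policy
    logp_old:   log-probabilities under the policy that collected the samples
    advantages: advantage estimates for the same actions
    """
    if not (len(logp_new) == len(logp_old) == len(advantages)) or not advantages:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        # Pessimistic bound: take the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# The second action became e (~2.7) times more likely, but its gain is capped at 1 + eps.
print(ppo_clip_objective([0.0, 1.0], [0.0, 0.0], [1.0, 1.0]))
```

For positive advantages the clip removes any incentive to push the ratio above `1 + eps`; for negative advantages the unclipped term is kept, so large harmful updates are still fully penalized.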

@EugeneVinitsky You could upload it to the Hugging Face Hub, which is free to host and download. It also adds a dataset viewer if it's in a common format/modality, but it's git-lfs under the hood, so you can upload files in whatever format you want. You can either use the `datasets` or…

Excited to finally share what kept us up at night for the past few months: Natural Language Processing with Transformers, a book about building real-world applications with 🤗 transformers, in collaboration with @_lewtun, @Thom_Wolf, and @OReillyMedia.

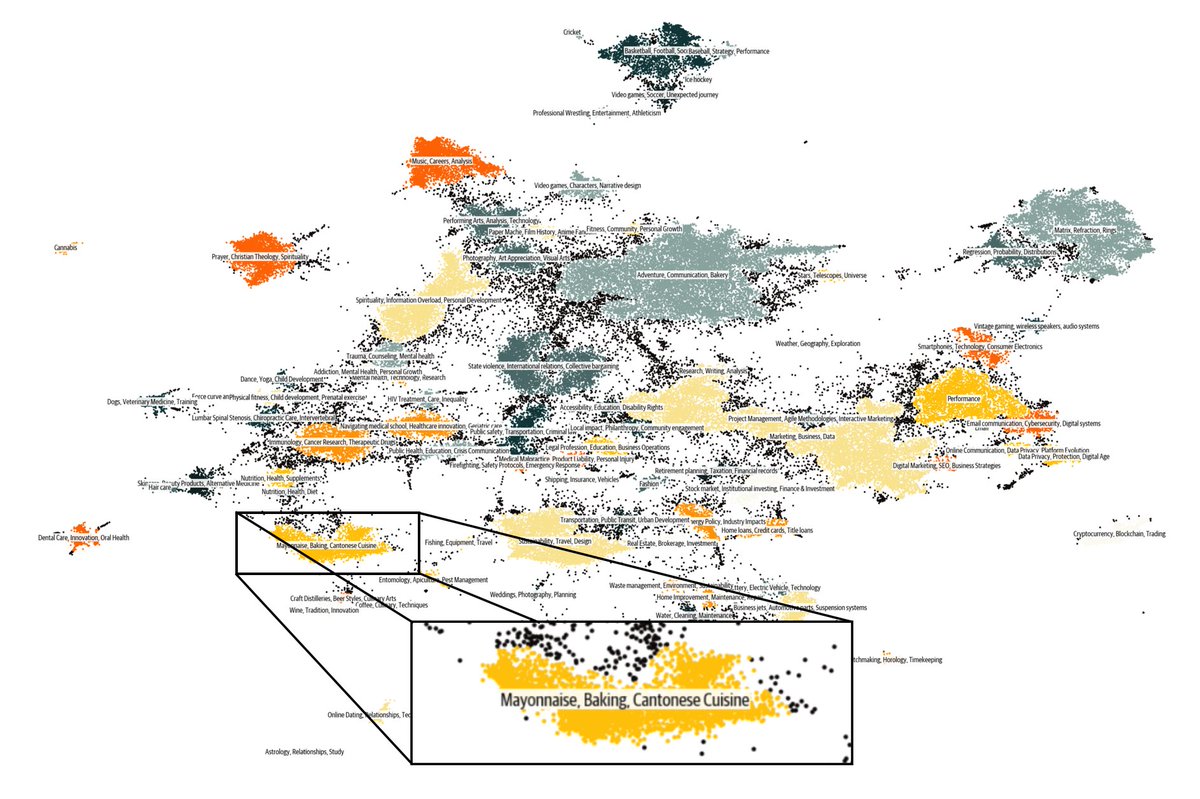

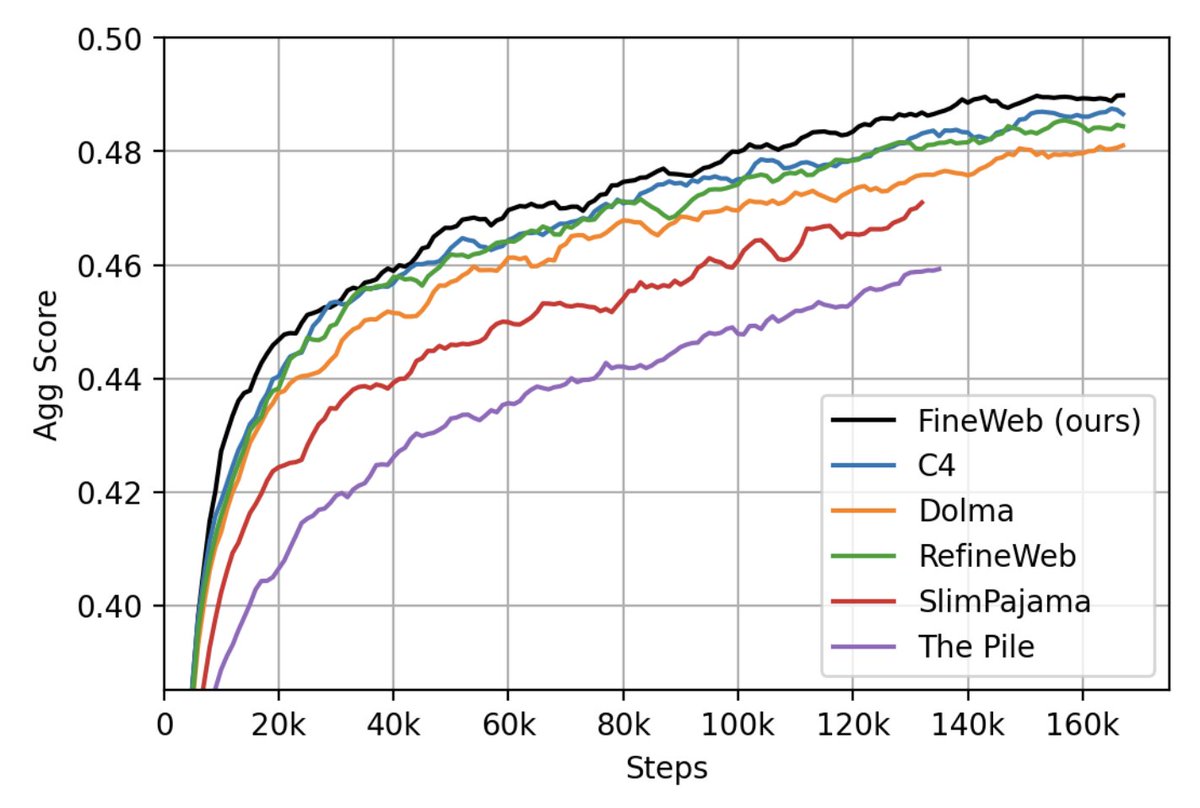

We released 🍷 FineWeb: 15T high-quality tokens from the web. It's the best ready-to-use AND the largest pretraining dataset. It outperforms all other datasets in our 350B-token ablations, and thanks to its sheer size it also scales to much longer training runs!

We have just released 🍷 FineWeb: 15 trillion tokens of high-quality web data. We filtered and deduplicated all of CommonCrawl between 2013 and 2024. Models trained on FineWeb outperform RefinedWeb, C4, DolmaV1.6, The Pile and SlimPajama!
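Near-duplicate detection on web-scale corpora is commonly done with MinHash, which estimates the Jaccard similarity between documents' sets of word n-grams from short signatures instead of comparing full texts. The sketch below illustrates that general technique in plain Python; it is not FineWeb's actual pipeline, and the shingle size (5 words) and number of hash functions (128) are arbitrary illustrative choices.

```python
import hashlib


def shingles(text: str, n: int = 5) -> set[str]:
    """Set of word n-grams ("shingles") of a document."""
    words = text.lower().split()
    if len(words) <= n:
        return {" ".join(words)}
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def minhash_signature(items: set[str], num_perm: int = 128) -> list[int]:
    """One minimum per seeded hash function; each seed is used as a BLAKE2b salt."""
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(16, "little")
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode(), digest_size=8, salt=salt).digest(), "little")
            for s in items
        ))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """Fraction of hash functions whose minima agree, an estimate of Jaccard similarity."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)


doc_a = "the quick brown fox jumps over the lazy dog near the river bank"
doc_b = "the quick brown fox jumps over the lazy dog near the river shore"
sig_a = minhash_signature(shingles(doc_a))
sig_b = minhash_signature(shingles(doc_b))
# The true Jaccard similarity of these two shingle sets is 8/10 = 0.8.
print(f"estimated Jaccard: {estimated_jaccard(sig_a, sig_b):.2f}")
```

At corpus scale, signatures are usually bucketed with locality-sensitive hashing so that only candidate pairs are compared, and pairs above a chosen similarity threshold are treated as duplicates.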

Not much is known about the pretraining data of Code Llama, but there is some good evidence that @StackOverflow was part of it. I found some breadcrumbs while working on a demo with a hello-world example: suddenly, the model started generating a discussion between two users.

Tokenizing 200k texts with @huggingface's FastTokenizers: <20s. Drawback: not enough time to get a fresh coffee 🙂

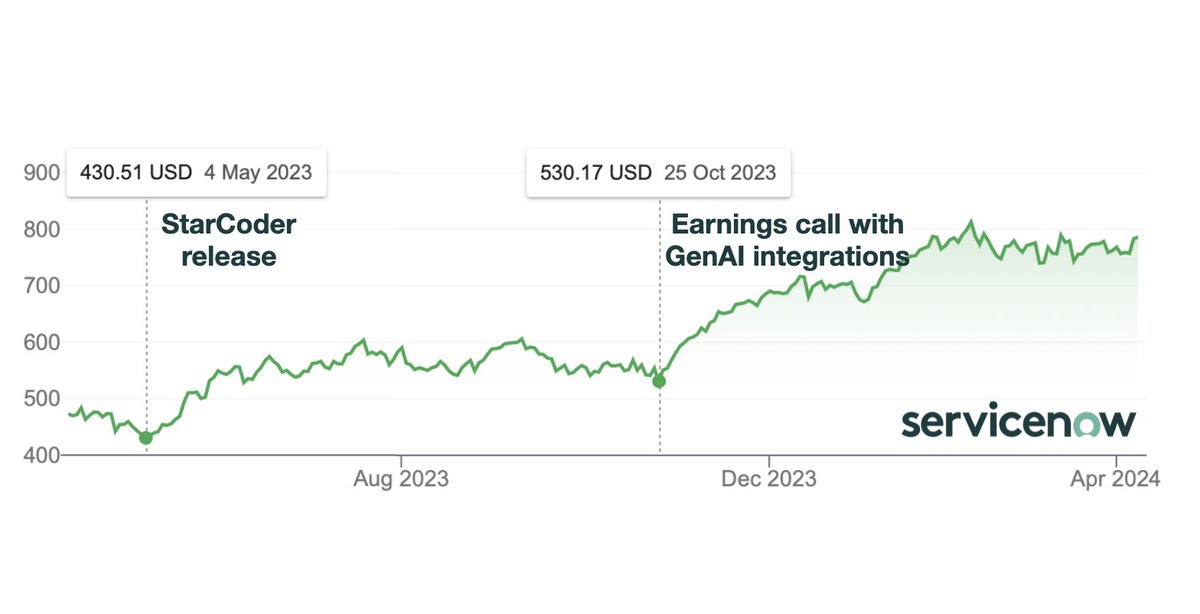

Why would any company release a strong LLM for free? This is @ServiceNow's (mkt cap ~$160Bn) stock price since the release of StarCoder. It only required a small amount of compute and a handful of people, while building up a lot of valuable know-how fast. It's not a zero-sum game!

Finding the right code snippet to solve your problem is a super useful skill. @novoselrok made this much easier with a new tool. On top of that, he wrote an excellent in-depth guide on how to build a search model with transformers.
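A transformer-based code search model typically embeds the query and every code snippet into vectors and then ranks snippets by cosine similarity. Here is a minimal sketch of that ranking step in plain Python; the tiny hand-written vectors are hypothetical placeholders for the embeddings a trained encoder would produce, and nothing here is taken from @novoselrok's tool.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def search(query_vec: list[float], snippet_vecs: dict[str, list[float]], top_k: int = 2) -> list[str]:
    """Return the top_k snippet names, most similar to the query first."""
    ranked = sorted(snippet_vecs,
                    key=lambda name: cosine(query_vec, snippet_vecs[name]),
                    reverse=True)
    return ranked[:top_k]


# Hypothetical 3-d embeddings; a real encoder outputs hundreds of dimensions.
snippets = {
    "read_csv_file": [0.9, 0.1, 0.0],
    "http_get_request": [0.0, 0.8, 0.6],
    "parse_json_string": [0.2, 0.7, 0.1],
}
query = [1.0, 0.2, 0.0]  # stands in for an embedding of "load a CSV into memory"
print(search(query, snippets))
```

Since snippet embeddings do not depend on the query, they can be computed once and indexed; only the query is embedded at search time.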

Working with nbdev is such a great experience! It finally breaks the cycle of starting to code in notebooks, then exporting the code to a library, and finally writing documentation. Awesome job @jeremyphoward & @GuggerSylvain!

With the release of ChatGPT, we thought it was a good time to write a blog post explaining how RL from human feedback works and what the current state of this exciting new field is. A collaboration with @natolambert and @carperai folks @lcastricato and @Dahoas1.
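A core ingredient of RLHF is a reward model trained on human comparisons: given a preferred and a rejected response to the same prompt, it is pushed to score the preferred one higher. Below is a minimal sketch of the commonly used pairwise (Bradley-Terry style) loss, -log σ(r_chosen - r_rejected), in plain Python; the scalar rewards are assumed to come from a reward model that is not shown.

```python
import math


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed in a numerically stable way."""
    margin = r_chosen - r_rejected
    # -log(sigmoid(m)) == softplus(-m) == log(1 + exp(-m)); branch to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Small loss when the preferred answer already scores higher, large loss otherwise.
print(pairwise_reward_loss(2.0, 0.0), pairwise_reward_loss(0.0, 2.0))
```

During training this loss is averaged over a batch of comparisons; only the difference between the two scores matters, so the reward scale is free to drift unless it is normalized.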

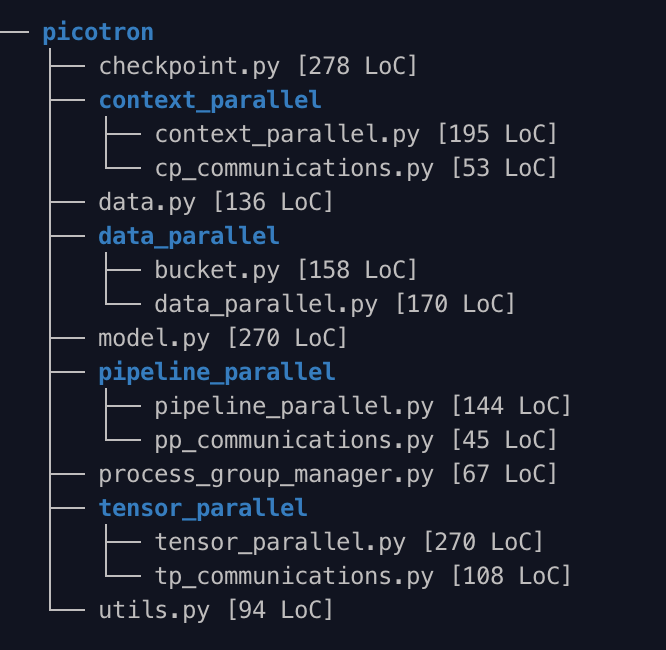

Check out the code and the super detailed video walkthrough! Work led by @Haojun_Zhao14 and @FerdinandMom!

A few weeks back, @harmdevries77 released an interesting analysis of scaling laws (go smol, or go home!) describing what @karpathy coined the "Chinchilla trap". A quick thread on when to deviate left or right from the Chinchilla-optimal point, and the implications. 🧵

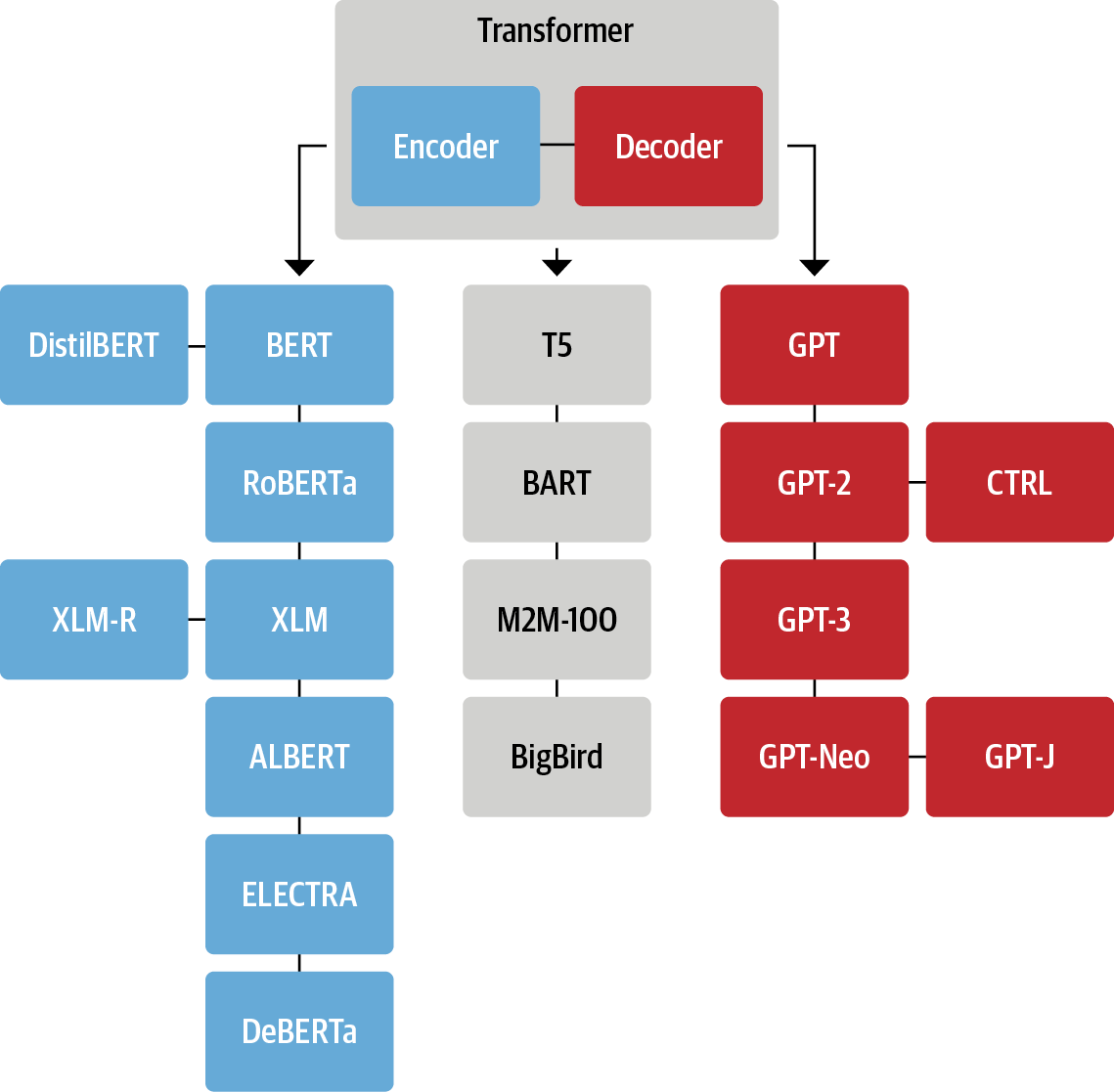

One piece of feedback we frequently get on the print version of our book is that color would add to the reading experience. We invested a lot of time in creating the figures, and thanks to @OReillyMedia we released ALL of them in the book's repository. A thread with a few of my favourites:

I had a blast last Wednesday at the RL Meetup Zürich talking about combining the @huggingface transformers library with reinforcement learning to fine-tune language models. In case you are interested, the talk is now online.

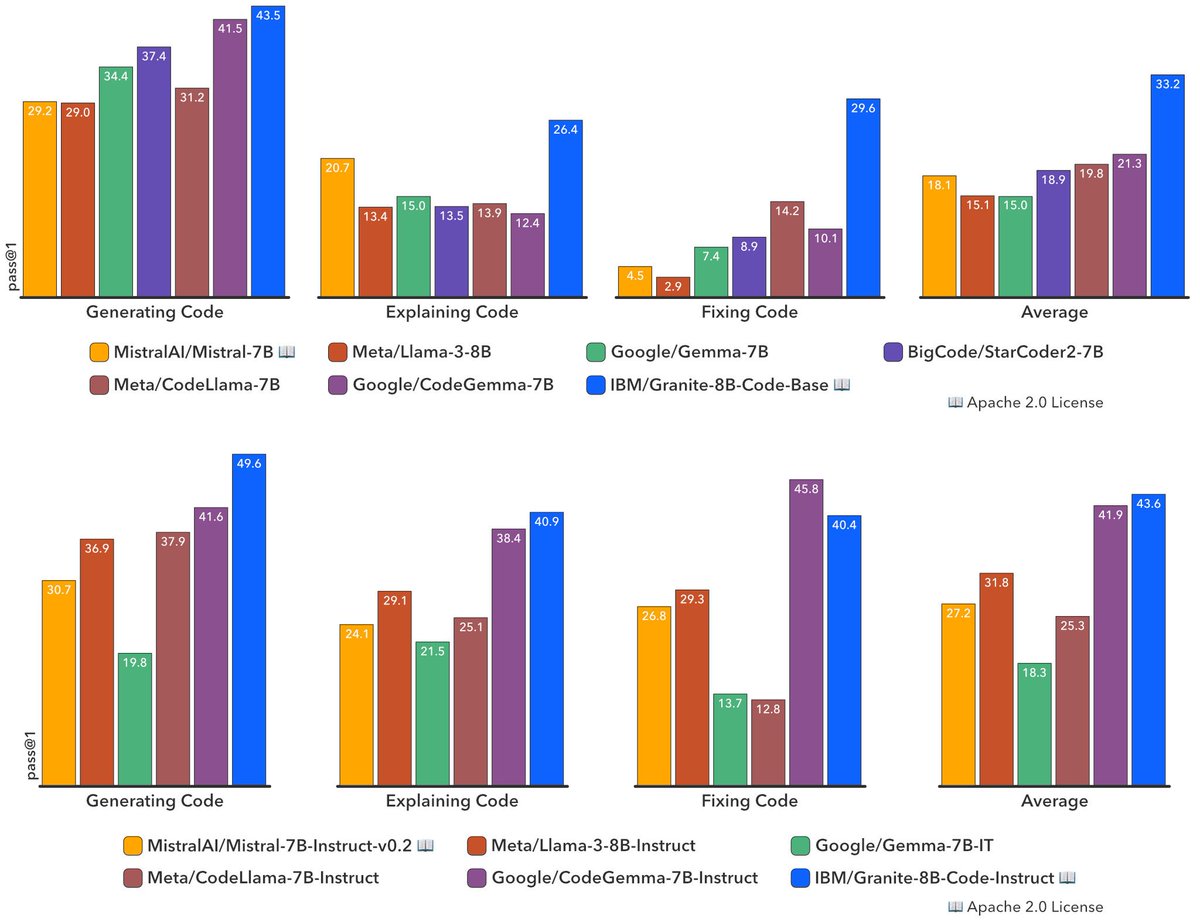

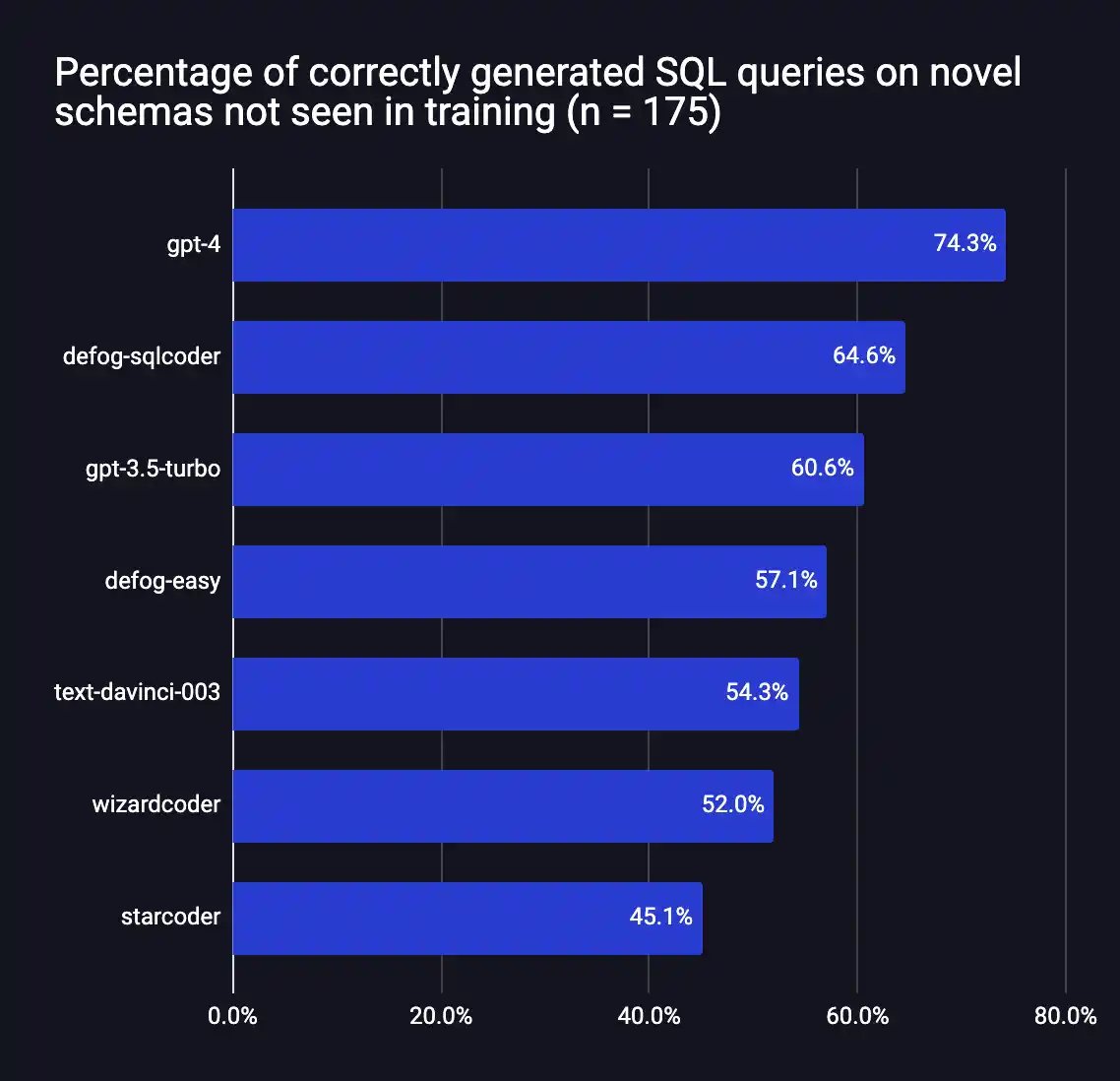

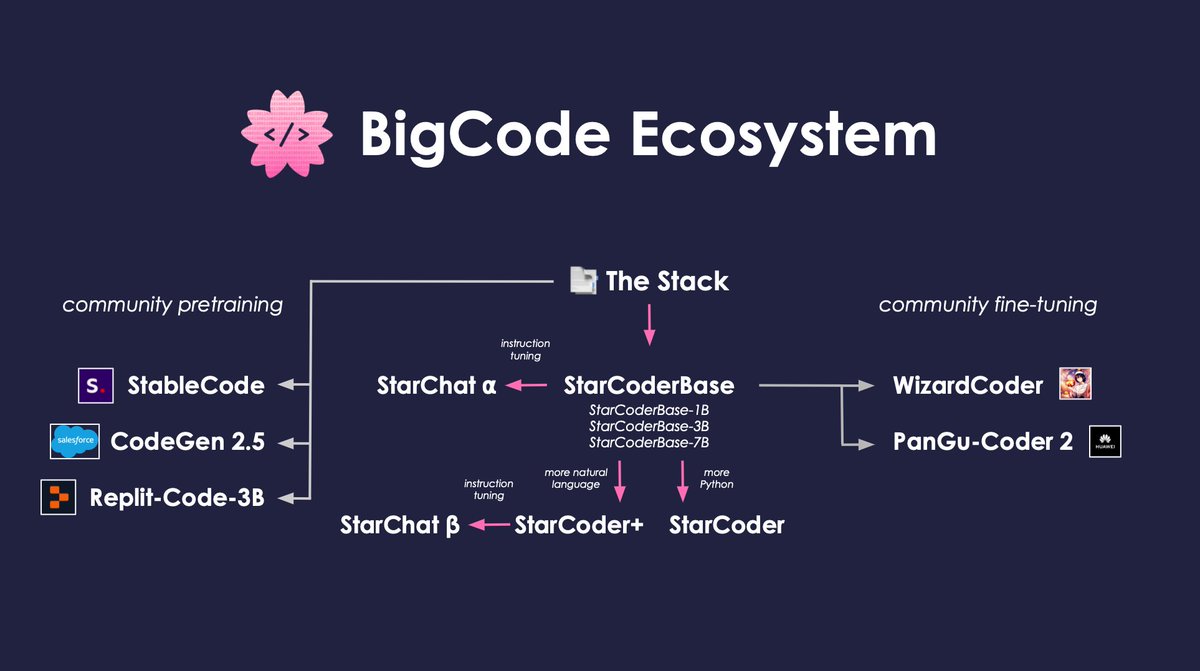

The code family is expanding extremely fast: welcome, SQLCoder! Since I made this graphic two weeks ago, there have been three awesome new code models: @deci_ai's small and powerful DeciCoder, @Muennighoff et al.'s OctoCoder trained on commits, and now @defogdata's SQLCoder 🚀

We just open-sourced SQL Coder, a 15B param text-to-SQL model that outperforms OpenAI's gpt-3.5! When fine-tuned on an individual schema, it outperforms gpt-4. The model is small enough to run on a single A100 40GB in 16-bit floats, or on a single…
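The single-GPU claim is easy to sanity-check with back-of-the-envelope arithmetic: 15B parameters stored as 16-bit floats take 2 bytes each, about 30 GB of weights, which fits in 40 GB. A small Python helper for this estimate; it counts weights only and ignores activations, the KV cache, and framework overhead, all of which need extra memory at inference time.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Weight memory of a 15B-parameter model at common precisions.
for bits in (32, 16, 8, 4):
    print(f"15B params @ {bits}-bit: {weight_memory_gb(15e9, bits):.1f} GB")
```

Halving the bits per parameter halves the weight memory, which is why lower-precision formats let the same model fit on smaller GPUs.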

Our book about NLP with Transformers is taking shape, and we are working on the final chapters. We would love to know what you want to see covered in the book. If you had to choose one topic that the currently available resources don't cover well, what would it be? Let us know!

There is an incredible Chrome extension by @JiaLi52524397 that lets you use StarCoder in Jupyter Notebooks. It uses previous cells and their outputs to make predictions. As such, it can infer your DataFrame's columns from the cell outputs or interpret your Markdown text!

Proud to be part of this incredible journey with @LoubnaBenAllal1, @lvwerra & the amazing team! 🎉 StarCoder has this unique capacity to incorporate Jupyter text/output. Experience it for yourself here: …
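Conditioning on earlier cells can be sketched as turning a notebook into one text prompt in which Markdown cells, code cells, and their text outputs appear in order. The Python sketch below works on the standard `.ipynb` JSON structure (`cells`, `cell_type`, `source`, `outputs`); the `<jupyter_text>`, `<jupyter_code>`, and `<jupyter_output>` markers are an assumption about StarCoder's notebook special tokens, and this is not the extension's actual code.

```python
import json

# Assumed special tokens for notebook structure; check the StarCoder tokenizer
# before relying on these exact strings.
TEXT, CODE, OUTPUT = "<jupyter_text>", "<jupyter_code>", "<jupyter_output>"


def cell_source(cell: dict) -> str:
    """nbformat stores `source` either as one string or as a list of lines."""
    src = cell.get("source", "")
    return "".join(src) if isinstance(src, list) else src


def notebook_to_prompt(nb: dict) -> str:
    """Concatenate Markdown cells, code cells, and their text outputs in order."""
    parts = []
    for cell in nb.get("cells", []):
        if cell.get("cell_type") == "markdown":
            parts.append(TEXT + cell_source(cell))
        elif cell.get("cell_type") == "code":
            parts.append(CODE + cell_source(cell))
            for out in cell.get("outputs", []):
                # Stream outputs carry `text`; execute_result/display_data carry `data`.
                text = out.get("text") or out.get("data", {}).get("text/plain", "")
                if text:
                    parts.append(OUTPUT + ("".join(text) if isinstance(text, list) else text))
    return "".join(parts)


notebook = json.loads("""{
  "cells": [
    {"cell_type": "markdown", "source": ["# Sales analysis"]},
    {"cell_type": "code", "source": ["df.columns"],
     "outputs": [{"output_type": "execute_result",
                  "data": {"text/plain": ["Index(['region', 'revenue'])"]}}]}
  ]
}""")
# The model would continue generating after the final code marker.
print(notebook_to_prompt(notebook) + CODE)
```

Including the output of `df.columns` in the prompt is what lets a model "know" the DataFrame's column names when completing the next cell.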

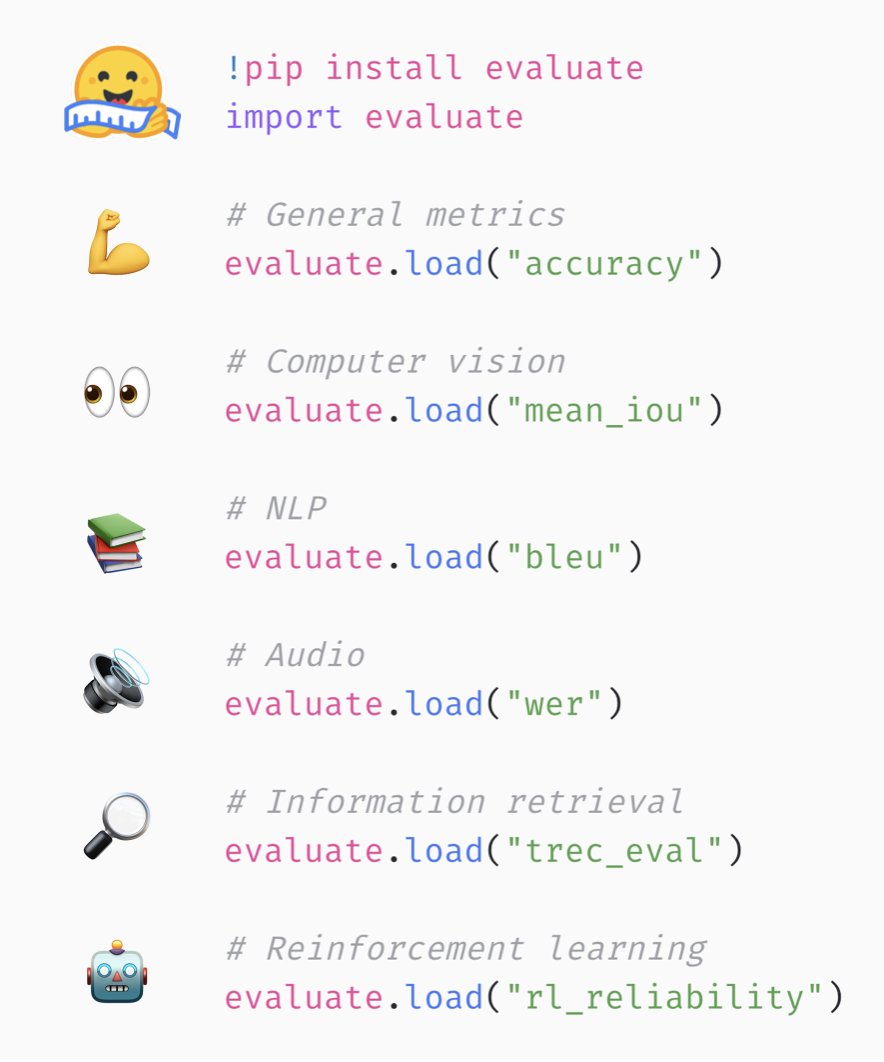

We released evaluate==0.3.0 🎉🎉🎉 A few highlights:

- text2text evaluators (incl. translation and summarization)!
- new bias metrics!
- configure dataset splits and subsets in the evaluator!

Special thanks to contributors @mathemakitten & @SashaMTL ♥️

The FineWeb tech report is out! 🍷 Find all the details involved in building a high-quality pretraining dataset for LLMs, including the new super strong FineWeb-Edu subset with 1.3T tokens. [built with the beautiful @distillpub template by @ch402 et al.]

This week's release of 💫 StarCoder was the culmination of 6 months of incredibly hard work by many collaborators in @BigCodeProject. It's great to see people enjoying it and already using it for various things. It is also in fine company among the trending models on the Hub!

I took some time to reflect on the past 1+ year of the @BigCodeProject. Here are a few of my learnings from leading it during this time, and some ingredients I think are important for a successful open collaboration in ML.

What is BigCode? BigCode is an open scientific collaboration.

It turns out you can also use the trl library to tune GPT-2 to generate negative movie reviews 😈. Experiment by @mrm8488; the model is available on @huggingface models.

One consequence of the plot is that as you go left, you pay with data but gain inference efficiency, and as you go right, you gain data efficiency but pay with inference cost. For example, at the critical model size (1/3 of the optimal model size, 100% compute overhead) you need 6x more data! 🧵

There is a fascinating recent trend of training *smaller models for longer* w.r.t. Chinchilla-optimal predictions. Best explanation I've seen of this? This new blog post by @harm_devries (with collaborators of the @BigCodeProject). Clearly these are only…
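The 6x figure follows from the standard approximation that training compute is C ≈ 6ND for N parameters and D training tokens: if the model is 1/3 of the compute-optimal size and you accept 100% compute overhead (2x compute), the data must grow by 2 / (1/3) = 6. The Python sketch below encodes only that arithmetic, plus the rough rule that a forward pass costs about 2N FLOPs per token; the critical model size and its overhead come from the scaling-law analysis itself, not from this code.

```python
def data_multiplier(model_fraction: float, compute_overhead: float) -> float:
    """Factor by which training tokens must grow relative to the optimal run.

    With C ~ 6 * N * D, shrinking the model to model_fraction * N_opt while
    spending (1 + compute_overhead) * C_opt gives
    D = (1 + compute_overhead) / model_fraction * D_opt.
    """
    return (1.0 + compute_overhead) / model_fraction


def inference_speedup(model_fraction: float) -> float:
    """Inference cost per token scales roughly as 2 * N, so it shrinks with N."""
    return 1.0 / model_fraction


# Critical-model-size example from the thread: 1/3 of the optimal size, 100% overhead.
print(data_multiplier(1 / 3, 1.0))  # tokens relative to the Chinchilla-optimal run
print(inference_speedup(1 / 3))     # relative reduction in per-token inference cost
```

This is the trade-off in numbers: going left on the plot costs 6x the data and 2x the training compute, in exchange for roughly 3x cheaper inference on every generated token.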

I am really excited to see that @OpenAI keeps pushing AI safety at the interface of NLP and RL using human feedback. If you want to take a @huggingface transformer model for a spin, check out the TRL library.

We've used reinforcement learning from human feedback to train language models for summarization. The resulting models produce better summaries than 10x larger models trained only with supervised learning:
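In this kind of RLHF setup, the reward model's score is typically combined with a KL penalty that keeps the fine-tuned policy close to the original (reference) model, so it cannot drift into text that merely games the reward model. Below is a minimal sketch of one common way to do this, in plain Python: a per-token penalty of -β·(log π(y_t) - log π_ref(y_t)), with the scalar score added at the final token, which is also why the reward is sparse. The value β = 0.1 is an arbitrary placeholder, and this is an illustration rather than TRL's or OpenAI's exact code.

```python
def kl_shaped_rewards(score: float, logp_policy: list[float], logp_ref: list[float],
                      beta: float = 0.1) -> list[float]:
    """Per-token rewards for one generated sequence.

    score:       scalar from the reward model for the whole response
    logp_policy: log-probabilities of the generated tokens under the policy
    logp_ref:    log-probabilities of the same tokens under the frozen reference model
    """
    if len(logp_policy) != len(logp_ref) or not logp_policy:
        raise ValueError("need matching, non-empty log-probability lists")
    # Sample-based per-token KL estimate, turned into a penalty.
    rewards = [-beta * (lp - lr) for lp, lr in zip(logp_policy, logp_ref)]
    rewards[-1] += score  # the human-preference signal only arrives at the end
    return rewards


print(kl_shaped_rewards(score=1.5, logp_policy=[-0.5, -1.0, -0.2], logp_ref=[-0.7, -1.0, -0.4]))
```

A larger β keeps generations closer to the reference model's distribution at the cost of optimizing the reward model's score less aggressively.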

Slides here. Inspired by the nice talk from @Thom_Wolf earlier this year and updated with some material we are working on right now.

In my opinion, one of the most underappreciated features of the 🤗 Hub is that you can add model performance metrics to the model card simply by adding tags to the model's README, and they even link to @paperswithcode 🤯. Check out an example here.

The wild part is seeing a Google tutorial about a project that you started working on in a public library a few years ago to get into NLP.

Join Googler Wietse Venema for a deep dive into fine-tuning open models with Hugging Face Transformer Reinforcement Learning (TRL) on GKE. TRL is an #OpenSource library developed by @huggingface that provides all the tools you need to train LLMs ↓

Have you ever played Taboo but run out of cards or wanted to create custom cards? Look no further! I fine-tuned @OpenAI's GPT-2 with the great @huggingface library to generate new Taboo cards. A few handpicked examples (none of them are in the training set):

Roughly 10% of BLOOM's training data was code, so it is pretty good at coding 🙂. Since it is multilingual, you can even prompt it in Arabic!

Pretty cool!! A pass@1 of 0.155 is also pretty cool for a model that is not explicitly a code model.
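Pass@1 is the functional-correctness metric commonly reported for code models: the probability that a generated solution passes the problem's unit tests. When n samples are generated per problem and c of them pass, the unbiased pass@k estimator from the Codex paper (Chen et al., 2021) is 1 - C(n-c, k)/C(n, k), averaged over problems. A short Python sketch of that formula; the sample counts in the usage line are made up.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three problems with 20 samples each.
print(mean_pass_at_k([(20, 3), (20, 0), (20, 20)], k=1))
```

For k = 1 the estimator reduces to c / n, the fraction of correct samples; Python's exact integer `comb` avoids the overflow issues that motivated the product form used in the paper.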

It's been a wild year, part 2: just before the start of the year, we released The Stack with @BigCodeProject and started training StarCoder. And this was just the starting point for many community projects that built cool models on top of it! There is more to come very soon! 👀
