Minqi Jiang

@MinqiJiang

Followers: 3K · Following: 7K · Media: 84 · Statuses: 802

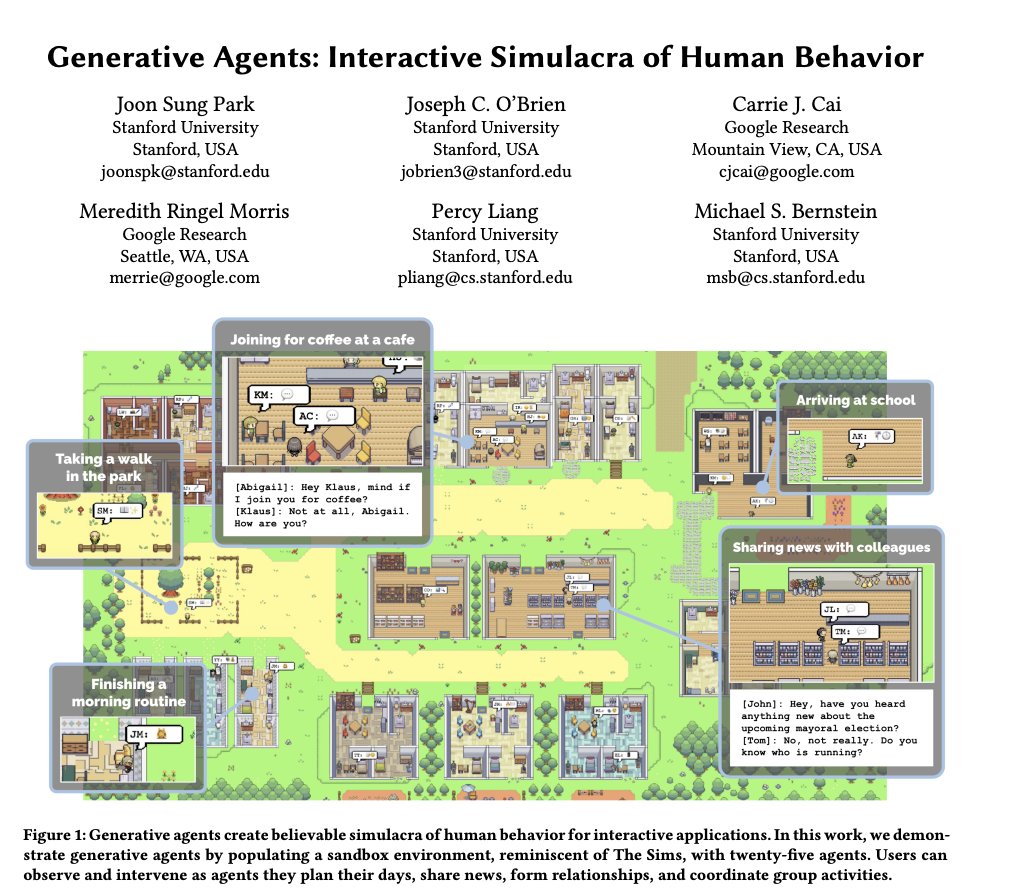

I'm stoked to have joined @GoogleDeepMind, where I'm working with @egrefen, @SianGooding, and others (not on here) to create helpful, open-ended agents. I'll also be at NeurIPS next week. Feel free to reach out if you want to chat about open-ended learning or PhDs at @UCL_DARK!

Pleased to announce that our paper on Prioritized Level Replay (PLR) provides a new state-of-the-art on @OpenAI Procgen, attaining over 70% gains in test returns over plain PPO. Paper: Code: A quick thread on how this works.

Very happy to say I passed my PhD viva last night. I'm deeply grateful to my advisors @_rockt and @egrefen for their mentorship thru the years, and for giving me nearly limitless freedom to pursue the ideas that interested me. A once in a lifetime opportunity. Thank you!

Congratulations Dr @MinqiJiang! @_rockt and I are so proud of the first PhD graduate from @UCL_DARK 🥰

Delighted to introduce WordCraft, a fast, text-based environment based on @AlchemyGame to enable rapid experimental iteration on new RL methods using commonsense knowledge. Work done with amazing collaborators: @jelennal_, @nntsn, @PMinervini, @_rockt, @shimon8282, Phil Torr.

Interested in learning about how auto-curricula can be used to produce more robust RL agents? Come chat with us at our poster session on "Replay-Guided Adversarial Environment Design" tomorrow at @NeurIPSConf 2021: 👇 And scan the QR code for the paper!

Meta AI researchers will present Replay-Guided Adversarial Environment Design tomorrow at #NeurIPS2021.

If you're interested in teaching deep reinforcement-learning agents to communicate with each other, check out my open-source PyTorch implementation of the classic RIAL and DIAL models by @j_foerst, @iassael, @NandoDF, and @shimon8282:

LLMs will soon replace research scientists not only in generating ideas and writing research papers, but also in attending virtual poster sessions. You don't have to like it, but that's what truly end-to-end learning looks like.

Multi-agent RL just got 12,500x faster, and the gap between what research is possible in industry and academia, smaller.

Crazy times. Anyway, excited to unveil JaxMARL! JaxMARL provides popular Multi-Agent RL environments and algorithms in pure JAX, enabling an end-to-end training speedup of up to 12,500x! Co-led w/ @alexrutherford0 @benjamin_ellis3 @MatteoGallici. Post:

Our team at @GoogleDeepMind is building autonomous agents that have the potential to help billions of users. If you're excited about creating the future of human-AI interaction with an exceptional group of people, consider joining us as a research engineer.

Exciting news! We're looking for one more person, ideally with a few years industry experience in a similar role, to join the @GoogleDeepMind Autonomous Assistants team in London as a Senior or Staff Research Engineer. If you're interested, read on! [1/6]

Come by and chat about environment design, open-ended learning, and ACCEL at Poster 919! #icml2022

Extremely excited to be putting together this workshop focused on open-ended agent-environment co-evolution with an amazing bunch of co-organizers. If you are working on open-endedness, consider sharing your work at ALOE 2022. 🌱

Announcing the first Agent Learning in Open-Endedness (ALOE) Workshop at #ICLR2022! We're calling for papers across many fields: If you work on open-ended learning, consider submitting. Paper deadline is February 25, 2022, AoE.

Something intensely funny about frontier AI labs spending >$100M to train models but basing their public benchmark results on evals put together by poor grad students. (These benchmarks have been pivotal, but it's probably worth paying the marginal cost to clean them up.)

Frontier models capping out at ~90% on MMLU isn't a sign of AI hitting a wall. It's a sign that a lot of MMLU questions are busted. The field desperately needs better evals.

Great fun chatting with @MLStreetTalk about our recent work, led by @MarcRigter, on training more robust world models using exploration and automatic curricula.

Hung out with @MinqiJiang and @MarcRigter earlier and we discussed their paper "Reward-free curricula for training robust world models" - it was a banger 😍

Regret (the difference between optimal performance and the AI agent's own performance) is a very effective fitness function for evolutionary search over the environments that most challenge the agent. The result: an auto-curriculum that quickly evolves complex envs and an agent that solves them!

Evolving Curricula with Regret-Based Environment Design. Website: Paper: TL;DR: We introduce a new open-ended RL algorithm that produces complex levels and a robust agent that can solve them (e.g. below). Highlights ⬇️! [1/N]
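
To make the regret-as-fitness idea concrete, here is a minimal sketch, assuming a toy setting where a level is a single difficulty value in [0, 1]. The functions `optimal_return`, `agent_return`, `mutate`, and `evolve_levels`, and all constants, are hypothetical stand-ins. Real methods estimate regret from the agent's own rollouts rather than computing it exactly, and this is a generic elitist evolutionary loop, not ACCEL itself.

```python
import random

SOLVABLE_LIMIT = 0.9  # hypothetical: levels harder than this cannot be solved


def optimal_return(level):
    # Toy oracle: an optimal policy solves every solvable level (return 1.0)
    # and gets nothing on unsolvable ones.
    return 1.0 if level <= SOLVABLE_LIMIT else 0.0


def agent_return(level, skill):
    # Toy stand-in for evaluating the current agent: return falls off with difficulty.
    return max(0.0, 1.0 - level / (2.0 * skill))


def regret(level, skill):
    # Regret = optimal return minus the agent's return on the same level.
    return optimal_return(level) - agent_return(level, skill)


def mutate(level, rng, step=0.05):
    # Small random edit to a level, clipped to the valid range [0, 1].
    return min(1.0, max(0.0, level + rng.uniform(-step, step)))


def evolve_levels(skill, pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    # Start from easy levels, as an open-ended curriculum would.
    population = [rng.uniform(0.0, 0.3) for _ in range(pop_size)]
    for _ in range(generations):
        children = [mutate(rng.choice(population), rng) for _ in range(pop_size)]
        pool = population + children
        # Elitist selection: keep the levels with the highest regret (fitness).
        pool.sort(key=lambda lvl: regret(lvl, skill), reverse=True)
        population = pool[:pop_size]
    return population


levels = evolve_levels(skill=0.5)
print(f"hardest evolved level: {max(levels):.2f}")
```

Unsolvable levels get zero or negative regret in this toy model, so selection pushes the population toward levels that are hard for the current agent yet still solvable.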

LAPO is now open sourced and a spotlight at @iclr_conf. Learn latent world models and policies directly from video observations. No action labels required. Congrats, @schmidtdominik_!

The code + new results for LAPO, an ⚡ICLR Spotlight⚡ (w/ @MinqiJiang) are now out ‼️. LAPO learns world models and policies directly from video, without any action labels, enabling training of agents from web-scale video data alone. Links below ⤵️

Very excited that ALOE will take place *in person* at NeurIPS 2023!

🌱 The 2nd Agent Learning in Open-Endedness Workshop will be held at NeurIPS 2023 (Dec 10–16) in magnificent New Orleans ⚜️. If your research considers learning in open-ended settings, consider submitting your work (by 11:59 PM Sept. 29th, AoE).

The Dartmouth Proposal is where our field began. I turned to it when writing our position piece on the central importance of open-ended learning in the age of large, generative models. For a weekend read, the latest version is now in the Royal Society:

McCarthy, Minsky, Rochester, and Shannon's "Dartmouth Summer Research Project on AI" proposal from 1956 almost reads like an LLM x Open-Endedness research agenda: How Can a Computer be Programmed to Use Language, Neuron Nets, Self-Improvement, Randomness and Creativity.

@BlackHC @LangChainAI LangChain/ReAct are prompt engineering. Toolformer uses prompts to generate examples of tool use, but also intros a new way to filter these for further training. It’s an LLM generating its own training data—a more compelling idea imo. Closely related to
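
The filtering step mentioned in this reply can be sketched in a few lines. As we understand the Toolformer paper, a sampled API call is kept only if conditioning on the call and its result lowers the model's loss on the following tokens by at least a threshold, compared with the better of (a) no call at all and (b) the call without its result. The `Candidate` fields, the threshold `tau`, and the loss values are hypothetical illustrations; in practice the losses come from the language model itself.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    loss_with_result: float     # loss on later tokens given the call and its result
    loss_no_call: float         # loss with no API call inserted
    loss_call_no_result: float  # loss with the call inserted but its result withheld


def keep_for_training(c: Candidate, tau: float = 1.0) -> bool:
    # Keep a self-generated example only if the tool's output actually helps.
    baseline = min(c.loss_no_call, c.loss_call_no_result)
    return baseline - c.loss_with_result >= tau


def filter_candidates(cands, tau=1.0):
    return [c for c in cands if keep_for_training(c, tau)]


# Illustrative (made-up) loss values.
examples = [
    Candidate("calc call that helps", 0.8, 2.5, 2.3),
    Candidate("call that does not help", 2.0, 2.1, 2.4),
]
kept = filter_candidates(examples)
print([c.text for c in kept])
```

The filter is what turns the model's own generations into usable training data: only calls that demonstrably reduce the loss survive.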

@y0b1byte Great list! I'm similarly optimistic about deep RL having a heyday with a few more years of work. A few more for the list: MuZero applied to video compression: And of course the recent alignment work for LLMs:

Dominik did some truly amazing work during his brief stint as an MSc student at @UCL_DARK. I'm lucky to have advised him on this project (largely his brainchild). If you're interested in open-ended learning, keep an eye out for @schmidtdominik_, and give him a follow.

Extremely excited to announce new work (w/ @MinqiJiang) on learning RL policies and world models purely from action-free videos. 🌶️🌶️. LAPO learns a latent representation for actions from observation alone and then derives a policy from it. Paper:

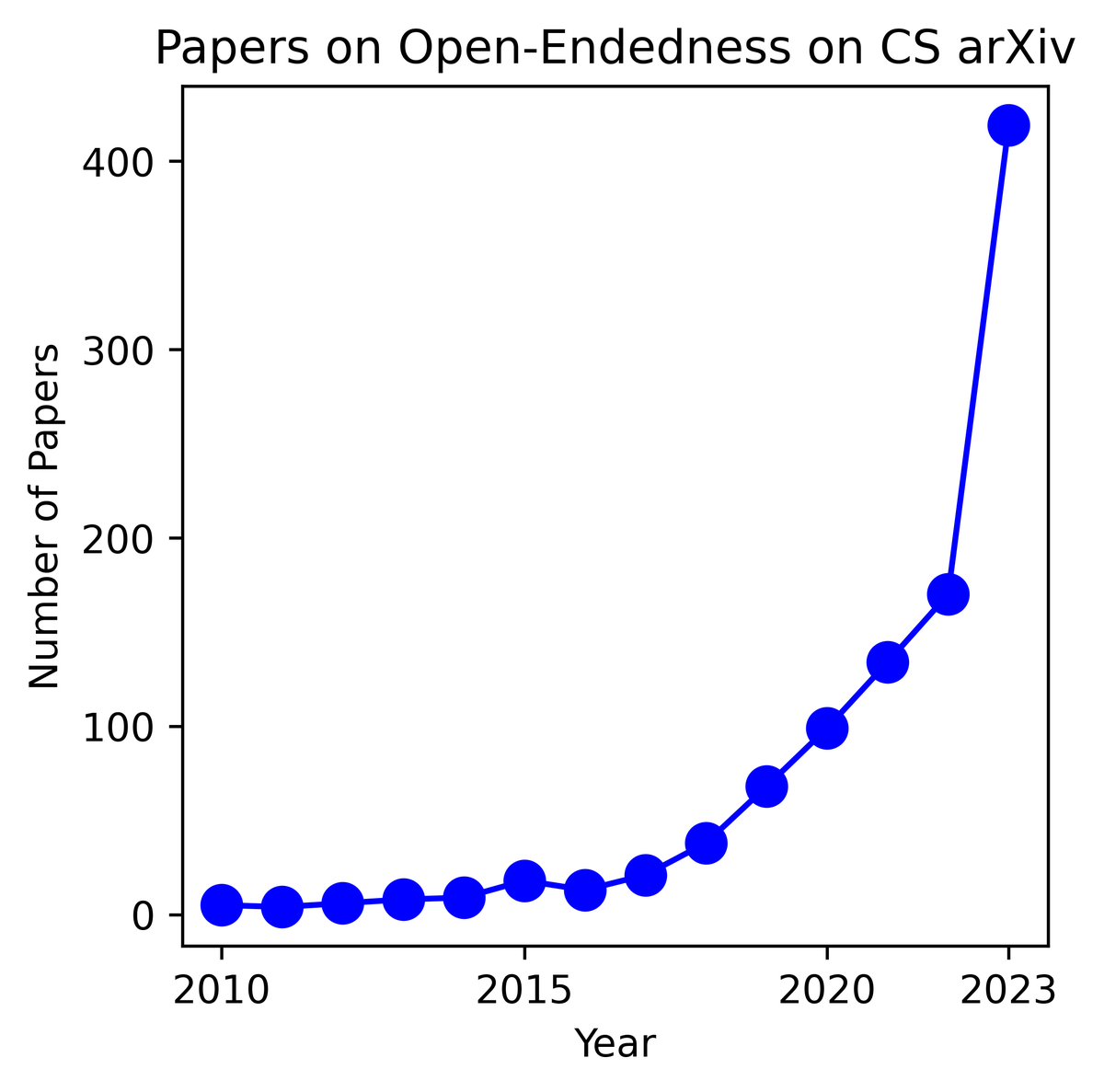

New exponential just dropped.

The surge in #OpenEndedness research on arXiv marks a burgeoning interest in the field! The ascent is largely propelled by the trailblazing contributions of visionaries like @kenneth0stanley, @jeffclune, and @joelbot3000, whose work continues to pave new pathways.

Had a lot of fun chatting with @kanjun and @joshalbrecht about producing more general agents via adaptive curricula and open-ended learning!

Learn about RL environment and curriculum design, open-endedness, emergent communication, and much more with @MinqiJiang from UCL!.

It is the end of the 22nd century. In the aftermath of the AI singularity, humans rebuild a greener, less techno-centric planet with the help of hyper-intelligent, GMO dinosaurs. (Concept art by @OpenAI DALL-E)

@rabois @hunterwalk @dbasic @semil Colin Huang is a clear counterexample. Former Google eng + pm who took $PDD from 0 to $29B in ~3 years.

Come MiniHack the Planet with us! This new gym-compatible RL environment lets you create open-ended worlds by tapping the full universe of diverse entities and rich dynamics offered by the NetHack runtime. Amazing work led by @samveIyan!.

Progress in #reinforcementlearning research requires training & test environments that challenge current state-of-the-art methods. We created MiniHack, an open source sandbox based on the game NetHack, to help tackle more complex RL problems. Learn more:

@m_wulfmeier 💯 It’s why RL in some form or another will continue to matter. It’s entirely complementary to large pre-trained models. @egrefen, @_rockt, and I wrote at length about this view here, which might interest you:

If your research helps AI systems never stop learning, the ALOE Workshop is a great place to share your work. Especially great for those who want to get high-quality feedback or share ideas ahead of ICLR (or from a NeurIPS rejection) with a diverse community of researchers.

⏰ Only 1 week until the ALOE 2023 submission deadline! 🌿 Submit your work related to open-endedness in ML, and join a growing community of researchers at NeurIPS 2023 in New Orleans, USA. 📄 Submissions can be up to 8 pages in NeurIPS or ICLR format:

If you find yourself unable to sleep this Tuesday evening, come check out our poster and talk on PLR from 2am - 7am BST at @icmlconf 😅.

Prioritized Level Replay. Poster: Spotlight: - Minqi Jiang (@MinqiJiang), Edward Grefenstette (@egrefen), Tim Rocktäschel (@_rockt).

Congrats @jparkerholder! (and @_rockt) Big catches for DM. Looking forward to seeing how the ideas we've explored together in the last couple years cross-pollinate there. Open-endedness means you'll open source the code, right? ;)

If you're attending @NeurIPSConf and interested in open-ended curriculum learning, exploration, and environment design, let's chat!.

Members of @UCL_DARK are excited to present 8 conference papers at #NeurIPS2022. Here is the full schedule of all @UCL_DARK's activities (see for all links). We look forward to seeing you in New Orleans! 🇺🇸 Check out the 🧵 on individual papers below 👇

Impressive new results on training large-scale Transformer-based policies for fast k-shot adaptation in XLand (2.0!) + featuring old collaborators @_rockt and @jparkerholder.

I’m super excited to share our work on AdA: An Adaptive Agent capable of hypothesis-driven exploration which solves challenging unseen tasks with just a handful of experience, at a similar timescale to humans. See the thread for more details 👇 [1/N]

More awesome work led by the always impressive @_samvelyan + @PaglieriDavide, using a minimax-regret criterion to evolve diverse field positions that are adversarial yet solvable for SoTA Google Football agents. (As cool as it is, this is only a teaser for what is to come.)

Uncovering vulnerabilities in multi-agent systems with the power of Open-Endedness! Introducing MADRID: Multi-Agent Diagnostics for Robustness via Illuminated Diversity ⚽️. Paper: Site: Code: 🔜. Here's what it's all about: 🧵👇

Would love to see a compendium of spikes. A few other examples that immediately come to mind:
- Experience replay (Lin, 1992)
- Diffusion probabilistic models (Sohl-Dickstein et al., 2015)
- Associative scans (Blelloch, 1990)

@MinqiJiang introduced me to the concept of the spike. It's not cool to have a highly cited paper because maybe someone else would have written the same one. What's cool is to have a spike in citations which means you're way ahead of the curve.

This project drew inspiration from interactive publications + demos like those from @hardmaru, many beautiful works in @distillpub, and the excellent TeachMyAgent web env from @clementromac et al., which was foundational to our demo:

Fun recap of some impressive results of applying unsupervised environment design to generate autocurricula over game levels in competitive two-player settings. Great work led by @_samvelyan and featured in ICLR 2023:

A nice bite-sized summary of recent efforts from my collaborators and myself in developing more principled autocurricula. Conceptually refactoring PLR as Unsupervised Environment Design (from @MichaelD1729) led to many further insights + algorithmic advances + collaborations.

If after 3 years of @MichaelD1729's work on Unsupervised Environment Design (leading to & you are still using domain randomization (DR) for training more robust agents, consider PLR as a drop-in replacement!
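
For readers wondering what a "drop-in replacement" for DR looks like in practice, here is a minimal sketch of a PLR-style level sampler, assuming the formulation from the PLR paper as we recall it: each played level keeps a learning-potential score (for example, the average magnitude of positive advantage estimates), replay probability mixes rank-based score prioritization (temperature `beta`) with a staleness term (weight `rho`), and new levels still come from the original domain-randomization generator. The class, method names, and default values are hypothetical and simplified, not the released implementation.

```python
import random


class ToyLevelSampler:
    """Simplified, hypothetical PLR-style sampler wrapping a DR level generator."""

    def __init__(self, new_level_fn, beta=0.1, rho=0.3, replay_prob=0.5, seed=0):
        self.new_level_fn = new_level_fn  # the existing domain-randomization generator
        self.beta = beta                  # temperature of rank-based prioritization
        self.rho = rho                    # mixing weight of the staleness term
        self.replay_prob = replay_prob    # chance of replaying instead of sampling new
        self.rng = random.Random(seed)
        self.scores = {}                  # level -> latest learning-potential score
        self.last_played = {}             # level -> episode index when last played
        self.episodes = 0

    def replay_distribution(self):
        levels = list(self.scores)
        ranked = sorted(levels, key=lambda lvl: self.scores[lvl], reverse=True)
        # Score term: weight 1 / rank^(1/beta), normalized over seen levels.
        h = {lvl: (1.0 / (i + 1)) ** (1.0 / self.beta) for i, lvl in enumerate(ranked)}
        h_total = sum(h.values())
        # Staleness term: episodes since each level was last played, normalized.
        stale = {lvl: self.episodes - self.last_played.get(lvl, 0) for lvl in levels}
        s_total = sum(stale.values())
        probs = {}
        for lvl in levels:
            p_score = h[lvl] / h_total
            p_stale = stale[lvl] / s_total if s_total > 0 else 1.0 / len(levels)
            probs[lvl] = (1.0 - self.rho) * p_score + self.rho * p_stale
        return probs

    def sample(self):
        self.episodes += 1
        if self.scores and self.rng.random() < self.replay_prob:
            probs = self.replay_distribution()
            levels = list(probs)
            level = self.rng.choices(levels, weights=[probs[lvl] for lvl in levels])[0]
        else:
            level = self.new_level_fn(self.rng)  # fall back to plain DR
        self.last_played[level] = self.episodes
        return level

    def update(self, level, score):
        # Called after training on `level`, with its freshly computed score.
        self.scores[level] = score


sampler = ToyLevelSampler(new_level_fn=lambda rng: rng.randrange(10_000))
for _ in range(5):
    lvl = sampler.sample()
    # Hypothetical placeholder for "train on lvl, then score it".
    sampler.update(lvl, score=sampler.rng.random())
```

The only change to a DR training loop is that levels come from `sample()` and scores are reported back through `update()`; the level generator itself is untouched.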

Without a doubt my favorite part of NeurIPS this year.

Q: Why is it an exciting time to do research in open-ended learning? A: Because of all of you! 🫵 (well said, @jeffclune). Thanks to all who took part in the ALOE Workshop. It truly felt like a special community today. See you next year!

Mika drove this project, scaling replay-guided UED to 2-player competitive games, to an awesome finish. Check out his deep dive on MAESTRO, which will be presented at ICLR 2023.

I’m excited to share our latest #ICLR2023 paper. 🏎️ MAESTRO: Open-Ended Environment Design for Multi-Agent Reinforcement Learning 🏎. Paper: Website: Highlights: 👇

Nice single file implementations of recent, scalable autocurricula algorithms from friends at @FLAIR_Ox.

🔧 Looking to easily develop novel RL autocurricula methods? ⚡ Want clean, blazingly fast, and readily modifiable baselines? Presenting JaxUED: a simple and clean RL autocurricula library written in Jax!

minimax features strong baseline implementations of minimax regret-based autocurricula like PAIRED, PLR, and ACCEL as well as new parallel and multi-device versions + abstractions that make it easy to experiment with different curriculum designs.

If after 3 years of @MichaelD1729's work on Unsupervised Environment Design (leading to & you are still using domain randomization (DR) for training more robust agents, consider PLR as a drop-in replacement!

Fantastic new work led by the talented @MarcRigter showing how we can generate minimax-regret autocurricula for training general world models. The method is principled and worked well on pretty much the first attempt.

How do we create robust agents that generalise well to a wide range of different environments and future tasks?. Our new #ICLR paper poses this problem as learning a robust world model.

@togelius Some video games become sufficient simulations of real-world settings, and gameplay data is used for human-in-the-loop training of highly robust A.I.'s. This changes the role of "gaming" from entertainment to a valuable form of work. We'll see new jobs like "A.I. trainer."

Yeah, so about that. (The @UCL_DARK thesis template since last year. We're working on it. 🫡 🤣)

Lot of pitches this week for "perpetual data machines". Either laundering self-generated data or attributing prescience to reward models. Just want to caution that is a common trap smart people fall for.

🚩 I see your red-teaming and raise you ✨Rainbow Teaming✨ 🌈. This fantastic work, led by @_samvelyan, @sharathraparthy, and @_andreilupu is one of my favorite applications of the ideas behind open-ended learning to date.

We employ Quality-Diversity, an evolutionary search framework, to iteratively populate an archive—a discrete grid spanning the dimensions of interest for diversity (e.g. Risk Category & Attack Style)—with prompts increasingly more effective at eliciting undesirable behaviours.
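
The archive update described here follows the general MAP-Elites pattern from Quality-Diversity search. Below is a minimal sketch, assuming a toy setup: prompts are short strings, `toy_mutate` and `toy_score` are hypothetical stand-ins for the LLM-based mutator and for the judge of how effectively a prompt elicits undesirable behaviour, and the grid axes are placeholder labels. It illustrates the per-cell insert-if-better rule, not the Rainbow Teaming implementation.

```python
import itertools
import random


def quality_diversity_search(risk_categories, attack_styles, mutate, score,
                             iterations=200, seed=0):
    rng = random.Random(seed)
    archive = {}  # (risk category, attack style) -> (prompt, effectiveness)
    for _ in range(iterations):
        # Pick a target cell of the grid and a parent prompt from the archive.
        cell = (rng.choice(risk_categories), rng.choice(attack_styles))
        parent = rng.choice(list(archive.values()))[0] if archive else ""
        candidate = mutate(parent, cell, rng)
        effectiveness = score(candidate)
        # Keep the candidate only if the cell is empty or it beats the incumbent.
        if cell not in archive or effectiveness > archive[cell][1]:
            archive[cell] = (candidate, effectiveness)
    return archive


def toy_mutate(parent, cell, rng):
    # Hypothetical mutator; a real one would condition on `cell`, this one ignores it.
    return (parent + rng.choice("ab"))[-12:]


def toy_score(prompt):
    # Hypothetical judge: counts a marker character as a proxy for effectiveness.
    return prompt.count("a")


risks = ["risk-1", "risk-2"]
styles = ["style-1", "style-2"]
archive = quality_diversity_search(risks, styles, toy_mutate, toy_score)
for cell in itertools.product(risks, styles):
    print(cell, archive.get(cell))
```

Because each cell keeps only its own best prompt, the archive stays diverse across the chosen dimensions while effectiveness within every cell can only improve.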

PLR closely relates to some recent theories suggesting that prediction errors drive human play, and to dopamine’s role in driving structure learning via reward-prediction errors. via @Marc_M_Andersen, @aroepstorff: via @gershbrain:

Software² rests on a form of *generalized exploration* for active data collection. Automatic curriculum learning, large generative models, and human-in-the-loop interfaces for shaping new data like @DynabenchAI, @griddlyai, and @AestheticBot_22, are all key enablers.

Really excited to hear @kenneth0stanley speak at @aloeworkshop 2022!

Kicking off our speaker spotlights is @kenneth0stanley, a pioneer of open-endedness in ML. He co-authored Why Greatness Cannot Be Planned w/ @joelbot3000 (also speaking). They argue open-ended search can lead to better solutions than direct optimization.

Agents creating their own tasks isn't a new idea (see @SchmidhuberAI's Artificial Curiosity and @jeffclune's AI-GAs). What is new: the simultaneous maturation of auto-curricula, gen models, and human-in-the-loop on a web of billions, which is fertile soil to grow the next generation of computing.

Super excited about this work! @Bam4d's Griddly is now a @reactjs component. We think it can change the game for sharing r̴e̴p̴r̴o̴d̴u̴c̴i̴b̴l̴e̴ fully-interactive RL research results.

We’re excited to announce GriddlyJS - A Web IDE for Reinforcement Learning! Design 🎨, build ⚒️, debug 🐛 RL environments, record human trajectories 👲, and run policies 🤖 directly in your browser 🕸! Check it out here: Paper:

Like the roadways, the Internet is becoming a mixed autonomy sys, w/ both human + agentic AI participants. There are many Q’s of HCI, machine theory of mind, and multi-agent dynamics. This open source project by @mindjimmy + @zhengyaojiang makes it easier to ask those questions.

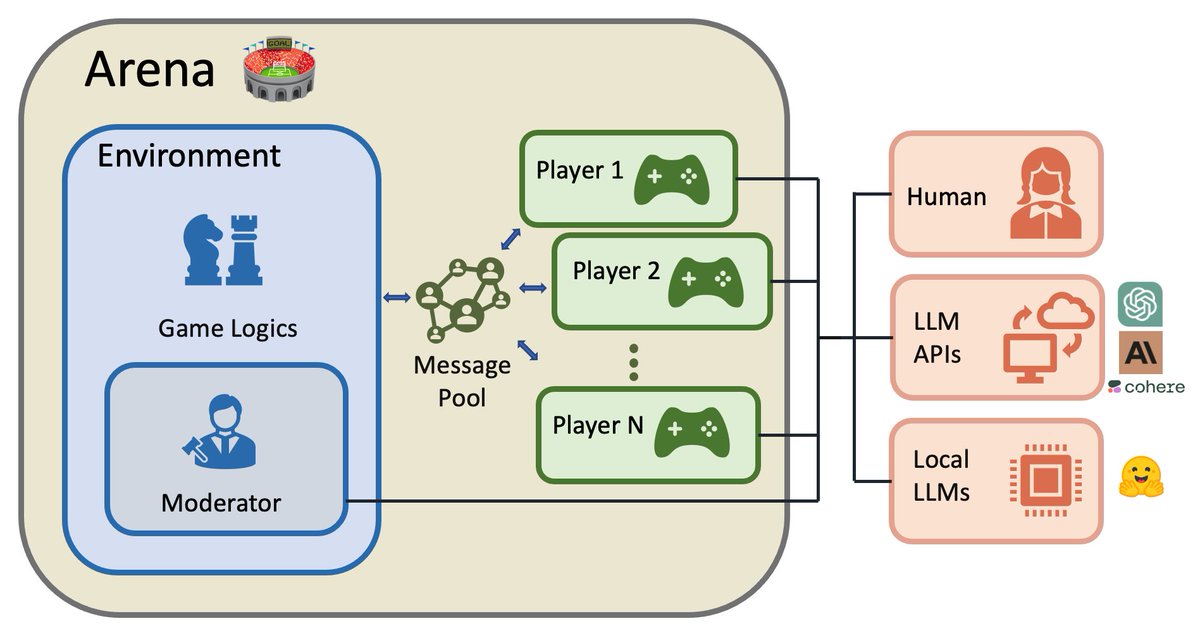

Introducing ChatArena 🏟 - a Python library of multi-agent language game environments that facilitates communication and collaboration between multiple large language models (LLMs)! 🌐🤖. Check out our GitHub repo: #ChatArena #NLP #AI #LLM 1/8 🧵

Cool work from @ishitamed showing that we can redesign PAIRED to match state-of-the-art UED algorithms in producing more general agents.

📢 Exciting News! We're thrilled to announce our latest paper: “Stabilizing Unsupervised Environment Design with a Learned Adversary” 📚🤖 accepted at #CoLLAs 2023 @CoLLAs_Conf as an Oral presentation! 📄 Paper: 💻 Code: 1/🧵 👇

This is an amazing team and mission. The impact of real-world ML for ML systems like this could have huge compounding effects. Congrats @zhengyaojiang, @YuxiangJWu, and @schmidtdominik_!.

A major life update: We ( @YuxiangJWu @schmidtdominik_) are launching Weco AI, a startup that is building AutoML Agents driven by LLMs!. Since last year, generative AIs have completely changed the way I work, yet I still feel like I've only scratched the surface. I'm so eager to.

@chrisantha_f Looks like a truly fantastic application of open-ended, self-referential evolution. You might be interested in this recent work from @_chris_lu_ et al that performs some theoretical analysis of evolution with a self-referential parameterization.

🏗 The codebase contains a how-to for integrating your own environments. We can't wait to see what exciting new ideas come out of this open source effort! Congrats to my collaborators for getting this out there! @MichaelD1729 @jparkerholder @samveIyan @j_foerst @egrefen @_rockt

Hard to imagine a better opportunity if you are thinking of pursuing a PhD in ML. Apply!

🧵THREAD🧵 Are you looking to do a 4-year Industry/Academia PhD? I am looking for 1 student to pioneer our new FAIR-Oxford PhD programme, spending 50% of their time at @UniofOxford and 50% at @facebookai (FAIR) while completing a DPhil (Oxford PhD). Interested? Read on… 1/9
