Anka Reuel

@AnkaReuel

Followers

2,147

Following

1,186

Media

14

Statuses

707

Computer Science PhD Student @ Stanford | Geopolitics & Technology Fellow @ Harvard Kennedy School/Belfer | Vice Chair EU AI Code of Practice | Views are my own

Stanford, CA

Joined March 2020

Pinned Tweet

Technical AI Governance research is moving quickly (yay!), so

@ben_s_bucknall

and I are excited to launch a living repository of open problems and resources in the field, based on our recent paper where we identified 100+ research questions in TAIG:

🧵

5

52

210

Our new paper "Open Problems in Technical AI Governance" led by

@ben_s_bucknall

& me is out! We outline 89 open technical issues in AI governance, plus resources and 100+ research questions that technical experts can tackle to help AI governance efforts🧵

11

51

187

We need an International Agency for Artificial Intelligence with support from governments, big tech companies, and society at large NOW.

#AIGovernance

13

39

134

Dear

@LinkedIn

, may I remind you of your own Responsible AI Principles? Remember, the ones about Trust, Privacy, Transparency, Accountability? Asking for consent is literally Responsible AI 101. Seems like these principles are – as for so many companies – just marketing BS.

5

43

129

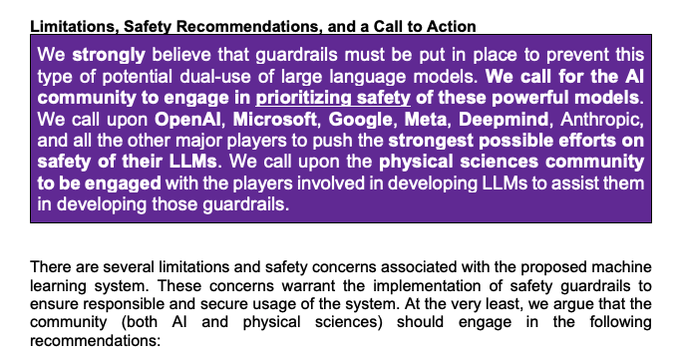

Our paper explores the risks of using LLMs for strategic military decision-making. With OpenAI quietly updating their ToS to no longer prohibit military and warfare use cases, this research is more crucial than ever. These models aren’t safe to use in high-stakes situations yet.

3

38

101

@PhDVoice

@PostdocVoice

Green flag: former PhD students regularly come back to visit the lab or join lab events.

Huge green flag: his name is

@aiprof_mykel

😁

1

1

99

🔍 Excited to share: I'm the lead researcher for the Technical AI Ethics chapter for

@Stanford

's 2024 AI Index, curated by

@StanfordHAI

. We're broadening our scope this year and your input on what research should be included is vital! 🧵 1/

4

12

92

It’s here!

@StanfordHAI

’s 2024 AI Index. 502 pages on everything that’s been happening in AI, backed by data and research. Extra proud of the Responsible AI chapter for which I served as Research Lead this year – give it a read and let me know what your highlight was!

📢 The

#AIIndex2024

is now live! This year’s report presents new estimates on AI training costs, a thorough analysis of the responsible AI landscape, and a new chapter about AI's impact on medicine and scientific discovery. Read the full report here:

15

370

722

6

11

73

My joint op-ed with

@GaryMarcus

on why we need an International Agency for AI. There really is not a lot of time to waste👇

“There is not a lot of time to waste,” write

@GaryMarcus

and

@AnkaReuel

. “A global, neutral non-profit with support from governments, big business and society is an important start”

5

13

63

4

6

73

@timgill924

While vertical networking (w/ people w/ a higher "rank") can help in the short term, horizontal networking (building relationships w/ peers of the same "rank") is what will lead to long-term growth and impact. These are the people you’ll spend decades with in your field.

1

1

73

This year at

@NeurIPSConf

was by far my worst experience with the reviewing process to date.

- 1 reviewer suggesting not to follow NeurIPS citation guidelines & many other unconstructive comments

- Reached out to AC to mediate & wrote detailed rebuttals to all reviewers

- We're

11

5

69

Just in! Our newest paper on designing AI ethics boards to reduce risks from AI!

How do you design an AI ethics board (that actually works)?

In our new paper, we list key design choices and discuss how they would affect the board’s ability to reduce risks from AI.

Paper: (with

@AnkaReuel

and Alexis Carlier)

7

36

167

4

14

66

I consider myself someone working on topics relevant to technical AI governance. I do not agree with this statement, and I’m sad that such accusations are being made without providing evidence or that they are made at all. 🧵

4

4

58

NeurIPS 2023 best paper awards announced!

1) Are Emergent Capabilities of LLMs a Mirage? by

@RylanSchaeffer

@BrandoHablando

and Sanmi Koyejo

2) Privacy Auditing with 1 Training Run by Thomas Steinke, Milad Nasr and Matthew Jagielski

Huge congrats!!!🎉

2

3

47

I’m co-leading a project that studies how AI benchmarks are being used by stakeholders. If you’ve ever used a benchmark (e.g. results from a benchmark to make a decision or ran a benchmark on a model to understand its performance), or decided not to, we’d love to talk to you!

3

13

46

Takeaway: When firms allow for “pre-deployment access for testing by independent third parties” the fine print matters. A week to comprehensively test a model is not enough (let alone the fact that pre-deployment testing is insufficient and limited in the risks it can capture).

0

2

44

In an article published today,

@TIME

discusses the UN’s plans to shape the future of AI and approaches to international AI governance, featuring our work on an ICAO-inspired jurisdictional certification approach among others. 1/x

2

15

44

Thrilled to have been invited to speak at the UN’s

#AIforGood

Summit in Geneva this week, organized by

@ITU

. The buzz of profound discussions on international

#AIgovernance

was truly inspiring. Encouraging signs of widespread support for a robust international framework.

0

1

38

GPTBot by

@OpenAI

crawls the web for new data to train AI models. To opt out, website owners need to modify the robots.txt file. If you don’t know about GPTBot your data will be taken without your consent. Why does it have to be OPT OUT instead of OPT IN?

2

8

31

What worries me the most: All these big companies putting more and more resources into developing more advanced tech while laying off their responsible AI teams

@Twitch

@Twitter

@Microsoft

5

0

27

📢 We're seeking impactful AI ethics/safety research from late 2022 to 2023 for inclusion in Stanford's 2024

#AIIndex

. Submit your papers or nominate others’ work through our Google Form. Let's shape this chapter together!👇

2/

2

14

26

Is this a voluntary or a binding agreement

@sama

@OpenAI

? Will any of the details of the agreement be publicly available?

0

2

26

How to become a great

#NeurIPS

reviewer ⭐️

1. Read the paper

2. Don’t let ChatGPT write a review for you

3. Don’t just try to bash your colleagues. Make it your mission to actually help them improve with _constructive_ criticism

4. State that you’re willing to raise your score if

How to become a toxic Reviewer💀 for

#NeurIPS

? 🤔

1. List "Lack of technical novelty" as the only weakness.

2. Give the paper a rating 4 and confidence 4.

3. During rebuttal, acknowledge the authors' response but never take them into account. Tell the author that after

20

20

344

4

0

26

There must be a better way to address some of the most important questions of our time than through 15-hour+ night shifts, expecting to solve 10+ complex, highly debated issues in 1 negotiation. At least I know how well I resolve conflicts when sleep-deprived and under pressure.

#AI

Act trilogue: after more than 15 hours since the beginning of the meeting, EU policymakers are still discussing bans with no light at the end of the tunnel yet. Looks like the timing of the Council's, and maybe even Parliament's, press conferences was over-optimistic.

2

44

89

2

2

25

Research is about gaining knowledge & applying it to drive positive change. In AI, this can feel like an uphill battle as a woman. But

@mmitchell_ai

&

@timnitGebru

show it's possible. Thank you for your perseverance & paving the way for others!

The final version of the approved EU AI Act even specifically recognizes model cards and datasheets: the work that my former Google Ethical AI team co-lead

@timnitGebru

and I spearheaded.

6

66

307

1

4

25

First paper of its kind that assembled a team of medical professionals and computer scientists to quantify how common LLMs would respond to mental health emergencies. Results are indeed alarming, and once again showcase that these models aren’t fit for high-stakes decisions yet.

2

7

25

Some people have started to reach out to us with additional resources on open problems that we missed (thanks! 💙) – please keep them coming, we’d be excited to cite them and also add them to our living online directory of open problems in AI governance (more info on that soon!).

@AnkaReuel

@ben_s_bucknall

1/ Verifiable black-box auditing when the platform tries to manipulate the audit

- Can be done efficiently for small models (linear, small trees ...):

- But is provably impossible for larger models (LLM, vision models, ...):

1

0

5

2

5

23

Just released: My interview with

@AlJazeera

alongside

@YolandaLannqist

and

@Jackstilgoe

! We delve into the potential and limitations of generative AI, and its impact on the job market. 🚀

1

3

22

@DavidSKrueger

@newscientist

No matter whether we’re talking about bias, misinformation, or worse,

@GaryMarcus

and I agree that regulation needs to be part of the solution. Would love to hear more about your thoughts on the topic!

3

1

23

I am absolutely delighted to announce that I will be speaking at the

@AIforGood

#ITUaiSummit

taking place on the 6th and 7th of July 2023, organized by the

@ITU

/

@UN

. Hit me up if you're in Geneva between the 4th and 10th of July! :-)

2

4

22

Still unsure about open-sourcing large models; it can help with democratizing access and public scrutiny but at the same time can lead to increased misuse and security risks. What’s everyone’s opinion on that?

15

0

20

🚨 Foundation models need to be regulated in the

#EUAIAct

. Not doing so means missing critical measures unique to FM developers, not tackling risks at their source, and creating burdens downstream. Waiting could cost years in revisiting/agreeing on regulations. Let's

#AIAct

now!

The last round of negotiations of the

#AIAct

are set for tomorrow, and the last bit is always the toughest. Yet I am hopeful a strong result will emerge; that should include regulating foundation models ↘️

7

34

102

0

3

20

With the final

#EUAIAct

trilogue negotiation coming up on Dec 6, this piece in

@TIME

by

@privitera_

and Yoshua Bengio, building on

@collect_intel

’s work, is more timely than ever: We don’t need to decide between safety and innovation. We can have both. Read how 👇

Should we choose AI progress or AI safety? Address present-day impacts of AI or potential future risks?

In our op-ed for

@TIME

, Yoshua Bengio and I argue that these are false dilemmas.

And we propose a “Beneficial AI Roadmap” (BAIR).

1/n

2

26

120

0

6

17

@ThePhDPlace

AI governance, (automated) red teaming, robust evaluations of foundation models, anything responsible AI

1

0

18

@BlancheMinerva

Same issue here, we just got a

@NeurIPSConf

D&B review with a similar vibe. Essentially saying following best practices is too hard for benchmark developers and that our paper is bad because we suggest best practices 😅🤦🏻♀️

0

0

18

Happening in 20min: livestream of the UN Security Council meeting discussing AI risks and opportunities for international peace and security

@un

@sec_council

0

1

18

It can’t be the case that they didn’t find *at least* one qualified woman to join the team. Nobody can tell me that it’s that difficult.

@mmitchell_ai

, thinking about your tweet from yesterday :/

0

5

18

Is this really a surprise to anybody? We can’t rely on industry alone to come up with and implement effective AI regulations.

4

3

16

That’s brilliant

@sarahookr

, congratulations! Can’t wait to read your thesis 🤩

Huge congrats to Dr.

@sarahookr

for defending her PhD! Sword will be in the mail shortly ⚔️

37

8

381

0

0

16

8/ Big thanks to

@StephenLCasper

,

@fiiiiiist

,

@lisa_soder_

, Onni Arne,

@lrhammond

,

@lujainmibrahim

,

@_achan96_

, Peter Wills,

@manderljung

,

@bmgarfinkel

,

@ohlennart

,

@iamtrask

,

@gabemukobi

,

@rylanschaeffer

,

@Mauric_Baker

,

@sarahookr

,

@IreneSolaiman

,

@SashaMTL

,

1

0

16

Technical point: We need at least some level of regulation of foundation models. Some risks cannot be addressed by downstream developers only.

Democratic point: Trying to undermine a consensus that was reached in due process after months of intense deliberations (+ two

1

7

15

A lot of people reached out to ask how good the AI Act is. My honest answer: I don’t know. There are too many details tbd. It’s a first step and I’m glad that esp FM regulations were included. But this is not the end; it’s the beginning of a long road to effective AI governance.

0

1

15

Final AI Act text leaked. 892 pages. I had other plans for this week but well here we are 😅

LEAK: Given the massive public attention to the

#AIAct

, I've taken the rather unprecedented decision to publish the final text. The agreed text is on the right-hand side for those unfamiliar with four-column documents. Enjoy the reading! Some context: 1/6

28

375

742

0

1

15

Okay, love goes out to our

@NeurIPSConf

D&B AC. They addressed all our concerns and saw the issues with our adversarial reviewer. Our paper BetterBench (🧵 will follow shortly) ended up getting accepted as spotlight 🎉 Dear AC, if you read this, thanks a lot, we appreciate you ❤️

0

0

15

We

@kira_zentrum

clarified in a fact sheet where these concerns about extinction from AI come from and what can be done about them. Good news: many of the mitigation approaches for extreme risks are equally important for other AI risks.

@CharlotteSiegm

@privitera_

1

2

14

GPT4o just got announced by

@OpenAI

, where the o stands for ‘omnimodal’. So the big announcement was… a new term for multimodal? 😅

0

1

14

There’s not only one risk but a variety of risks associated with generative AI models that we need to think about and mitigate.

@SabrinaKuespert

and

@pegahbyte

provide a great overview👇

With a comprehensive understanding of the range of risks associated with

#GeneralPurposeAI

models, policymakers can proactively mitigate these hazards -

with

@pegahbyte

we provide a risk map for this!

3 risk categories incl. current examples & scenarios:

3

27

80

0

0

13

In times of deepfakes & AI impersonations, having a leading company replicate someone's voice for their chatbot without consent is reprehensible. Thanks for standing up against it & using your visibility, Scarlett (but also, sorry that you have to in the first place).

0

1

13

@nitarshan

@NicolasMoes

@JeffLadish

@NeelGuha

@JessicaH_Newman

@TobinSouth

@alex_pentland

@sanmikoyejo

@aiprof_mykel

@RobertTrager

And here’s the full paper link: (for some reason, the shortened link in the first post didn’t show up as preview 🥲)

1

3

13

9/

@nitarshan

,

@NicolasMoes

,

@JeffLadish

,

@NeelGuha

,

@JessicaH_Newman

, Yoshua Bengio,

@TobinSouth

,

@alex_pentland

,

@sanmikoyejo

,

@aiprof_mykel

, and

@roberttrager

who all contributed their insights, wisdom, and open problems to the paper. You rock <3

1

0

13

Next stops:

05/28-06/01:

@AIforGood

(Geneva🇨🇭) to moderate part of the AI Governance Day at the conference

06/01-06/10:

@FAccTConference

(Rio 🇧🇷) to present two of my accepted papers.

HMU if you’re around for a coffee at the Jet d’Eau ☕️ or a cocktail at the Copacabana 🏝️

0

0

12

Changing the narrative:

@manuchopra42

and

@karya_inc

show that there’s a different way of getting the data we need to power AI models – an ethical one.

0

1

12

Overview of AI-policy events at

#NeurIPS2023

. Hope to see many of you there!

Thanks

@rajiinio

for putting it together :)

Great to see so many policy-related events

@NeurIPSConf

this year!

First is this tutorial, organized by

@HodaHeidari

, Dan Ho & Emily Black.

The program for this is really well thought out - I'm sure it'll be an educational moment for many (+ excited to be on a panel for this)!

3

18

120

0

0

12

Excited for this year’s AI Index release on April 15 and especially the responsible AI chapter I worked on with a fantastic team over the last few months (esp.

@nmaslej

Loredana Fattorini

@amelia_f_hardy

and

@StanfordHAI

). Mark your calendars 🗓️

#AIIndex2024

What’s new and trending across the AI landscape? Find out on April 15 when

@StanfordHAI

publishes the

#AIIndex2024

Report. Sign up for our newsletter to receive a copy:

0

16

46

0

2

12

@willie_agnew

I see technical AI governance as a bridge to join forces, rather than siloing knowledge and pretending other things aren’t important. Happy to chat about how we can do that better!

0

1

11

7/ I've led the project together with

@ben_s_bucknall

and am incredibly grateful for the opportunity to work with him and 29 brilliant contributors from academia, industry, and civil society. Their diverse expertise has made this paper a truly comprehensive resource.

1

0

11

Comprehensive set of questions by the

@FTC

to OpenAI, investigating input data, personal data policies, risks from

@OpenAI

’s LLMs, incl. defamation of people and prompt injection attacks, and the safety and mitigation measures taken, incl. pre-deployment safety checks.

0

2

11

Love to see your efforts,

@UNESCO

, esp. in the Global South, to advance AI governance strategies. This should indeed be a global endeavor; it’s not only the G7 that needs to work out how to handle AI on a national level.

1

1

11

Seems like

@OpenAI

’s ChatGPT and

@AnthropicAI

’s Claude are down (and have been for ~20min). Anyone else experiencing this? What’s going on?

12

0

11

To everyone I still owe a message to, that’s the reason. I’m sorry and I’m working on replying imperfectly and promptly rather than perfectly and never ❤️

0

0

10

@mmitchell_ai

One would think this is a relatively easy fix yet it’s been years and years of advocating for more representation in dev teams and we’re making marginal improvements at best. Even involving the people who’ll be impacted by the tech during testing would already be helpful.

0

3

9

We hear interdisciplinary calls for more safety regulations of LLMs across all fields. Dear decision makers, please listen to them and the call for effective

#AIgovernance

.

3

1

10

Exploring ChatGPT: what it is and why regulation is crucial. A thread.

#ChatGPT

#ResponsibleAI

#AIGovernance

1

3

8

@typewriters

@CarnegieEndow

[Honest question] What would a bill that actually makes us safer look like to you?

6

0

9

@random_walker

Which highlights another issue: that the big tech companies themselves are in charge of the definition and can adjust it as they see fit. Maybe that’s definitional capture? 😁

0

0

8

Just in: The US’ own version of a voluntary code of conduct for frontier AI model developers, covering red teaming, information sharing, watermarking, cybersecurity measures, third party checking, reporting of societal risks and safety research.

1

1

9

Shout out to the wonderful and talented human beings who worked with me on the chapter and/or provided in-depth feedback -

@nmaslej

Loredana Fattorini,

@amelia_f_hardy

and Andrew Shi,

@jackclarkSF

,

@vanessaparli

, Raymond Perrault, and Katrina Ligett!

0

0

8

5/

@rylanschaeffer

,

@Mauric_Baker

,

@sarahookr

,

@IreneSolaiman

,

@SashaMTL

,

@nitarshan

,

@NicolasMoes

,

@JeffLadish

,

@NeelGuha

,

@JessicaH_Newman

,

@Yoshua_Bengio

,

@TobinSouth

,

@alex_pentland

,

@sanmikoyejo

,

@aiprof_mykel

,

@RobertTrager

1

0

9

I’ll be at

#NeurIPS

from today onwards – hmu if you wanna grab coffee or have a paper to share with me for the Technical AI Ethics chapter of

@StanfordHAI

’s 2024 AI Index!

@indexingai

#NeurIPS2023

#NeurIPS23

0

0

7

Low-key feeling so humbled that our analysis from the Responsible AI chapter that I led in the

@StanfordHAI

2024 AI Index was covered by the amazing

@kevinroose

in the

@nytimes

today 🤩 Must read if you want to know why current evaluations are not ideal!

0

2

8