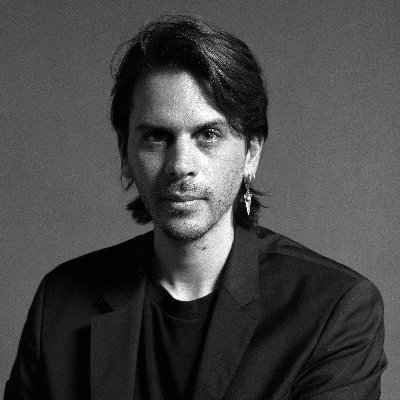

Wei Dai

@weidai11

Followers: 8,271 · Following: 108 · Media: 5 · Statuses: 536

wrote Crypto++, b-money, UDT. thinking about existential safety and metaphilosophy. blogging at

Joined June 2015


Pinned Tweet

Among my first reactions upon hearing "artificial superintelligence" were "I can finally get answers to my favorite philosophical problems" followed by "How do I make sure the ASI actually answers them correctly?"

Anyone else reacted like this?

@janleike

You assume that you don't need to solve hard philosophical problems. But the superhuman researcher model probably will need to, right? Seems like a very difficult instance of weak-to-strong generalization, and I'm not sure how you would know whether you've successfully solved it.

1

0

16

12

2

55

@Arthur_van_Pelt

Haven't looked at all the evidence, court cases closely, but from what I've seen, it seems pretty unlikely that he's Satoshi.

20

16

293

Has Elon Musk not heard of the Median Voter Theorem? It implies that the US is unlikely to become a one-party state this way because the parties will adjust their platforms to evenly divide up the political spectrum. It's why we have low-margin swing states in the first place.

31

8

270

The people try to align the board. The board tries to align the CEO. The CEO tries to align the managers. The managers try to align the employees. The employees try to align the contractors. The contractors sneak the work off to the AI. The AI tries to align the AI.

4

21

225

Don't forget Daniel Kokotajlo @DKokotajlo67142, who started this whole chain of events by being the first OpenAI employee to refuse to sign the non-disparagement agreement, at enormous financial cost.

1

17

195

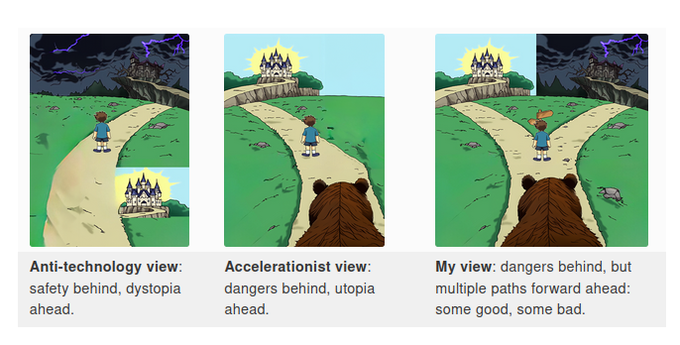

This is speaking my language, but I worry that AI may inherently disfavor defense (in at least one area), decentralization, and democracy, and may differentially accelerate wrong intellectual fields, and humans pushing against that may not be enough. Some explanations below.

2

16

189

Many people seem to care more about social status than either leisure or having descendants. This surprising fact (caring so much about positional/zero-sum goods) may have important implications for the nature of human values (e.g., the debates over egoism vs altruism and moral

@FoundersPodcast

South Koreans have mostly given up on having children. They are losing at the only game that matters.

14

9

991

12

7

187

For about a decade, I was one of maybe 5 people on Earth who had thought most about decentralized cryptocurrency (although the word wasn't invented yet). I don't think any of us were optimistic enough about it, despite selection effects. Really hope AI safety doesn't repeat this.

5

6

177

I'm actually not sure that EA has done more good than harm so far. The costs of its mistakes with FTX and OpenAI are just really high, and not fully accounted for. I would say that its mistakes with @OpenAI were not only in the recent episode, but also earlier, when EA 1/2

12

7

163

I wonder if there's a world in which Eliezer Yudkowsky became convinced earlier in his career that trying to build AGI is a bad idea, and what that world looks like now. (I'm afraid that it wouldn't actually look very different.) I tried to convince him a few times, but failed.

8

7

161

I once checked out an econ textbook from the school library and couldn't stop reading it because the insights gave me such a high. Imagine what our politicians would be like if that was the median voter. (Which is doable with foreseeable tech, e.g., embryo selection!)

Sometimes you just have to ignore the signaling function of prices, supply and demand, trade-offs, comparative advantage, the failures of economic planning, the workings of self-interest, the importance of incentives, etc.

#TheAgeOfEconomicIgnorance

42

76

396

13

11

139

Me: Humanity should intensively study all approaches to the Singularity before pushing forward, to make sure we don't mess up this once in a lightcone opportunity. Ideally we'd spend a significant % of GWP on this.

Others: Even $50 million per year is too much.

4

10

142

I've been wondering why I seem to be the only person arguing that AI x-safety requires solving hard philosophical problems that we're not likely to solve in time. Where are the professional philosophers?! Well I just got some news on this from @simondgoldstein: "Many of the

14

7

119

Re: persistent speculation that I'm Satoshi Nakamoto, this timeline of my publications (thanks to @riceissa for collecting them!) shows how much my interests had shifted away from digital money by the time Bitcoin was released.

12

15

105

I worry about the AI transition going wrong this way more than any other, partly because almost nobody else seems to be working on or thinking about it, even as it's coming true, with current AIs already accelerating math, coding, and biology while having little effect on

13

6

91

I think @HoldenKarnofsky (and EA as a whole to some extent) deserve negative credit for their role in OpenAI, including defending OAI with "I don’t know whether OpenAI uses nondisparagement agreements; I haven’t signed one." in 2022 instead of investigating the allegation.

6

6

86

I tried to DM this person but didn't get a reply. Could someone help me reach out and let him know that I've been thinking about AI x-risk since the 90s, even before crypto, and have been active on LW since its beginning, see if that changes his mind about "p(doom) cult"?

13

2

81

I can only find 2 mentions of the Median Voter Theorem on Twitter outside this thread in the last 24 hours, one also in connection with Musk's tweet, with only 172 views, and the other celebrating Harold Hotelling's birthday! How to explain this, given Musk's 49.5M views?

8

2

70

@jessi_cata

My view is that given the small size of the Agent Foundations team, the amount of time they were given, and my priors for philosophical difficulty, it would be quite surprising if they did succeed. I wrote something along these lines in a 2013 post

1

2

48

A major source of my pessimism is that genuine altruism is rare compared to virtue signaling / status gaming and there's also a lack of alignment between people's status games and long-run outcome for human civilization. I.e. what's best for one's status usually isn't best for

3

2

46

@balajis

I can understand most of these but why "Freedom to compute — AI"? If you're not worried about AI risk, please check out this post of mine. There may be more reasons to be worried than you thought.

1

1

41

We absolutely need whistleblowers, but it has to be combined with other ways of changing AI company culture, or they'll start filtering out safety-conscious potential hires for fear of them becoming whistleblowers. Zach Stein-Perlman’s AI Lab Watch seems a promising start.

4

4

39

@ohabryka

@NeelNanda5

It also seems incomprehensible that OpenPhil (or any of the people involved in making the grant to OpenAI, AFAIK) hasn't made any statement about OpenAI's plans to become fully for-profit.

2

2

31

Surely it's just because they read too much science fiction?

2

0

30

@davidmanheim

@LinchZhang

@rruana

@benlandautaylor

@elonmusk

Nick Bostrom Aug 5, 1998: The big question is: what can be done to maximize the chances that the arrival of superintelligences will benefit humans rather than harm them? The range of expertise needed to address this question extend far beyond that of computer scientists and

3

1

29

@danfaggella

Why is it not part of good governance to view people like Altman as "sociopaths", to morally condemn them, lower their social status, etc? This is perhaps the most basic form of governance that humans have, and I see no reason to abandon it instead of adding to it.

2

0

28

@bensig

@adam3us

Crypto++ had the fastest SHA-256 code at the time. It's documented in one of @hashbreaker djb's papers. I think I found an optimization that shaved off 1 instruction from critical path of the inner loop, or something like that.

4

2

25

Communism, anarcho-capitalism, classical liberalism, evolutionary psychology, analytic philosophy, microeconomics, game theory, transhumanism, LW-style rationality, longtermism, plus a self-censored item.

What's your list?

4

2

23

@theorizur

@simondgoldstein

It depends on the specific alignment approach, but I think metaethics and metaphilosophy are needed for most. Example problems: 1. Can the user be wrong about what they want or value, and if so what to do about that? 2. If the AI thinks of or receives a philosophical argument

2

1

25

@NathanpmYoung

@HoldenKarnofsky

And the failures continue: not reflecting on past mistakes, not writing down "lessons learned" to help others (e.g. other board members of OpenAI and similar orgs), forfeiting the PR battle to SamA who might well have won except for the heroics of Daniel Kokotajlo and @janleike

1

0

24

@NathanpmYoung

@HoldenKarnofsky

I'm thinking of all of the mistakes that led to the present moment, including conflict of interest in the initial grant, DD failure in allowing SamA to become CEO, mishandling the firing and letting Sam further consolidate power, lending EA's credibility without enough oversight.

1

2

23

@ESYudkowsky

At the time I was like "Nobody has talked about the Median Voter Theorem yet. Free alpha for me." Little did I know that 12 hours later I would still be virtually the only person to have mentioned it. Where are the professional political scientists and economists???

4

1

23

Human attempts at altruism often turn out not just badly, but catastrophically. OpenAI seems on track to becoming another example of this. Contrast "Our mission is to ensure that artificial general intelligence benefits all of humanity." with what they do:

3

1

23

@BartenOtto

1. Not wanting to die is one value among many. Some really want to experience a post-Singularity future, for example. How to trade off between them is a philosophical question.

2. Lots of epistemological questions about how to think about x-risks. (Anthropic reasoning, etc.)

3.

2

0

22

Are there *any* Pareto improvements in the real world, after taking reallocation of power, and other forms of status, into account? Every proposal is a bid for power. Every argument or statement of fact is a bid for prestige. Every success makes everyone else less successful by

4

1

21

I'm worried about AI differentially accelerating STEM vs philosophy, but the same risk perhaps exists with human cultures/systems. Where is the corresponding crop of highly capable philosophers being produced in the People's Republic of China, for example?

4

1

20

@stuhlmueller

Why aren't professional economists and philosophers debating these ideas? I used to think that AGI was just too far away, and that these topics would naturally enter the public consciousness as it got closer, but that doesn't seem to be happening nearly fast enough. What gives?

2

2

16

@davidmanheim

@LinchZhang

@rruana

@benlandautaylor

@elonmusk

Note that Eliezer was only 18 at the time, and Nick was doing his 3rd year of PhD in philosophy.

0

0

16

@bensig

@adam3us

@hashbreaker

Note that the OpenSSL acknowledgement is under "Legal". Crypto++'s license did not require acknowledgement for including individual files like the SHA-256 code. (It was only copyrighted as a compilation and individual files were put in the public domain.)

1

1

14

@teortaxesTex

I think a large part of it has to be motivated reasoning, wanting to be the hero that saves the world, just like the founders of OpenAI. "Because we are not a for-profit company, like a Google, we can focus not on trying to enrich our shareholders, but what we believe is the

0

0

16

How many of these do you recognize?

metaphilosophy for AI safety

beyond astronomical waste

UDT

UDASSA

human-AI safety

logical risk

acausal threat/trade

modal immortality

internal moral trade

ontological crisis in human values

AGI merger/cooperation/economy of scale

0-2: 41

3-5: 32

6-8: 22

9-11: 10

2

2

14

Harold Hotelling can't be resting easy in his grave today.

1

1

15

@KelseyTuoc

"as we said"

Unbelievable that he straightforwardly lies like this and gets away with it. It's incredibly frustrating watching humanity take the AI transition so unseriously, e.g. letting obviously untrustworthy people lead the effort.

0

1

15

@MatthewJBar

If an AI commits a crime (say it kills someone), do you also punish its copies/derivatives, if so which ones? What if it trains or programs a new AI then deletes itself?

1

2

13

@gcolbourn

Perhaps worth noting that Dario Amodei and Ilya Sutskever also started for-profit companies after leaving OpenAI, so part of it is just that the non-profit structure was never going to last or be competitive.

2

0

14

@RokoMijic

I think there are many disjunctive ways for the AI transition to go wrong (in a way that destroys most value of the lightcone). Many of these have approximately 0 people trying to prevent them, and some seem too hard for current humans to solve. Related post:

3

1

13

@stuhlmueller

Thanks for the signal boost! It seems wild that with AGI plausibly just years away, there are still important and fairly obvious considerations for the AI transition that are rarely discussed. Another example is the need to solve metaphilosophy or AI philosophical competence.

1

1

13

@sama, do you have a response to this?

0

0

11

@benchanceyy

I'm pretty uncertain about it. One draft of my tweet expressed the possibility that he was just saying it for political effect, but I ended up deleting that part to focus more on the political science as opposed to the politics.

0

0

12

@BogdanIonutCir2

@Gabe_cc

I think there's a high risk that automated research will speed up capabilities more than alignment, because alignment research involves philosophical questions, and may become bottlenecked by lack of AI philosophical competence, which ~nobody is working on. Curious if you've seen

4

0

10

@janleike

@AnthropicAI

Please push them to improve their governance, safety culture, and transparency, to avoid a repeat of OpenAI. I'm seeing some worrying signs, for example.

0

0

11

@ohabryka

@NeelNanda5

I haven't been aware of the Dustin angle. Why would he be hurt if someone speaks out against OpenAI, or why would he not like that?

1

0

10

@RichardMCNgo

There's public choice theory, which I'm a fan of, but the understandings I get from it (e.g., median voter theorem, rational ignorance, capture by special interests) make me feel averse about and want to stay away from politics. Do you have some other kind of thing in mind?

2

0

9

@RokoMijic

How can Americans know the (instrumental) value of free speech, unless some otherwise similar countries occasionally experiment with giving it up? Its value could flip sign due to tech or other change, and unfortunately we don't have the tools to derive it from first principles.

2

0

10

@perrymetzger

I've connected with Bryan. Apparently Twitter hid my DM from him.

I'm more active on Twitter in part to find out why more people aren't concerned. Feel free to point me to anything that explains your thinking.

3

0

10

@RichardMCNgo

@ilex_ulmus

personally don't respond. I'm not doing it myself here, in part because I'm probably less sure than Holly about the object level ethics and I value intellectual discussions with you, so this is more of an intellectual commentary.

1

0

10

@RichardMCNgo

@ilex_ulmus

I think Holly is probably right on the object level (working for OpenAI is unethical) and the game theory (most people respond to social pressure so why believe that you are different). It also makes sense in terms of disincentivizing others from working at OpenAI, even if you

1

0

10

@webmasterdave

@AutismCapital

Quoting myself: A person who consciously or subconsciously cares a lot about social status will not optimize strictly for doing good, but also for appearing to do good. One way these two motivations diverge is in how to manage risks, especially risks of causing highly negative

0

1

9