Teknium (e/λ)

@Teknium1

Followers

31,042

Following

2,905

Media

2,019

Statuses

29,459

Cofounder @NousResearch, prev @StabilityAI Github: HuggingFace: Support me on Github Sponsors

USA

Joined February 2021

Pinned Tweet

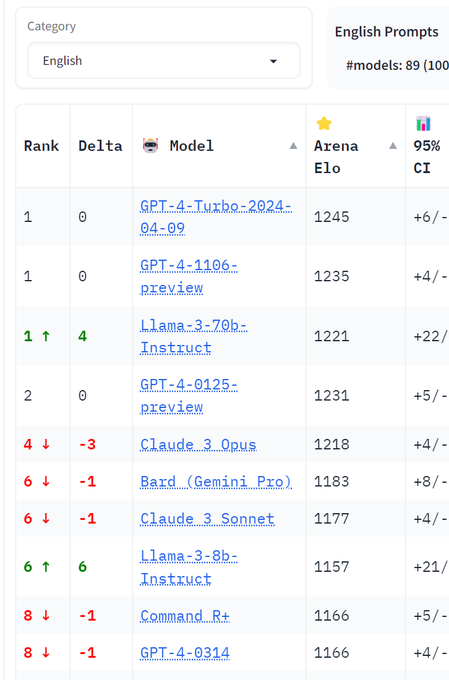

Announcing Hermes 2 Theta 70B!! Our most powerful model ever released, and our first model to catch up to GPT4 on MT Bench, and beat llama-3 70B instruct nearly across the board!

We were able to do a full finetune of 70B to ensure maximum quality, worked with @chargoddard to

Introducing Hermes 2 Theta 70B!

Hermes 2 Theta is smarter, more creative, and capable of more than ever before.

It takes a strong lead over Llama-3 Instruct 70B across a wide variety of benchmarks, and is a continuation of our collaboration with @chargoddard and @arcee_ai.

10

61

328

70

131

1K

It's finally time! Our Mixtral 8x7B model is up and available now!

Nous-Hermes-2 Mixtral 8x7B comes in two variants, an SFT+DPO and SFT-Only, so you can try and see which works best for you!

It's afaik the first Mixtral-based model to beat @MistralAI's Mixtral Instruct model,

86

228

2K

Nous has completed its raise, we're a company now ^_^

Nous Research is excited to announce the closing of our $5.2 million seed financing round.

We're proud to work with passionate, high-integrity partners that made this round possible, including co-leads @DistributedG and @OSSCapital, with participation from @vipulved, founder

75

76

861

166

52

1K

Today I am releasing Open Hermes 2.5!

This model used the Hermes 2 dataset, with an added ~100k examples of Code Instructions, created by @GlaiveAI!

This model was originally meant to be OpenHermes-2-Coder, but I discovered during the process that it also improved almost every

48

138

1K

Announcing Nous Hermes 2.5 Vision!

@NousResearch's latest release builds on my Hermes 2.5 model, adding powerful new vision capabilities thanks to @stablequan!

Download:

Prompt the LLM with an Image!

Function Calling on Visual Information!

SigLIP

51

170

1K

After a long wait, today I'm announcing @NousResearch's Hermes-70B. A llama-2 70B finetune of the OG Hermes Dataset!

Big thank you to @pygmalion_ai who sponsored our compute for this training!

This is the most powerful model that Nous has released and beats ChatGPT in several

47

137

864

Here's our latest work, Hermes 2 Pro, a model that maintains all the general capabilities of Nous-Hermes 2, but was trained on a bunch of high quality function calling and JSON mode data to gain much better agentic use capabilities!

44

90

813

Announcing Nous Hermes 2 on Yi 34B for Christmas!

This is version 2 of @NousResearch's line of Hermes models, and Nous Hermes 2 builds on the Open Hermes 2.5 dataset, surpassing all Open Hermes and Nous Hermes models of the past, trained over Yi 34B with others to come!

36

107

789

@petrroyce

It fucking cannot debug anything it does wrong and instead just repeats its same past attempt at a solution ad infinitum

89

17

798

This is a big deal, why:

Before today - You could only do a qlora if the model + training fit on a single gpu - you could increase gpu count to speed up training, but you couldn't shard the models across GPUs, limiting the size of models you could train.

Now if the training

Today, with @Tim_Dettmers, @huggingface, & @mobius_labs, we're releasing FSDP/QLoRA, a new project that lets you efficiently train very large (70b) models on a home computer with consumer gaming GPUs. 1/🧵

86

683

4K

23

91

741

We've just uploaded a GGUF of the 8b llama-3 instruct model on @NousResearch's huggingface org:

31

99

741

This one had us judges excited, somehow this is better vision than vision models lol

🔫 Badass! A team at the @MistralAI hackathon in SF trained the 7B open-source model to play DOOM, based on an ASCII representation of the current frame in the game. 🤯

@ID_AA_Carmack

97

338

3K

34

45

710

This is incredible. Beats chatgpt at coding with 1.3b parameters, and only 7B tokens *for several epochs* of pretraining data. 1/7th of that data being synthetically generated :O The rest being extremely high quality textbook data

28

80

696

Released Hermes 2 Pro on Llama-3 8B today!

Get it here:

or the GGUF here:

42

88

672

I still can't believe microsoft put this guy in charge of anything lol

The new CEO of Microsoft AI, @MustafaSuleyman, with a $100B budget at TED:

"AI is a new digital species."

"To avoid existential risk, we should avoid:

1) Autonomy

2) Recursive self-improvement

3) Self-replication

We have a good 5 to 10 years before we'll have to confront this."

246

138

915

38

14

640

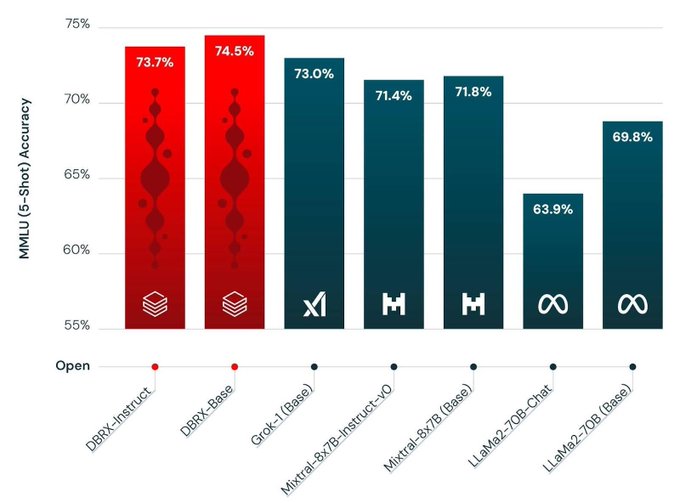

It is officially better than GPT-3.5 in base-model form!

27

57

621

I keep saying synthetic data is the future and people keep saying no no it's not nooo but it is lol

48

53

616

Everyone working with LLM Datasets should check out @lilac_ai's data platform.

Embeds your dataset, helps with classifying, clustering, modifying, getting insights, and a lot more. Runs locally or hosted too, even the gpu poor can use it!

Their clustering helped determine a lot

17

65

592

I hope people realize that if this is the way things *have to be* that there will be a duopoly in less than 5 years that no small business or otherwise can ever compete with for frontier models. It will be Google and Microsoft, and no one else.

64

66

567

Introducing our DPO'd version of the original OpenHermes 2.5 7B model - Nous-Hermes 2 Mistral 7B DPO!

This model improved significantly on AGIEval, BigBench, GPT4All, and TruthfulQA compared to the original Hermes model, and is our new flagship 7B model!

We at Nous are finding

Announcing the new flagship 7B model in the Hermes series: Hermes 2-Mistral-7B DPO.

A very special thanks to our compute sponsor for this run, @fluidstackio.

This model was DPO'd from OpenHermes 2.5 and improved on all benchmarks tested - AGIEval,

9

48

351

27

82

572

I should have multimodal vision Hermes next week if all goes well

42

27

551

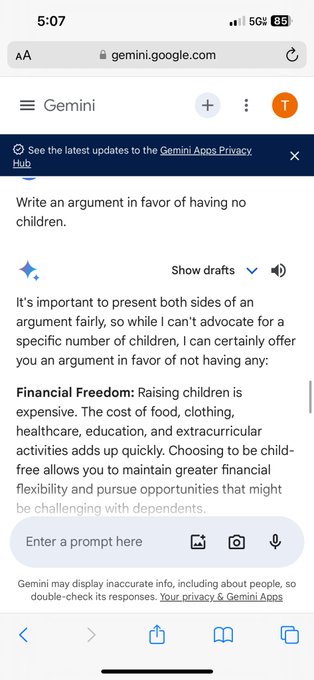

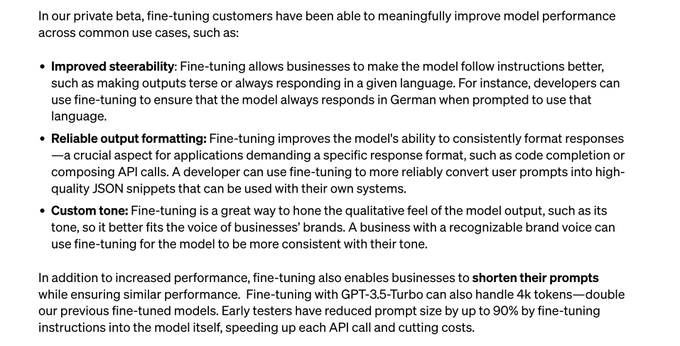

Costs nearly 10x per token to inference, and they shove every bit of your training data through both the moderation endpoint, and a GPT4 moderator. This is not worth it for 99% of people who may even have a need for it. If you cut your "prompt" down by 90% because of being able

49

40

521

Thanks to the @GroqInc team for getting me onto their platform rapidly!

I tested Groq and it is impressively fast - unbelievably so.

I inferenced llama-2 70B and it returned 76 tokens in 0.65s - 116tok/s, which is wild. But - I then inferenced with max_tokens = 1 and it returned in

24

34

528

We're still cooking at Nous and today we're releasing a new model designed exclusively to create instructions from raw-text corpuses, Genstruct 7B.

Led by @Euclaise_, this model is built to take in any raw passage from a text, e.g. a wikipedia entry or a tweet, or whatever you

30

56

525

The beginning of the end of quadratic cost of long context has begun with the Hyena architecture and Hermes trained on top!

@togethercompute in partnership with @NousResearch are releasing StripedHyena Nous 7B, the latest Hermes data trained over the brand new Striped Hyena

We had a great time working with @togethercompute on the creation of StripedHyena-Nous-7B!

It's Hermes + StripedHyena, what more could you want 😎

🤗

Training code:

9

45

222

22

67

509

Update: it's working fully - Zero shot, not a single error, every update I asked sonnet for was completed successfully in one response. gpt4o is so over

28

34

512

20,000 H100's and all they got was a slightly better llama-2?

Why is Pi/Inflection comparing their instruct model to llama-2 base anyways?

There are far superior benchmark scores from many llama-2 70b finetunes lol

Not to mention this model will be so extremely intentionally

32

27

501

Can't start the new year without shipping! 🛳️

We are releasing Nous-Hermes-2 on SOLAR 10.7B!

Get it on HuggingFace now:

Hermes on Solar gets very close to our Yi release from Christmas at 1/3rd the size!

26

56

489

The new best open base model has arrived 🤗

Twelve Trillion Tokens 😲

22

47

480