mkshing

@mk1stats

Followers: 1,655

Following: 360

Media: 139

Statuses: 497

research @SakanaAILabs 🐠🐡 | tweets are my own

Japan

Joined February 2020


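For anyone who wants to try Stable Video Diffusion right away but doesn't have the resources or isn't sure how to try it!

I hacked it so it runs on the Colab free plan. Please give it a try~.

Colab:

#StableVideo

The initial image was generated with #JSDXL.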


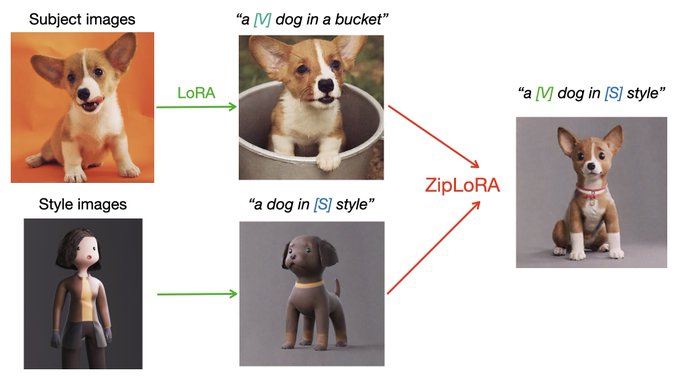

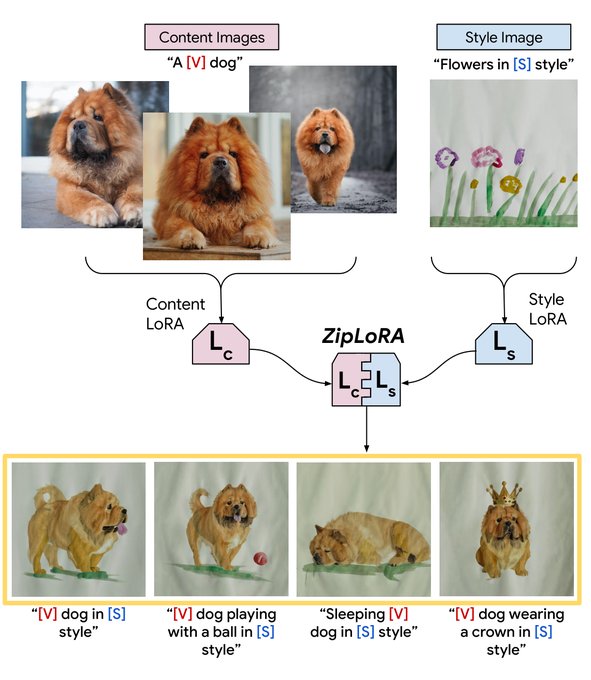

So, I quickly implemented ZipLoRA with 🤗🧨 (some people have already noticed, though).

code:

I hope it helps, and feel free to drop your comments and feedback~

Big thanks to the authors for their awesome work 🙌

With collaborators @Google we're announcing 💫 ZipLoRA 💫! Merging LoRAs has been a big thing in the community, but tuning can be an onerous process. ZipLoRA allows us to easily combine any subject LoRA with any style LoRA! Easy to reimplement 🥳

link:
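The core idea of ZipLoRA, as I understand the paper, is to merge a subject LoRA update and a style LoRA update with one learnable coefficient per column, while penalizing similarity between the two coefficient vectors so the LoRAs interfere less. Below is a minimal pure-Python sketch under stated assumptions: the LoRA updates are given as small dense matrices, the coefficients are fixed instead of being trained, cosine similarity is used for the penalty, and the helper names (`zip_merge`, `merger_penalty`) are hypothetical rather than taken from the implementation linked above.

```python
import math
from typing import List

Matrix = List[List[float]]  # row-major: matrix[row][col]


def zip_merge(dw_subject: Matrix, dw_style: Matrix,
              m_subject: List[float], m_style: List[float]) -> Matrix:
    """Merge two LoRA updates column by column.

    Column j of each update is scaled by its own coefficient before summing.
    The merged layer would then use W0 + merged (W0 = frozen base weight).
    """
    rows, cols = len(dw_subject), len(dw_subject[0])
    if len(m_subject) != cols or len(m_style) != cols:
        raise ValueError("one coefficient per column is required")
    return [
        [dw_subject[i][j] * m_subject[j] + dw_style[i][j] * m_style[j]
         for j in range(cols)]
        for i in range(rows)
    ]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0.0 else 0.0


def merger_penalty(m_subject: List[float], m_style: List[float],
                   lam: float = 0.01) -> float:
    """Penalty that pushes the two coefficient vectors toward orthogonality."""
    return lam * abs(cosine(m_subject, m_style))
```

With `m_subject = [1.0, 0.0]` and `m_style = [0.0, 1.0]`, the merged update takes column 0 from the subject LoRA and column 1 from the style LoRA, and the penalty is zero because the coefficient vectors are orthogonal. In an actual training loop the coefficients would be optimized against reconstruction losses for both LoRAs; that part is omitted here.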


I've released the code for a method called SVDiff 🎉

code:

It is more efficient than LoRA (it trains fewer parameters) and produces results of comparable quality! Please give it a try.

#stablediffusionsvdiff

#stablediffusion

#aiart

#generativeai
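SVDiff's efficiency comes from fine-tuning only a "spectral shift": each frozen weight is decomposed once as W = U diag(σ) Vᵀ, and training learns a shift δ so the layer uses U diag(ReLU(σ + δ)) Vᵀ. This pure-Python sketch assumes the SVD factors are already precomputed (no SVD routine is included) and uses a hypothetical function name; it only shows how the shifted weight is rebuilt.

```python
from typing import List

Matrix = List[List[float]]


def relu(x: float) -> float:
    return x if x > 0.0 else 0.0


def spectral_shift_weight(u: Matrix, sigma: List[float], vt: Matrix,
                          delta: List[float]) -> Matrix:
    """Rebuild W_delta = U @ diag(relu(sigma + delta)) @ V^T.

    u: m x k, sigma: k singular values, vt: k x n, with k = min(m, n).
    Only `delta` (k numbers per matrix) would be trained; U, sigma, V stay frozen.
    """
    k = len(sigma)
    if len(delta) != k:
        raise ValueError("need one shift per singular value")
    shifted = [relu(s + d) for s, d in zip(sigma, delta)]  # ReLU keeps values >= 0
    m, n = len(u), len(vt[0])
    return [
        [sum(u[i][r] * shifted[r] * vt[r][j] for r in range(k)) for j in range(n)]
        for i in range(m)
    ]
```

With identity factors and σ = [3, 1], a shift of [0.5, -2] yields diag(3.5, 0): the second singular value is clipped to zero by the ReLU. A zero shift reproduces the original weight, which is why training can start from the pretrained model unchanged.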


I am very honored to publish our #JapaneseStableDiffusion post on the @huggingface blog. Thank you very much for your kind support! @_akhaliq @osanseviero @multimodalart @julien_c


SVD 1.1 seems to have been released 👀

HF:

For those who can't wait for the official announcement, you can try it for free on this Colab.

Colab:

1/3

#SVD

@StabilityAI released a very powerful image-to-video model called "Stable Video Diffusion" 📽️

For those who are super excited to try it but don't have enough resources, I hacked it to work on the Colab free plan 🤗 Check it out!

Colab:

#StableVideo

#SVD


I implemented SCEdit, specifically SC-Tuner for now!

The experiments in the paper were done with SD 1.5/2.1, but you can apply it to any UNet-based diffusion model, including SDXL.

code:

colab:

Any feedback is welcome!
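SC-Tuner edits the UNet's skip-connection features with a lightweight residual module, roughly out = x + T(x), while the backbone stays frozen. The sketch below assumes T is a small bottleneck MLP (down-projection, GELU, up-projection) applied to one channel vector, which is how a 1×1 tuner would act at each spatial position; the class name and shapes are hypothetical, not the code linked above.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def gelu(x: float) -> float:
    # exact GELU via the error function
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


class SkipTuner:
    """Hypothetical tuner on a skip feature: out = skip + up(gelu(down(skip)))."""

    def __init__(self, down: Matrix, up: Matrix):
        self.down = down  # r x c (bottleneck rank r, channels c)
        self.up = up      # c x r; zero-initialized so training starts at identity

    def __call__(self, skip: Vector) -> Vector:
        hidden = [gelu(h) for h in matvec(self.down, skip)]
        delta = matvec(self.up, hidden)
        return [s + d for s, d in zip(skip, delta)]

    def num_params(self) -> int:
        # only these weights would be trained; the UNet itself stays frozen
        return sum(len(row) for row in self.down) + sum(len(row) for row in self.up)
```

With a zero-initialized up-projection the tuner returns its input unchanged, so the pretrained UNet's behavior is preserved at the start of training. Zero initialization is a common adapter convention I chose for this sketch, not necessarily what the paper specifies.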


Super excited to announce the release of Japanese Stable Diffusion 🇯🇵🎉

Japanese Stable Diffusion is a Japanese-specific Stable Diffusion capable of generating Japanese-style images given any Japanese text.

#JapaneseStableDiffusion

#StableDiffusion

#AIart


@dome_271 @pabloppp @StabilityAI Also, I hacked Stable Cascade to run on the Colab free plan (T4, 16 GB)!

Enjoy!

#stablecascade

colab:


SVDiff-pytorch has been updated 🎉 Now you can get better results with fewer training steps!

Please see the code for more details!

code:

"portrait of {gal gadot} woman wearing kimono"

My implementation of SVDiff has been released 🎉

I hope everyone plays around with it, and hopefully we can make it better together!

code:

#stablediffusionsvdiff

#stablediffusion

#aiart

#generativeai


In my experiments, SVDiff looks very promising!!

The file size is <1 MB, which is 3x smaller than LoRA, and the results look comparable, as reported in the paper.

left: LoRA, right: SVDiff
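A rough way to see why SVDiff checkpoints are so small: for each m×n weight matrix, rank-r LoRA stores r(m+n) values, while SVDiff's spectral shift stores min(m, n). The layer shapes and rank below are hypothetical placeholders, not the configuration behind the <1 MB figure above, and fp16 storage (2 bytes per value) is assumed.

```python
from typing import Iterable, Tuple

Shape = Tuple[int, int]  # (out_features, in_features) of one weight matrix


def lora_params(shapes: Iterable[Shape], rank: int) -> int:
    """LoRA stores B (m x r) and A (r x n) for every adapted matrix."""
    return sum(rank * (m + n) for m, n in shapes)


def svdiff_params(shapes: Iterable[Shape]) -> int:
    """SVDiff stores one spectral shift per singular value: min(m, n) per matrix."""
    return sum(min(m, n) for m, n in shapes)


def size_mb(num_params: int, bytes_per_param: int = 2) -> float:
    """Approximate checkpoint size in MB (10**6 bytes), ignoring format overhead."""
    return num_params * bytes_per_param / 1e6


# Hypothetical layer shapes, chosen only to illustrate the arithmetic.
shapes = [(320, 320)] * 8 + [(640, 640)] * 8 + [(1280, 1280)] * 8
print(lora_params(shapes, rank=4), svdiff_params(shapes))  # prints 143360 17920
```

For square matrices the ratio is exactly 2r, so rank 4 gives 8x here. The real-world ratio, such as the 3x mentioned above, also depends on which layers each implementation adapts and which LoRA rank is used.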


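Model merging has mainly been applied in the LLM community, but in addition to LLMs, we have carried out new kinds of merges, such as fusing an English VLM with a Japanese LLM, and the results outperform models built by training on Japanese datasets!

It is also working well not only for VLMs but also for diffusion models. Stay tuned!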


I've updated the SVDiff code 🎉 Thanks to several improvements, it now performs better with fewer training steps!! See the code for details.

code:

"portrait of {gal gadot} woman wearing kimono"


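EvoVLM-JP, the Japanese vision-language model we released from @SakanaAILabs yesterday, is available for anyone to try right away.

For developers, we have also released the model, so please try it in your own environment.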

#EvoVLMJP


Würstchen v2, by @pabloppp and @dome39931447, is just awesome! It takes only 2 seconds of inference to generate 1024px images 🤩

Very excited about next week's release!


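We've released "Japanese Stable VLM," a Japanese VLM available for commercial use 🎉🐶⚔️

Compared with the 1st version, "Japanese InstructBLIP," it adopts the architecture of LLaVA 1.5, a recent method, and also supports our own "tag-conditioned captioning."

We've also prepared a Colab, so please give it a try. Links ↓↓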


I am very excited to announce the first Japanese LLM release by @StabilityAI_JP 🎉

We have released two 7B models, and they rank 1st and 2nd on the evaluation leaderboard 🤩🔥

base:

instruction:


Other important parameters are now supported!

For example, a lower `motion_bucket_id` (default: 127) leads to less motion.

Tested with the Windows background.

(top: default 127, bottom: 31)
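A comparison like the one above could be scripted with 🤗 diffusers' `StableVideoDiffusionPipeline`, whose call accepts `motion_bucket_id`, `fps`, `noise_aug_strength`, and `decode_chunk_size` keyword arguments. To keep the block runnable without a GPU or model download, it only builds the per-run arguments. The commented pipeline code is a hedged sketch: the model ID, `input.png`, and the seed are assumptions, and the memory tricks used in the Colab are not reproduced.

```python
from typing import Dict, List, Union

Kwargs = Dict[str, Union[int, float]]


def motion_sweep(bucket_ids: List[int], fps: int = 7,
                 noise_aug_strength: float = 0.02,
                 decode_chunk_size: int = 8) -> List[Kwargs]:
    """One kwargs dict per run, varying only motion_bucket_id (lower -> less motion)."""
    return [
        {
            "motion_bucket_id": bucket,
            "fps": fps,
            "noise_aug_strength": noise_aug_strength,
            "decode_chunk_size": decode_chunk_size,  # smaller -> less VRAM when decoding
        }
        for bucket in bucket_ids
    ]


# With diffusers installed and a GPU available (model ID and file names are assumptions):
# import torch
# from diffusers import StableVideoDiffusionPipeline
# from diffusers.utils import load_image, export_to_video
# pipe = StableVideoDiffusionPipeline.from_pretrained(
#     "stabilityai/stable-video-diffusion-img2vid-xt", torch_dtype=torch.float16, variant="fp16")
# pipe.enable_model_cpu_offload()
# image = load_image("input.png").resize((1024, 576))
# for kwargs in motion_sweep([127, 31]):
#     frames = pipe(image, generator=torch.manual_seed(42), **kwargs).frames[0]
#     export_to_video(frames, f"motion_{kwargs['motion_bucket_id']}.mp4", fps=kwargs["fps"])
```

Fixing the seed and every argument except `motion_bucket_id` keeps the top/bottom comparison fair, so any difference in motion comes from that one parameter.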


You can try SVDiff on @huggingface Spaces with @Gradio 👉

Gradio demo:

If you want to test the UI locally, please see here 👉


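Following Japanese Stable VLM, released on Monday, we've released Japanese Stable CLIP, which achieves top performance!

It substantially outperforms the scores of the CLOOB model trained by rinna 🥳 Use it as-is, or build it into a generative model. Please give it a try!

Links ↓↓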


Stable Cascade (Würstchen v3) by @dome_271 and @pabloppp is out!

I've been a big fan of Würstchen since v1, but v3 is absolutely the best, as their results show.

code:

Big congrats to both of you and @StabilityAI!

It finally happened. We are releasing Stable Cascade (Würstchen v3) together with @StabilityAI!

And guess what? It's the best open-source text-to-image model now!

You can find the blog post explaining everything here:

🧵 1/5


Our latest work has finally been released!

Model merging shows great performance without any training. However, it relies heavily on developers' experience and intuition!

To address this issue, we introduced "Evolutionary Model Merge," which automates model merging based on specified metrics.

1/2
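The thread describes the idea at a high level. As a toy illustration only, assuming a simple linear (weighted-average) merge of flattened parameter vectors and a user-supplied scalar metric, the search over merge weights can be automated with a basic (1+λ) evolution strategy. This is a deliberately simple, swapped-in optimizer chosen for brevity; it is not the paper's search procedure, and `metric` and the toy "models" are hypothetical.

```python
import random
from typing import Callable, List, Tuple

Params = List[float]


def linear_merge(models: List[Params], weights: List[float]) -> Params:
    """Weighted average of flattened parameter vectors (weights normalized to sum to 1)."""
    total = sum(weights)
    norm = [w / total for w in weights]
    return [sum(w * m[i] for w, m in zip(norm, models)) for i in range(len(models[0]))]


def evolve_merge_weights(models: List[Params], evaluate: Callable[[Params], float],
                         generations: int = 200, offspring: int = 8,
                         step: float = 0.1, seed: int = 0) -> Tuple[List[float], float]:
    """(1+lambda) ES: mutate the parent, keep the best child only if it scores higher."""
    rng = random.Random(seed)
    parent = [1.0] * len(models)  # start from a uniform average
    best = evaluate(linear_merge(models, parent))
    for _ in range(generations):
        children = [[max(1e-6, w + rng.gauss(0.0, step)) for w in parent]
                    for _ in range(offspring)]
        scored = [(evaluate(linear_merge(models, c)), c) for c in children]
        score, child = max(scored, key=lambda t: t[0])
        if score > best:
            parent, best = child, score
    total = sum(parent)
    return [w / total for w in parent], best


# Toy usage: two "models" and a metric that prefers a 70/30 blend.
target = [0.3, 0.3]
metric = lambda p: -sum((x - t) ** 2 for x, t in zip(p, target))
weights, score = evolve_merge_weights([[0.0, 0.0], [1.0, 1.0]], metric)
```

The search only needs the metric's value, not its gradient, which is what makes this kind of approach usable with arbitrary benchmark scores. Real merges operate on millions of parameters and richer merge recipes, so the evaluation cost dominates.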


Another great model from AI Forever after ruDALL-E! They always have a big impact on non-English AI communities 👏

Colab:

"日本の田舎風景 桜並木 デジタルアート" ("Japanese countryside landscape, row of cherry trees, digital art")


Stable Video Diffusion is just awesome.

#StableVideo


#JSDXL also supports many of the extension techniques and features available for SDXL.

For example, you can try domain tuning with LoRA on JSDXL here:

Colab:
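LoRA, used here for domain tuning, keeps a pretrained weight W frozen and learns a low-rank update, so an adapted linear layer computes y = W x + (α/r) B A x. This pure-Python sketch assumes tiny dense matrices and the common α/r scaling convention; it illustrates the forward pass only and is not the Colab's training code.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, x)) for row in w]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """y = W x + (alpha / r) * B (A x).

    W: d_out x d_in (frozen), A: r x d_in and B: d_out x r (trainable).
    """
    r = len(a)
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))  # goes through the rank-r bottleneck
    scale = alpha / r
    return [y + scale * u for y, u in zip(base, update)]
```

B is typically initialized to zeros, so the adapted layer initially matches the base model. Because only A and B are trained, the saved adapter is small compared with the full SDXL weights.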


DiffFit was applied to DiTs, but it can also be applied to #stablediffusion as a PEFT method.

Here's my WIP implementation of DiffFit.

code:

I'm still struggling to get good results, so I'd be happy to get feedback!
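As I understand the DiffFit paper, it freezes most weights and trains only bias terms, normalization layers, and newly added per-channel scale factors γ that multiply residual branches. Below is a minimal pure-Python sketch of those two pieces. The name patterns in `TRAINABLE_KEYS` are hypothetical and would need to match the actual parameter names of the UNet being tuned, and initializing γ to 1.0 is this sketch's choice to keep the pretrained behavior at step 0.

```python
from typing import Callable, Dict, List

Vector = List[float]

# Hypothetical name patterns; real parameter names depend on the model code.
TRAINABLE_KEYS = ("bias", "norm", "gamma")


def scaled_residual(x: Vector, block: Callable[[Vector], Vector], gamma: Vector) -> Vector:
    """x + gamma * block(x): gamma is a trainable per-channel scale, the block is frozen."""
    out = block(x)
    return [xi + g * oi for xi, g, oi in zip(x, gamma, out)]


def difffit_trainable(param_sizes: Dict[str, int]) -> Dict[str, int]:
    """Keep only the parameters a DiffFit-style recipe would update."""
    return {name: n for name, n in param_sizes.items()
            if any(key in name for key in TRAINABLE_KEYS)}
```

Filtering parameters by name like this is a simple way to set `requires_grad` selectively in a real framework. The count of selected parameters also gives a quick check on how small the resulting checkpoint will be.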


We have released two more powerful Japanese Stable LM (JSLM) models under a commercial license 🎉🎉

JSLM 3B-4E1T:

(base)

(instruct)

JSLM Gamma 7B:

(base)

(instruct)


Maybe I should emphasize what our #JapaneseStableDiffusion is good at and how it differs from other fine-tuned models like waifu-diffusion.

1/5


A single-step SDXL is out! SDXL Turbo was trained with a new distillation technique called Adversarial Diffusion Distillation.

You can quickly try it on @clipdropapp, and here's my result 😺

clipdrop:

paper:

HF:


Compared #stablediffusion sampling methods (ddim, plms, k_euler, k_euler_ancestral, k_heun, k_dpm_2, k_dpm_2_ancestral, k_lms)

code:

"A beautiful painting of a view of country town surrounded by mountains, ghibli"

#stablediffusion

#AIart

#AiArtwork
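The `k_*` names refer to samplers from the k-diffusion library. To illustrate the simplest one, `k_euler`, here is a toy pure-Python version. It assumes a one-dimensional "dataset" consisting of a single point, so the ideal denoiser D(x, σ) simply returns that point. The Karras-style σ schedule and all parameter values are assumptions for the toy, not the settings used in the comparison above.

```python
from typing import Callable, List


def karras_sigmas(n: int, sigma_min: float = 0.1, sigma_max: float = 10.0,
                  rho: float = 7.0) -> List[float]:
    """n noise levels from sigma_max down to sigma_min (Karras et al. spacing), plus a final 0."""
    if n < 2:
        raise ValueError("need at least two noise levels")
    lo, hi = sigma_min ** (1.0 / rho), sigma_max ** (1.0 / rho)
    sigmas = [(hi + i / (n - 1) * (lo - hi)) ** rho for i in range(n)]
    return sigmas + [0.0]


def sample_euler(denoise: Callable[[float, float], float], x: float,
                 sigmas: List[float]) -> float:
    """Euler integration of dx/dsigma = (x - D(x, sigma)) / sigma along the schedule."""
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        d = (x - denoise(x, sigma)) / sigma  # estimated derivative at this noise level
        x = x + d * (sigma_next - sigma)     # step to the next (smaller) sigma
    return x


# Toy usage: the "data" is the single point 3.0, so the ideal denoiser always returns 3.0.
sigmas = karras_sigmas(10)
x_start = 0.5 * sigmas[0]  # stands in for a draw from N(0, sigma_max^2)
result = sample_euler(lambda x, s: 3.0, x_start, sigmas)
```

The other samplers in the list differ mainly in how they use the denoiser's outputs: Heun and DPM-2 evaluate it twice per step, LMS reuses previous derivatives, and the ancestral variants re-inject fresh noise.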


ImageReward, a text-to-image (t2i) human preference reward model (RM), is out under Apache 2.0.

"ImageReward outperforms existing text-image scoring methods, such as CLIP, Aesthetic, and BLIP, in terms of understanding human preference in text-to-image synthesis"


By the way, the images for Japanese Stable VLM and Japanese Stable CLIP were, of course, also generated with #JSDXL 🐶


@StabilityAI_JP Now you can try the Japanese StableLMs on the free Colab plan 😄 Check it out!

Colab:


"A beautiful painting of a view of country town surrounded by mountains, ghibli" by "k_lms"

#stablediffusion

#AIart

#AiArtwork


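I was interviewed about the LLMs and image generation models that have been in the spotlight lately for the feature "Talking AI, Drawing AI" in last week's issue of Nikkei Science. It not only helps you catch up with the recent torrent of AI progress but also explains how these systems work in an easy-to-understand way!

I'd be glad if it helps the Japanese AI community even a little!

📗 On sale March 25!

Nikkei Science, May 2023 issue

🤖 Feature: Talking AI, Drawing AI

【Special commentary: The world of category theory, the mathematics of mathematics】

・Cool Computers: Building computers that don't heat up

#科学

#AI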


This was released a month ago, but I noticed I hadn't retweeted it!

I was honored to join this project as the main AI researcher :)

The short anime is very touching, so please don't miss it~


Because we want a model to understand our culture, identity, and unique expressions.

For example, one famous Japanglish term is "salary man," which means a businessman wearing a suit.

This is from #stablediffusion with "salary man, oil painting"


Comparison between sampling methods

"Japanese samurai with sunglasses"

#stablediffusion

#AIart


Do you want to use `init_image` in #stablediffusion before they release it?

I've now implemented k-diffusion samplers in #stablediffusion, as I did for glid-3-xl.

Here's the code 🎉

code:

prompt: "A ukiyoe of a countryside in Japan" by k_lms sampler
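One common way to support `init_image` (img2img) with k-diffusion-style samplers, sketched here under assumptions: encode the initial image (here, a toy list of latent values), skip the noisiest part of the σ schedule according to a hypothetical `strength` in (0, 1], add noise at the first remaining σ, and then run any sampler over the remaining schedule. The names `strength` and `img2img_start` are illustrative, not the API of the code linked above.

```python
import random
from typing import List, Tuple


def img2img_start(init_latent: List[float], sigmas: List[float], strength: float,
                  rng: random.Random) -> Tuple[List[float], List[float]]:
    """Return (noised latent, remaining sigma schedule) for a given strength.

    `sigmas` ends with 0.0. strength=1.0 keeps the whole schedule (almost pure noise);
    smaller values skip the noisiest steps, so more of the init image survives.
    """
    if not 0.0 < strength <= 1.0:
        raise ValueError("strength must be in (0, 1]")
    steps = len(sigmas) - 1                       # the last entry is the final sigma = 0
    start = steps - max(1, int(steps * strength))  # always run at least one step
    remaining = sigmas[start:]
    noised = [v + rng.gauss(0.0, 1.0) * remaining[0] for v in init_latent]
    return noised, remaining
```

For example, with the schedule [8, 4, 2, 1, 0] and strength 0.5, sampling starts at σ = 2 and runs the last two steps only. The noised latent and the remaining schedule can then be handed to whichever sampler is selected.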

Implemented k-diffusion samplers in glid-3-xl because #stablediffusion uses the `k_lms` sampler by default.

All k samplers (k_euler, k_euler_ancestral, k_heun, k_dpm_2, k_dpm_2_ancestral, k_lms) are supported.

"A ukiyoe of a countryside in Japan"
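Among the supported samplers, `k_euler_ancestral` differs from `k_euler` by splitting each step into a deterministic move down to a smaller σ_down plus fresh noise of size σ_up, with σ_down² + σ_up² = σ_next². The helper below follows the formula of k-diffusion's `get_ancestral_step` as I recall it (treat it as an assumption and check against the library source). The toy denoiser, which always returns the single data point 3.0, is hypothetical.

```python
import math
import random
from typing import Callable, List, Tuple


def ancestral_step(sigma_from: float, sigma_to: float, eta: float = 1.0) -> Tuple[float, float]:
    """Split the move sigma_from -> sigma_to into (sigma_down, sigma_up)."""
    if eta == 0.0:
        return sigma_to, 0.0
    sigma_up = min(sigma_to, eta * math.sqrt(
        sigma_to ** 2 * (sigma_from ** 2 - sigma_to ** 2) / sigma_from ** 2))
    sigma_down = math.sqrt(sigma_to ** 2 - sigma_up ** 2)
    return sigma_down, sigma_up


def sample_euler_ancestral(denoise: Callable[[float, float], float], x: float,
                           sigmas: List[float], rng: random.Random, eta: float = 1.0) -> float:
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = denoise(x, sigma)
        sigma_down, sigma_up = ancestral_step(sigma, sigma_next, eta)
        x = x + (x - denoised) / sigma * (sigma_down - sigma)  # deterministic Euler part
        if sigma_next > 0:
            x = x + rng.gauss(0.0, 1.0) * sigma_up  # re-inject fresh noise
    return x


# Toy usage with a perfect denoiser for the single data point 3.0.
out = sample_euler_ancestral(lambda x, s: 3.0, 5.0, [10.0, 5.0, 2.0, 1.0, 0.5, 0.0],
                             random.Random(0))
```

With `eta=0.0`, σ_up is zero and σ_down equals σ_next, so the loop reduces to plain Euler. The injected noise is why ancestral samplers keep changing the image as the step count grows instead of converging to one result.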
