Minqian Liu

@minqian_liu

Followers: 430
Following: 1K
Statuses: 89

PhD student @VT_CS | Previous Research Intern at Microsoft and AWS AI | he/him

Blacksburg, VA

Joined August 2017

Thrilled to announce that our work has been accepted by #EMNLP2024 (Main Conference)! We introduced a highly challenging benchmark for interleaved text-and-image generation along with a strong multi-aspect evaluator. See you in Miami! 🌴 Paper: Dataset:

🚨 New paper alert! We introduce InterleavedBench📚, the first comprehensive evaluation benchmark for interleaved text-and-image generation, as well as InterleavedEval🔍, a powerful GPT-based evaluator that supports multi-aspect assessment. arXiv: (1/n)

🔥Thrilled to announce ReFocus, our latest work led by @XingyuFu2 that teaches MLLMs to generate “visual thoughts” 🧠 via visual editing on tables and charts 📊 to improve reasoning. Huge thanks to all the amazing co-authors! 🙌

Teach GPT-4o to edit on charts and tables to ReFocus 🔍 and facilitate reasoning 🧠! 🔥 We introduce ReFocus, which edits input table and chart images to better reason visually. 🤔 Can we teach smaller models to learn such visual CoT reasoning? 🚀 Yes -- they are better than QA and CoT data! 📈 ReFocus + GPT-4o brings +11.0% on tables and +6.8% on charts without using any tools🔧! 📊 We release a 14K Visual CoT Reasoning *Training Dataset* that provides intermediate refocusing supervision. 🤖+🔍 > CoT: ReFocus VCoT is 8.0% better than QA data and 2.6% better than CoT data with supervised fine-tuning on Phi3.5v. The trained model is also released. 📑 Check out This work was done during an internship at @Microsoft with amazing coauthors @minqian_liu @zhengyuan_yang @JCorring36990 @YijuanLu @jw2yang4ai @DanRothNLP @DineiFlorencio @ChaZhang. A huge shoutout to everyone!

RT @tuvllms: 📢✨ I am recruiting 1-2 PhD students at Virginia Tech this cycle. If you are interested in efficient model development (inclu…

👏 Big thanks to @zhiyangx11, @lifu_huang, and all other awesome collaborators for their great effort in this work! The dataset and code will be released soon at Stay tuned! (6/n)

RT @GuoOctavia: Ever wondered if style lexicons still play a role in the era of LLMs? 🤔 We tested 13 established and 63 novel language sty…

Thank you for the great work! Will definitely check it out.

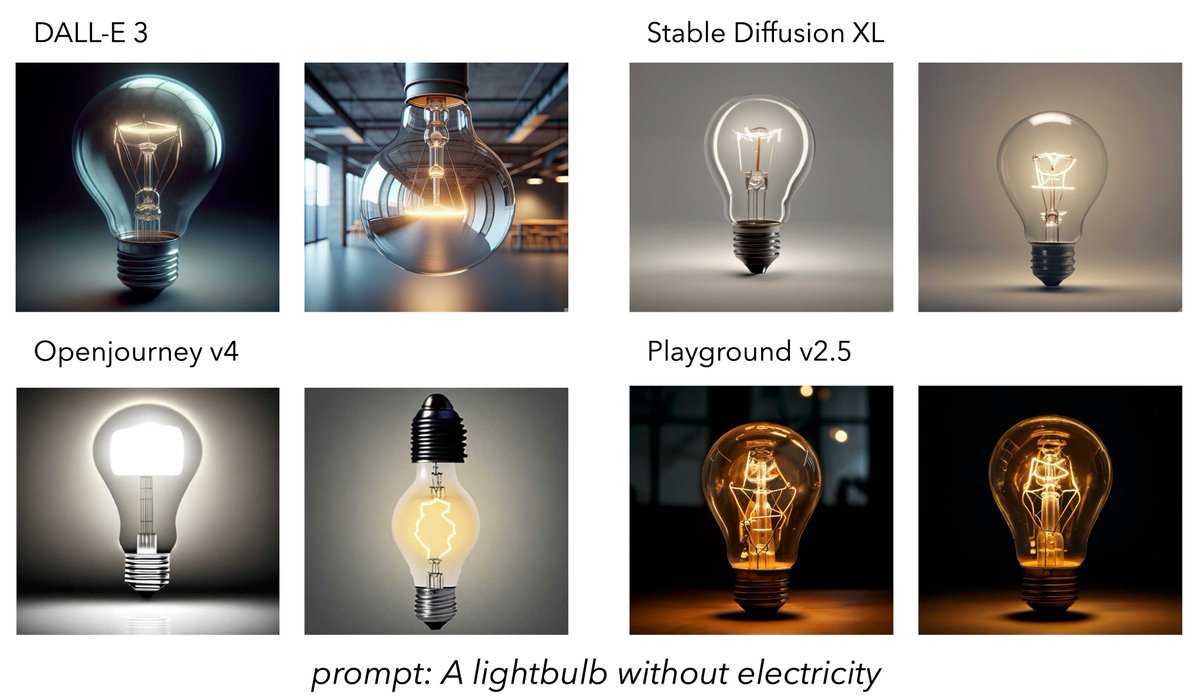

Can Text-to-Image models understand common sense? 🤔 Can they generate images that fit everyday common sense? 🤔 tl;dr: NO, they are far less intelligent than us 💁🏻‍♀️ Introducing Commonsense-T2I 💡 a novel evaluation and benchmark designed to measure commonsense reasoning in T2I models 🔥🔥 Paper: (1/n)

RT @XingyuFu2: Can Text-to-Image models understand common sense? 🤔 Can they generate images that fit everyday common sense? 🤔 tldr; NO, t…

RT @YingShen_ys: 🚀 Excited to introduce my internship work at @Apple MLR : Many-to-many Image Generation with Auto-regressive Diffusion Mo…

RT @_akhaliq: Vision-Flan Scaling Human-Labeled Tasks in Visual Instruction Tuning Despite vision-language models' (VLMs) remarkable capa…

RT @DrogoKhal4: Knowledge Conflict gets accepted in #ICLR24 #Spotlight! We updated more results on 5 open- and 3 close-source LLMs and foun…

RT @barry_yao0: Our entity linking work has been accepted by #EACL2024. Check our work: Ameli: Enhancing Multimodal Entity Linking with Fin…
