Maciej Wołczyk

@maciejwolczyk

Followers

738

Following

1K

Statuses

148

Research Scientist @ Google, alumnus of Jagiellonian University and IDEAS NCBR. Interested in reinforcement learning & continual learning. Also on 🦋

Joined March 2011

RT @jahulas: (1/n) Introducing Joint MoE Scaling Laws: Mixture of Experts Can Be Memory Efficient We show that MoE is so compute-efficient…

0

39

0

The previous summer schools have been an absolute blast, I strongly recommend applying! Also it's a great opportunity to explore the beautiful city of Krakow while you learn!

📢 You’re invited to the Machine Learning Summer School on Drug and Materials Discovery (MLSS^D 2025)! 🚀🔬

🌟 MLSS^D is an intensive summer school focused on cutting-edge machine learning techniques and their applications in drug discovery and materials science. By joining MLSS^D, you will:

✅ Learn from leading experts in ML and computational sciences
✅ Explore real-world case studies and practical applications
✅ Share your research with the world during a poster session
✅ Connect with researchers and professionals from around the world

🔗 Registration is now open! Register now:
🔥 When? July 1–6, 2025
📍 Where? Kraków, Poland
🌐 Find out more on our website:

🚀 Don’t miss this unique opportunity to expand your knowledge and network in one of Europe’s most beautiful cities! #MLSSD2025 #MachineLearning #DrugDiscovery #MaterialsScience #MLinPL

0

0

9

RT @CupiaBart: BALROG, our benchmark for agentic LLM and VLM reasoning on games, has just been accepted to #ICLR! See you in Singapore 🇸🇬!

0

4

0

RT @PaglieriDavide: 🚨BALROG leaderboard update This week's new entries on are: Llama 3.3 70B Instruct 🫤 Claude 3…

0

5

0

RT @PaglieriDavide: It's great to see BALROG featured on @jackclarkSF's Import AI newsletter! Check out what he had to say about it here:…

0

3

0

RT @CupiaBart: 🎮 Created a 3D-rendered version of @danijarh Crafter environment using Minecraft-style models & textures, replacing the ori…

0

9

0

RT @emollick: This may sound odd, but game-based benchmarks are some of the most useful for AI, since we have human scores and they require…

0

110

0

RT @gracjan_goral: Quick poll: What's 2 + 2? [ ] 3 [ ] 5 [ ] 22 [ ] 🍎 Feeling confused? That's the point! Our new study explores how AI (…

0

5

0

RT @marek_a_cygan: I am very pleased with the declaration to maintain (or even increase) funding for IDEAS and with the plan to transform it into an in…

0

27

0

RT @PiotrRMilos: Of the 7 people in my research group at @IDEAS_NCBR, 3 are already considering leaving, and 2 new ones who were supposed to join will not join - pr…

0

100

0

RT @boleslawbreczko: We have obtained the audit that NCBR conducted at @IDEAS_NCBR at the beginning of the year. Is this the one Minister @m_gdula is talking about? If…

0

17

0

RT @piotrsankowski: First of all, I want to start with the words: thank you. To those who, in such large numbers, got involved in supporting me, my…

0

2K

0

I recently defended my PhD in artificial intelligence. If it weren't for IDEAS, I would most likely already be working abroad, and I have heard similar stories many times from my peers. This place offers a chance to develop not only Polish science, but also Polish scientists.

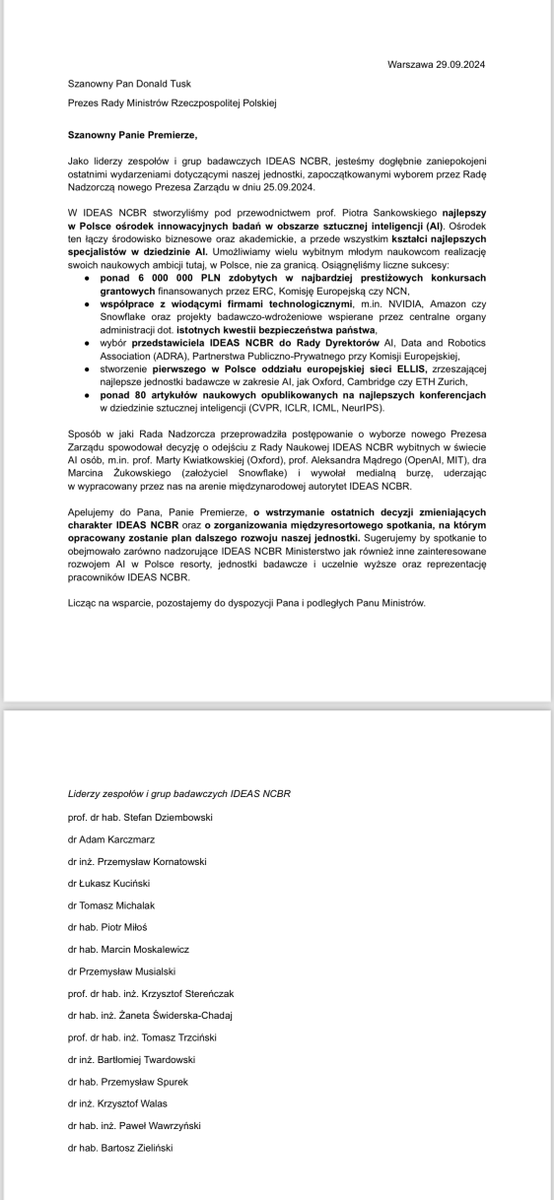

Letter to the Prime Minister of Poland @donaldtusk @donaldtuskEPP @JanGrabiec, signed by all the leaders of @IDEAS_NCBR, by @SteDziembowski @P_M_Kornatowski @LukeKucinski @PiotrRMilos @MMoskalewicz cc @sylvcz @kawecki_maciej @annawitten @boleslawbreczko

46

268

1K

RT @tomasztrzcinsk1: Letter to the Prime Minister of Poland @donaldtusk @donaldtuskEPP @JanGrabiec, signed by all the leaders of @IDEAS_NCBR

https://t.co…

0

180

0

RT @merettm: @marek_a_cygan AI is advancing quickly and will have an ever greater impact on the economy. Basic research is very important, build…

0

175

0

RT @ChrSzegedy: IDEAS has been a great partner at pushing AI for sciences and it has developed into an exceptional powerhouse of European i…

0

230

0

RT @tomasztrzcinsk1: @MatthiasBethge @donaldtuskEPP @MatthiasBethge thank you for your support for @IDEAS_NCBR 💪 @aleks_madry is one of man…

0

11

0

RT @MatthiasBethge: Deeply concerned about the future of IDEAS NCBR, a pioneering AI research institute & newly approved ELLIS unit. Intern…

0

136

0