Daniel Kang

@daniel_d_kang

Followers: 3,706

Following: 89

Media: 22

Statuses: 262

Asst. professor at UIUC CS. Formerly in the Stanford DAWN lab and the Berkeley Sky Lab.

Stanford, CA

Joined November 2010

As LLMs have improved in their capabilities, so have their dual-use capabilities. But many researchers think they serve as a glorified Google.

We show that LLM agents can autonomously hack websites, showing that they can produce concrete harm.

Paper:

1/5

To safeguard trade secrets, LLMs like @OpenAI's ChatGPT are closed off, impacting trust. Recent alterations in ChatGPT outputs sparked cost-saving downgrade rumors (see link). How can we reconcile trade secret protection and trust? New blog post on how:

1/

@OpenAI claimed in their GPT-4 system card that it isn't effective at finding novel vulnerabilities.

We show this is false. AI agents can autonomously find and exploit zero-day vulnerabilities.

Paper:

🧵 1/7

Honored to be awarded the ACM SIGMOD Jim Gray Doctoral Dissertation award!

It wouldn't have been possible without the amazing support of my advisors @pbailis, @matei_zaharia, and @tatsu_hashimoto, and of the many, many others who supported me throughout my PhD :)

Congratulations to @daniel_d_kang, recipient of this year's ACM SIGMOD Jim Gray Doctoral Dissertation Award for his thesis (co-advised with @matei_zaharia and @tatsu_hashimoto) on "Efficient and accurate systems for querying unstructured data"!

We showed that LLM agents can autonomously hack mock websites, but can they exploit real-world vulnerabilities?

We show that GPT-4 is capable of real-world exploits, where other models and open-source vulnerability scanners fail.

Paper:

1/7

Bad data can lead to bad models!

@cgnorthcutt, @anishathalye, and Jonas Mueller show that bad data can effectively reduce model capacity by 3x! (2/5)

AI-generated audio is increasingly realistic and is being used for fraud, etc.

We (@kobigurk, @AnnaRRose) show how to fight AI-generated audio with cryptographic techniques! Read more about our attested audio experiment:

And listen:

1/

I had a blast talking at ZkSummit, which was livestreamed. The recording is here; I talk about how zkml can be used and a bit about how we scaled it:

Thanks to @AnnaRRose for hosting such a fun event!

@alexalbert__ @vaibhavk97 Check out our work on exploiting LLMs:

Maybe there are some other ways to bypass GPT-4 content filters!

Had a blast talking to congressional staffers the other day! Lots of excitement on the Hill about AI policy :)

Today, @IllinoisCS Professor Daniel Kang briefed congressional staff about emerging technologies in AI and machine learning at the invitation of the Senate AI Caucus.

I had a great time chatting with @AnnaRRose, @tarunchitra, and @theyisun about ZK + ML!

And stay tuned for an open-source code release in the coming weeks :)

This week, @AnnaRRose and @tarunchitra dive into the topic of ZK ML with guests @theyisun & @daniel_d_kang. They discuss their move into ZK, the fascinating intersection between ZK+ML, and the potentially powerful uses for these combined technologies.

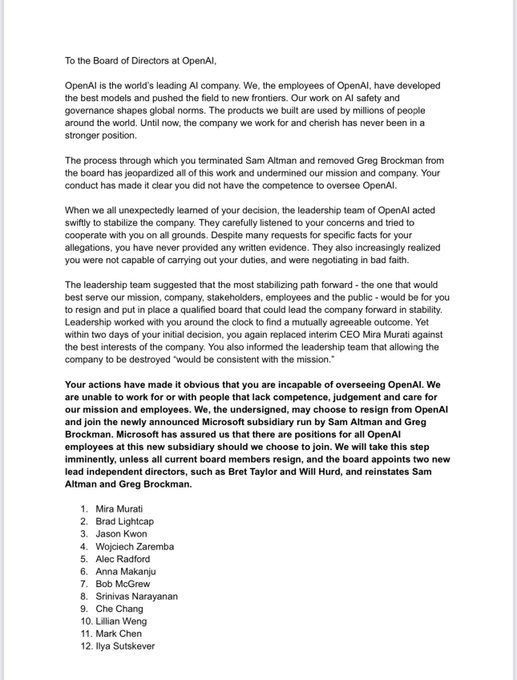

Can someone explain to me like I'm five how any of this makes sense:

1. No safety concerns, no impropriety

2. Ilya is on the board and could have voted to not fire Sam (3-3)

3. Ilya signs this letter

🤔

Not sure how I missed it, but congratulations to my former labmate @kexinrong for the Honorable Mention for the 2022 SIGMOD Jim Gray Doctoral Dissertation Award!!

Kudos to @pbailis and Phil Levis for their amazing advising as well!

I'll also be at ZkSummit giving a talk about zkml at 5:30 PM local time! Say hi if you see me :)

Apparently Twitter hates blog links in the main thread, so check out our blog post here:

ZKML has incredible potential. It could audit Twitter timelines, tackle deepfakes, and even help create transparent ML systems.

@labenz even proposed autonomous lawyers! However, it's too slow and too expensive today.

2/9

It's always astonishing to me how many claims are made about LLMs that have no empirical backing. Love the science in this paper!

tl;dr: LLMs learn real English more easily than "impossible" languages, refuting claims by Chomsky et al.

We always knew that Chomsky was wrong about language models; it’s nice to have a paper showing you just how wrong he was!

#ACL2024 best paper.

This work was done in collaboration with @OpenAI as part of a red-teaming effort. We’d like to thank them for their support!

6/6

This work wouldn't have been possible without @punwaiw, who spearheaded the development!

9/9

@alex_woodie It may be the norm, but I hope that this brings attention to data quality issues in mission-critical settings! Similarly, hopefully ML deployments will start to use tools to vet this data, like LOA :)

I had a blast talking with @labenz on the @CogRev_Podcast about ZK + AI!

AI and crypto: for months I looked for someone who could help me understand how they might interact.

Finally I found that person in @daniel_d_kang.

His application of zero-knowledge cryptographic proofs to AI inference makes it possible to prove that a model has been faithfully

We've updated our estimates of the cost of producing personalized spam with ChatGPT using its new API pricing! A personalized spam email costs as little as $0.00064 with gpt-3.5-turbo, showing the need for better mitigations.

Read more:

Scaling pandas across machines (e.g., for business) is now commonplace, but the lowly single machine is overlooked.

I've been working closely with domain experts (e.g., law profs), for whom even spinning up servers is a huge pain. Dias accelerates pandas workloads on their laptops! 1/

One of our @AddisCoder alums presented his first research paper at an ACL workshop!

@AddisCoder 2018 alum from Bahir Dar (Henok Biadglign Ademtew) just sent me this image: presenting his first research paper at an ACL workshop.

Find the paper here:

@timnitGebru @daniel_d_kang @boazbaraktcs @aclmeeting

Our paper was accepted to #NAACL2024!

@ZhanQiusi1 will be presenting in the ‘Ethics, Bias, and Fairness 2’ session on Monday from 4:00 PM to 5:30 PM in DON ALBERTO 1. Go watch her presentation :)

I'm grateful to my advisors @pbailis, @tatsu_hashimoto, and @matei_zaharia, and to my colleagues at Stanford, who made my PhD possible.

2/4

I'll be speaking in person at @scale_AI on Thursday, April 28th, about finding errors in ML models and in human labels! RSVP here:

And here's a blog post on the topic:

Check out SkyPilot! I've been helping out at Berkeley, and it's amazing to see how helpful it's been for managing cloud jobs.

This is joint work with @akashmittal1795, @conrevo0, @sathyasravya, @tengjun_77, Chenghao Mo, Jiahao Fang, and Timothy Dai.

7/7
