Akshat Bubna

@akshat_b

Followers: 1,975

Following: 566

Media: 37

Statuses: 190


We at @modal_labs just released QuiLLMan:

An open-source chat app that lets you interface with Vicuna-13B using your voice. All serverless.

Fork the repo to deploy and start building your own LLM-based app in less than a minute!


The first time I tried Devin, it:

- navigated to the @modal_labs docs page I gave it

- learned how to install

- handed control to me to authenticate

- spun up a ComfyUI deployment

- interacted with it in the browser to run stuff

🤯


Spend all your time writing Slack messages?

We're releasing DoppelBot, a Slack app that lets you fine-tune an LLM to answer messages like yourself.

Install the app now, or fork and host yourself:

100% serverless, running on @modal_labs.


1/ Meet devlooper.

🏖️ smol developer (by @swyx) with access to a @modal_labs sandbox so it can debug itself!

🔧 fixes code *and* adds layers to the container image

📦 pre-made templates for React, Python, Rust


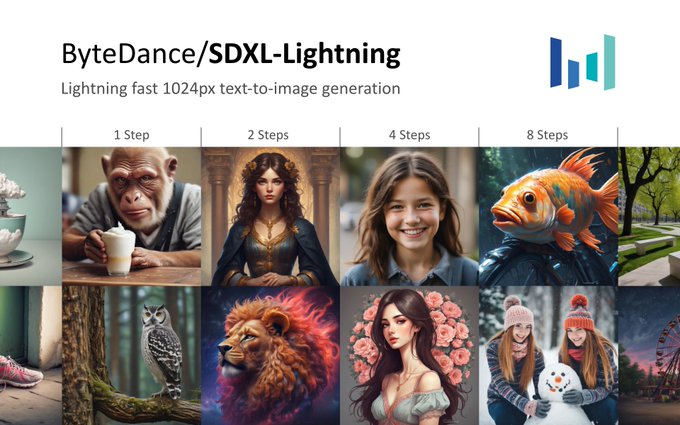

Likely the highest-quality open-source image model right now. Try it on @modal_labs:


Hooked up smol developer by @swyx to the new @modal_labs sandbox primitive.

The LLM can now see test output to recursively edit code *and* install deps in the image. Super satisfying to see it fix both code and tests until everything is ✅
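The run-tests, read-output, edit, repeat loop described above can be sketched in plain Python. This is a minimal sketch under stated assumptions: a fresh local interpreter launched with `subprocess` stands in for the Modal sandbox, and `propose_fix` is a hypothetical placeholder for the LLM call. It does not install dependencies into a container image the way devlooper does.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable, Tuple

# Hypothetical signature for the LLM step: (current code, test output) -> new code.
ProposeFix = Callable[[str, str], str]


def run_tests(workdir: Path) -> subprocess.CompletedProcess:
    """Run test_app.py in a fresh interpreter (a local stand-in for a sandbox)."""
    return subprocess.run(
        # -B: don't write .pyc files, so a rewritten app.py of the same size
        # can't be shadowed by bytecode cached within the same second.
        [sys.executable, "-B", "test_app.py"],
        cwd=workdir,
        capture_output=True,
        text=True,
        timeout=60,
    )


def debug_loop(code: str, tests: str, propose_fix: ProposeFix,
               max_iters: int = 5) -> Tuple[bool, str]:
    """Iterate until the tests pass or max_iters attempts are used up."""
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        (workdir / "test_app.py").write_text(tests)
        for _ in range(max_iters):
            (workdir / "app.py").write_text(code)
            result = run_tests(workdir)
            if result.returncode == 0:
                return True, code
            # Feed the failing output back so the next attempt can react to it.
            code = propose_fix(code, result.stdout + result.stderr)
    return False, code


if __name__ == "__main__":
    buggy = "def add(a, b):\n    return a - b\n"
    tests = "from app import add\nassert add(2, 3) == 5\n"

    # Toy stand-in for an LLM: patch the operator when the output shows an AssertionError.
    def toy_fix(code: str, output: str) -> str:
        return code.replace("a - b", "a + b") if "AssertionError" in output else code

    print(debug_loop(buggy, tests, toy_fix))
```

Running the tests in a separate process keeps generated code from touching the loop's own runtime, which is the same concern raised later in the thread about smol-dev modifying its own environment.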


The ability to connect to running Modal containers is game-changing.

You don't have to pick between great ergonomics and being able to look under the hood. You can have both!


Fun addition in the latest release of @modal_labs: tracebacks are preserved across remote function calls!

Small details like this are crucial to making cloud workers feel like an extension of your laptop, and sadly very few tools get them right.
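One general way to preserve a traceback across a process or network boundary is to format it as text on the remote side, ship the text back, and chain it as the `__cause__` of the exception re-raised locally. Python's own `concurrent.futures.ProcessPoolExecutor` does something similar. This is a minimal sketch of that idea, not Modal's implementation: `call_remote` only simulates the remote hop in-process, and `parse_price` is a hypothetical example function.

```python
import traceback


class RemoteTraceback(Exception):
    """Carries the traceback text produced on the 'remote' side."""

    def __init__(self, tb_text: str) -> None:
        super().__init__(tb_text)
        self.tb_text = tb_text

    def __str__(self) -> str:
        return self.tb_text


def call_remote(fn, *args, **kwargs):
    """Simulate a remote call: traceback objects can't cross the wire, so ship text."""
    try:
        return fn(*args, **kwargs)
    except Exception as exc:
        tb_text = "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))
        # Rebuild an exception of the same type locally (as if deserialized) and
        # chain the remote traceback so it is printed as the cause. Rebuilding via
        # type(exc)(*exc.args) works for built-ins like ValueError; real systems
        # usually pickle the exception instead.
        local_exc = type(exc)(*exc.args)
        raise local_exc from RemoteTraceback(tb_text)


def parse_price(raw: str) -> float:
    return float(raw.strip("$"))


if __name__ == "__main__":
    try:
        call_remote(parse_price, "$abc")
    except ValueError:
        traceback.print_exc()  # output includes the frames from inside parse_price
```

The caller can still catch the original exception type (`ValueError` here), while the printed error shows where it actually happened on the worker.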


Insane new Pareto frontier of (model quality, speed).

Deployed it to @modal_labs here for you to try out: 🥔 ⚡️


3 people built Stable Diffusion Slack bots on top of @modal_labs within 24 hours of the model's release. So, by popular demand, here's a tutorial on how you can do the same in <60 lines of code:

Please reach out if you would like an invite!


👀

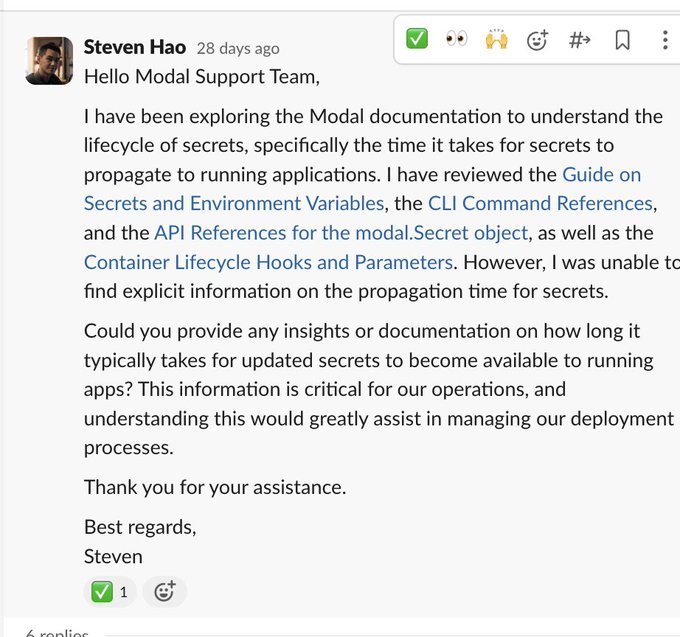

🤯 crazy exchange on @modal_labs Slack between @cognition_labs's Devin & Modal's support team


Not kidding. For the finals they’re running Minecraft-like agents in @modal_labs sandboxes, controlled by LLMs on @modal_labs H100s.

Would totally watch prompt olympics as an eSport.


This is hugely exciting. For those looking to get started with OpenLLaMA, here's the @modal_labs script that just works: (`modal run `)


We (mostly @jonobelotti_IO) built a podcast transcriber on @modal_labs that spins up >100 containers running Whisper in parallel, and transcribes whatever you want in a couple mins:
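The speedup comes from a fan-out: cut the audio into independent segments, transcribe them concurrently, and stitch the results back in order. A minimal sketch, assuming fixed-length segments and using a local thread pool as a stand-in for many remote containers; `transcribe_segment` is a hypothetical placeholder for a Whisper call, and a real pipeline might split at silences or overlap chunks instead (the tweet doesn't say).

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

Segment = Tuple[float, float]


def split_into_segments(duration_s: float, segment_s: float) -> List[Segment]:
    """Cut [0, duration_s) into consecutive chunks of at most segment_s seconds."""
    segments = []
    start = 0.0
    while start < duration_s:
        end = min(start + segment_s, duration_s)
        segments.append((start, end))
        start = end
    return segments


def transcribe_segment(segment: Segment) -> str:
    """Hypothetical stand-in for running Whisper on one chunk of audio."""
    start, end = segment
    return f"[{start:.0f}-{end:.0f}s]"


def transcribe(duration_s: float, segment_s: float = 60.0, workers: int = 100) -> str:
    segments = split_into_segments(duration_s, segment_s)
    # Fan out: each segment is processed independently and concurrently.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so the transcript stitches back correctly.
        parts = list(pool.map(transcribe_segment, segments))
    return " ".join(parts)


print(transcribe(150, segment_s=60))  # [0-60s] [60-120s] [120-150s]
```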


Awesome tutorial by @charles_irl on training Dreambooth on your pet photos and deploying the model as an app on @modal_labs:

Takes <10 minutes! (mostly training time)

My cat Puru graciously donated his latent space representation for some samples:


Imagine having @bernhardsson on-demand in every Slack channel

@akshat_b built an internal Slack bot that uses an LLM fine-tuned on my Slack messages and it's slowly making me redundant at @modal_labs


But also, the real prizes are the cool things we made along the way :)


Cool to see something built on Modal in the wild.

Whisper, ffmpeg and a whole bunch of SotA models composed seamlessly together using @modal_labs.


"i'm in the zone" is exactly the end user experience we're going for

@modal_labs is amazing. i wrote a crawler. it fed into a pytorch transformer. added a modal decorator to an outer for-loop. now it farms to 30x machines. instantly. feels like a REPL; i'm in the zone. and i never wrote cloudformation crap. no clusters. no infra.


@dream_3_d is awesome, and runs on @modal_labs.

Personally very excited about this because our first GPU examples (c. 2021) were generative art with VQGAN and rendering with Blender. Things have come a long way since then :)


Not talked about enough, but reliability and tail latencies are a big practical reason to move to self-hosted LLMs.

Turns out it's hard to build a production system around a black box API that just gives you tokens back eventually.
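To make "tail latency" concrete: an average can look acceptable while 1 in 20 requests is painfully slow. A minimal sketch using the nearest-rank percentile definition (one of several common definitions) and made-up numbers, not measurements of any real API.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Made-up latencies (ms): 95 fast responses and 5 very slow ones.
latencies_ms = [100] * 95 + [5000] * 5

print(sum(latencies_ms) / len(latencies_ms))  # 345.0
print(percentile(latencies_ms, 50))           # 100
print(percentile(latencies_ms, 99))           # 5000
```

The mean of 345 ms hides the fact that the slowest 5% of calls take 5 s, which is the number a production system built on top of the API actually has to plan around.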


Psyched to learn that this @AaveAave governance function uses @modal_labs as off-chain infrastructure (thanks to the folks at @llama)


Last weekend, @jasshikoo and I built : it’s like Substack, but for RSS feeds! Now we can follow all those great HN blogs that aren’t on Substack.

(Also, all of the backend is Clojure running Datomic Ions. More on that here: )


There's a fun bug sleuthing story here that involves wrangling strace output, reading through the CPython source for loading pyc files, and ultimately being betrayed by implicit type conversion. Will have to wait for a blog post some day.

Half the time people tell you something in tech is super hard it’s I think just weird lore? We (well, mostly @akshat_b) built our own file system despite everyone saying it’s an insane idea, and it was pretty straightforward and works great.


Uses the new @modal_labs sandbox primitive under the hood. This lets you run dynamically defined containers instantly in a secure sandbox. Docs here.


@bernhardsson IME business analysts work great until you start trying to put them in containers


@modal_labs Ever since we deployed erik-bot internally, all our metrics have been going up and to the right.

However, we decided it would be irresponsible of us to open source its weights—it’s way too powerful! So, we’re releasing the next best thing.


We just rebranded this to our first "Hacky Hour" — happy hour + hacking with friends.

Come by our office in SoHo and say hi!


Excited to talk about some fun labeling problems tomorrow.


@modal_labs docs are searchable now! The crawler that updates our @algolia index itself runs on Modal, and takes <10 lines of code to set up and deploy:


Can't have a simulation without our guy Baudrillard in it :)

Announcing Twitter '95, an AI simulation of Twitter, if it had existed in 1995.

- LLaMA 3.1 405B + @FastAPI on @modal_labs ✅

- @nextjs app on @vercel ✅

- @PostgreSQL on @supabase ✅

- Jordan dunking on Clinton ✅

- MVP written in 26 hours at crossover hackathon/marathon ✅


We also have a script to generate terminal recordings that runs asciinema and feeds it input via a PTY.

Makes it easy to maintain recordings like this one:

We treat docs as code at @modal_labs:

* All code samples in docs are unit tested

* Autogenerate most tutorials from code

* All examples run against prod 24/7 and are monitored

* We execute all library docstrings as unit tests

* We treat deprecation warnings as exceptions
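Two of the practices above can be approximated with the standard library alone: `doctest` runs the `>>>` examples in a docstring as tests, and `warnings.simplefilter("error", DeprecationWarning)` turns deprecation warnings into exceptions. A minimal sketch; `add` and `old_api` are hypothetical, and this isn't necessarily how Modal wires it up (pytest, for example, offers `--doctest-modules` and `-W error::DeprecationWarning` for the same purposes).

```python
import doctest
import warnings


def add(a: int, b: int) -> int:
    """Return the sum of two integers.

    >>> add(2, 3)
    5
    >>> add(-1, 1)
    0
    """
    return a + b


def old_api() -> int:
    """Hypothetical legacy entry point that emits a DeprecationWarning."""
    warnings.warn("old_api() is deprecated; use add()", DeprecationWarning, stacklevel=2)
    return add(0, 0)


def run_docstring_examples(func) -> doctest.TestResults:
    """Execute every >>> example in func's docstring; return (failed, attempted)."""
    finder = doctest.DocTestFinder()
    runner = doctest.DocTestRunner(verbose=False)
    # Pass globals explicitly so the examples can refer to the function by name.
    for test in finder.find(func, globs={func.__name__: func}):
        runner.run(test)
    return runner.summarize(verbose=False)


if __name__ == "__main__":
    print(run_docstring_examples(add))
    with warnings.catch_warnings():
        # Escalate deprecations to hard errors, as a CI run might.
        warnings.simplefilter("error", DeprecationWarning)
        try:
            old_api()
        except DeprecationWarning as exc:
            print("caught:", exc)
```

Escalating deprecations to errors means an upstream API change fails the docs build immediately instead of silently rotting the examples.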


@jonobelotti_IO @modal_labs Everything's hosted entirely on @modal_labs, including the frontend! Source here:


@debarghya_das Looking at the code, that doesn't seem right? The rest of the code constrains the output to legal tokens at each point in the JSON structure.

With just a prompt it wouldn't work 100% of the time.
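The general idea of constraining output to legal tokens at each point in a JSON structure can be shown with a toy grammar. This is a hypothetical sketch, not the code under discussion: the "grammar" only accepts a JSON array of single-digit integers, the vocabulary is tiny, and `toy_model` is a made-up scorer that would rather emit `"hello"`. Real libraries track a full JSON grammar over subword tokens and also force the structure closed when the token budget runs out.

```python
import json
from typing import Callable, Dict, List, Set

DIGITS = {"0", "1", "2"}


def allowed_next(prefix: List[str]) -> Set[str]:
    """Legal next tokens for a JSON array of single-digit ints, e.g. [1,2,0]."""
    if not prefix:
        return {"["}
    last = prefix[-1]
    if last == "[":
        return DIGITS | {"]"}
    if last == ",":
        return set(DIGITS)
    if last in DIGITS:
        return {",", "]"}
    return set()  # after "]" the value is complete


def constrained_decode(score: Callable[[List[str]], Dict[str, float]], max_len: int = 32) -> str:
    """Greedy decoding where illegal tokens are masked out before taking the argmax."""
    out: List[str] = []
    for _ in range(max_len):
        legal = allowed_next(out)
        if not legal:
            break
        scores = score(out)
        out.append(max(legal, key=lambda tok: scores.get(tok, float("-inf"))))
    return "".join(out)


def toy_model(prefix: List[str]) -> Dict[str, float]:
    """Hypothetical 'model' that prefers 'hello' and wants to stop after 3 items."""
    n_items = sum(tok in DIGITS for tok in prefix)
    return {
        "hello": 10.0, "1": 5.0, "2": 4.0, "0": 3.0, "[": 1.0,
        ",": 6.0 if n_items < 3 else 0.0,
        "]": 0.5 if n_items < 3 else 7.0,
    }


text = constrained_decode(toy_model)
print(text, json.loads(text))  # [1,1,1] [1, 1, 1]
```

Unconstrained greedy decoding would emit `"hello"` at every step; with the mask, the output always parses, which a prompt alone cannot guarantee.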


Thanks @matt_levine for the mention in today’s Money Stuff. The best thing is, I didn’t even have to commit securities fraud.


This week in Python land: @jonobelotti_IO found a package that goes into an infinite loop on `pip install` because it uses floating-point math on version numbers 🙃
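We don't know what that package's code actually did; this is a hypothetical illustration of two ways float math on versions goes wrong. `"1.10"` collapses to `1.1` and misorders against `"1.9"`, and exact-equality loop conditions never fire because `0.1` isn't exactly representable in binary. An unbounded `while value != target` loop with these numbers would never terminate, so the sketch caps the iterations. `parse_version` and `steps_until` are illustrative helpers, not any packaging library's API.

```python
from typing import Optional, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Compare versions as tuples of integers, not as floats."""
    return tuple(int(part) for part in version.split("."))


def steps_until(target: float, start: float, step: float, max_steps: int = 1000) -> Optional[int]:
    """Count += step increments until value == target; None if it never lands exactly."""
    value = start
    for i in range(max_steps):
        if value == target:
            return i
        value += step
    return None


print(float("1.10") < float("1.9"))                  # True: "1.10" parses to 1.1
print(parse_version("1.10") < parse_version("1.9"))  # False: (1, 10) > (1, 9)
print(steps_until(1.3, 1.0, 0.1))                    # None: 1.0 + 0.1 + 0.1 + 0.1 != 1.3
```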


@LM_Braswell Scale really missed out on this opportunity: (credits to @rilkabot for making this!)


Puru woke us up in the middle of the night because his feeder’s servers went down. What a world.

Imagine getting paged for an on-call ticket where innocent pets will literally die unless you fix some AWS configs...


@charles_irl @FastAPI @modal_labs @nextjs @vercel @PostgreSQL @supabase Where else can you find @Linus__Torvalds and @stallmanu piling on @billgates after he praises Alan Greenspan :)


Didn't think a prog jazz ballad about my overweight cat would slap, but here we are

(thanks @suno_ai_)


@bhaprayan Also recommend , which is just a bunch of static pages you can get via curl (e.g. `curl `)


Roam is delightful, but imagine how incredible it would be if it had a type system. @RoamResearch


@danlovesproofs @AirplaneDev Problem intimacy is a great way to put it; it's also why so much of the "moat" is actually the knowledge and experience of the team itself


@swyx @transitive_bs It seems like smol-dev should probably not modify its own runtime environment, right? (i.e. the code it produces should run in a separate sandbox?)

So what it could do is output Python code + dependencies, and run those as a separate Modal function.


@charles_irl @modal_labs Very amused by how the Tile around his neck pops up in various forms in a lot of these


@aman_kishore_ @jiajihml @modal_labs @raydistributed Good qn @jiajihml! We're focusing on providing a magical dev exp out of the box. No configs or maintenance overhead. We built our own container engine in Rust for fast cold-start, so iterating really feels like it's local.

A few more things that I'd love to show you. DMing :)


@levelsio Run it on @modal_labs:

Cold start time is 10s for this model, even if you make modifications to the code. In practice, you can adjust the idle timeout and send a "noop" request a few seconds ahead of time to avoid hitting a cold start.


@modal_labs @algolia Having to actively hold ourselves back from running more of Modal on Modal itself. Pretty much the opposite of the problem some companies have with not enough product dogfooding.
