Alexander Kolesnikov

@__kolesnikov__

Followers: 4,838 · Following: 175 · Media: 29 · Statuses: 380

Staff Research Scientist at @googledeepmind, Zürich. I like making things simpler. Co-creator of BiT, ViT, MLP-Mixer, UViM, SigLIP, PaliGemma.

Zurich

Joined January 2019


Also an interesting survey on MLP-Mixer and concurrent/follow-up research:

Crazy all of it happened in ~6 months only.


Our PaliGemma technical report is finally out: .

We share many insights that we learned while cooking the PaliGemma-3B model, both about pretraining and transfer.


Concurrent Mixer-like model, but applied to NLP and with fixed token-mixing MLPs with Fourier features. When writing the Mixer paper, we looked at our learned params and @tolstikhini had an immediate reaction: "looks like Fourier". We then thought of doing exactly the same thing later.


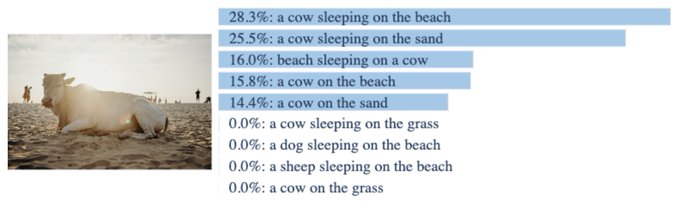

Plot twist: the model uses an LM pretrained on the whole internet and, in particular, it read and memorized our paper showing CIFAR10 test annotation mistakes. As a result, it got to 100% on a noisy test set.


We had a similar observation when doing preliminary investigations for . Vanilla REINFORCE with 2 samples (one for baseline, the other for the update) is good enough to steer vision models in the right direction.

PPO has been cemented as the de facto RL algorithm for RLHF.

But… is this reputation + complexity merited?🤔

Our new work revisits PPO from first principles🔎

📜

w/ @chriscremer_ @mgalle @mziizm @KreutzerJulia Olivier Pietquin @ahmetustun89 @sarahookr
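A minimal sketch of the two-sample REINFORCE estimator described above, on a toy categorical policy over a few discrete actions (not a vision model or an LM). One sample drives the update and the reward of the other serves as its baseline; because the two samples are independent, subtracting that baseline keeps the estimate unbiased. The function names, the toy reward and the learning rate are illustrative assumptions.

```python
import math
import random
from typing import Callable, List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_two_sample_grad(logits: List[float],
                              reward_fn: Callable[[int], float],
                              rng: random.Random) -> List[float]:
    """Two-sample REINFORCE estimate of d E[reward] / d logits.

    Sample `a_update` drives the update; the reward of an independent
    sample `a_base` is used as its baseline.
    """
    probs = softmax(logits)
    a_update, a_base = rng.choices(range(len(logits)), weights=probs, k=2)
    advantage = reward_fn(a_update) - reward_fn(a_base)
    # For a softmax policy, d log pi(a) / d logits = onehot(a) - probs.
    return [advantage * ((1.0 if i == a_update else 0.0) - p)
            for i, p in enumerate(probs)]


# Usage: gradient ascent on a toy 3-action problem where only action 2 is rewarded.
def reward(action: int) -> float:
    return 1.0 if action == 2 else 0.0


rng = random.Random(0)
logits = [0.0, 0.0, 0.0]
for _ in range(500):
    grad = reinforce_two_sample_grad(logits, reward, rng)
    logits = [z + 0.5 * g for z, g in zip(logits, grad)]
print(softmax(logits))  # most of the probability mass should now be on action 2
```

Note that whenever both samples get the same reward, the update is exactly zero, so the learning signal only comes from pairs that disagree.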


And now we fell victim ourselves: the absolutely great DALLE 2 paper does not cite the ViT paper🥲.

@giffmana On this topic, it's funny how some things are too well known to cite (they are just part of common language, and often lower-cased), but not others. Adam really hit a sweet spot, being nearly ubiquitous and cited in most usages. My guess is it's partially in the name.


@karpathy The number of iterations is not enough when there is a batch dimension of some sort. For example, in vision the "number of images seen" is by far the best proxy for measuring training duration in the large-scale regime.
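A minimal sketch of that bookkeeping (the function names are hypothetical): training duration is measured as steps × batch size, so a run with a smaller batch needs proportionally more steps to see the same number of images.

```python
def images_seen(steps: int, batch_size: int) -> int:
    """Total number of training examples processed."""
    return steps * batch_size


def steps_for_budget(num_images: int, batch_size: int) -> int:
    """Steps needed to process `num_images` at a given batch size (rounded up)."""
    return -(-num_images // batch_size)


# Two runs with the same "duration" when measured in images seen:
budget = images_seen(steps=10_000, batch_size=4096)   # 40,960,000 images
print(steps_for_budget(budget, batch_size=1024))      # 40000 steps
```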


@sherjilozair @evgeniyzhe It can be quite good. The T5 paper uses such a learning rate: . And I often use it; e.g. here we even have a section (3.5) about it.
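Assuming the schedule under discussion is the inverse-square-root one (the referent is not visible in this reply, so this is a guess): the T5 paper uses a learning rate of 1/sqrt(max(n, k)), where n is the training step and k = 10^4 warm-up steps, i.e. constant for the first k steps and decaying afterwards. A minimal sketch; the function name is hypothetical.

```python
import math


def rsqrt_lr(step: int, warmup_steps: int = 10_000) -> float:
    """Inverse-square-root schedule: 1 / sqrt(max(step, warmup_steps))."""
    return 1.0 / math.sqrt(max(step, warmup_steps))


print(rsqrt_lr(0))        # 0.01, held constant for the first 10k steps
print(rsqrt_lr(40_000))   # 0.005, i.e. halved after 4x as many steps
```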


PaliGemma VLM was pre-trained with segmentation tasks and has the ability to produce dense masks. This capability needs some extra complexity related to (de)tokenization of segmentation masks and we have not documented it well yet.

But @skalskip92 has it all figured out 👇

I finally managed to fine-tune PaliGemma on the custom segmentation dataset

most of you have probably noticed that I've been spamming all sorts of PaliGemma tutorials for the past few weeks; I have one more

shoutout to @__kolesnikov__ for all the help!

↓ read more + code


@FrancescoLocat8 @giffmana ArXiv's 1-3 day publication cycle is too slow given the current research pace. Publishing in-flight Overleaf projects is the future.


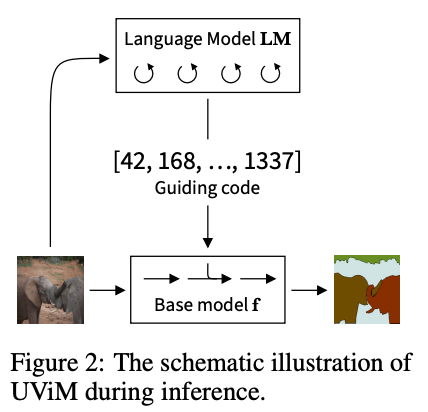

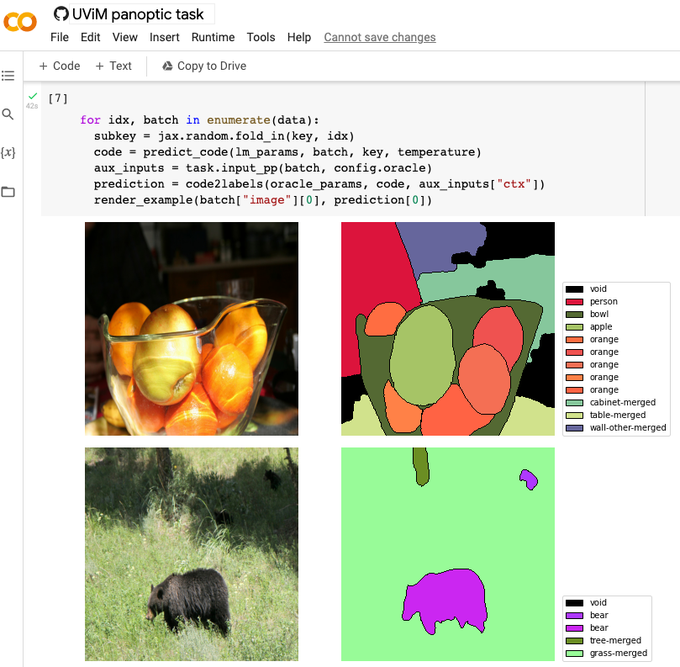

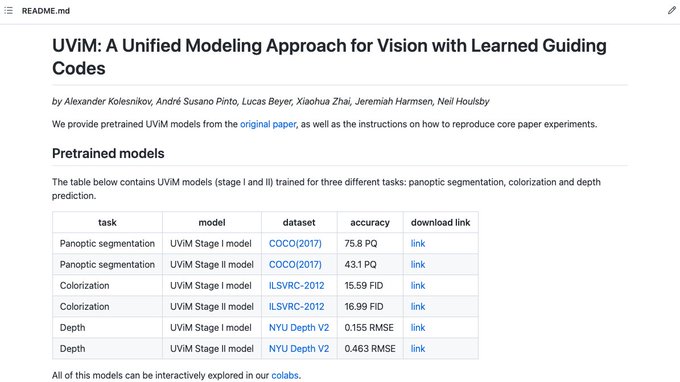

Check out our paper for more: .

The code will be made available at , stay tuned.

This is joint work with André Susano Pinto, @giffmana, @XiaohuaZhai, @JeremiahHarmsen and @neilhoulsby.


Incredible to see people already doing useful stuff with PaliGemma by fine-tuning it on custom data. Great progress, @skalskip92!

@__kolesnikov__ Awesome! After a bit of trial and error, I managed to fine-tune the model using a custom object detection dataset. The Google Colab works great.

I have one more problem. How can I save a fine-tuned model? Really sorry if that's a stupid question, but I'm new to JAX and Flax.


Are we still making meaningful progress on ImageNet? What happens if we carefully re-annotate the ImageNet val set? How can we improve ResNet-50 top-1 accuracy by 2.5% by cleaning the training data and using a different loss function? See our new paper for the answers: .

Are we done with ImageNet? That's what we set out to answer in with @olivierhenaff @__kolesnikov__ @XiaohuaZhai @avdnoord. Answer: it's complicated. On the way, we find a simple technique for +2.5% on ImageNet.


and after 7 years I can still tell in 0.1 seconds that these are PixelCNN++ samples


Thank you @ISTAustria and @hetzer_martin for the award; I am deeply honored to receive it. And thank you to @thegruel for being an exceptionally great and supportive PhD supervisor.

@__kolesnikov__ receives the @istaustria Alumni Award 2023! “This recognition holds special significance for me, as ISTA has played a pivotal role in shaping me as a scientist”. Congratulations and we are excited to see what comes next for you.


Finally, a Google AI Blog post about BiT, our state-of-the-art visual model. And to show that we are serious, we release code in three deep learning frameworks: TF2, PyTorch and Jax. Check it out:


At ICCV and curious about semi-supervised learning with self-supervision? Come to our talk today at 15:20 in Hall D1 or chat with us at poster #20 from 15:30 to 18:00. , Code: . Joint work with @XiaohuaZhai, @avitaloliver and @giffmana.


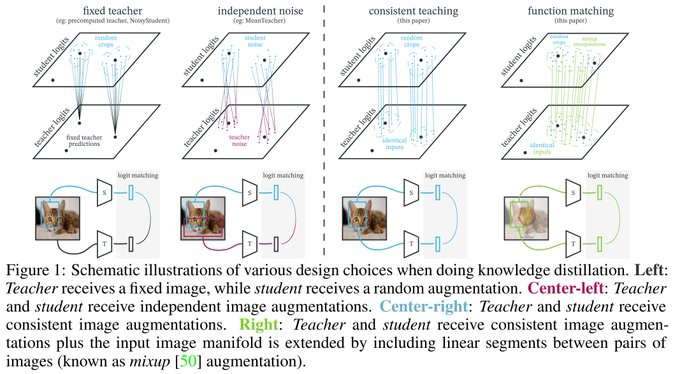

It turns out that distillation yields wildly different results depending on subtle choices. But if done right, it works consistently well for model compression, e.g. it results in ~83% on ImageNet with a ResNet-50. What does "right" mean? Check out or a 🧵 from @giffmana 👇

So you think you know distillation; it's easy, right?

We thought so too with @XiaohuaZhai @__kolesnikov__ @_arohan_ and the amazing @royaleerieme and Larisa Markeeva.

Until we didn't. But now we do again. Hop on for a ride (+the best ever ResNet50?)

🧵👇
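For reference, a minimal NumPy sketch of the basic distillation objective: the student is trained to match the teacher's (optionally temperature-softened) class distribution via KL(teacher || student). This is only the generic loss, not the recipe from the paper; the "subtle choices" the thread is about (for example, showing teacher and student the same augmented view and training for long schedules) are not reproduced here, and the names and temperature default are illustrative.

```python
import numpy as np


def log_softmax(logits: np.ndarray, temperature: float = 1.0) -> np.ndarray:
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits: np.ndarray, teacher_logits: np.ndarray,
                      temperature: float = 1.0) -> float:
    """Mean KL(teacher || student) over the batch."""
    log_p_t = log_softmax(teacher_logits, temperature)
    log_p_s = log_softmax(student_logits, temperature)
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s), axis=-1)
    return float(kl.mean())


rng = np.random.default_rng(0)
teacher = rng.normal(size=(4, 10))   # (batch, classes)
student = rng.normal(size=(4, 10))
print(distillation_loss(student, teacher))  # > 0; zero only if the distributions match
```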


Check out a thread on our recent image-text project from @giffmana.

My personal tl;dr: when learning a joint embedding space for image-text (e.g. CLIP-style), you are much better off by *freezing* an image embedding that was pre-trained on the standard image data like ImageNet.


That is an appealing perspective on ViT and Mixer. Nevertheless, I think it really misses the point. There is a lot to unpack here, so let's do a thread(🧵).

@ykilcher @GoogleAI @neilhoulsby @giffmana @__kolesnikov__ This only really works when you have access to JFT-300M and Google money/compute. As EfficientNetV2 showed, if you have constraints (e.g. training efficiency, Fig 1; model size, FLOPs, and inference latency, Fig 5), use the EfficientNetV2 network instead of a "crap architecture".


This is a nice idea and also a perfect excuse to advertise my quite old paper, where we propose almost exactly the same: a method to categorise class maps into "objects" or "distractors" with virtually zero human supervision (few clicks per class):

📢 We now release "Salient ImageNet", a dataset with "core" and "spurious" masks for entire ImageNet!

Website:

with @sahilsingla47, @MLMazda

Such a richly annotated dataset can be useful for model debugging, generalization, interpretation, etc. 1/n


Waiting for timm to follow the trend ;)

🔥JAX meets Transformers🔥

@GoogleAI's JAX/Flax library can now be used as Transformers' backbone ML library.

JAX/Flax makes distributed training on TPU effortless and highly efficient!

👉 Google Colab:

👉 Runtime evaluation:


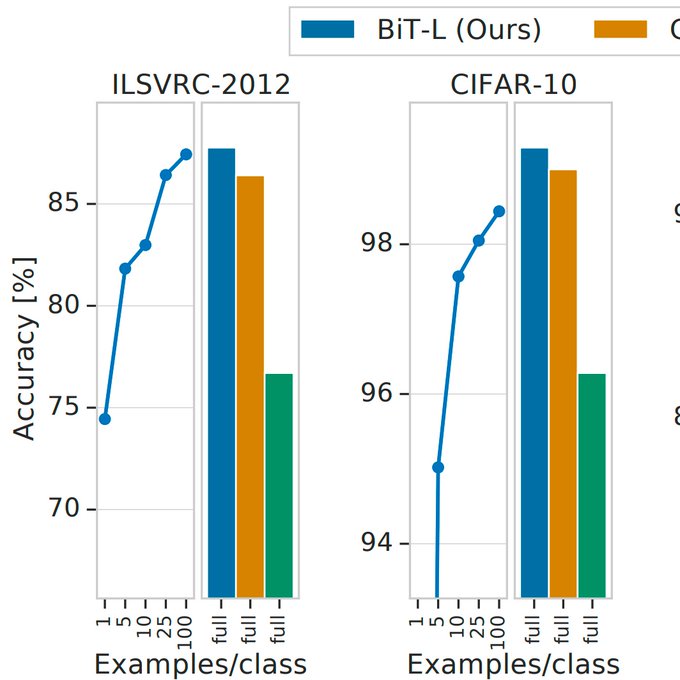

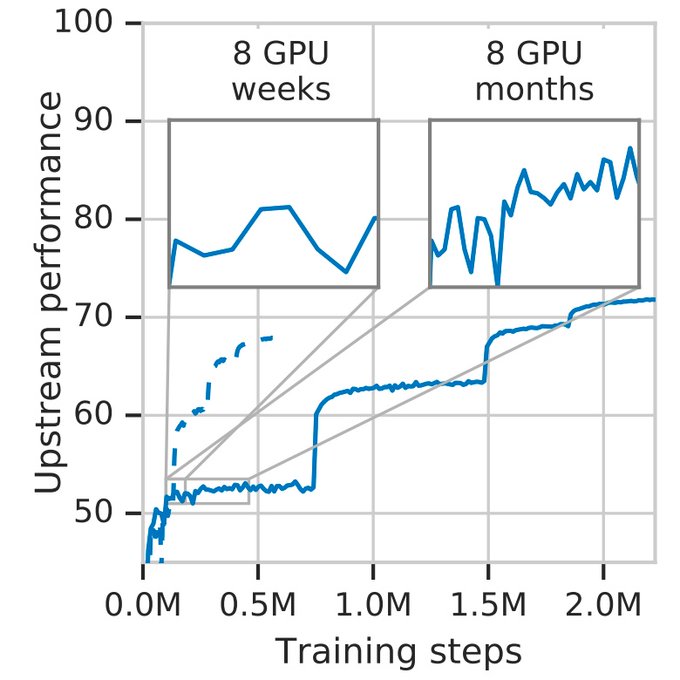

Training models at scale beyond ImageNet is challenging. We devise a recipe for learning visual representations from >100M images. Besides the "obligatory" SOTA on ImageNet/CIFAR, we get great results in low-data-regime transfer with a single hyper-param!


@karpathy The question is partially addressed here (as a by-product of studying the effect of the batch size): . For example, for ImageNet they show that until the batch size becomes huge, the number of images seen is the only thing that matters:


Happy to see that my past "ImageNet SOTA" academic exercises turned out to be beneficial for real and useful vision applications. Deep down, I was not sure that would ever be the case.

New paper from our team @GoogleHealth/@GoogleAI () Pre-training at scale improves AI accuracy, generalisation + fairness in many medical imaging tasks: Chest X-Ray, Dermatology & Mammography! Led by @_basilM, @JanFreyberg, Aaron Loh, @neilhoulsby, @vivnat


PaliGemma being used as intended 👇

I fine-tuned my first vision-language model

PaliGemma is an open-source VLM released by @GoogleAI last week. I fine-tuned it to detect bone fractures in X-ray images.

thanks to @mervenoyann and @__kolesnikov__ for all the help!

↓ read more


Nice PaliGemma tutorial, enjoyed watching it.

A comment regarding your struggles with getting good mAP for detecting multiple objects: it is a known limitation of all log-likelihood generative models and we wrote a whole paper on how to address it: .


It was indeed a great year (in science at least), thanks to all the amazing collaborators who made this happen: @giffmana @XiaohuaZhai @joapuipe @JessicaYung17 @sylvain_gelly @neilhoulsby Alexey Dosovitskiy @dirkweissenborn @TomUnterthiner @m__dehghani @MJLM3 Georg Heigold @kyosu.

I always see people humble-brag on Twitter. What happens if I just plain shameless-brag? Will a black hole appear under my feet? Let's find out! Two of my papers with code (BiT + ViT) are in the top 10 of 2020, yay! Let's keep up that trajectory @__kolesnikov__ @XiaohuaZhai et al. :)


We have released very strong image-text contrastive models from the SigLIP paper . Check out this colab: .
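A minimal NumPy sketch of the pairwise sigmoid loss that gives SigLIP its name: every image-text pair in the batch is scored independently as a binary match/non-match problem, instead of a softmax over the batch as in CLIP. As I understand the SigLIP paper, embeddings are L2-normalised, logits are t·(x·y) + b with a learnable temperature t and bias b (initialised to 10 and -10 there); in this sketch they are fixed arguments, and the function name is hypothetical.

```python
import numpy as np


def siglip_loss(img_emb: np.ndarray, txt_emb: np.ndarray,
                t: float = 10.0, b: float = -10.0) -> float:
    """Pairwise sigmoid loss over an (N, D) batch of matching image/text embeddings."""
    x = img_emb / np.linalg.norm(img_emb, axis=-1, keepdims=True)
    y = txt_emb / np.linalg.norm(txt_emb, axis=-1, keepdims=True)
    logits = t * x @ y.T + b                 # (N, N): every image vs. every text
    labels = 2.0 * np.eye(len(x)) - 1.0      # +1 for matching pairs, -1 otherwise
    # -log(sigmoid(z)) == log(1 + exp(-z)), computed stably with logaddexp.
    return float(np.sum(np.logaddexp(0.0, -labels * logits)) / len(x))


emb = np.eye(4)                  # toy embeddings: image i matches text i
shuffled = emb[[1, 2, 3, 0]]     # texts misaligned with images
print(siglip_loss(emb, emb) < siglip_loss(emb, shuffled))   # True
```

Since each pair is an independent binary term, the loss needs no batch-wide normalisation, which is what makes it convenient to scale.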


This is joint work with @ASusanoPinto, @YugeTen, @giffmana and @XiaohuaZhai, done at @GoogleAI, Brain Team Zurich.


A novel interpretation of VAE as non-linear PCA.

We looked into visual disentanglement mathematically. Turns out the canonical (beta-)VAE (by accident) does what one would want from deep PCA! Accepted to #cvpr2019. #deepPCAexists


@yoavgo GroupNorm works equally well in vision, and it does not depend on the batch dimension. I doubt this explanation is correct.
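To make the batch-independence point concrete, here is a minimal NumPy sketch of GroupNorm for NHWC tensors (without the learnable per-channel scale and offset): mean and variance are computed per sample, over spatial positions and the channels within each group, so a sample's output is the same whether it is normalised alone or inside a batch.

```python
import numpy as np


def group_norm(x: np.ndarray, num_groups: int, eps: float = 1e-5) -> np.ndarray:
    """GroupNorm for an NHWC tensor, without the learnable scale/offset."""
    n, h, w, c = x.shape
    assert c % num_groups == 0, "channels must be divisible by num_groups"
    g = x.reshape(n, h, w, num_groups, c // num_groups)
    # Statistics per sample and per group: reduce over H, W and channels-in-group,
    # never over the batch axis.
    mean = g.mean(axis=(1, 2, 4), keepdims=True)
    var = g.var(axis=(1, 2, 4), keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(n, h, w, c)


rng = np.random.default_rng(0)
x = rng.normal(size=(3, 4, 4, 8))
batched = group_norm(x, num_groups=4)
alone = group_norm(x[1:2], num_groups=4)   # the same sample, normalised on its own
print(np.allclose(batched[1:2], alone))    # True: no dependence on other samples
```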


Work done with @tolstikhini, @neilhoulsby, @giffmana, @XiaohuaZhai, @TomUnterthiner, @JessicaYung17, @keysers, @kyosu, @MarioLucic_, Alexey Dosovitskiy. And special thanks to Andreas Steiner for outstanding support with open-sourcing.


SigLIP in timm 🎉

Thanks @wightmanr!

Don't forget to update your timm version to the latest (0.9.8+)! The SigLIP support is via a timm backbone and can be loaded in both timm and OpenCLIP from the same @huggingface hub models.


There are likely some rough edges in the revamped code that we will improve over time. Glad to see big_vision is recognised by the leading OSS engineers: . Gives us additional motivation to keep big_vision up-to-date with the latest advances.


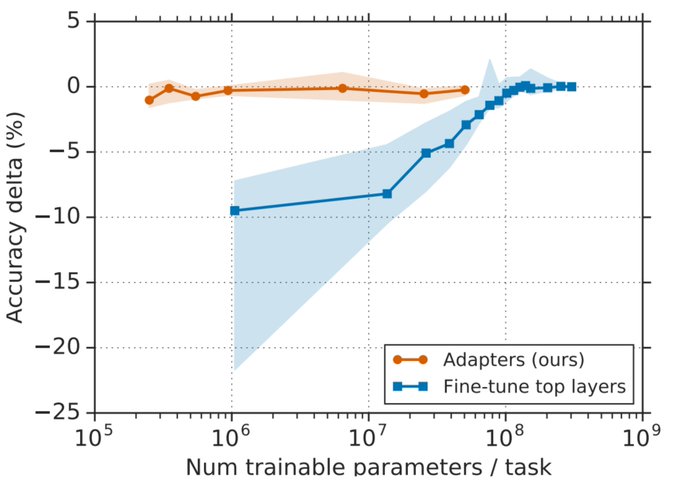

Impressive demonstration of how to successfully adapt the BERT model for solving custom NLP tasks by tuning a small amount of parameters.

It turns out that only a few parameters need to be trained to fine-tune huge text transformer models. Our latest paper is on arXiv; work from @GoogleAI Zürich and Kirkland. #GoogleZurich #GoogleKirkland
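A minimal NumPy sketch of the bottleneck-adapter idea behind this kind of parameter-efficient fine-tuning: a small down-project, nonlinearity, up-project module with a residual connection is inserted into each layer, and only these adapter weights (plus, typically, layer norms and the task head) are trained while the pretrained weights stay frozen. The sizes are illustrative (768 is BERT-base's hidden size), and zero-initialising the up-projection so the adapter starts as an exact identity is my simplification of the paper's near-identity initialisation.

```python
import numpy as np


class Adapter:
    """Bottleneck adapter: x + relu(x @ W_down + b_down) @ W_up + b_up, per token."""

    def __init__(self, d_model: int, bottleneck: int, rng: np.random.Generator):
        self.w_down = rng.normal(0.0, 1e-3, size=(d_model, bottleneck))
        self.b_down = np.zeros(bottleneck)
        # Zero-initialised up-projection: the adapter starts as the identity map.
        self.w_up = np.zeros((bottleneck, d_model))
        self.b_up = np.zeros(d_model)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        h = np.maximum(x @ self.w_down + self.b_down, 0.0)
        return x + h @ self.w_up + self.b_up

    def num_params(self) -> int:
        return sum(p.size for p in (self.w_down, self.b_down, self.w_up, self.b_up))


rng = np.random.default_rng(0)
adapter = Adapter(d_model=768, bottleneck=64, rng=rng)
x = rng.normal(size=(2, 10, 768))        # (batch, tokens, features)
print(np.allclose(adapter(x), x))        # True at initialisation
print(adapter.num_params())              # 768*64 + 64 + 64*768 + 768 = 99136
```

The trainable parameter count scales with the bottleneck width, so it stays small relative to the frozen transformer layer it is attached to.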


Self-supervision is effective for semi-supervised learning. Check out our work () with @XiaohuaZhai, @avitaloliver and @giffmana for details.

Want to turn your self-supervised method into a semi-supervised learning technique? Check out our S⁴L framework ()!

Work done at @GoogleAI with @avitaloliver, @__kolesnikov__ and @giffmana.
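A minimal NumPy sketch of the rotation pretext task that S4L-style training builds on: each image is rotated by 0, 90, 180 and 270 degrees and the network is asked to predict which rotation was applied. As I understand the S4L setup, this self-supervised loss is computed on all images (labeled and unlabeled) and added to the usual supervised loss on the labeled subset; the helper name is hypothetical and the loss wiring is omitted.

```python
import numpy as np


def rotation_batch(images: np.ndarray):
    """Builds the 4-way rotation pretext batch.

    images: (N, H, W, C) with H == W.
    Returns (4N, H, W, C) rotated copies and rotation labels in {0, 1, 2, 3}
    (multiples of 90 degrees), grouped by rotation.
    """
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in range(4)]
    labels = np.repeat(np.arange(4), images.shape[0])
    return np.concatenate(rotated, axis=0), labels


imgs = np.arange(2 * 5 * 5 * 3, dtype=float).reshape(2, 5, 5, 3)
rot_imgs, rot_labels = rotation_batch(imgs)
print(rot_imgs.shape, rot_labels.tolist())   # (8, 5, 5, 3) [0, 0, 1, 1, 2, 2, 3, 3]
```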


All powered by the genius jax.Array and jax.jit APIs, which were recently revised to support global sharded computations. Shout-out to the jax developers for doing such great work: @yashk2810 @jakevdp @froystig @SingularMattrix (and more, but that is everyone I found on X).


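@skalskip92 You can use for this:

```python
import numpy as np
import big_vision.utils as bv_utils

# `params` is assumed to hold the fine-tuned parameter tree.
# tree_flatten_with_names returns ([(name, array), ...], treedef).
flat, _ = bv_utils.tree_flatten_with_names(params)
with open("ckpt.npz", "wb") as f:
  np.savez(f, **{k: v for k, v in flat})
```

And then load:

```python
params = bv_utils.load_checkpoint_np("ckpt.npz")
```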


big_vision is joint work with @giffmana, @XiaohuaZhai and myself, with many great contributions from Brain team members. Special shout-out to @AndreasPSteiner for making a great optax-based optimizer library for big_vision.


This code upgrade was done together with my @GoogleDeepMind colleagues @giffmana @ASusanoPinto @AndreasPSteiner @XiaohuaZhai.


@stanislavfort @sarahookr Shameless plug: my colleague @ibomohsin has also extended scaling laws further to infer a model's optimal shape (width, depth, etc.): . We use these laws routinely.


@norpadon This paper also prescribes how it can be done in a principled way: . And it has many more gems.


@giffmana An exponential spike in space garbage that will prevent or hinder space exploration is a real scenario:


@roydanroy @askerlee This was a joke, though I would not rule out that a well-done hybrid of an LLM and an image recognition model will do this when prompted appropriately.


@skalskip92 Yes! The prefix (aka prompt) looks like this:

`detect: red car ; cat ; yellow bus`

The corresponding suffix (aka output) is:

`<loc0072><loc0003><loc0905><loc1019> red car ; <loc0272><loc0140><loc0821><loc0851> cat ; <loc0378><loc0003><loc0424><loc0069> yellow bus`
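A minimal sketch of building such a prefix and turning the suffix back into boxes. It assumes the PaliGemma convention as documented in big_vision: each box is four `<locXXXX>` tokens in the order y_min, x_min, y_max, x_max, each a bin index out of 1024 along the image height or width. The regexes, function names and the (x_min, y_min, x_max, y_max) pixel output format are my own choices, not part of PaliGemma's API.

```python
import re
from typing import List, Tuple

# Four consecutive <locNNNN> tokens followed by a label (up to the next ";" or "<").
_DETECTION = re.compile(r"((?:<loc\d{4}>){4})\s*([^;<]+)")
_LOC = re.compile(r"<loc(\d{4})>")

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def detection_prefix(labels: List[str]) -> str:
    """Builds a prompt such as 'detect: red car ; cat ; yellow bus'."""
    return "detect: " + " ; ".join(labels)


def parse_detections(suffix: str, width: int, height: int) -> List[Tuple[str, Box]]:
    """Parses '<loc..>x4 label ; ...' into (label, pixel box) pairs."""
    detections = []
    for locs, label in _DETECTION.findall(suffix):
        y_min, x_min, y_max, x_max = (int(v) / 1024 for v in _LOC.findall(locs))
        box = (x_min * width, y_min * height, x_max * width, y_max * height)
        detections.append((label.strip(), box))
    return detections


suffix = ("<loc0072><loc0003><loc0905><loc1019> red car ; "
          "<loc0272><loc0140><loc0821><loc0851> cat ; "
          "<loc0378><loc0003><loc0424><loc0069> yellow bus")
for label, box in parse_detections(suffix, width=1024, height=1024):
    print(label, box)
```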


It turns out that massively scaling up model size and training data results in a drastic jump of image classification accuracy in a challenging real-life setup. See our updated paper for details and additional surprising results that include low-data eval:


Check out our TensorFlow 2 tutorial: fine-tuning recent state-of-the-art BiT visual models () without pain (and BatchNorm!) on your data of choice.


@skalskip92 We generally encourage using tensorstore in big_vision (see the `tssave` and `tsload` functions in big_vision), as it scales much better for really big checkpoints. That is why the numpy utils are not very prominent, but numpy should be good enough for PaliGemma.


@karpathy In my personal experience, I was able to scale the batch size even further without taking any losses. That said, at some point this nice relation seems to break, and it seems to hold best for ImageNet-like tasks.
