Xiaohua Zhai

@XiaohuaZhai

Followers: 3,411 · Following: 243 · Media: 41 · Statuses: 263

Senior Staff Research Scientist @GoogleDeepMind team in Zürich

Zürich, Switzerland

Joined March 2013

📢📢 I am looking for a student researcher to work with me and my colleagues at Google DeepMind Zürich on vision-language research.

It will be a 24-week, 100% onsite position in Switzerland. Reach out to me (xzhai@google.com) if interested.

Bonus: amazing view🏔️👇

6

28

241

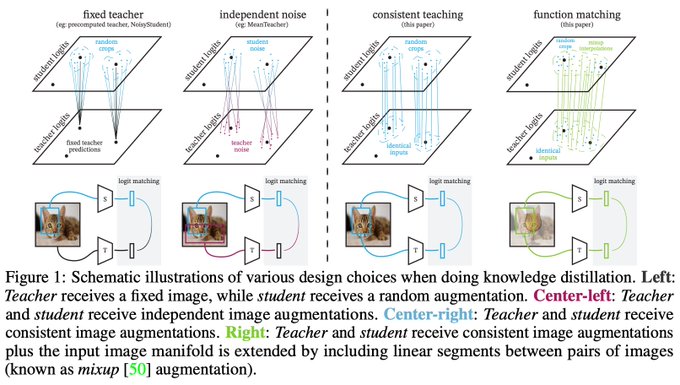

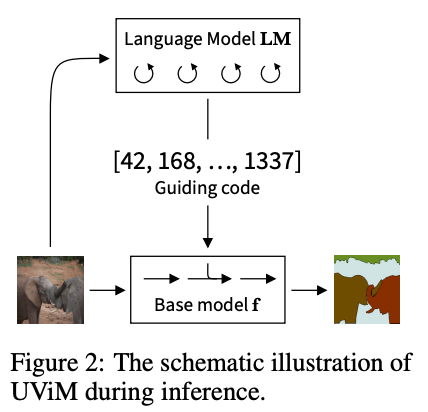

Want to turn your self-supervised method into a semi-supervised learning technique? Check out our S⁴L framework!

Work done at @GoogleAI with @avitaloliver, @__kolesnikov__ and @giffmana.

2

35

116

I'm excited to share PaliGemma, an open vision-language model that can be fine-tuned within 20 minutes.

You'll be impressed by how far it goes with a batch size of only 8 and just 64 steps. Try it out yourself, with your free Google Colab account and T4 GPU:

We’re introducing new additions to Gemma: our family of open models built with the same technology as Gemini.

🔘 PaliGemma: a powerful open vision-language model

🔘 Gemma 2: coming soon in various sizes, including 27 billion parameters

→

#GoogleIO

26

127

658

0

14

94

Locked-image Tuning (LiT🔥) is an alternative method to fine-tuning. It turns any pre-trained vision backbone into a zero-shot learner!

LiT achieves 84.5% 0-shot acc. on ImageNet and 81.1% 0-shot acc. on ObjectNet, and it's very sample efficient.

Arxiv:

2

16

67

We are excited to release LiT🔥 (Locked-image Tuning) models. Want to try out LiT models on your own problems? Check out #lit_demo first, and please tweet fun examples!

Demo:

Models:

Arxiv:

2

7

56

Though we didn't release the PaLI-3 models, we have released multilingual and English SigLIP Base, Large and So400M models to .

Thanks to @wightmanr, SigLIP models are now available on Hugging Face🤗

Try them out!

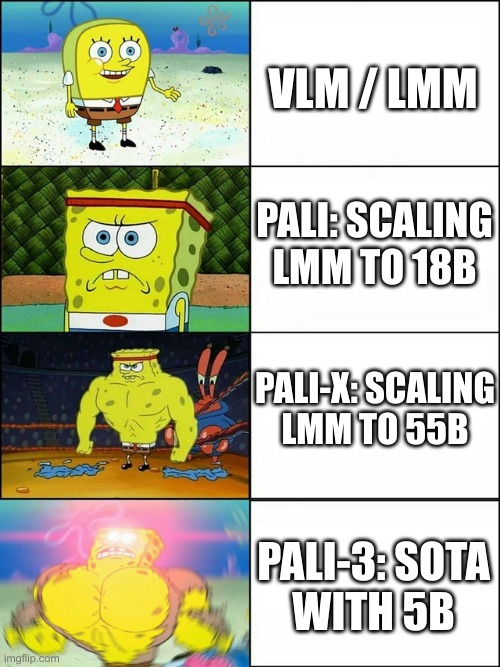

🧶PaLI-3 achieves SOTA across many vision-language (and video!) tasks while being 10x smaller than its predecessor PaLI-X.

At only 5B parameters, it's also smaller (and stronger) than the concurrent Fuyu-8B model, though sadly we cannot release the model (props to @AdeptAILabs)

9

59

445

1

8

48

Loss != Metric? Check our paper on optimizing directly for your vision problem with complex metrics.

A typical example is reward tuning for pix2seq style object detection: it improves the mAP from 39% (without tricks) to 54% on COCO with a ViT-Base architecture.

0

7

48

I recommend that the authors check Fig. 8 of the BiT paper. CIFAR-10 contains some wrong ratings, so even an expert may not reach 100% on it. Either there is a bug in their eval code, or the model has seen all the test examples.

1

3

43

PaliGemma is now available in KerasNLP👏

0

4

23

We present the Multi-Modal Moment Matching (M4) algorithm to reduce both representation and association biases in multimodal data.

This not only creates a more neutral CLIP model, but also achieves competitive results (SigLIP B/16 INet 0-shot 77.0% -> 77.5%!) Check the thread👇

Excited to share our #ICLR2024 paper, focused on reducing bias in CLIP models. We study the impact of data balancing and come up with some recommendations for how to apply it effectively. Surprising insights included! Here are 3 main takeaways.

3

23

88

0

7

37

I'm excited to co-organize an #IJCV special issue on The Promises and Dangers of Large Vision Models with @kaiyangzhou, @liuziwei7, @ChunyuanLi, @kate_saenko_.

If you are working on related topics, consider submitting a paper; the deadline is in 163 days!

Link:

2

11

26

big_vision just released a shiny new flexible weight sharding feature.

It's a game changer if you want to train giant models using a small number of accelerators. We have upgraded all of our models in big_vision, please check below 🧵👇👇👇

0

3

26

I just checked our online LiT demo, and it's doing a reasonable job for this case, with only the smallest "tiny" model.

Please try our demo with your own prompts if you are interested in seeing whether it understands language at "a deep level".

Demo:

🙄 @GoogleAI, “a deep level understanding”?

Seriously?!

Your system can’t distinguish “a horse riding an astronaut” from “an astronaut riding a horse”.

🙄

16

6

94

3

2

24

Check out our SOTA vision BiT models; code and checkpoints are available in TensorFlow, PyTorch and JAX:

0

1

16

Milestone achieved: "How to train your ViT" is the first TMLR paper ever accepted! Big thanks to the TMLR reviewers and the action editor!

Wohoo, we achieved something nobody ever will again in all of human history: our paper is the FIRST accepted TMLR paper 🍾🥂

@AndreasPSteiner @__kolesnikov__ @XiaohuaZhai @wightmanr @kyosu

(technically, every single paper has such achievement for "Nth", but let me have my fun)

11

12

191

0

0

13

Thinking of saturating performance on classification with large vision models? We thought of that too, until we tested V+L tasks.

Scaling from ViT-G (2B) to ViT-e (4B), with @giffmana @__kolesnikov__, PaLI got a big boost on V+L tasks. It shows headroom for even larger models!

1

3

11

I will be giving a talk with @giffmana at the 5th UG2+ challenge workshop at CVPR'22.

If you are working on anything related to robust object detection, segmentation or recognition, consider participating in this challenge. Check out for more details.

0

5

10

Share examples that exist only in your mother tongue to help us test the OSS SigLIP models.

I came up with delicious Chinese dishes in our SigLIP colab; please share yours!

I wanna test our i18n OSS SigLIP model more, so I need your help with ideas!

What are things in your mothertongue that, translated literally, are super weird? @MarianneRakic, a fellow Belgian, came up with some great francophone ideas.

More plz! Colab:

14

4

20

0

1

9

Want to try it out? Follow the instructions to create your own @googlecloud TPU VMs and set up everything within 30 minutes!

We link the up-to-date executable command line at the top of each config file, for your convenience.

3/n

1

0

8

Merve made a nice summary for PaliGemma in HuggingFace:

And… also prepared a nice colab for object detection and segmentation with PaliGemma:

New open Vision Language Model by @Google: PaliGemma 💙🤍

📝 Comes in 3B, pretrained, mix and fine-tuned models in 224, 448 and 896 resolution

🧩 Combination of Gemma 2B LLM and SigLIP image encoder

🤗 Supported in @huggingface transformers

Model capabilities are below ⬇️

8

70

430

1

2

8

Arxiv:

A benchmark comprising 90 scaling-law evaluation tasks will be released soon, stay tuned!

Work done with @ibomohsin and @bneyshabur. @GoogleAI

0

0

7

We would like to invite academic researchers to perform research using PaliGemma, please apply at

We intend to support selected researchers with a grant of USD $5,000 in GCP credits

Email paligemma-academic-program@google.com if you have any questions.

2

1

6

Congratulations to my colleague from Google Brain Zurich for the ICML 2019 Best Paper award!

I am excited and honored that we received the #ICML2019 Best Paper Award with "Challenging Common Assumptions in the Unsupervised Learning of Disentangled Representations". W/ @francescolocat8, S. Bauer, @MarioLucic_, @grx, @sylvain_gelly, @bschoelkopf

14

65

452

0

0

6

Please check out Alex's thread for more details:

Paper: .

Github (coming soon):

Work done with @__kolesnikov__, André Susano Pinto, @giffmana, @JeremiahHarmsen, @neilhoulsby.

0

0

6

The Big Vision codebase is developed by @giffmana @__kolesnikov__ @XiaohuaZhai, with contributions from many other colleagues in the @GoogleAI Brain team.

8/n

0

0

6

Check @giffmana's summary of what we have done for OSS Vision with our amazing close collaborators!

We always have OSS at the top of our mind, from releasing the I21K models since BiT, to releasing the WebLI pre-trained SigLIP models very recently.

0

0

5

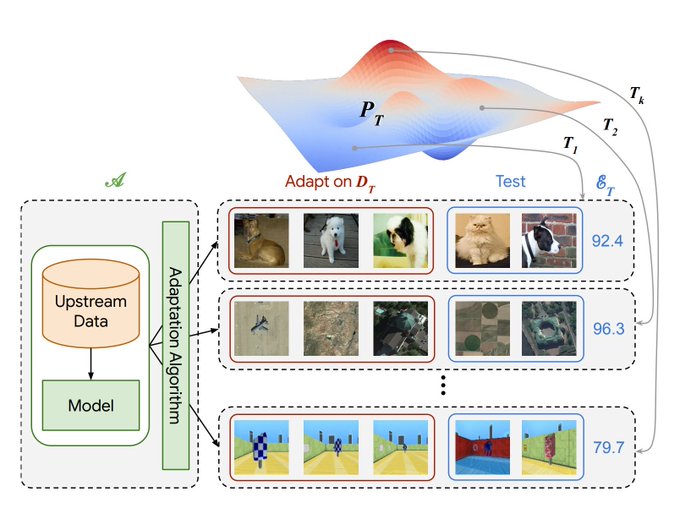

Check out our Visual Task Adaptation Benchmark, with a large-scale visual representation study on {generative, from-scratch, self-supervised, semi-supervised, supervised} models. Try it on GitHub with TF-Hub models!

How effective is representation learning? To help answer this, we are pleased to release the Visual Task Adaptation Benchmark: our protocol for benchmarking any visual representations () + lots of findings in .

@GoogleAI

1

59

212

0

1

5

As always, work done at Google Research, Brain Team, with @giffmana, @royaleerieme, Larisa Markeeva, @_arohan_ and @__kolesnikov__.

0

0

5

SigLIP meets Mistral!

Announcing Nous Hermes 2.5 Vision!

@NousResearch's latest release builds on my Hermes 2.5 model, adding powerful new vision capabilities thanks to @stablequan!

Download:

Prompt the LLM with an Image!

Function Calling on Visual Information!

SigLIP

51

170

993

0

1

5

To train multilingual PaLI, @brainshawn drove the building of the multilingual WebLI dataset, with over 10 billion images and more than 100 languages!

This helps a lot on the XM3600 benchmark, though English still performs the best. This suggests that more multilingual research is needed!

1

1

5

Unreasonable patience is needed for the distillation (Step 3), far beyond the standard expectations... And it will pay off.

0

0

5

In this typographic attack example, LiT is able to properly recognize that it's "an apple with a sticker with the text ipod". Please try your own prompts with #lit_demo 👇👇

LiT-tuned models combine powerful pre-trained image embeddings with a contrastively trained text encoder. Check out our blogpost and the 🔥 interactive demo ↓↓↓ #lit_demo

7

110

592

0

1

4

We pretrain PaliGemma on billion-scale data, covering long-tail concepts. This makes the models well prepared for fine-tuning transfer or a short instruction tuning.

Check the following colab, and consider using PaliGemma for your VLM research!

1

0

4

Check out our paper for the answer to "where are we on ImageNet?" and "what are the remaining mistakes?". Based on our insights, simply removing the noisy train labels and using sigmoid cross-entropy loss yields a 2.5% improvement on ImageNet with ResNet50.

Are we done with ImageNet? That's what we set out to answer in with @olivierhenaff @__kolesnikov__ @XiaohuaZhai @avdnoord. Answer: it's complicated. On the way, we find a simple technique for +2.5% on ImageNet.

2

33

95

0

2

4
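The difference between the usual softmax loss and the sigmoid cross-entropy loss mentioned above can be sketched in a few lines of plain Python. This is an illustrative sketch only, not the paper's training code: the function names and example logits are made up for the illustration. The point it shows is that the sigmoid variant scores each class independently, so an image is not forced to have exactly one correct label.

```python
import math


def softmax_cross_entropy(logits, label):
    """Single-label loss: all classes compete through one shared normalizer."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[label]


def sigmoid_cross_entropy(logits, targets):
    """Multi-label loss: every class is its own binary yes/no problem."""
    total = 0.0
    for x, t in zip(logits, targets):
        # Numerically stable form of -[t*log(s(x)) + (1-t)*log(1-s(x))],
        # where s is the logistic sigmoid.
        total += max(x, 0.0) - x * t + math.log1p(math.exp(-abs(x)))
    return total


# Hypothetical logits for an image in which two classes are both plausible.
logits = [2.0, 1.5, -3.0]
print(softmax_cross_entropy(logits, label=0))
print(sigmoid_cross_entropy(logits, targets=[1.0, 1.0, 0.0]))
```

With the sigmoid form, the target vector may mark several classes as present at once, which the softmax form cannot express.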

Work done at Google Research, Brain Team Zürich, with @__kolesnikov__, @neilhoulsby and @giffmana.

0

0

4

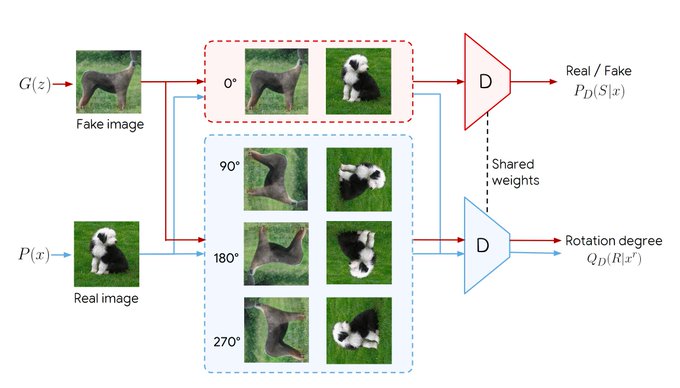

Check our latest CVPR work on GANs with self supervision!

Combining two of the most popular unsupervised learning algorithms: Self-Supervision and GANs. What could go right? Stable generation of ImageNet *with no label information*. FID 23. Work by Brain Zürich to appear in CVPR. @GoogleAI #PoweredByCompareGAN

0

47

158

0

0

3

Check Alex's thread for all the useful links:

I'm so impressed to see that @skalskip92 has already created a customized fracture detector with PaliGemma using colab.

@__kolesnikov__ Awesome! After a bit of trial and error, I managed to fine-tune the model using a custom object detection dataset. The Google Colab works great.

I have one more problem. How can I save a fine-tuned model? Really sorry if that's a stupid question, but I'm new to JAX and Flax.

3

3

16

3

0

3

Work done at @GoogleAI Brain Team Zürich, with @__kolesnikov__, @giffmana, @joapuipe, @JessicaYung17, @sylvain_gelly, @neilhoulsby.

0

0

3

SigLIP and mSigLIP models are developed by @XiaohuaZhai, @_basilM, @__kolesnikov__ and @giffmana, from Google DeepMind Zürich. Huge thanks to all contributors behind the WebLI dataset and the big_vision codebase.

0

0

3

@wightmanr @bryant1410 You’re right. We are on it, but cannot promise anything at the moment :) I’m really excited to see the current SigLIP models being adopted!

0

0

2

Work done at Google Research (Brain Team) in Zurich and Berlin, with a great team: @tolstikhini @neilhoulsby @__kolesnikov__ @giffmana @TomUnterthiner @JessicaYung17 @keysers @kyosu @MarioLucic_ Alexey Dosovitskiy.

0

0

1

As usual, work done with an amazing team based in Zürich: @giffmana @AndreasPSteiner @_basilM @brainshawn @__kolesnikov__ @keysers @GoogleAI

1

0

2

We have deployed the PaliGemma models on Cloud Vertex AI Model Garden, which makes research with PaliGemma extremely smooth on Google Cloud platform.

1

0

2

More updates with a blog about "How to Fine-tune PaliGemma for Object Detection Tasks"

I fine-tuned my first vision-language model

PaliGemma is an open-source VLM released by @GoogleAI last week. I fine-tuned it to detect bone fractures in X-ray images.

thanks to @mervenoyann and @__kolesnikov__ for all the help!

↓ read more

30

194

1K

0

0

2

Research with code on disentanglement from my colleagues at Google Brain Zurich.

Interested in doing research on disentanglement? We just open-sourced disentanglement_lib, the library we built for our study “Challenging Common Assumptions in the Unsupervised Learning of Disentangled Representations” @GoogleAI

3

53

144

0

0

1

Training SOTA GANs with self supervision and semi supervision!

How to train SOTA high-fidelity conditional GANs using 10x fewer labels? Using self-supervision and semi-supervision! Check out our latest work at @GoogleAI @ETHZurich @TheMarvinRitter @mtschannen @XiaohuaZhai @OlivierBachem @sylvain_gelly

1

67

241

0

0

1

We care about i18n performance too. That's why we created the i18n WebLI dataset in the first place.

An mSigLIP Base model achieves 42.6% text-to-image 0-shot retrieval recall@1 across 36 langs on XM3600. This is on par with the current SOTA using a Large model.

1

0

1

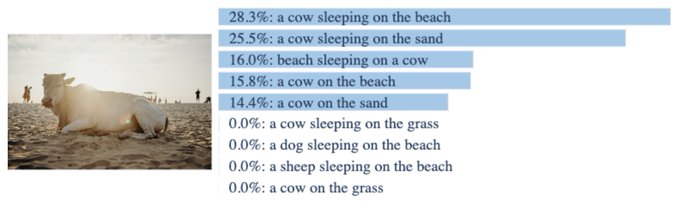

Check out the demo prepared by @giffmana: SigLIP is able to tell the difference between "a cold drink on a hot day" and "a hot drink on a cold day".

SigLIP is doing great on our favorite "a cow on the beach" example.

Try to slide the "Bias" bar to calibrate scores online!

1

0

5
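For readers wondering what sliding a "Bias" bar could mean, here is a minimal sketch. It assumes (this is an assumption, not something the demo's code is quoted as doing) that each image-text pair is scored independently as sigmoid(temperature * cosine_similarity + bias), so the bias simply shifts every score up or down. The function names, embeddings and parameter values below are hypothetical.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so the dot product is a cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def pair_score(image_emb, text_emb, temperature, bias):
    """Independent match probability for one image-text pair (assumed form)."""
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logit = temperature * sum(a * b for a, b in zip(img, txt)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical 2-d embeddings; real models use hundreds of dimensions.
image = [0.9, 0.1]
caption = [0.8, 0.3]
for bias in (-12.0, -10.0, -8.0):
    # A larger bias raises the score of every pair by the same logit offset.
    print(bias, pair_score(image, caption, temperature=10.0, bias=bias))
```

Because each pair is scored on its own rather than normalized against the other captions, the scores do not have to sum to one, which is why a single global offset is enough to recalibrate them.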

@AarishOfficial The Chinchilla paper partially answers your question in the language domain: the optimal model size under a given compute budget. Their Table A9 lists concrete model shapes for various model sizes.

1

0

1

@wightmanr @giffmana @_basilM @__kolesnikov__ Thanks for sharing, Ross. Your B/32 @ 256 (64) results are pretty strong! We will consider it for SigLIP as well :)

1

0

1

Check our ICML 2019 work from Google Brain Zurich. Code available online:

Our work "A Large-Scale Study on Regularization and Normalization in GANs" has been accepted to #ICML2019! Great to see thorough and independent scientific verification being valued at the top ML venue. @icmlconf @MarioLucic_ @XiaohuaZhai @sylvain_gelly @GoogleAI

3

24

119

0

0

1