Rex "garbage in" Douglass Ph.D.

@RexDouglass

Followers: 5K · Following: 11K · Media: 435 · Statuses: 11K

Applied Scientist in Industry. Previously UCSD. Princeton PhD. Follow me for recreational methods trash talk.

Joined September 2013

I got falsely diagnosed once with a disease so rare I would have literally been a named case. I was showing the doctor a Bayesian calculator at urgent care, begging him to think for a second.

Well, 4 out of 5 doctors can't answer a basic question about statistics, even at Harvard. Medical professionals tend to have an incredible amount of crystallized knowledge, but often aren't actually that intelligent.
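
The tweets don't say which question the doctors were asked. As a hedged illustration only, here is the usual textbook shape of such a base-rate question with assumed numbers: a prevalence of 1 in 1,000, a test that never misses the disease, and a 5% false-positive rate. `posterior_positive` is a hypothetical helper name. The calculation itself is just Bayes' rule.

```python
def posterior_positive(prevalence: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(disease | positive test) via Bayes' rule."""
    # Total probability of a positive result:
    # P(pos) = P(pos | disease) P(disease) + P(pos | healthy) P(healthy)
    p_positive = sensitivity * prevalence + false_positive_rate * (1 - prevalence)
    return sensitivity * prevalence / p_positive


# Assumed illustrative inputs, not taken from the tweets.
p = posterior_positive(prevalence=1 / 1000, sensitivity=1.0, false_positive_rate=0.05)
print(f"P(disease | positive) = {p:.3f}")
```

With these assumed inputs the posterior comes out near 2%, not 95%. For a disease rare enough to make you a named case, the prevalence is far lower still, so a single positive result is even weaker evidence.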

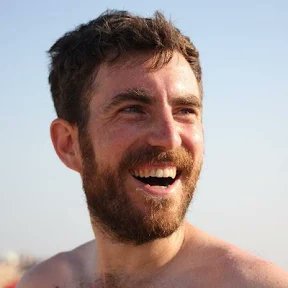

I'll give this answer again for why PhDs are mentally taxing. You spend 6 years discovering exactly what you are incapable of. /n

Pretty strong evidence of negative mental health effects of doing a PhD. Recent working paper by @EvaRanehill, @annahsandberg, Sanna Bergvall, and Clara Fernström. Paper link:

@tcarpenter216 This is one of those cases where, when priors and ev disagree this much, it's actually more likely that some other assumption is wrong, and now we're really unsure about everything.

Here's the breakdown:
- 90% of all papers are too vague to even be wrong. It would take years of work to get them to the point of being wrong.
- 9% are clearly wrong. They draw stronger than reasonable conclusions from weak ev/designs.
- 1% should impact your beliefs at all, probably weakly.

@thezahima It was communicable, and he ordered me to quarantine at home and wait for labs to come back. I basically told him he was an idiot and that I would comply, but wanted something for the not-insane thing on his differential, and he gave me a Z-Pak and stormed out. Better the next day.

All of the action in censorship is in self policing. The censor creates uncertainty which forces the target to alter their behavior. The censor keeps the rules ambiguous so the target can never be safe and the censor can decide after the act. You now have to pay protection rents.

@sebkrier talking about extreme censorship opens the overton window to little censorship. planting the idea of prison for memes is supposed to make us think 'it could be worse'. i don't think the elites really want to censor everything. but they REALLY need to censor some things.

FWIW, American PhD programs have an insane structure. You take a 24-year-old who just barely survived comps and who hasn't published a single paper yet, much less a good one, and then give them a 3-year paid book sabbatical. What did you expect to happen?

You then spend 6 years finding out exactly how you're going to fall short of those expectations. Every single day, even if you make amazing, unbelievable progress, you are narrowing the confidence bands further away from what you thought you were going to be capable of.

The only way to even think coherently about a modeling approach is to get it into DAG form. The reader should not have to do this. I've gotten it DAGified here below. Given that structure we can start to think about whether it's identified or not:

Just published in @JPubEcon: "How does parental divorce affect children’s long-term outcomes?" By @WFrimmel (@jku_econ), @HallaMartin (@WU_econ), @EbmerWinter (@IHS_Vienna).
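
A minimal sketch of why the DAG decides identification, using a hypothetical three-node graph (C → T, C → Y, T → Y) with made-up coefficients. This is not the model from the divorce paper. It only shows that the same regression is biased or unbiased depending on which arrows you assume. The `ols` helper is an illustrative name.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# Hypothetical DAG: C -> T, C -> Y, T -> Y. The true effect of T on Y is 2.0.
c = rng.normal(size=n)
t = 1.5 * c + rng.normal(size=n)
y = 2.0 * t + 3.0 * c + rng.normal(size=n)


def ols(y, *regressors):
    """Least-squares coefficients (intercept first) via numpy.linalg.lstsq."""
    X = np.column_stack([np.ones_like(y), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


naive = ols(y, t)[1]        # backdoor path T <- C -> Y left open
adjusted = ols(y, t, c)[1]  # conditioning on C blocks the backdoor path
print(f"naive: {naive:.2f}, adjusted: {adjusted:.2f}")
```

With these assumed coefficients, the naive slope lands well above 2 because it absorbs part of C's effect, while the adjusted slope recovers roughly 2. Leaving the C → T arrow out of the DAG is the implicit assumption that would make the naive regression look fine.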

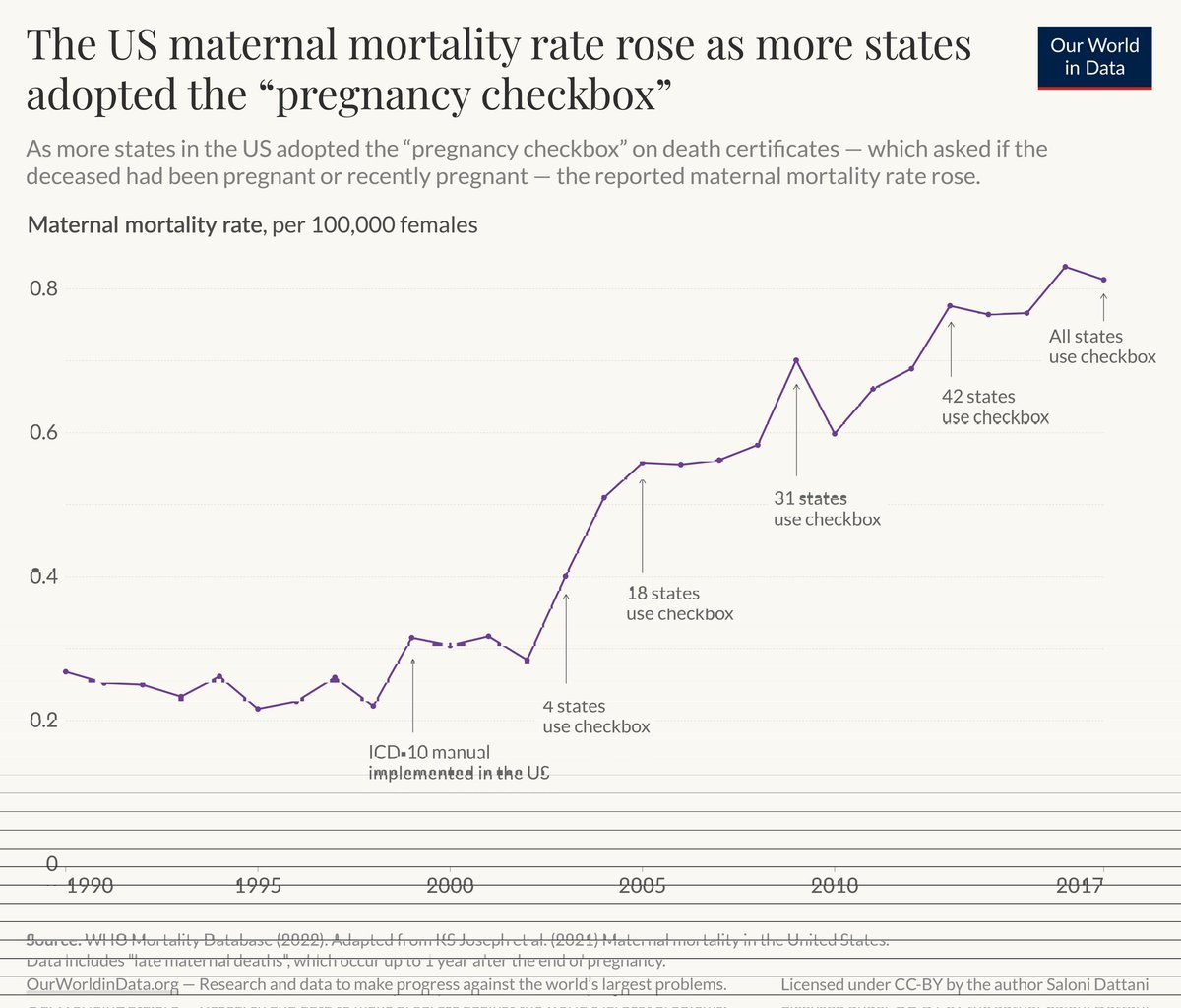

Almost every panel data paper sneaks in an implicit assumption that the reporting mechanisms remain constant over time and units. That's never, ever, true. The author just doesn't know how it changed, and the reader is forced to try to guess.

This is the most compelling case I’ve seen against the idea that smartphones are causing a mental health epidemic among teens. Apparently Obamacare included a recommended annual screening of teen girls for depression and HHS also mandated a change in how hospitals code injuries.

Doctors are primarily walking decision trees. They aren't doing long run Bayesian updating on you specifically. They're throwing you down a plinko board and sending you to the next station or home. A false negative on a test is a death sentence.

Here’s a fun related story. I went to the ER with severe stomach pain a few years ago. I googled and one of the most likely explanations is appendicitis. They do MRI. No appendicitis. I say “Isn’t appendicitis bacterial? And therefore subject to exponential growth rates? So it.

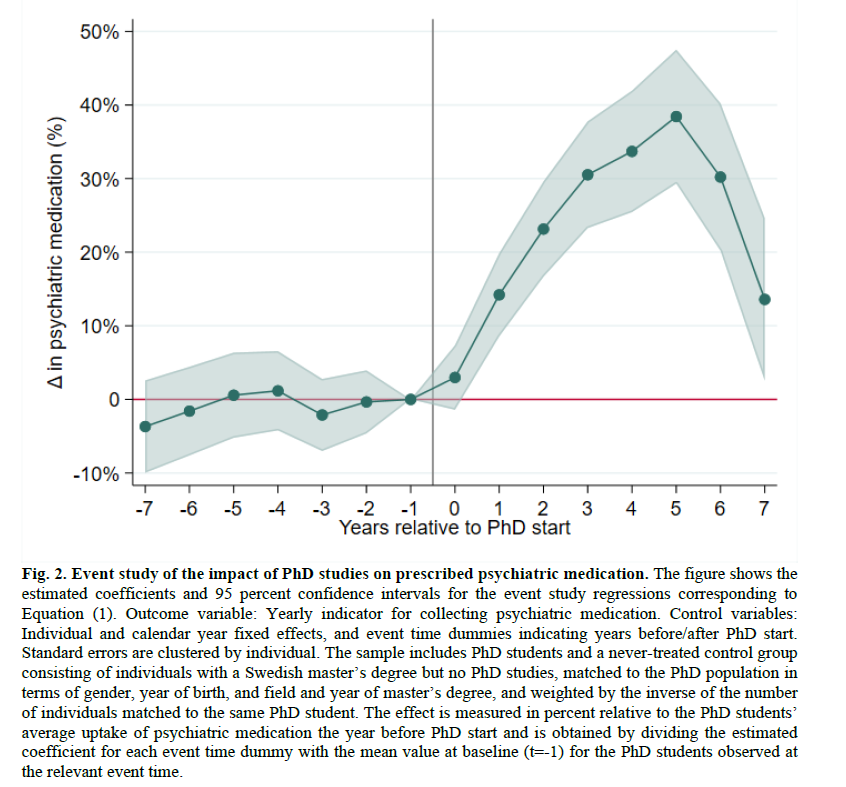

People think anonymous peer review is critical. It's not. It's performative. Nobody is checking your code or data. You drop a strategically crafty natural language narrative with a 70pg appendix on 3 rando volunteers. And then venue shop until you get friendlies. That's not real.

For what it's worth, in neither academia nor industry is there any incentive to be critical. There's a creepy creepy cult of positivity. It's sold as pluralism, or being pedagogical, or 'not being toxic.' But it's just politics. Institutionalized corruption trading in fake facts.

Statistics are exact calculations based on the data at hand. They have no uncertainty. 'Confidence' is a scientific model of how a statistic might map to a real, unobserved estimand we care about. That model usually isn't very good: it incorporates just the simplest sources of known error.

Random thoughts about confidence intervals: When we teach precision & accuracy, we often use images of a target, like these from the Wikipedia article. But I think this analogy leads to confusion about the interpretation of the confidence interval. 1/4
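
One way to see that the "95%" belongs to the procedure and to its model, not to any single interval: under an assumed data-generating process (normal draws around a known true mean), repeat the study many times and count how often the interval covers the truth. Then add an assumed systematic measurement error the interval knows nothing about. All numbers here are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
true_mean, sigma, n, reps = 10.0, 2.0, 50, 20_000

# Each row is one hypothetical replication of the same study.
samples = rng.normal(true_mean, sigma, size=(reps, n))
means = samples.mean(axis=1)
ses = samples.std(axis=1, ddof=1) / np.sqrt(n)

# Normal-approximation 95% interval (1.96 standard errors) per replication.
coverage = np.mean(np.abs(means - true_mean) <= 1.96 * ses)
print(f"coverage when the model is right: {coverage:.3f}")

# Same data shifted by an assumed systematic error of 0.5. Adding a constant
# leaves the standard errors unchanged, so the intervals look just as "precise".
biased_means = means + 0.5
biased_coverage = np.mean(np.abs(biased_means - true_mean) <= 1.96 * ses)
print(f"coverage with a 0.5 measurement bias: {biased_coverage:.3f}")
```

The first number sits close to 0.95; the second drops far below it, even though nothing in the reported intervals changed.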

Just to be clear, a bunch of really misleading work tried to torture data into showing a predecided conclusion. A GRAD STUDENT stuck their neck out and did a ton of work to prove the negative: the ev doesn't show that. Now it's being co-opted as a joint dialogue. LoL.

Our exchanges with @MatthewBJane (public and private) and @drjthrul are perfect illustrations of JS Mill’s doctrine: “The steady habit of correcting and completing his own opinion by collating it with those of others, so far from causing doubt and hesitation in carrying it.

"there are no weak instruments, only weak men". "we then cat-fished several hundred happily married fathers".

Just published in @JPubEcon: "How does parental divorce affect children’s long-term outcomes?" By @WFrimmel (@jku_econ), @HallaMartin (@WU_econ), @EbmerWinter (@IHS_Vienna).

Can't stress this enough. Almost all observational panel data results are at least in part hallucinations from unknown subtle changes in measurement over time. Almost no real world data is produced consistently with a bad fixed effects model 20 years from now in mind.

New article by me: The rise in reported maternal mortality rates in the US is largely due to a change in measurement. The change was adopted by different states at different times, resulting in what appeared to be a gradual rise in maternal mortality.
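
A toy simulation of the mechanism described here, with invented numbers: the true rate is flat, but each hypothetical state switches to a more sensitive measurement in a different year. The pooled reported series then rises gradually even though nothing real changed.

```python
import numpy as np

rng = np.random.default_rng(2)
years = np.arange(2000, 2021)
n_states = 50
true_rate = 20.0        # assumed constant true rate per 100,000
sensitivity_gain = 1.5  # assumed: new measurement records 50% more cases

# Each hypothetical state adopts the new measurement in a random year (2003-2017).
adoption_year = rng.integers(2003, 2018, size=n_states)
adopted = years[None, :] >= adoption_year[:, None]  # shape (states, years)

reported = np.where(adopted, true_rate * sensitivity_gain, true_rate)
national = reported.mean(axis=0)

for year, rate in zip(years[::5], national[::5]):
    print(year, round(float(rate), 1))
```

The national series climbs from 20 to 30 with no change in the underlying rate. A state-year panel model fit to `reported` would attribute each state's jump at adoption to whatever else happened to change that year.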

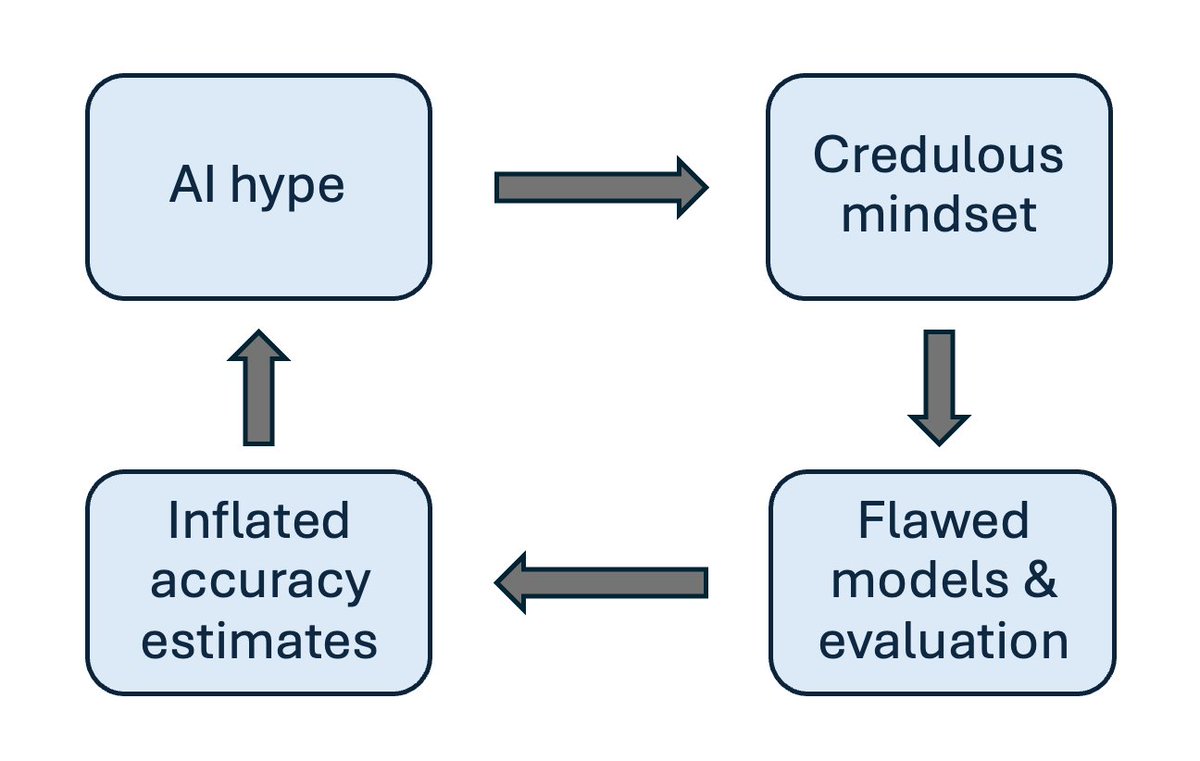

This isn't just ML. Gelman has been making the same argument for decades about social science. That researchers have a religious conviction for their argument and the evidence is just used as a stage prop to illustrate it to the audience rather than prove it.

New essay: ML seems to promise discovery without understanding, but this is fool's gold that has led to a reproducibility crisis in ML-based science. (with @sayashk). In 2021 we compiled evidence that an error called leakage is pervasive in ML models

Modest proposal- the autocratization of statistics. Only one person is allowed to run a regression. It must be done with punch cards on an IBM 360 mainframe plated in gold. They are chosen by god and inbreeding. If they die, it passes to their son/cousin.

Meta-analysis is largely the same free lunch used car selling of the underlying studies. It's almost always taking mismeasured, unidentified, unreplicable, trash, and pretending if piled together it magically averages out to a good study. It's intellectual money laundering.

This is where I feel frustrated by the state of research on this important question. How much is a meta-analysis actually worth with so much heterogeneity in the study designs, outcomes, samples, duration, etc? I think very little.
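
A sketch of the "averages out" concern, under assumed numbers: if every study shares the same systematic bias (for example, a common measurement problem), inverse-variance pooling, the standard fixed-effect meta-analysis estimator, shrinks the standard error but converges on the biased value, not the truth.

```python
import numpy as np

rng = np.random.default_rng(3)
true_effect = 0.0
shared_bias = 0.3                          # assumed bias common to every study
study_se = rng.uniform(0.1, 0.4, size=40)  # assumed per-study standard errors

# Each hypothetical study estimates true_effect + shared_bias with its own noise.
estimates = rng.normal(true_effect + shared_bias, study_se)

# Fixed-effect (inverse-variance weighted) pooled estimate and its standard error.
weights = 1.0 / study_se**2
pooled = np.sum(weights * estimates) / np.sum(weights)
pooled_se = np.sqrt(1.0 / np.sum(weights))
print(f"pooled: {pooled:.3f} +/- {1.96 * pooled_se:.3f} (truth: {true_effect})")
```

Piling on more studies with the same flaw only narrows the interval around the wrong answer; a random-effects model would widen the interval somewhat but would not remove a bias every study shares.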

Not for nothing, but every time you say "reviewers shouldn't have to review code because not enough of them know how to code", you are literally saying "modeling is reviewed by people who aren't competent to review modeling". Correct. Exactly. 💯. You get it.

People think anonymous peer review is critical. It's not. It's performative. Nobody is checking your code or data. You drop a strategically crafty natural language narrative with a 70pg appendix on 3 rando volunteers. And then venue shop until you get friendlies. That's not real.

The really funny thing is that people are dunking on Nate because the bet showed he was confident in the moderate prediction, while ignoring that Rabois not taking the bet meant he wasn't confident in the extreme prediction.

Econometrics Twitter to students: you have to really understand the fundamental problem of causal inference otherwise you'll end up looking goofy like Mr. Rabois. Meanwhile, Mr. Rabois:

There are two kinds of people in science. Those who:
1) See the data and code as the actual science.
2) See the English and meme as the actual science.

Never going to bridge that gap. Those are two totally different sets of professional standards and incentives. Two species.

Hard disagree. Code for the paper should be in a ready to go repo already. What are you doing submitting a paper that doesn't have working code? Journals should be demanding it and reviewers ought to be reviewing it.

I can't stress this enough - your audience when debunking bad statistics isn't civilians. Civilians consume research as edutainment. So do most producers for that matter. Your audience is other methods people. We're the only ones who 1) care 2) can tell what's a good job.

In response to this community note and other critiques, we have added a preface to the post, explaining that we did NOT offer a meta-analysis, and explaining why the note is incorrect. Here is the revised post with new text at top and bottom:

I don't know how to disrespect this attitude harder. Let's try.
- If you don't want your code reviewed, then you aren't a competent modeler.
- If reviewers won't review your code, then they aren't competent modelers either.
- If neither side is competent, then no modeling was 'reviewed'.

Hard disagree. Code for the paper should be in a ready to go repo already. What are you doing submitting a paper that doesn't have working code? Journals should be demanding it and reviewers ought to be reviewing it.

Lol. How pathetic is social science that an influencer getting quickly debunked by a grad student gets a dramatic chronicle write-up. Just every day an embarrassment.

“[P]utting your work out and having half of stats Twitter critique” may be a better paper check than peer review, says researcher in the midst of a social media war.

"Do bad work on questions that people will find interesting" is maybe the single most destructive training we get in social science.

Personally, I believe both types of research deserve recognition. 50 years ago, Hayek observed that when studying complex phenomena, we will never arrive at perfect answers. If we follow Noah's suggestion, researchers might be less motivated to tackle the big questions. 2/3.

Bayes is complete overkill for most jobs. If your job doesn't involve synthesizing existing knowledge, acquiring new evidence, or correctly telling others how that new evidence should alter their beliefs, then you absolutely don't need Bayes.

Compelling reasons not to use Bayes:
- you know literally nothing about your subject
- all potential values are equally reasonable, including impossibly large ones
- you've got so much impossibly high quality evidence on your exact estimand that we'll have to build a statue of you

Bayes is the math for formally incorporating previous information with new information. That's it. The alternative is to incorporate prior information informally, in natural language, with a bunch of ad hoc and post hoc rationalizations and decisions. You know, objectivity.
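
The simplest concrete case of "formally incorporating previous information with new information" is a conjugate Beta-Binomial update. The prior and the data counts below are invented for illustration, and `BetaPrior` is a hypothetical class name.

```python
from dataclasses import dataclass


@dataclass
class BetaPrior:
    """Beta(alpha, beta) belief about a success probability."""
    alpha: float
    beta: float

    def update(self, successes: int, trials: int) -> "BetaPrior":
        # Conjugacy: Beta prior + Binomial likelihood -> Beta posterior.
        return BetaPrior(self.alpha + successes, self.beta + trials - successes)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


prior = BetaPrior(alpha=8, beta=32)  # assumed prior: mean 0.2, worth ~40 trials
posterior = prior.update(successes=9, trials=15)
print(f"prior mean {prior.mean:.3f}, data {9 / 15:.3f}, posterior mean {posterior.mean:.3f}")
```

The posterior mean lands between the prior mean and the raw data share, weighted by how many pseudo-trials the prior is worth. The informal alternative makes the same weighting decision, just in prose and without showing the weights.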

Math isn't something you learn it's something you get used to. I haven't proved OLS is BLUE since grad school. Being able to do that on demand isn't what's important, it's enough experience to understand why it was necessary, to read results based on it, to know what it buys.

after working with data scientists whose jobs are more ostensibly math related than SRE, I think you could banish 90% of them to the shadow realm if you pop quiz them on high school math. There is no shame in admitting you need the refresher and then doing the refresher.

Scientific careers aren't built on correctness but on social communities around shared memes. You're judged by your ability to reproduce and add more professionally pleasing memes to that pile. Not by whether any of it is true.

Scientists: if you would decide to do your future research at a very high level of scientific rigor, how would that affect your future career in the field?

The most horrifying part of rising up the ranks of any field is getting at the top & realizing nobody knows what they're doing, there are no adults, it's a bunch of people just trying to feed their kids and selling whatever people are buying. Trust is for children.

For most people in most contexts, it's more reasonable to trust the science than to question it, precisely because they neither have the time nor the ability to do science.

This is the right way to think about it. Synthetic data mathematically cannot teach your model anything new that isn't in the original corpus. It's just an inductive bias that you're applying as the modeler, like any other weighting, cleaning, model architecture decision.

One thing I was very wrong about ~4yrs ago is how fundamental “synthetic data” in ML would be. “Obviously” due to data-proc-inequality, synth data should not help learning. The key flaw in this argument is: we do not use info-theoretically optimal learning methods in practice 1/.

How to keep economists from getting away with measuring literally nothing correctly, as long as they have a cute identification strategy, is a question pretty much everyone in science is struggling with right now. Science was outmaneuvered by PR from the 'credibility revolution'.

This should lead academics in both fields, economics and history, to a series of unpleasant but necessary questions. How can our economist colleagues undertake this kind of work without securing minimal advice from competent specialists in relevant fields?

The burden of proof is always on the modeler, never the reader. For APSR to basically say "readers should know small N work we publish is trash" is an amazing misunderstanding of the burden of proof in science.

However, the APSR started an investigation which lasted until December ’22 and concluded that no corrigendum is necessary. This decision was based on an undisclosed response from the authors and a single reviewer report, summarized by the APSR as described in the picture below.

You have no idea how hard it is to teach people that their fancy ML black box isn't interpretable. It's doing things that you don't understand, and unless you have an absolute 1:1 mapping between it and the expected outcomes notation for the experiment you wish you had, just don't.

Crucial point here is that regularization is not always conservative. Normal priors/ridge regression will prioritize shrinking larger coefficients. If those were blocking backdoor paths (removing confounding), you can end up inflating your estimate of interest.
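
A minimal numerical check of that point, with invented coefficients: T and a confounder C are correlated, C has the larger true coefficient, and the true effect of T is 1. OLS recovers about 1. Closed-form ridge with an arbitrarily chosen penalty shrinks C's large coefficient the most, and part of C's effect is pushed onto T.

```python
import numpy as np

rng = np.random.default_rng(4)
n, rho = 100_000, 0.7

# Confounder C and treatment T, both unit variance, correlation rho.
c = rng.normal(size=n)
t = rho * c + np.sqrt(1 - rho**2) * rng.normal(size=n)
y = 1.0 * t + 10.0 * c + rng.normal(size=n)  # true effect of T is 1.0

# Center everything so no intercept is needed (and the intercept is not penalized).
X = np.column_stack([t, c])
X = X - X.mean(axis=0)
y = y - y.mean()


def ridge(X, y, lam):
    """Closed-form ridge: solve (X'X + lam*I) b = X'y; lam=0 gives OLS."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


ols_t = ridge(X, y, 0.0)[0]
ridge_t = ridge(X, y, 0.5 * n)[0]  # penalty scaled with n; the value is arbitrary
print(f"OLS effect of T: {ols_t:.2f}, ridge effect of T: {ridge_t:.2f}")
```

With these assumed values the ridge estimate for T comes out well above the true 1, so "regularized" here means more biased, not more conservative. A larger penalty eventually drags both coefficients toward zero, so the direction of the error depends on the penalty as well as on the confounding structure.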

"Now what if, and hear me out, instead of using 8 parameters to fit this regression we use 200 million?".

Google presents OmniPred: Language Models as Universal Regressors. Presents a framework for training language models as universal end-to-end regressors over (x, y) evaluation data from diverse real world experiments. repo: abs:

Blue is 2016-7 Twitter. A group of people who perceive themselves to be sufficiently homogeneous to crank ideological signalling and aggression to 11 without fear of losing followers. It's 4chan for Phds right now. You get this with mono-cultures like Subreddits or early adopters.

Friends, I don't think the blue site is the new town square. Announced our new AI tool to improve education. Response: I'm "only good as firewood", shame!, gross, scab. not from randos, but from academics & a Yale Review Editor. "Trust, Safety, Community"?

FWIW, DAGs are just an intermediate representation of a possible data generating process. Their primary values are:
- making it clear what assumptions are being made for a given specification
- letting the reader more easily imagine alternatives

They SHOULD generate disagreement.

@rlmcelreath I doubt that at the end there would be full disclosure of ALL the DAGs tried and discarded. Wouldn’t all of that be the equivalent of DAG-hacking? Isn’t there an “inferential penalty” to be paid for it?

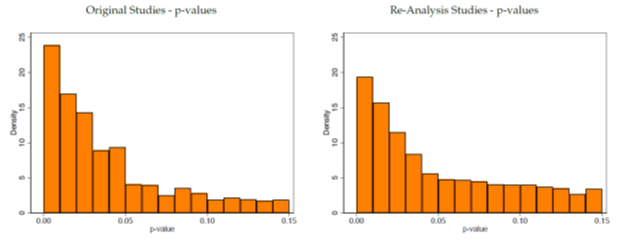

Some initial thoughts on this amazing work:
1) It's not good news. Of the work that's most likely to be passable, among the papers for which the authors provided sufficient information, and in just the narrow area of computational reproducibility, there's usually at least one major problem.

Our first meta paper is out!! This paper combines our first 110 completed reproductions/replications. This is joint work with 350+ amazing coauthors. We summarize our findings below.

With excitement around O3, good time to review some basics around what models are and how we should talk about them. There's an unfortunate 'god of the gaps' problem where the more performance we observe with less understanding, the more creative and unscientific the fanboying.

@RexDouglass So, what would you describe as convincing evidence for not being a parrot? For me, inability to generalise outside the distribution according to inherent correlational (or better, causal) patterns is what gives parrot evidence.

The nightmare of it is that:
- to be identified,
- an estimate must have some justification for that specific DAG and not any of the other ones,
- which never happens, so it's just implicit, degenerately strong spike-at-0 priors doing all the work,
- and then finding 2 reviewers who don't.

"Causal inference is a trivial problem if you know the DAG. Research design is outdated.". season's greetings!

There's nothing in bayesianism you can't find in frequentism, just done worse and in natural language. You can find the same cultural fight between any group and its more formalized version. The more formalized group is always annoyed with the less formalized one and vice versa.

what keeps me somewhat sceptical wrt bayesians is the slightly weaker version of the cult mentality that i know from the mmt crowd: (1) learning about p(a|x) is treated as quasi-religious revelation, (2) its grandiose claims ("science itself is a special case of bayes’s theorem").

Qualies coming out of the woodwork to explain how asking ideologues to tell you their feelings about something is considered a legitimate way of learning about that thing. Yup.

(1/2) We need more attention to selection bias in qualitative research. A new study in a top sociology journal examines "how young people experience policing," but it draws only on interviews of youth in an organization devoted to abolishing the police, one that bombards.

To recap, half of researchers perceive themselves as:
- Faking lit reviews that can't possibly serve as a prior.
- Faking hypotheses to retroactively make a known correlation in the data look like a test of a prior theory.
- Picking the model that gets the needed p-value.

What is.

New survey: Over half of researchers in Denmark and an international sample from Britain, America, Croatia, and Austria anonymously admitted that they:
- Cite papers they don't read.
- Cite irrelevant papers.
- Don't put in effort in peer review.
- Misreport nonsignificant findings
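
A toy simulation of "picking the model that gets the needed p-value", under assumptions chosen for illustration: there is no true effect anywhere, and a hypothetical researcher tests 20 independent outcomes and reports whichever one clears p < 0.05. For independent tests, the chance of at least one hit is 1 - 0.95^20, about 64%, far above the nominal 5%. The helper names are illustrative.

```python
import math
import random

random.seed(5)


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def hacked_study(n_outcomes: int = 20, n_per_group: int = 50) -> bool:
    """Return True if any of n_outcomes null comparisons reaches p < 0.05."""
    se = math.sqrt(2 / n_per_group)  # known unit variance in both groups
    for _ in range(n_outcomes):
        a = [random.gauss(0, 1) for _ in range(n_per_group)]
        b = [random.gauss(0, 1) for _ in range(n_per_group)]
        diff = sum(a) / n_per_group - sum(b) / n_per_group
        if two_sided_p(diff / se) < 0.05:
            return True  # stop searching once something "works"
    return False


n_studies = 1_000
false_positive_rate = sum(hacked_study() for _ in range(n_studies)) / n_studies
print(f"share of null studies reporting p < 0.05: {false_positive_rate:.2f}")
```

Each individual test is valid at 5%; the inflation comes entirely from the search over outcomes, which the final paper never reports.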

"The statistical power of Christ compels you."

In 1599 the church used a placebo controlled trial to test if a French girl was possessed by a demon. Holy objects and identical, non-blessed objects were shown to the girl. "She reacted similarly when exposed to both genuine and sham religious objects"
