Nicolas Loizou

@NicLoizou

Followers: 1K

Following: 742

Statuses: 168

Assistant Professor @JohnsHopkins. Optimization and Machine Learning.

Joined June 2020

Not to be missed! Check out Sayantan's recent work on a Scale-Invariant Version of AdaGrad! He will present this at NeurIPS this week: Wed 11 Dec, 11 a.m.-2 p.m. PST. He’s also on the lookout for exciting opportunities starting next summer!

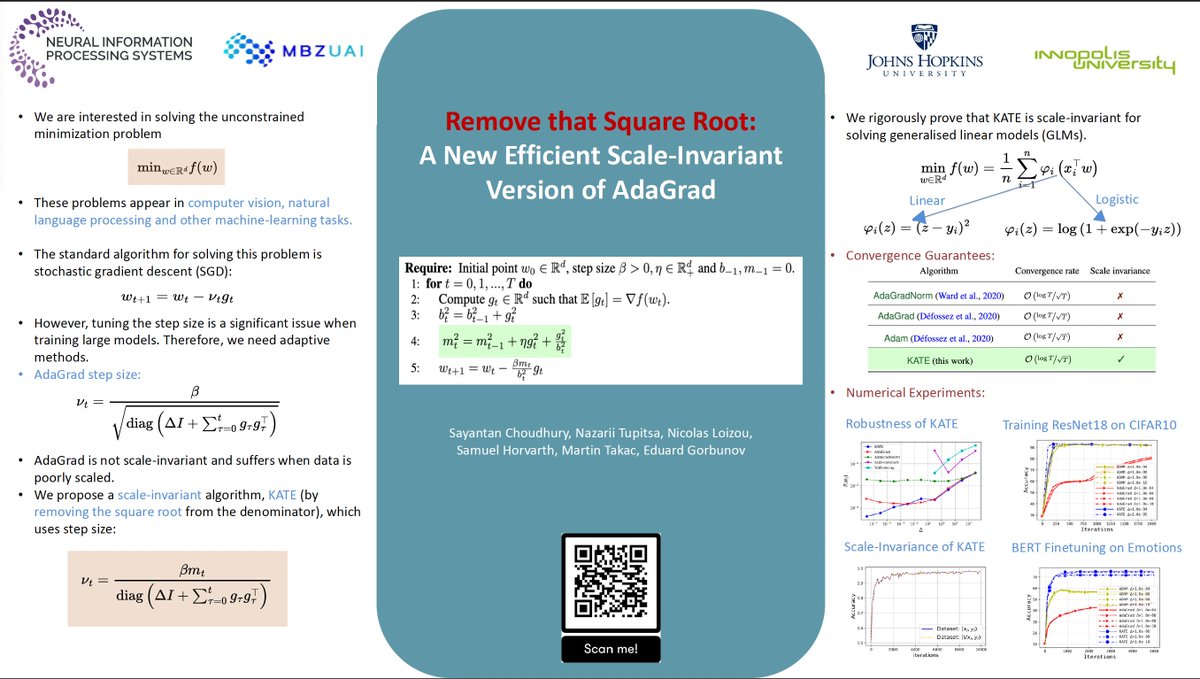

(1/4) Excited to share our NeurIPS 2024 paper: Remove that Square Root: A New Efficient Scale-Invariant Version of AdaGrad 🚀 🤝work with @dr_nazya @NicLoizou @sam_hrvth @TakacMartin @ed_gorbunov 📍Poster: West Ballroom A-D #6103 📅 Wed 11 Dec 11 a.m.- 2 p.m. PST

RT @ErnestRyu: @shuvo_das_gupta (Columbia) and I (UCLA) are starting an optimization seminar series! Our first speaker, @aaron_defazio (Met…

Applications are now open for the Data Science and AI Institute Postdoctoral Fellowship Program @HopkinsDSAI for the 2025-2026 academic year. Excellent environment, great benefits, and many researchers to collaborate with:

Are you looking for a great place to pursue your PhD in Canada? Check out @Mila_Quebec! It's a fantastic environment to advance research at the intersection of optimization and machine learning alongside amazing colleagues. @bouzoukipunks, @SimonLacosteJ, @gauthier_gidel

Mila's annual supervision request process opens on October 15 to receive MSc and PhD applications for Fall 2025 admission! Join our community! More information here

RT @BachFrancis: How fast is gradient descent, *for real*? Some (partial) answers in this new blog post on scaling laws for optimization. h…

#neurips2024 In one of the most unexpected decisions, our paper, with scores 6, 6, 6, and 7 (average rating: 6.25, confidence: 3), was rejected with no reasonable justification from the AC. @jmtomczak @ulrichpaquet @DaniCMBelg ACs should be held accountable for such decisions.

#neurips2024 An adversarial AC rejected our paper, which had an avg score of 6.3 and which ALL reviewers accepted, because "the reviewers were not responding on a clear paper during discussion". WTH is going on? @jmtomczak @ulrichpaquet @DaniCMBelg Any accountability for such an AC?

RT @JohnsHopkins: Johns Hopkins University achieves its highest-ever position in the U.S. News & World Report rankings coming in at No. 6 f…

RT @deepmath1: Join us in Philadelphia at the 8th iteration of the conference on mathematical theory of deep neural networks @deepmath1, su…

@bremen79 @gautamcgoel @MatharyCharles For this, check out the upper conditions in the paper: I believe your exact condition might be related to one or two conditions there. Remark: Your condition could also be related to the Polyak steps! ;)

I am looking forward to further extensions of Schedule-Free Algorithms in the future! Many congrats to @aaron_defazio @konstmish @alicey_ang! Well-deserved recognition!

Schedule-Free Wins AlgoPerf Self-Tuning Track 🎉 I'm pleased to announce that Schedule-Free AdamW set a new SOTA for self-tuning training algorithms, besting AdamW and all other submissions by 8% overall. Try it out:

On my way to #ISMP2024! Tomorrow, I’ll be presenting our latest findings on Stochastic Extragradient Methods. On Thursday, don’t miss our sessions on "Adaptive, Line-search, and Parameter-Free Stochastic Optimization".

@orvieto_antonio @konstmish Is NGN provably at most twice the SPSmax value? If you fix the problem, then will 1.5*SPSmax have a similar performance to this? In other words, is the beneficial performance an outcome of the slightly larger step-size selection?

I have been waiting for this to drop since Antonio's excellent PhD thesis! Looking forward to exploring the connections with Polyak steps and extensions to coordinate-wise and momentum variants!

NGN introduces a new paradigm for optimization in deep learning: cheap curvature estimation with state-of-the-art theory. With Lin Xiao, we took one year to write this - it was just too good to rush. A lot still has to be done, this is just the beginning.
